Assumptions & Diagnostics

In this lab, you will be provided with a comprehensive overview of linear regression assumptions and diagnostics. Therefore, whilst the lab might be appear rather lengthy, it will serve as a handy reference for you to use in the future and refer back to when needed.

LEARNING OBJECTIVES

- Be able to state the assumptions underlying a linear model.

- Specify the assumptions underlying a linear model with multiple predictors.

- Assess if a fitted model satisfies the assumptions of your model.

- Assess the effect of influential cases on linear model coefficients and overall model evaluations.

Linear Model Assumptions

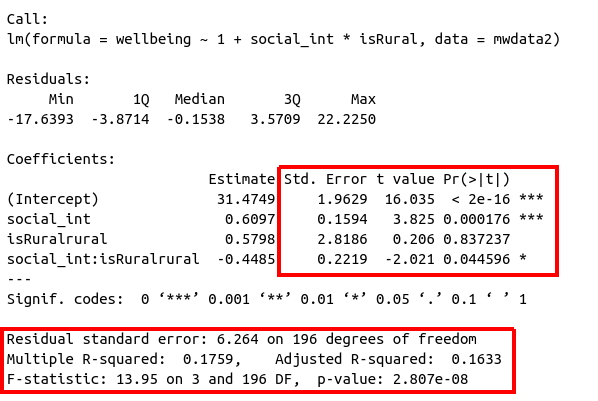

In the previous labs, we have fitted a number of regression models, including some with multiple predictors. In each case, we first specified the model, then visually explored the marginal distributions and relationships of variables which would be used in the analysis. Finally, we fit the model, and began to examine the fit by studying what the various parameter estimates represented, and the spread of the residuals (the parts of the output inside the red boxes in Figure 1)

Figure 1: Multiple regression output in R, summary.lm(). Residuals and Coefficients highlighted

But before we draw inferences using our model estimates or use our model to make predictions, we need to be satisfied that our model meets a specific set of assumptions. If these assumptions are not satisfied, the results will not hold.

Figure 2: Multiple regression output in R, summary.lm(). Hypothesis tests highlighted

You can remember the four assumptions by memorising the acronym LINE:

- L - Linearity

- I - Independence

- N - Normality

- E - Equal variance

If at least one of these assumptions does not hold, say N - Normality, you might be reporting a LIE. Recall the assumptions of the linear model:

- Linearity: The relationship between \(y\) and \(x\) is linear.

- Independence of errors: The error terms should be independent from one another.

- Normality: The errors \(\epsilon\) are normally distributed in the population.

- Equal variances (“Homoscedasticity”): The variability of the errors \(\epsilon\) is constant across \(x\).

Because we don’t have the data about the entire population, we check the assumptions on the errors by looking at their sample counterpart: the residuals from the fitted model = observed values - fitted values = \(y_i - \hat y_i\).

The residuals \(\hat \epsilon_i\) are the sample realisation of the actual, but unknown, true errors \(\epsilon_i\) for the entire population. Because these same assumptions hold for a regression model with multiple predictors, we can assess them in a similar way. However, there are a number of important considerations.

In this lab, we will check the assumptions of two models - one simple linear model, and one with multiple predictors, and assess whether these models meet the assumptions outlined above. We will be working with two different datasets that you have used in previous labs: riverview and wellbeing.

Guided exercises

Open a new RMarkdown document. Copy the code below to load in the tidyverse packages, read in the riverview.csv and wellbeing.csv datasets and fit the following two models:

\[ \begin{aligned} M1&: \quad \text{Income} = \beta_0 + \beta_1 \cdot \text{Education} + \epsilon \\ M2&: \quad \text{Wellbeing} = \beta_0 + \beta_1 \cdot \text{Outdoor Time} + \beta_2 \cdot \text{Social Interactions} + \epsilon \end{aligned} \]

library(tidyverse)

# read in the riverview data

rvdata <- read_csv(file = "https://uoepsy.github.io/data/riverview.csv")

# read in the wellbeing data

wbdata <- read_csv(file = "https://uoepsy.github.io/data/wellbeing.csv")

# fit the linear models:

rv_mdl1 <- lm(income ~ 1 + education, data = rvdata) #riverview model

wb_mdl1 <- lm(wellbeing ~ outdoor_time + social_int, data = wbdata) #wellbeing modelNote: We have have forgone writing the 1 in lm(y ~ 1 + x.... The 1 just tells R that we want to estimate the Intercept, and it will do this by default even if we leave it out.

Linearity

Simple Linear Regression

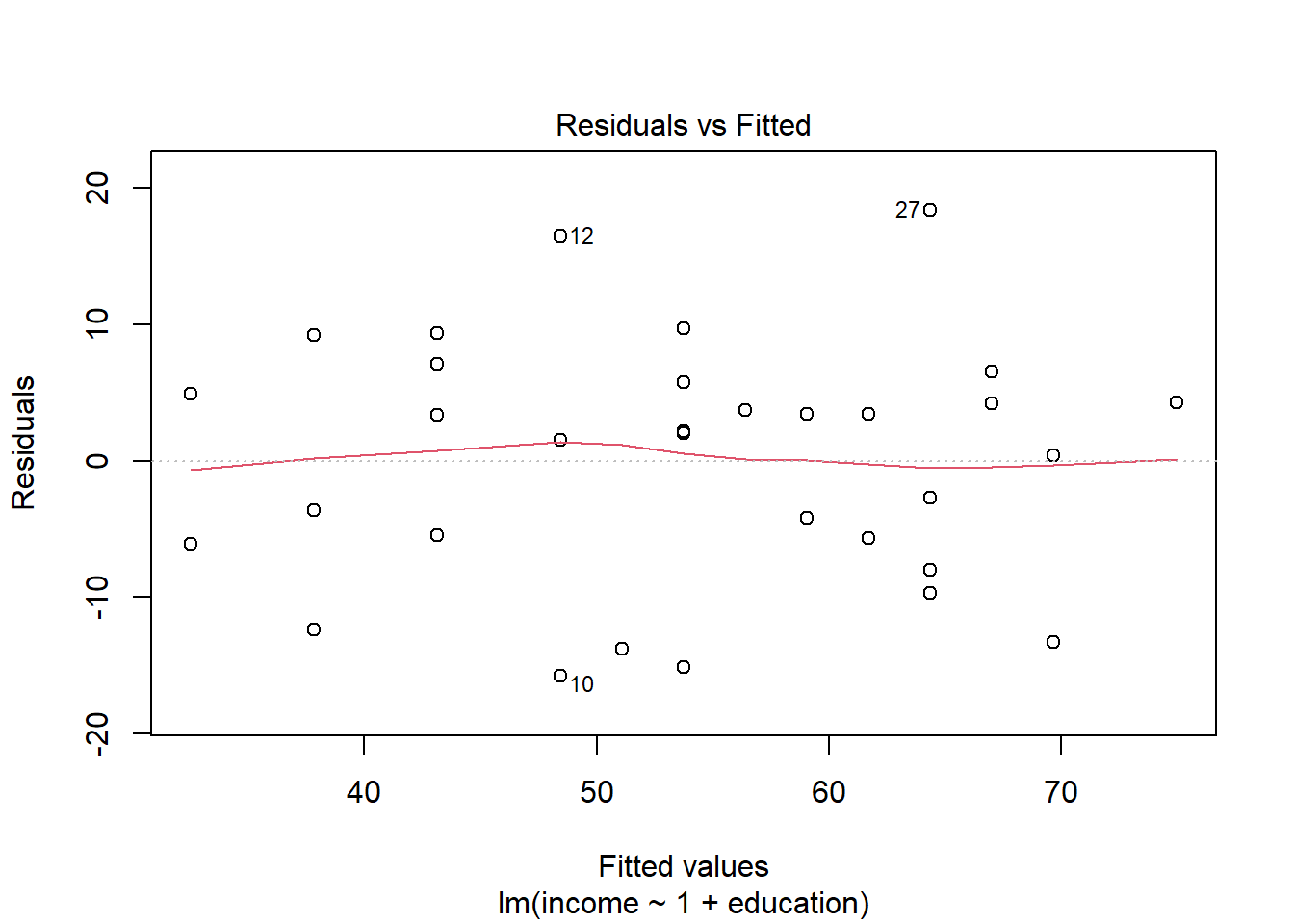

In simple linear regression (SLR) with only one explanatory variable, we could assess linearity through a simple scatterplot of the outcome variable against the explanatory. This would allow us to check if the errors have a mean of zero. If this assumption was met, the residuals would appear to be randomly scattered around zero.

The rationale for this is that, once you remove from the data the linear trend, what’s left over in the residuals should not have any trend, i.e. have a mean of zero.

Multiple Regression

In multiple regression, however, it becomes more necessary to rely on diagnostic plots of the model residuals. This is because we need to know whether the relations are linear between the outcome and each predictor after accounting for the other predictors in the model.

In order to assess this, we use partial-residual plots (also known as ‘component-residual plots’). This is a plot with each explanatory variable \(x_j\) on the x-axis, and partial residuals on the y-axis.

Partial residuals for a predictor \(x_j\) are calculated as: \[ \hat \epsilon + \hat \beta_j x_j \]

In R we can easily create these plots for all predictors in the model by using the crPlots() function from the car package.

Check if the fitted model satisfies the linearity assumption for rv_mdl1. Write a sentence summarising whether or not you consider the assumption to have been met. Justify your answer with reference to the plots.

Create partial-residual plots for the wb_mdl1 model.

Remember to load the car package first. If it does not load correctly, it might mean that you have need to install it.

Write a sentence summarising whether or not you consider the assumption to have been met. Justify your answer with reference to the plots.

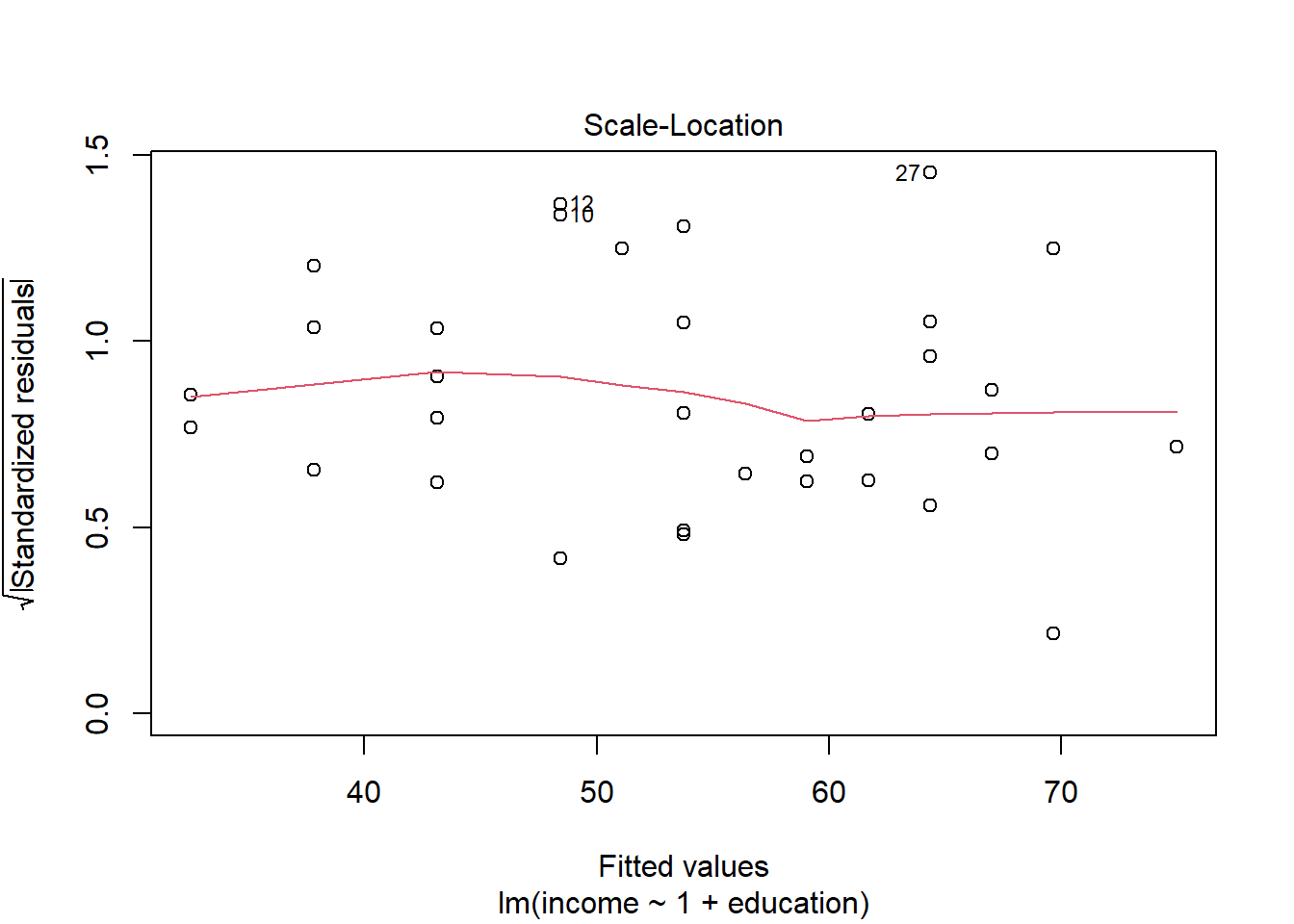

Equal variances (Homoscedasticity)

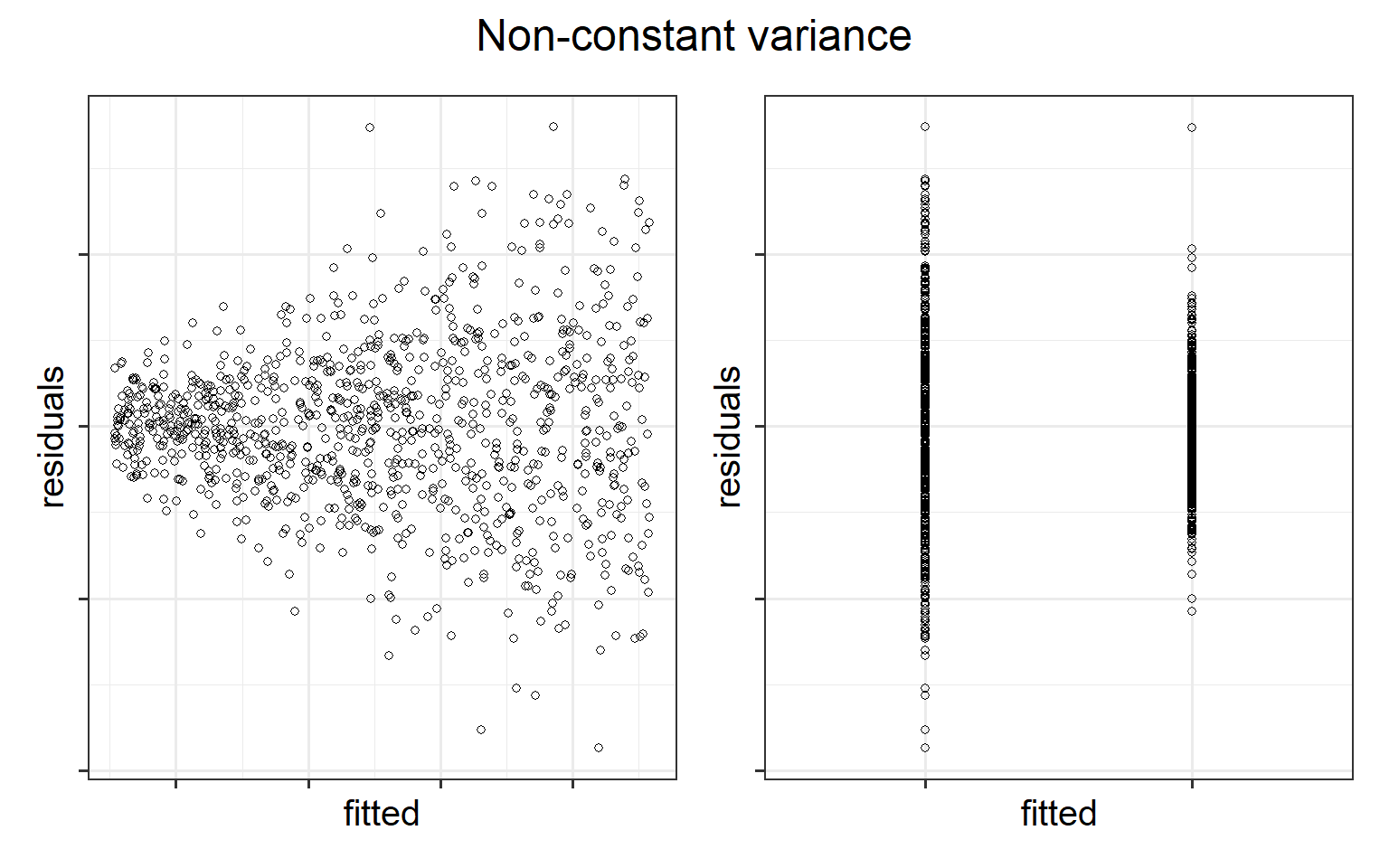

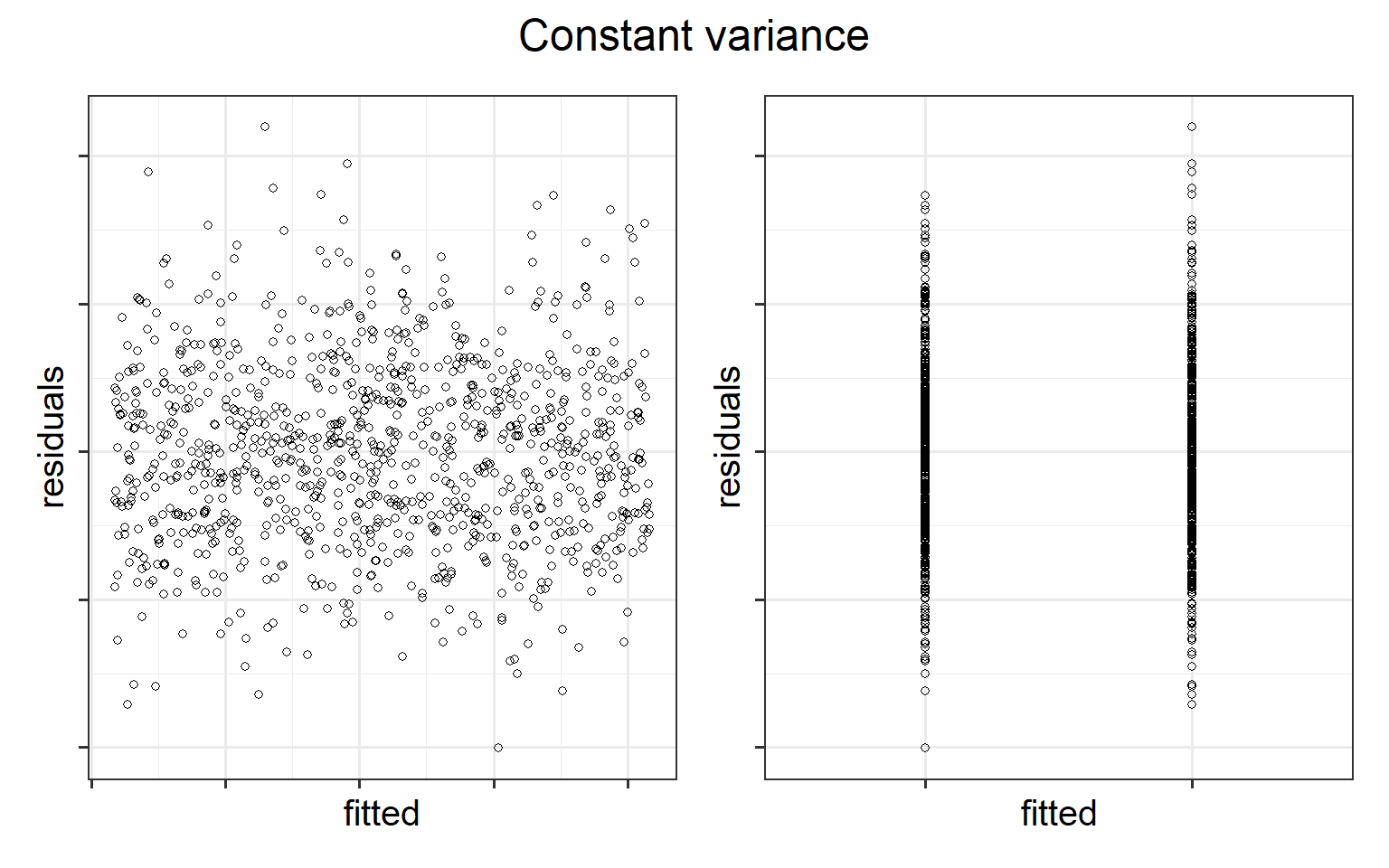

The equal variances assumption is that the error variance \(\sigma^2\) is constant across values of the predictor(s) \(x_1, \dots, x_k\), and across values of the fitted values \(\hat y\). This sometimes gets termed “Constant” vs “Non-constant” variance. Figures 3 & 4 shows what these look like visually.

Figure 3: Non-constant variance for numeric and categorical x

Figure 4: Constant variance for numeric and categorical x

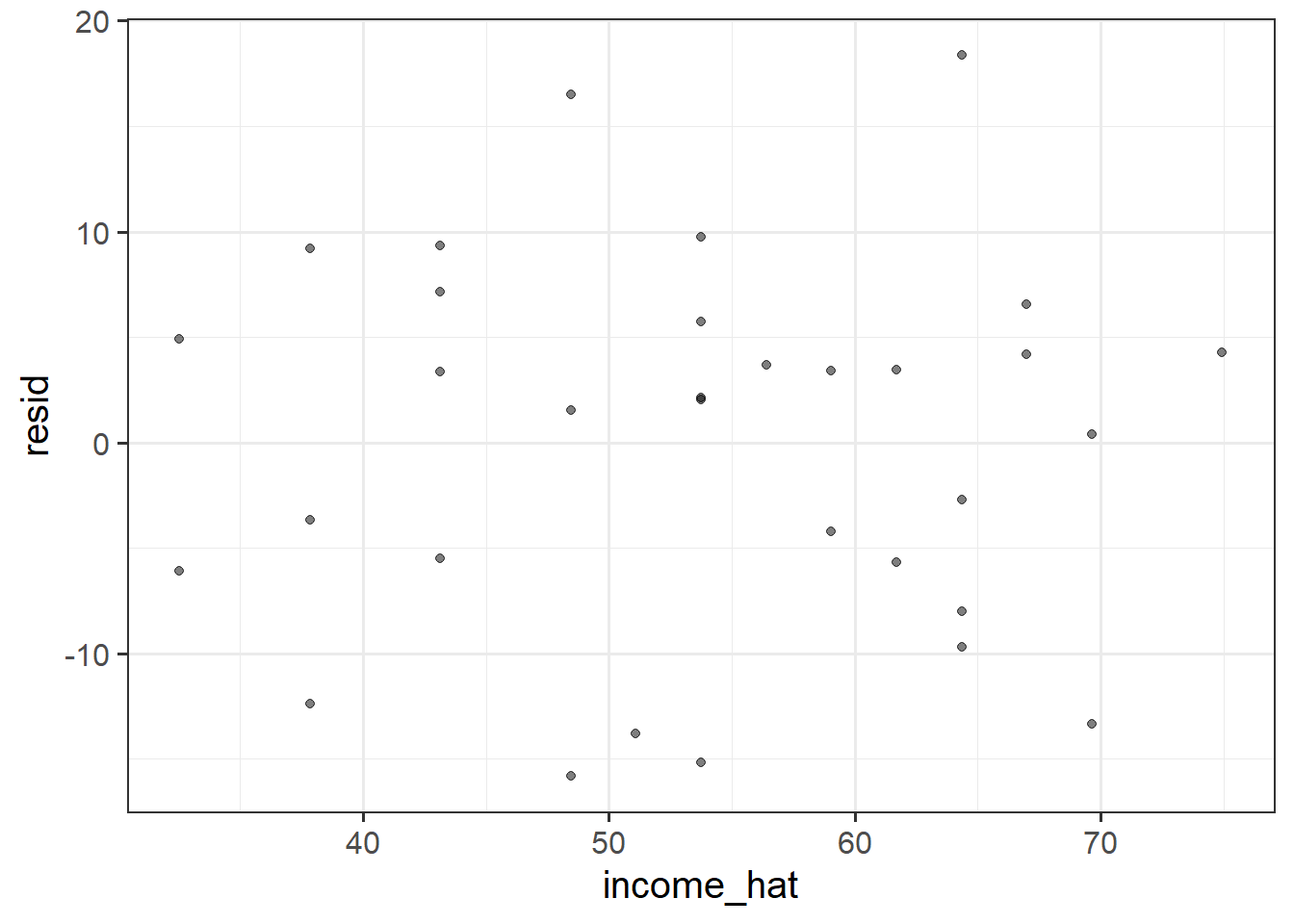

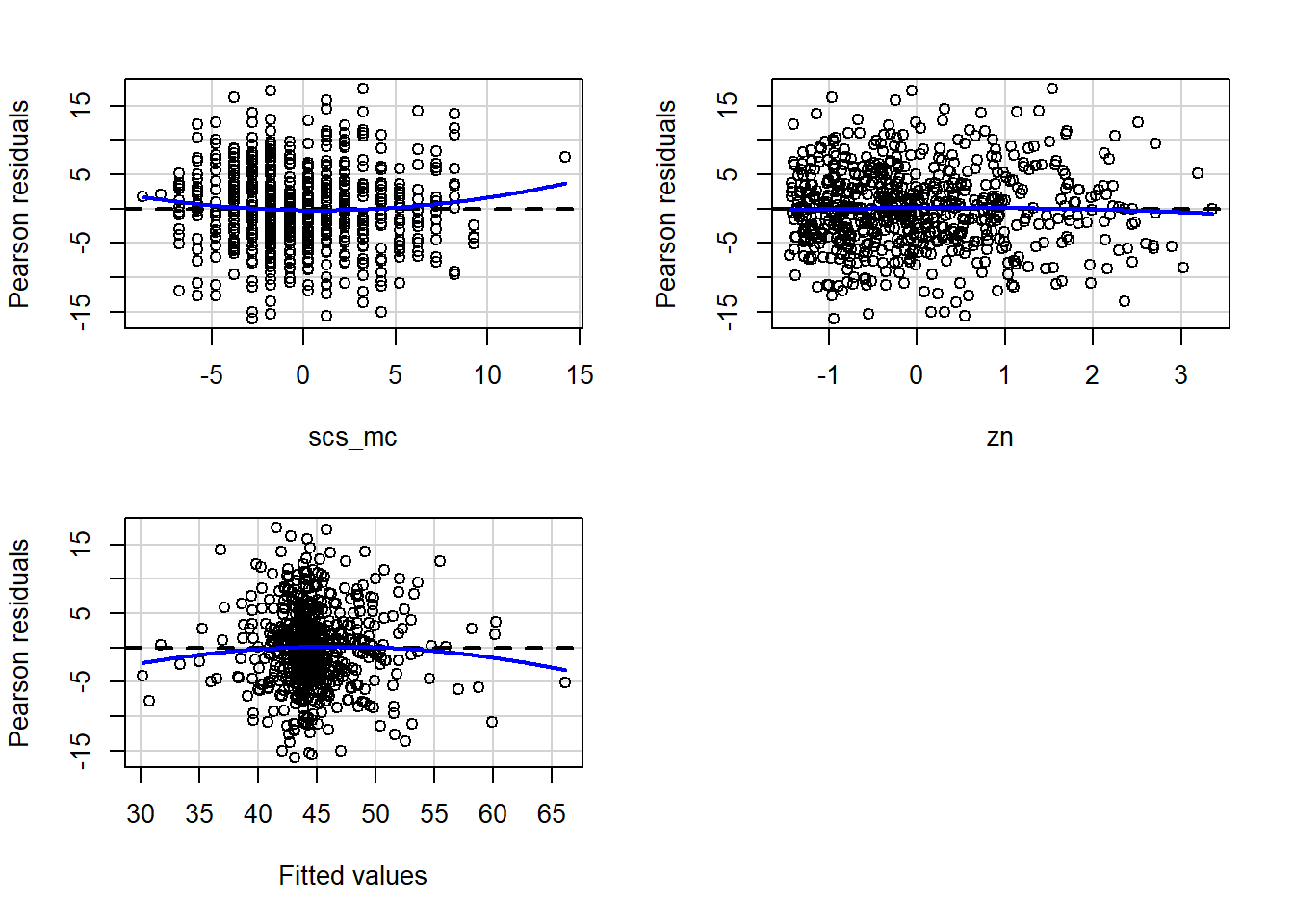

In R we can create plots of the Pearson residuals against the predicted values \(\hat y\) and against the predictors \(x_1\), … \(x_k\) by using the residualPlots() function from the car package. This function also provides the results of a lack-of-fit test for each of these relationships (note when it is the fitted values \(\hat y\) it gets called “Tukey’s test”).

Check if the fitted models rv_mdl1 and wb_mdl1 satisfy the equal variance assumption. Use residualPlots() to plot residuals against the predictor.

Write a sentence summarising whether or not you consider the assumption to have been met for each model. Justify your answer with reference to plots.

Independence

The ‘independence of errors’ assumption is the condition that the errors do not have some underlying relationship which is causing them to influence one another.

There are many sources of possible dependence, and often these are issues of study design. For example, we may have groups of observations in our data which we would expect to be related (e.g., multiple trials from the same participant). Our modelling strategy would need to take this into account.

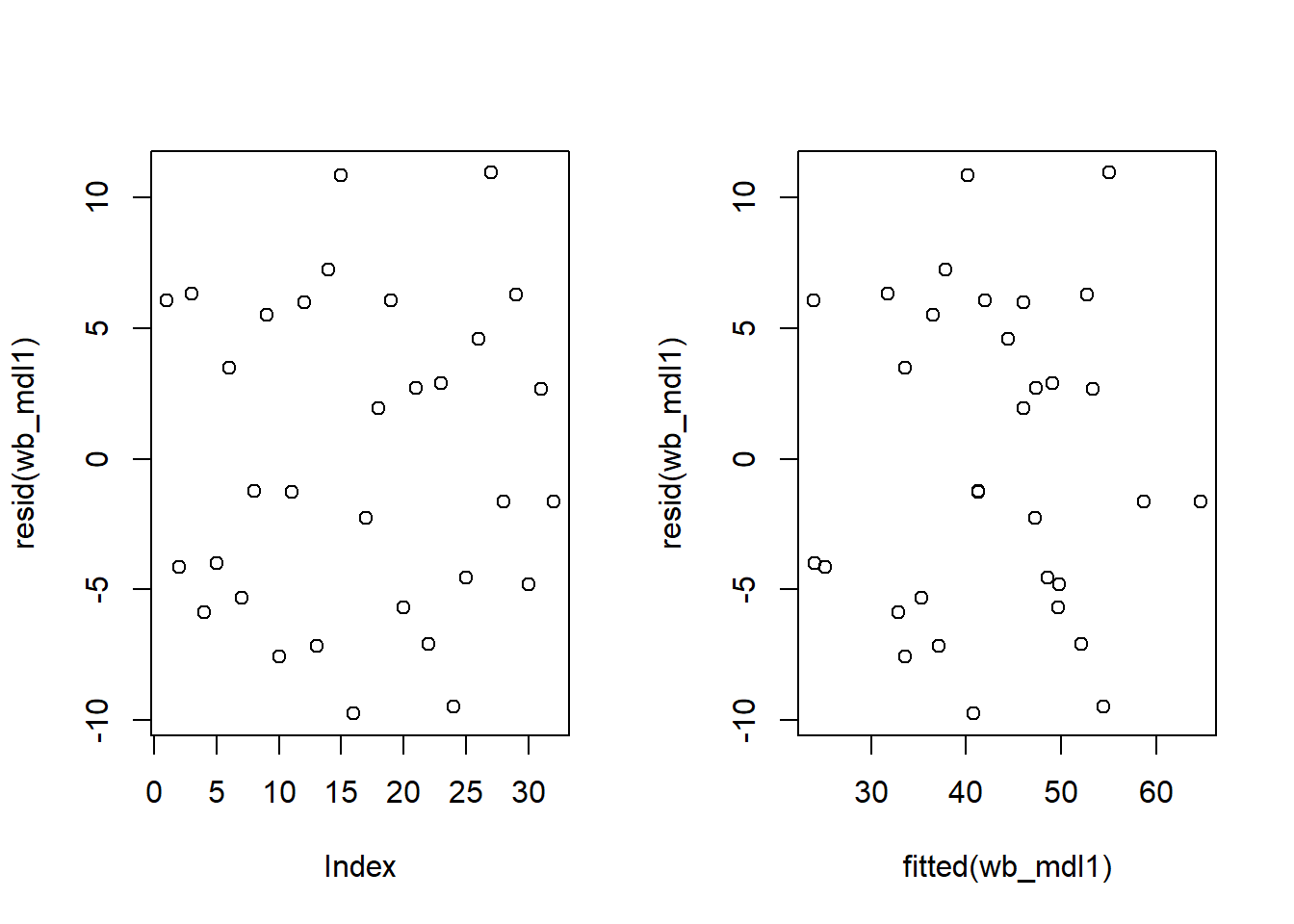

One form of dependence is autocorrelation - this is when observations influence those adjacent to them. It is common in data for which time is a variable of interest (e.g, the humidity today is dependent upon the rainfall yesterday).

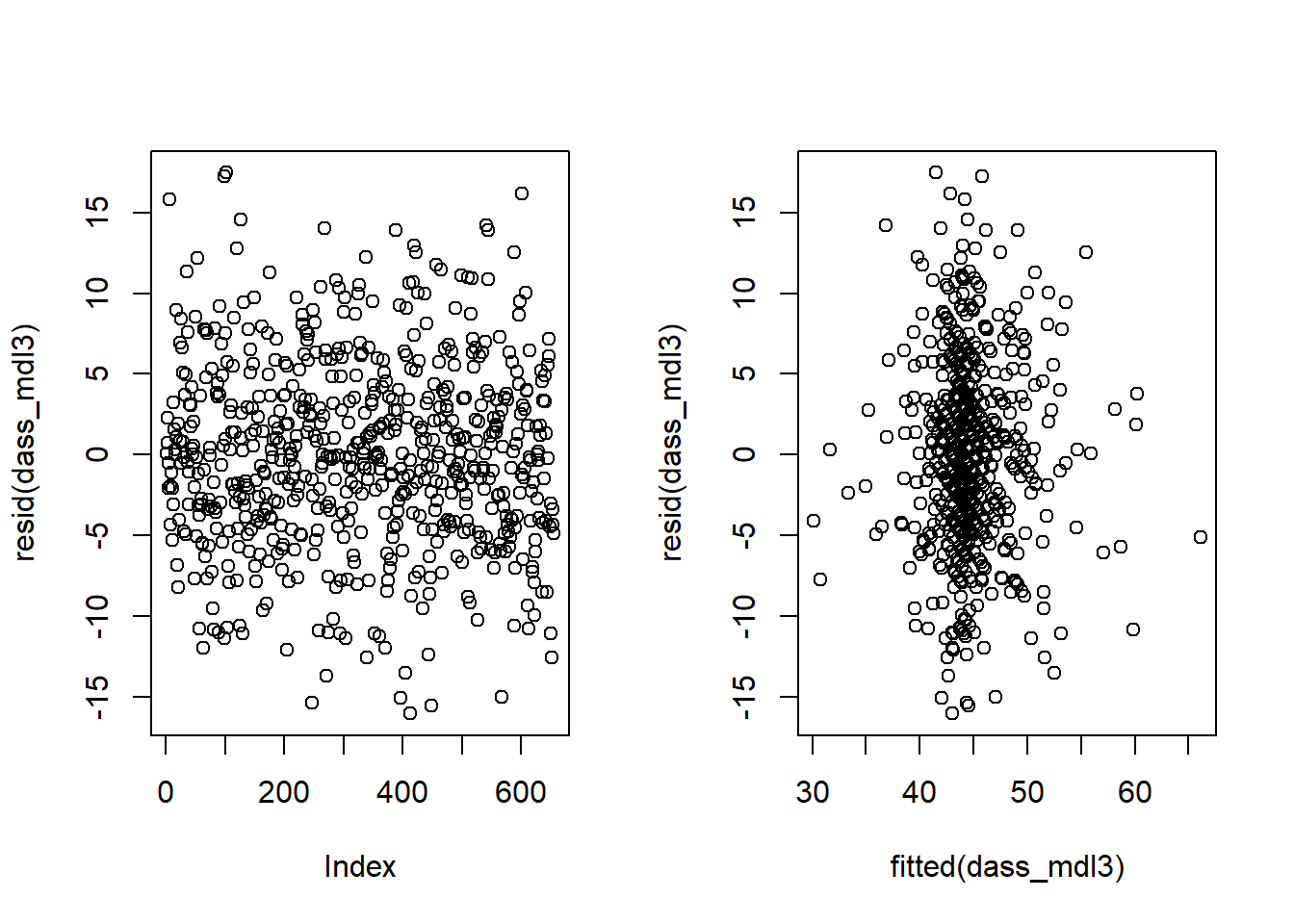

For both rv_mdl1 and wb_mdl1, visually assess whether there is autocorrelation in the error terms.

Write a sentence summarising whether or not you consider the assumption of independence to have been met for each (you may have to assume certain aspects of the study design).

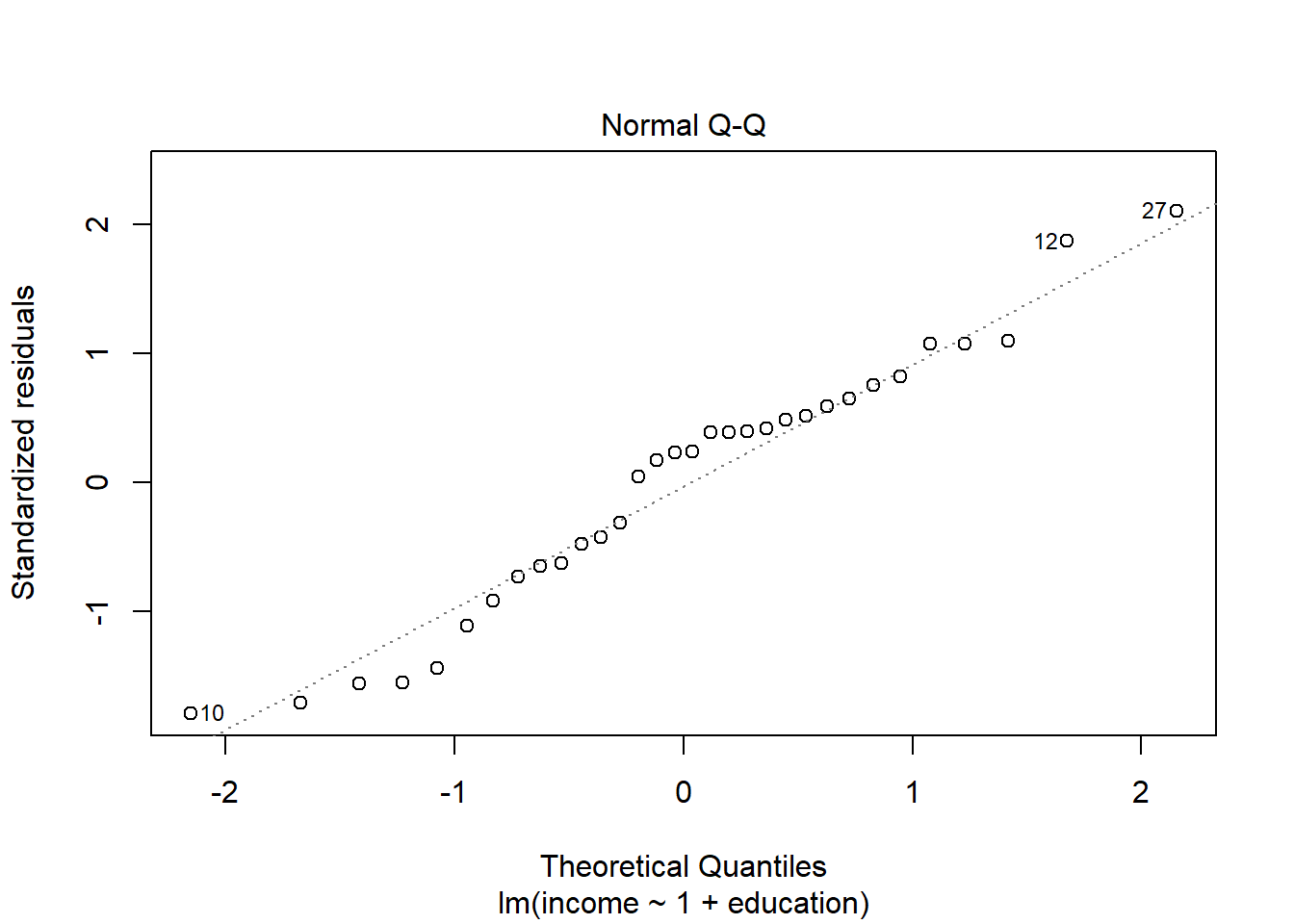

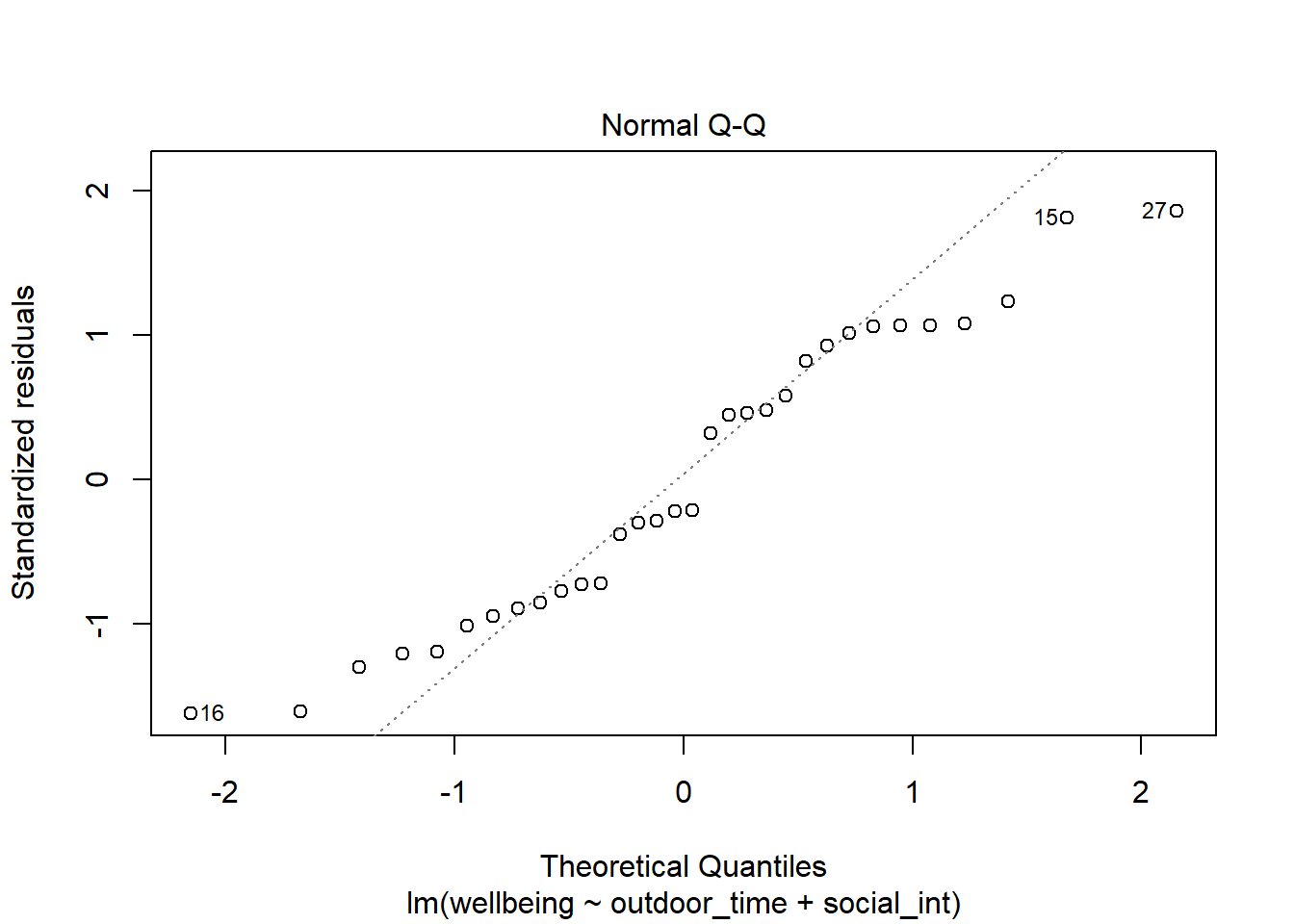

Normality of errors

The normality assumption is the condition that the errors \(\epsilon\) are normally distributed in the population.

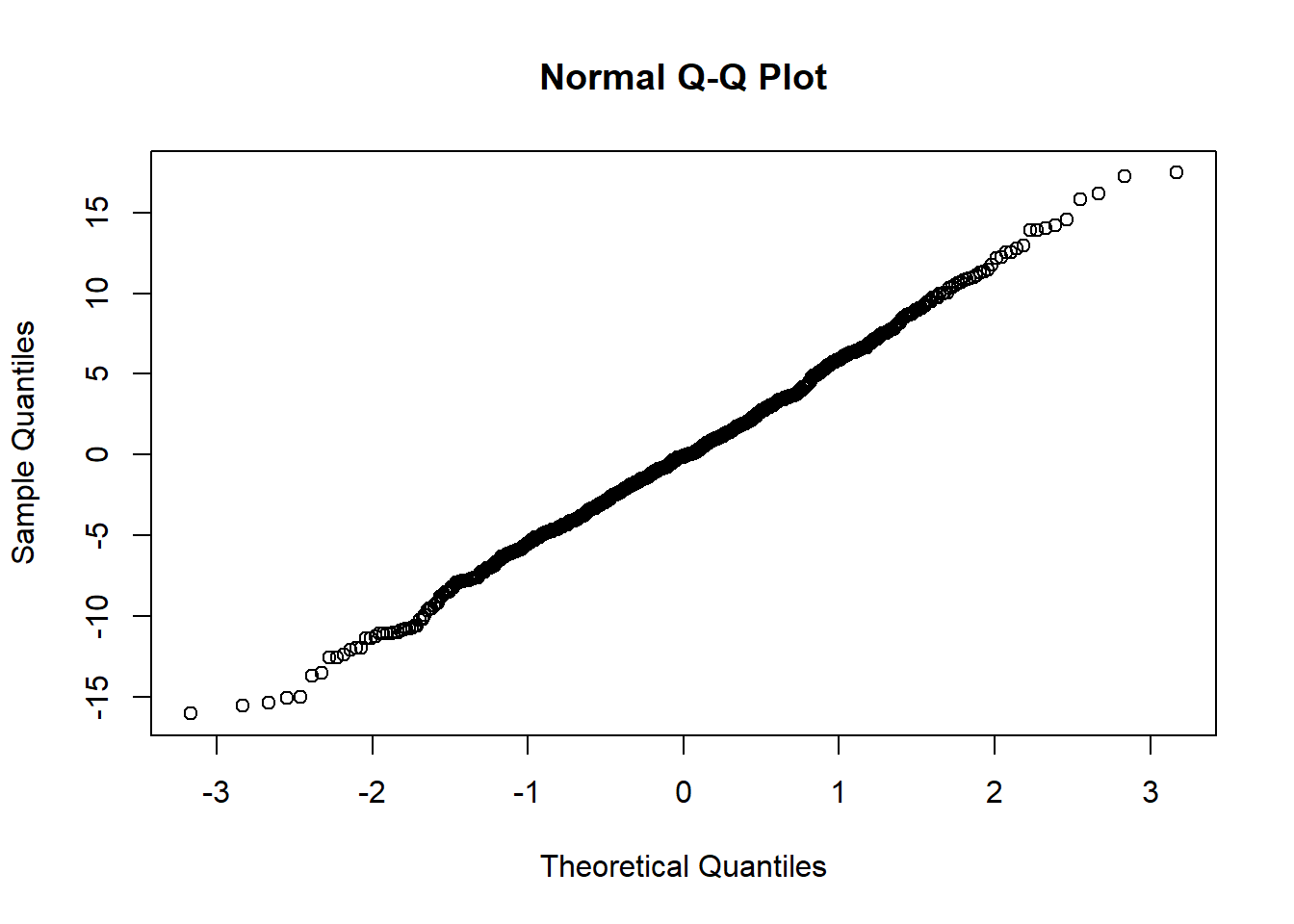

We can visually assess this condition through histograms, density plots, and quantile-quantile plots (QQplots) of our residuals \(\hat \epsilon\).

Assess the normality assumption by producing a qqplot of the residuals (either manually or using plot(model, which = ???)) for both rv_mdl1 and wb_mdl1.

Write a sentence summarising whether or not you consider the assumption to have been met. Justify your answer with reference to the plots.

Multicollinearity

For the linear model with multiple explanatory variables, we need to also think about multicollinearity - this is when two (or more) of the predictors in our regression model are moderately or highly correlated.

We can assess multicollinearity using the variance inflation factor (VIF), which for a given predictor \(x_j\) is calculated as:

\[

VIF_j = \frac{1}{1-R_j^2} \\

\]

Suggested cut-offs for VIF are varied. Some suggest 10, others 5. Define what you will consider an acceptable value prior to calculating it. You could loosely interpret VIF values larger than 5 as moderate multicollinearity and values larger than 10 as severe multicollinearity.

In R, the vif() function from the car package will provide VIF values for each predictor in your model.

Calculate the variance inflation factor (VIF) for the predictors in the model.

Write a sentence summarising whether or not you consider multicollinearity to be a problem here.

Individual Case Diagnostics

We have seen in the case of the simple linear regression that individual cases in our data can influence our model more than others. We know about:

- Regression outliers: A large residual \(\hat \epsilon_i\) - i.e., a big discrepancy between their predicted y-value and their observed y-value.

- Standardised residuals: For residual \(\hat \epsilon_i\), divide by the estimate of the standard deviation of the residuals. In R, the

rstandard()function will give you these - Studentised residuals: For residual \(\hat \epsilon_i\), divide by the estimate of the standard deviation of the residuals excluding case \(i\). In R, the

rstudent()function will give you these.

- Standardised residuals: For residual \(\hat \epsilon_i\), divide by the estimate of the standard deviation of the residuals. In R, the

- High leverage cases: These are cases which have considerable potential to influence the regression model (e.g., cases with an unusual combination of predictor values).

- Hat values: are used to assess leverage. In R, The

hatvalues()function will retrieve these.

- Hat values: are used to assess leverage. In R, The

- High influence cases: When a case has high leverage and is an outlier, it will have a large influence on the regression model.

- Cook’s Distance: combines leverage (hatvalues) with outlying-ness to capture influence. In R, the

cooks.distance()function will provide these. Alongside Cook’s Distance, we can examine the extent to which model estimates and predictions are affected when an entire case is dropped from the dataset and the model is refitted.

- Cook’s Distance: combines leverage (hatvalues) with outlying-ness to capture influence. In R, the

- DFFit: the change in the predicted value at the \(i^{th}\) observation with and without the \(i^{th}\) observation is included in the regression.

- DFbeta: the change in a specific coefficient with and without the \(i^{th}\) observation is included in the regression.

- DFbetas: the change in a specific coefficient divided by the standard error, with and without the \(i^{th}\) observation is included in the regression.

- COVRATIO: measures the effect of an observation on the covariance matrix of the parameter estimates. In simpler terms, it captures an observation’s influence on standard errors.

You can get a whole bucket-load of these measures with the influence.measures() function:

influence.measures(my_model)will give you out a dataframe of the various measures.summary(influence.measures(my_model))will provide a nice summary of what R deems to be the influential points.

For questions 8-12, we will be working with our wb_mdl1 only. Feel free to apply the below to your rv_mdl1 too as as extra practice.

Create a new tibble which contains:

- The original variables from the model (Hint, what does

wb_mdl1$modelgive you?) - The fitted values from the model \(\hat y\)

- The residuals \(\hat \epsilon\)

- The studentised residuals

- The hat values

- The Cook’s Distance values.

Looking at the studentised residuals, are there any outliers?

Looking at the hat values, are there any observations with high leverage?

Looking at the Cook’s Distance values, are there any highly influential points?

You can also display these graphically using plot(model, which = 4) and plot(model, which = 5).

Use the function influence.measures() to extract these delete-1 measures of influence.

Try plotting the distributions of some of these measures.

Tip: the function influence.measures() returns an infl-type object. To plot this, we need to find a way to extract the actual numbers from it.

What do you think names(influence.measures(wb_mdl1)) shows you? How can we use influence.measures(wb_mdl1)$<insert name here> to extract the matrix of numbers?

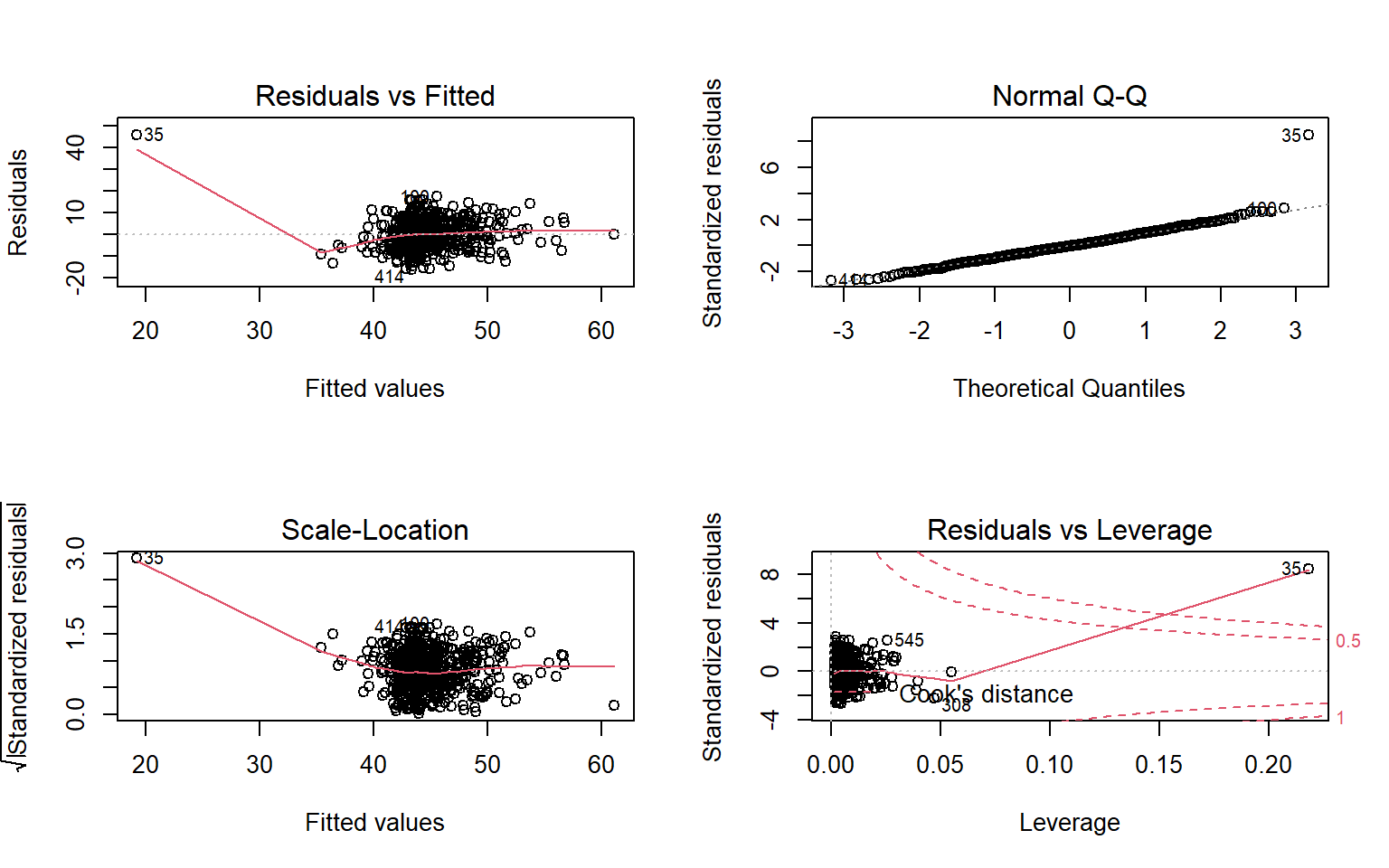

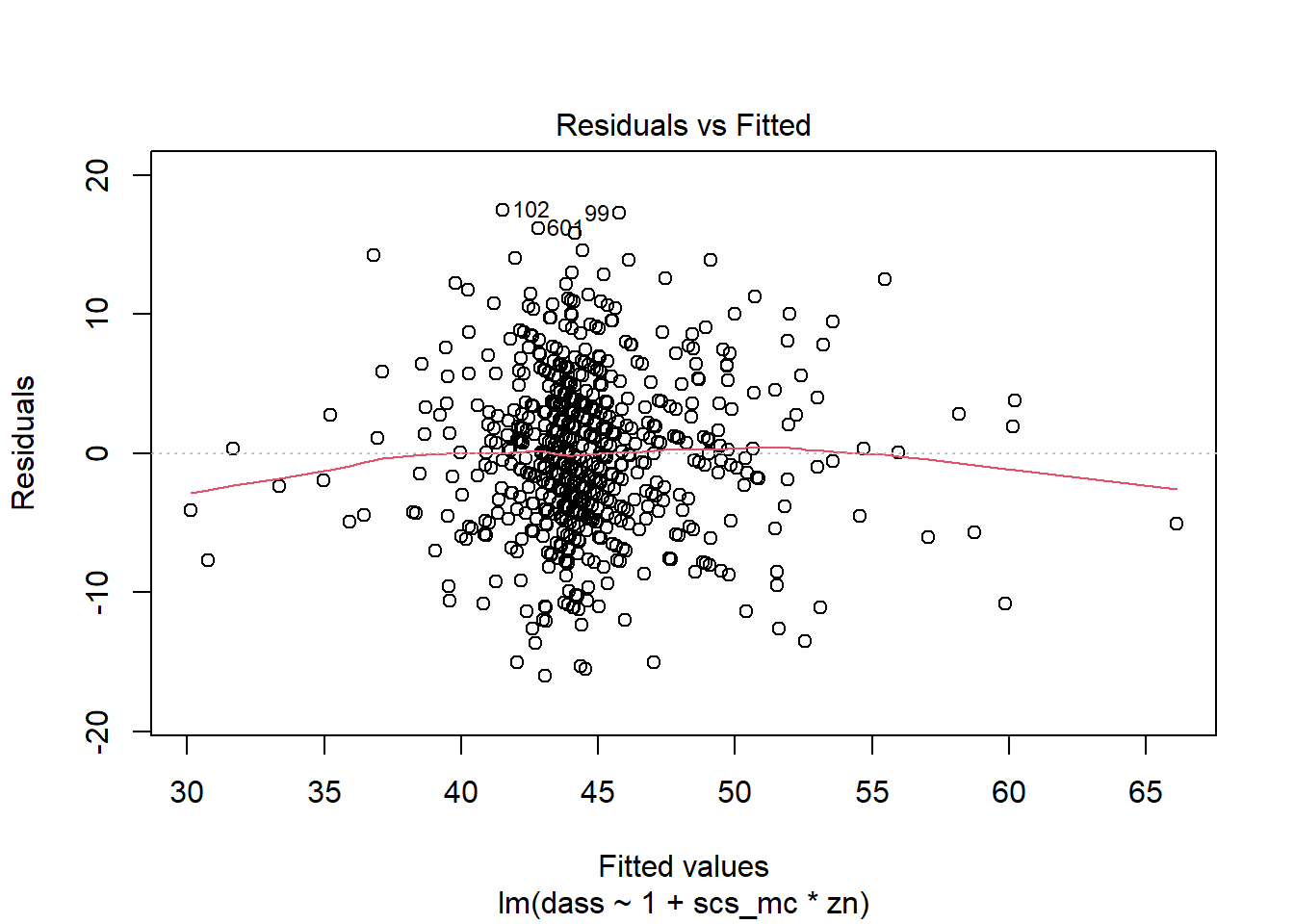

Less guided exercises - extra practice

Create a new section header in your Rmarkdown document, as we are moving onto a different dataset.

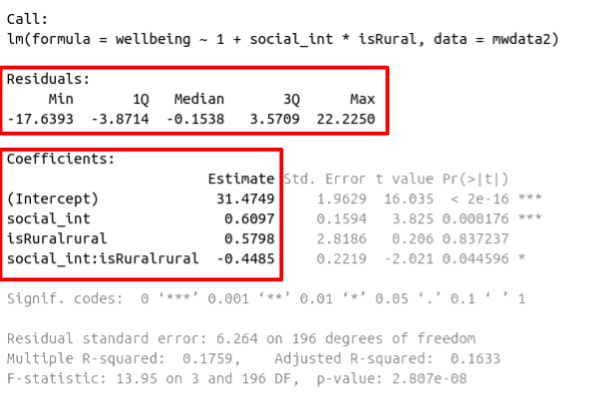

The code below loads the dataset of 656 participants’ scores on Big 5 Personality traits, perceptions of social ranks, and scores on a depression and anxiety scale.

scs_study <- read_csv("https://uoepsy.github.io/data/scs_study.csv")- Fit the following interaction model:

- \(\text{DASS-21 Score} = \beta_0 + \beta_1 \cdot \text{SCS Score} + \beta_2 \cdot \text{Neuroticism} + \beta_3 \cdot \text{SCS score} \cdot \text{Neuroticism} + \epsilon\)

- Check that the model meets the assumptions of the linear model (Tip: to get a broad overview you can pass your model to the

plot()function to get a series of plots). - If you notice any violated assumptions:

- address the issues by, e.g., excluding observations from the analysis, or replacing outliers with the next most extreme value (Winsorisation).

- after fitting a new model which you hope addresses violations, you need to check all of your assumptions again. It can be an iterative process, and the most important thing is that your final model (the one you plan to use) meets all the assumptions.

Tips:

- When there is an interaction in the model, assessing linearity becomes difficult. In fact,

crPlots()will not work. To assess, you can create a residuals-vs-fitted plot like we saw in the guided exercises above.

- Interaction terms often result in multicollinearity, because these terms are made up of the product of some ‘main effects.’ Mean-centering the variables like we have here will reduce this source of structural multicollinearity (“structural” here refers to the fact that multicollinearity is due to our model specification, rather than the data itself)

- You can fit a model and exclude specific observations. For instance, to remove the 3rd and 5th rows of the dataset:

lm(y ~ x1 + x2, data = dataset[-c(3,5),]). Be careful to remember that these values remain in the dataset, they have simply been excluded from the model fit.