Simple linear regression

Information about solutions

Solutions for these exercises are available immediately below each question.

We would like to emphasise that much evidence suggests that testing enhances learning, and we strongly encourage you to make a concerted attempt at answering each question before looking at the solutions. Immediately looking at the solutions and then copying the code into your work will lead to poorer learning.

We would also like to note that there are always many different ways to achieve the same thing in R, and the solutions provided are simply one approach.

Be sure to check the solutions to last week’s exercises.

You can still ask any questions about previous weeks’ materials if things aren’t clear!

LEARNING OBJECTIVES

- Be able to specify a simple linear model.

- Understand what fitted values and residuals are.

- Be able to interpret the coefficients of a fitted model.

- Be able to test hypotheses and construct confidence intervals for the regression coefficients.

Research question

Let’s imagine a study into income disparity for workers in a local authority. We might carry out interviews and find that there is a link between the level of education and an employee’s income. Those with more formal education seem to be better paid.

Now we wouldn’t have time to interview everyone who works for the local authority so we would have to interview a sample, say 10%.

In this lab we will use the riverview data (see below) to examine whether education level is related to income among the employees working for the city of Riverview, a hypothetical midwestern city in the US.

Load the required libraries and import the riverview data into a variable named riverview.

Data exploration

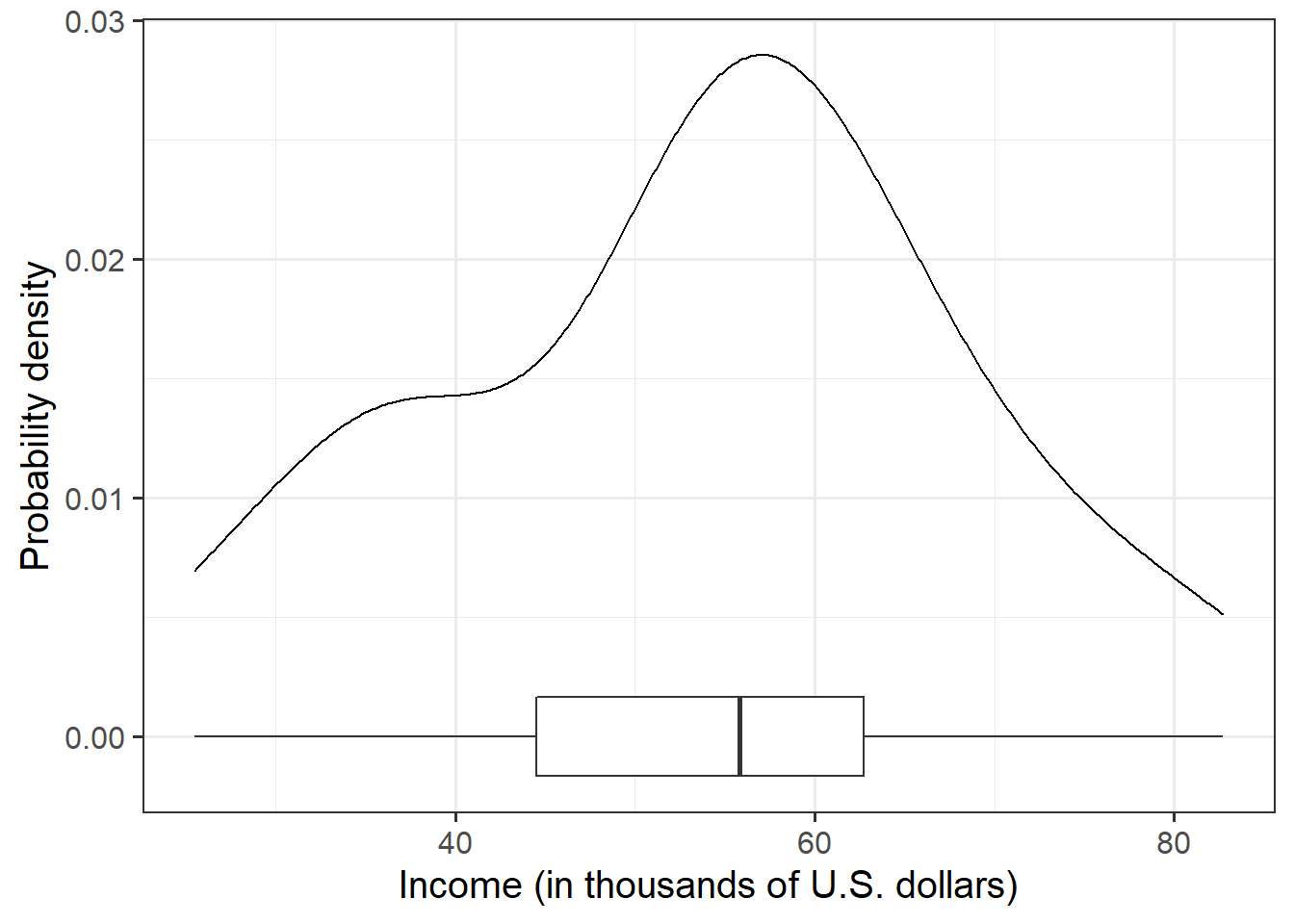

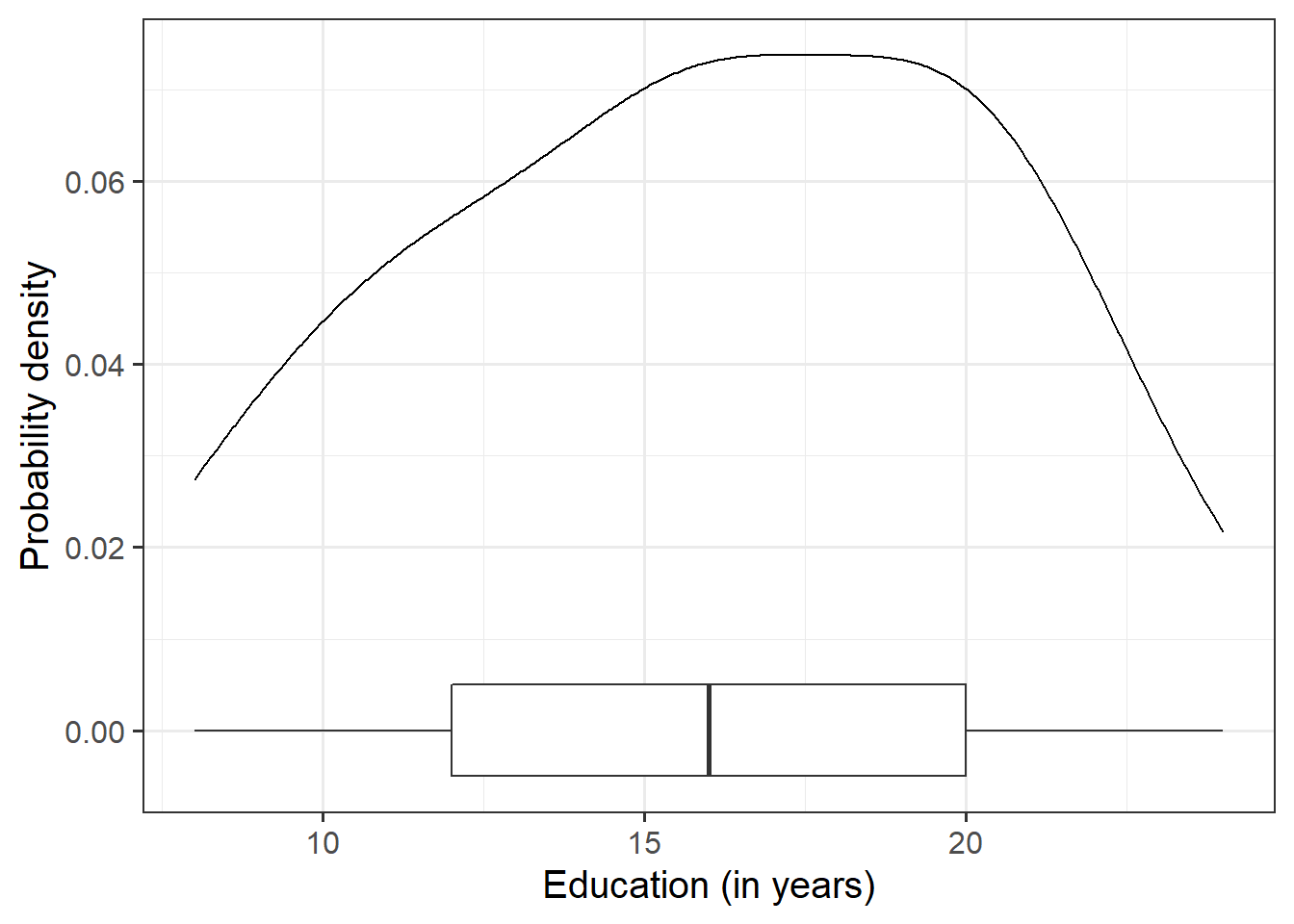

Marginal distributions

Typical steps when examining the marginal distribution of a numeric variable are:

Visualise the distribution of the variable. You could use, for example,

geom_density()for a density plot orgeom_histogram()for a histogram.Comment on the shape of the distribution. Look at the shape, centre and spread of the distribution. Is it symmetric or skewed? Is it unimodal or bimodal?

Identify any unusual observations. Do you notice any extreme observations?

Display and describe the marginal distribution of employee incomes.

Display and describe the marginal distribution of education level.

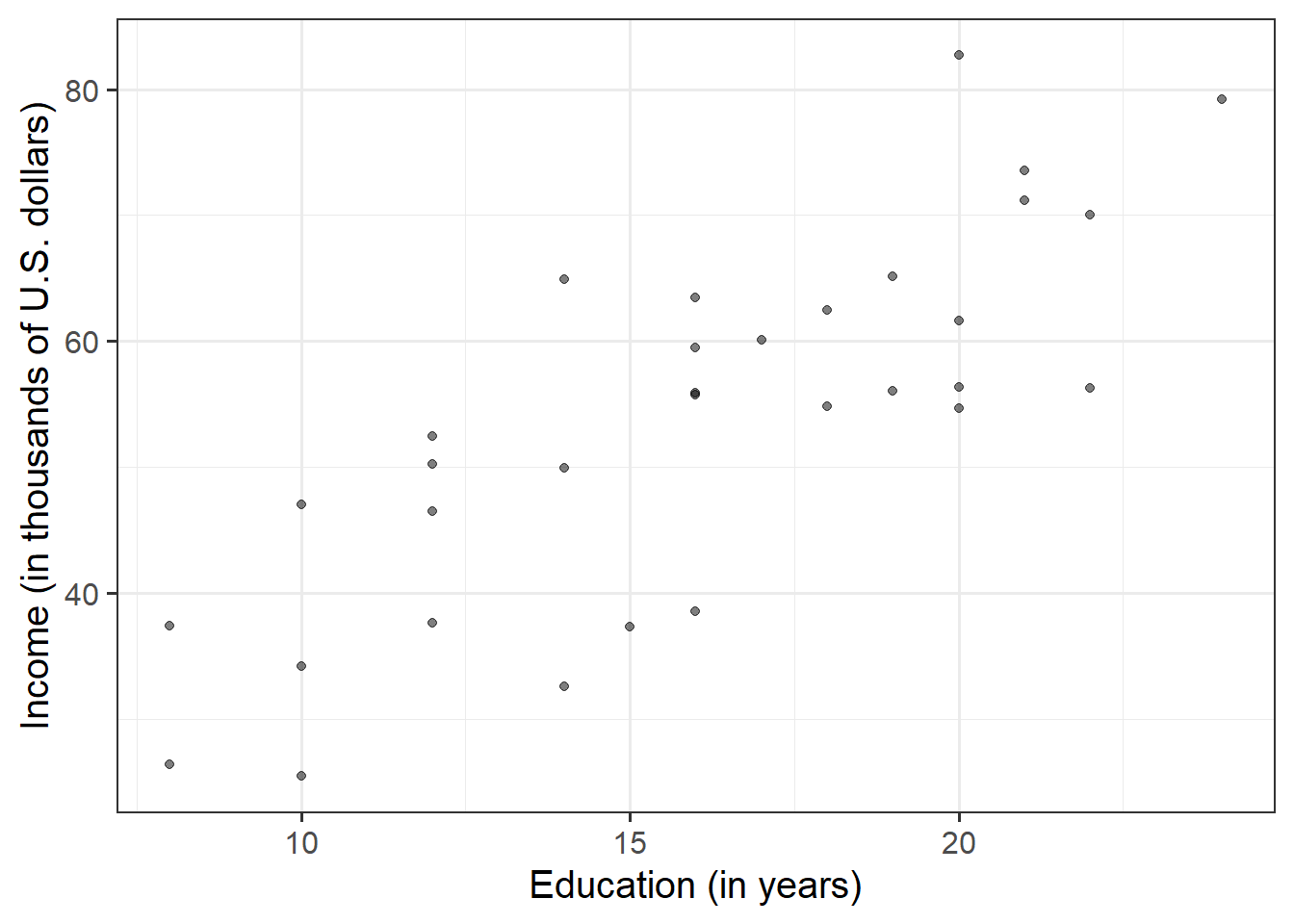

Relationship between variables

After examining the marginal distributions of the variables of interest in the analysis, we typically move on to examining relationships between the variables.

When describing the relationship between two numeric variables, we typically look at their scatterplot and comment on four characteristics of the relationship:

- The direction of the association indicates whether large values of one variable tend to go with large values of the other (positive association) or with small values of the other (negative association).

- The form of association refers to whether the relationship between the variables can be summarized well with a straight line or some more complicated pattern.

- The strength of association entails how closely the points fall to a recognizable pattern such as a line.

- Unusual observations that do not fit the pattern of the rest of the observations and which are worth examining in more detail.

Create a scatterplot of income and education level.

Use the scatterplot above to describe the relationship between income and level of education among the employees in the sample.

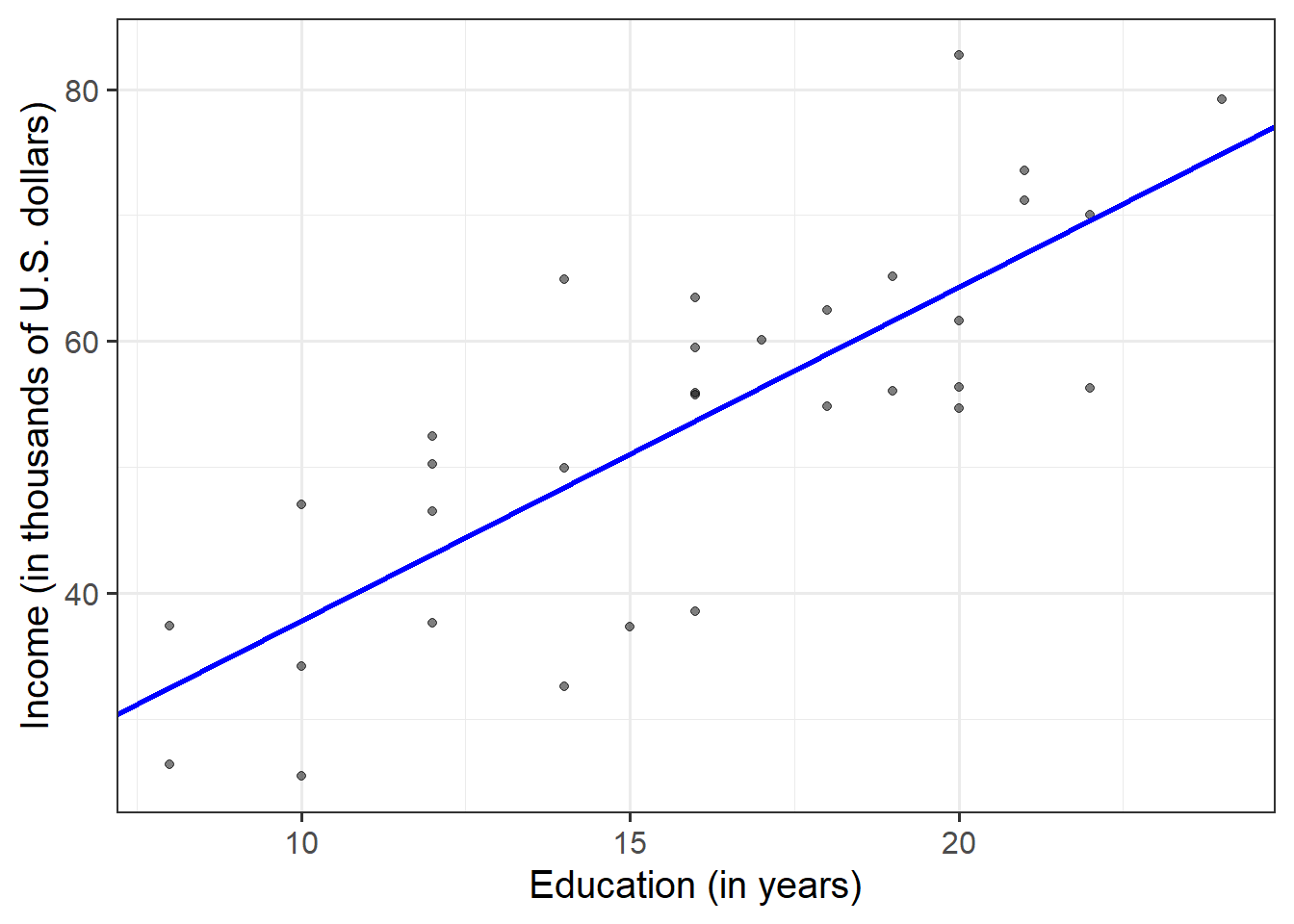

Model specification and fitting

The scatterplot highlights a linear relationship, where the data points are scattered around an underlying linear pattern with a roughly-constant spread as x varies.

Hence, we will try to fit a simple (= one x variable only) linear regression model:

\[ y = \beta_0 + \beta_1 x + \epsilon \quad \text{where} \quad \epsilon \sim N(0, \sigma) \text{ independently} \]

where “\(\epsilon \sim N(0, \sigma) \text{ independently}\)” means that the errors around the line have mean zero and constant spread as x varies.

Fit the linear model to the sample data using the lm() function and name the output mdl.

Write down the equation of the fitted line.

Hint:

The syntax of the lm() function is:

lm(<response variable> ~ 1 + <explanatory variable>, data = <dataframe>)

Explore the following equivalent ways to obtain the estimated regression coefficients — that is, \(\hat \beta_0\) and \(\hat \beta_1\) — from the fitted model:

mdlmdl$coefficientscoef(mdl)coefficients(mdl)summary(mdl)

Interpret the estimated intercept and slope in the context of the question of interest.

Explore the following equivalent ways to obtain the estimated standard deviation of the errors — that is, \(\hat \sigma\) — from the fitted model mdl:

sigma(mdl)summary(mdl)

Interpret the estimated standard deviation of the errors in the context of the research question.

Plot the data and the fitted regression line. To do so:

- Extract the estimated regression coefficients e.g. via

betas <- coef(mdl) - Extract the first entry of

betasviabetas[1] - Extract the second entry of

betasviabetas[2] - Provide the intercept and slope to the function

geom_abline(intercept = <intercept>, slope = <slope>)

Fitted and predicted values

To compute the model-predicted values for the data in the sample:

predict(<fitted model>)fitted(<fitted model>)fitted.values(<fitted model>)mdl$fitted.values

predict(mdl)## 1 2 3 4 5 6 7 8

## 32.53175 32.53175 37.83435 37.83435 37.83435 43.13694 43.13694 43.13694

## 9 10 11 12 13 14 15 16

## 43.13694 48.43953 48.43953 48.43953 51.09083 53.74212 53.74212 53.74212

## 17 18 19 20 21 22 23 24

## 53.74212 53.74212 56.39342 59.04472 59.04472 61.69601 61.69601 64.34731

## 25 26 27 28 29 30 31 32

## 64.34731 64.34731 64.34731 66.99861 66.99861 69.64990 69.64990 74.95250To compute model-predicted values for other data:

predict(<fitted model>, newdata = <dataframe>)

We first need to remember that the model predicts income using the independent variable education. Hence, if we want predictions for new data, we first need to create a tibble with a column called education containing the years of education for which we want the prediction.

newdata <- tibble(education = c(11, 23))

newdata## # A tibble: 2 x 1

## education

## <dbl>

## 1 11

## 2 23Then we take newdata and add a new column called income_hat, computed as the prediction from the fitted mdl using the newdata above:

newdata <- newdata %>%

mutate(

income_hat = predict(mdl, newdata = newdata)

)

newdata## # A tibble: 2 x 2

## education income_hat

## <dbl> <dbl>

## 1 11 40.5

## 2 23 72.3Residuals

The residuals represent the deviations between the actual responses and the predicted responses and can be obtained either as

mdl$residuals;resid(mdl);residuals(mdl);- computing them as the difference between the response and the predicted response.

Use predict(mdl) to compute the fitted values and residuals. Mutate the riverview dataframe to include the fitted values and residuals as extra columns.

Assign to the following symbols the corresponding numerical values:

- \(y_{3}\) = response variable for unit \(i = 3\) in the sample data

- \(\hat y_{3}\) = fitted value for the third unit

- \(\hat \epsilon_{5} = y_{5} - \hat y_{5}\) = the residual corresponding to the 5th unit.

Inference for regression coefficients

Consider again the output of the summary() function:

summary(mdl)##

## Call:

## lm(formula = income ~ 1 + education, data = riverview)

##

## Residuals:

## Min 1Q Median 3Q Max

## -15.809 -5.783 2.088 5.127 18.379

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 11.3214 6.1232 1.849 0.0743 .

## education 2.6513 0.3696 7.173 5.56e-08 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 8.978 on 30 degrees of freedom

## Multiple R-squared: 0.6317, Adjusted R-squared: 0.6194

## F-statistic: 51.45 on 1 and 30 DF, p-value: 5.562e-08To quantify the amount of uncertainty in each estimated coefficient that is due to sampling variability, we use the standard error (SE) of the coefficient. Recall that a standard error gives a numerical answer to the question of how variable a statistic will be because of random sampling.

The standard errors are found in the column “Std. Error.” That is, the SE of the intercept is 6.1232, and the SE of the slope corresponding to the education variable is 0.3696.

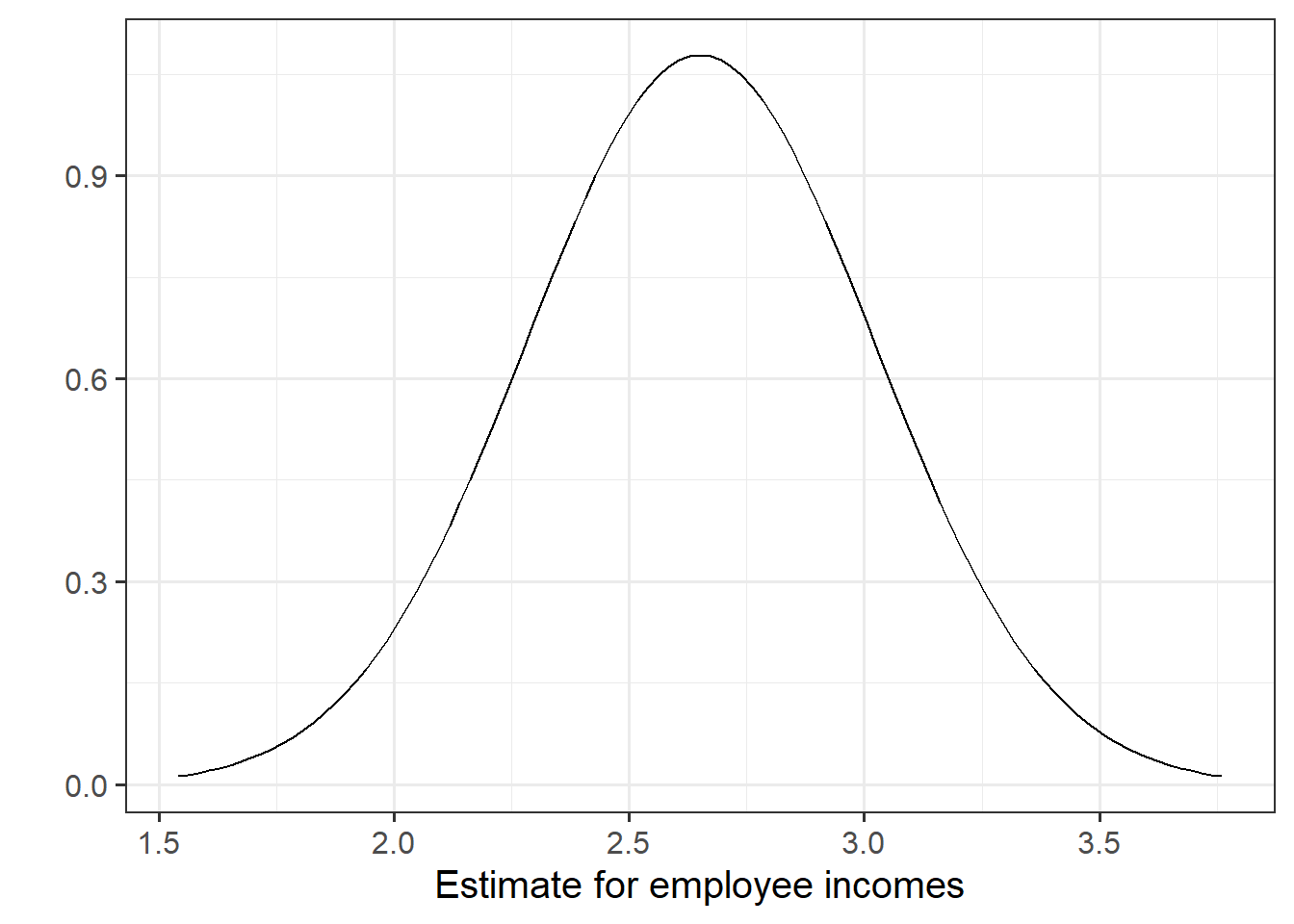

In this example the slope, 2.651, has a standard error of 0.37. One way to envision this is as a distribution. Our best guess (mean) for the slope parameter is 2.651. The standard deviation of this distribution is 0.37, which indicates the precision (uncertainty) of our estimate.

Figure 4: Sampling distribution of the slope coefficient. The distribution is approximately bell-shaped with a mean of 2.651 and a standard error of 0.37.

It shouldn’t surprise you that the reference distribution in this case is a t-distribution with \(n-2\) degrees of freedom, where \(n\) is the sample size. Recall the main formulas for obtaining a confidence interval and a test-statistic:

Test statistic

A test statistic for the null hypothesis \(H_0: \beta_1 = 0\) is \[ t = \frac{\hat \beta_1 - 0}{SE(\hat \beta_1)} \] which follows a t-distribution with \(n-2\) degrees of freedom.

Confidence interval

A confidence interval for the population slope is \[ \hat \beta_1 \pm t^* \cdot SE(\hat \beta_1) \] where \(t^*\) denotes the critical value chosen from t-distribution with \(n-2\) degrees of freedom for a desired \(\alpha\) level of confidence.

Test the hypothesis that the population slope is zero — that is, that there is no linear association between income and education level in the population.

Compute a confidence interval for the regression slope