| variable | description |

|---|---|

| Diagnosis | Diagnosis classifies the three types of individuals: 1 = Amnesic patients, 2 = Huntingtons patients, and 3 = Control group of individuals with no known neurological disorder |

| Task | Task tells us to which one of three tasks each study participant was randomly assigned to: 1 = Grammar (which consists of classifying letter sequences as either following or not following grammatical rules), 2 = Classification (which consists of classifying stimuli into certain groupings, based on previously indicated information about the groups characteristics), and 3 = Recognition (which consists of recognising particular stimuli as stimuli that have previously been presented during the task) |

| Y | Score |

Simple Effects, Pairwise Comparisons, & Corrections

Learning Objectives

At the end of this lab, you will:

- Understand how to interpret simple effects for experimental designs

- Understand how to conduct pairwise comparisons

- Understand how to apply corrections available for multiple comparisons

What You Need

- Be up to date with lectures

- Have completed previous lab exercises from Semester 1 Week 7, Semester 1 Week 8, Semester 1 Week 11, Semester 2 Week 1, Semester 2 Week 2, and Semester 2 Week 3.

Required R Packages

Remember to load all packages within a code chunk at the start of your RMarkdown file using library(). If you do not have a package and need to install, do so within the console using install.packages(" "). For further guidance on installing/updating packages, see Section C here.

For this lab, you will need to load the following package(s):

- tidyverse

- psych

- kableExtra

- sjPlot

- interactions

- patchwork

- emmeans

Presenting Results

All results should be presented following APA guidelines.If you need a reminder on how to hide code, format tables/plots, etc., make sure to review the rmd bootcamp.

The example write-up sections included as part of the solutions are not perfect - they instead should give you a good example of what information you should include and how to structure this. Note that you must not copy any of the write-ups included below for future reports - if you do, you will be committing plagiarism, and this type of academic misconduct is taken very seriously by the University. You can find out more here.

Lab Data

You can download the data required for this lab here or read it in via this link https://uoepsy.github.io/data/cognitive_experiment.csv

Note, you have already worked with some of this data last week - see Semester 2 Week 3 lab, but we now have a third Task condition - Classification.

Study Overview

Research Question

Are there differences in types of memory deficits for those experiencing different cognitive impairment(s)?

In this week’s exercises, we will further explore questions such as:

- Does level \(i\) of the first factor have an effect on the response?

- Does level \(j\) of the second factor have an effect on the response?

- Is there a combined effect of level \(i\) of the first factor and level \(j\) of the second factor on the response? In other words, is there interaction of the two factors so that the combined effect is not simply the additive effect of level \(i\) of the first factor plus the effect of level \(j\) of the second factor?

Setup

- Create a new RMarkdown file

- Load the required package(s)

- Read the cognitive_experiment dataset into R, assigning it to an object named

cog

Exercises

Study & Analysis Plan Overview

Firstly, examine the dataset, and perform any necessary and appropriate data management steps.

Next, consider what would be the most appropriate coding constraint to apply in order to best address the research question - i.e., are we interested in whether group X (e.g., Amnesic) differed from group Y (e.g., Huntingtons), or whether group X (e.g., Amnesic) differed from the grand mean?

Choose appropriate reference levels for the Diagnosis and Task variables based on your decision above.

Data Management

- The

str()function will return the overall structure of the dataset, this can be quite handy to look at

- Convert categorical variables to factors, and if needed, provide better variable names*

- Label factors appropriately to aid with your model interpretations if required*

- Check that the dataset is complete (i.e., are there any

NAvalues?). We can check this usingis.na()

Note that all of these steps can be done in combination - the mutate() and factor() functions will likely be useful here.

Coding Constraints

- If you think you’d benefit from a refresher on coding constraints, it might be best to revisit the materials from Semester 1 Block 2 (especially the dummy vs effects coding flashcard).

- If you would like an overview of coding constraints in the context of interaction models, review the categorical x categorical example > coding constraints flashcard.

Reference Levels

- Review the specifying reference levels flashcard.

*See the numeric outcomes & categorical predictors flashcard.

Provide a brief overview of the study design and data, before detailing your analysis plan to address the research question.

- Give the reader some background on the context of the study (you might be able to re-use some of the content you wrote for Semester 2 Week 3 lab here, but note that we now have an extra condition within Task)

- Outline data checks / data cleaning

- State what type of analysis you will conduct in order to address the research question

- Specify the model to be fitted to address the research question (note that you will need to specify the reference level of your categorical variables. This will be somewhat similar to last week, but with the addition of Classification in Task, our model will contain a different number of parameters)

- Specify your chosen significance (\(\alpha\)) level

- State your hypotheses

Much of the information required can be found in the Study Overview codebook.

The statistical models flashcards may also be useful to refer to. Specifically the interaction models flashcards and categorical x categorical example flashcards might be of most use.

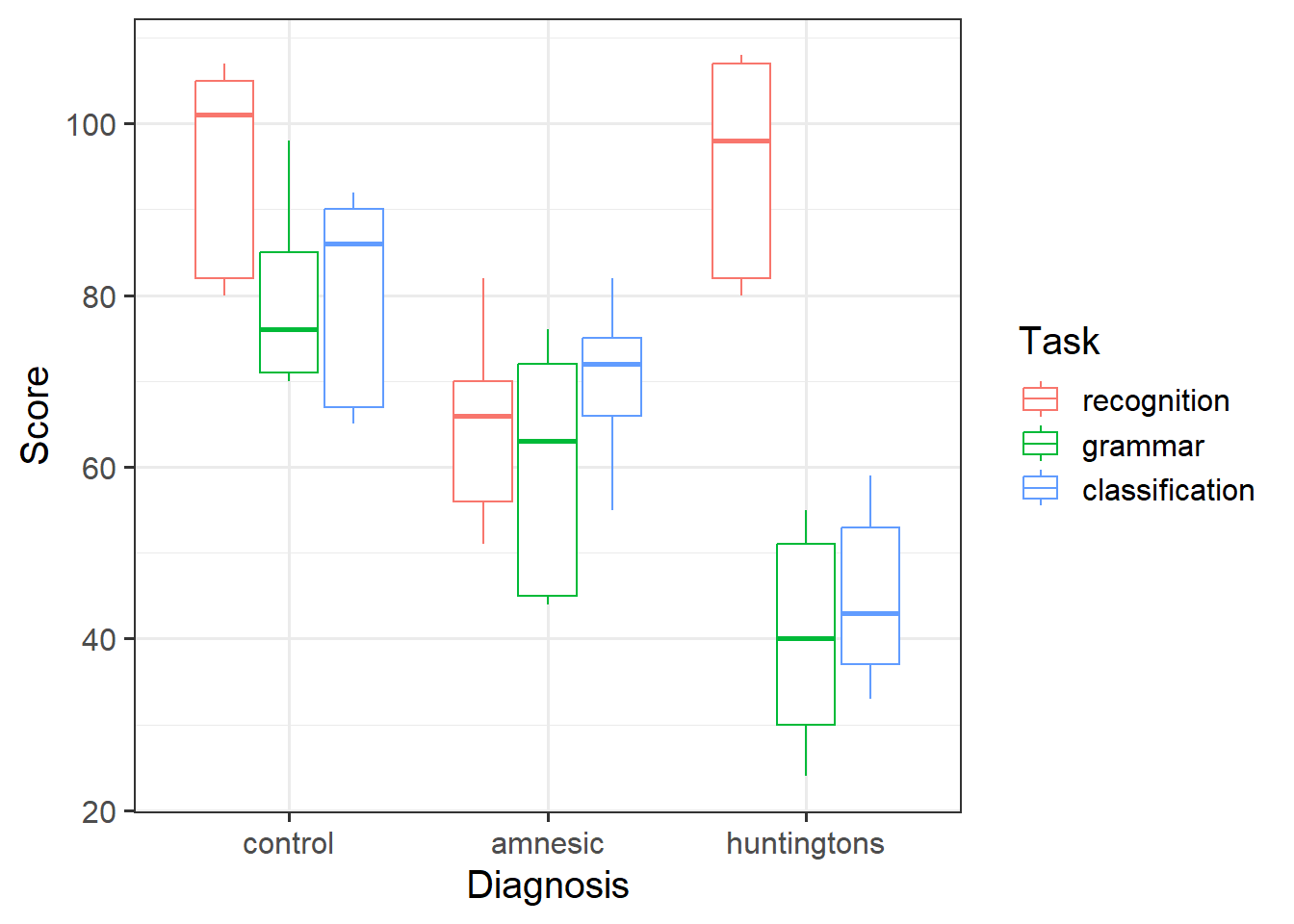

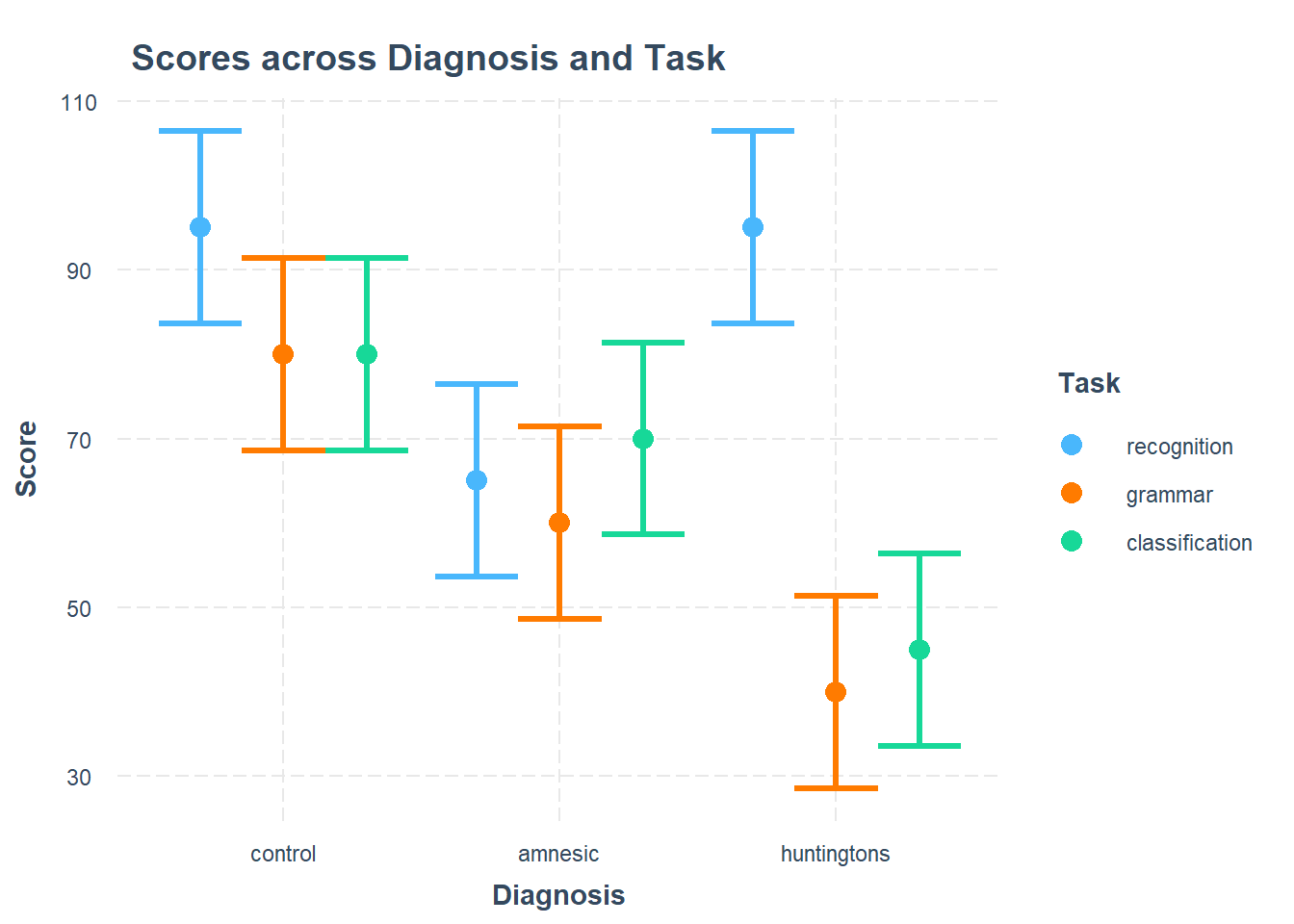

Descriptive Statistics & Visualisations

Provide a table of descriptive statistics and visualise your data.

Interpret the descriptive statistics and visualisations in the context of the study (i.e., comment on any observed differences among groups).

Review the many ways to numerically and visually explore your data by reading over the data exploration flashcards.

For examples, see flashcards on descriptives statistics tables - categorical and numeric values examples and categorical x categorical example - visualise data.

Make sure to comment on any observed differences among the sample means of the different conditions.

Model Fitting & Interpretation

Fit the specified model using lm(), and assign it the name “mdl_int”.

Provide key model results in a formatted table and plot the interaction model before reporting in-text the overall model fit.

Model Building

- We can fit interaction models using the

lm()function.

- For an overview, see the interaction models flashcards.

- For an example, review the interaction models > categorical x categorical example > model building flashcards.

Results Table

- Use

tab_model()from the sjPlot package. For a quick guide, review the tables flashcard.

Plot Model

- Using the

cat_plot()function from the interactions package, visualise the interaction effects from your model. - For an overview and example, review the interaction models > categorical x categorical example > model visualisation flashcards.

Contrast Analysis

Let’s move onto testing differences between specific group means.

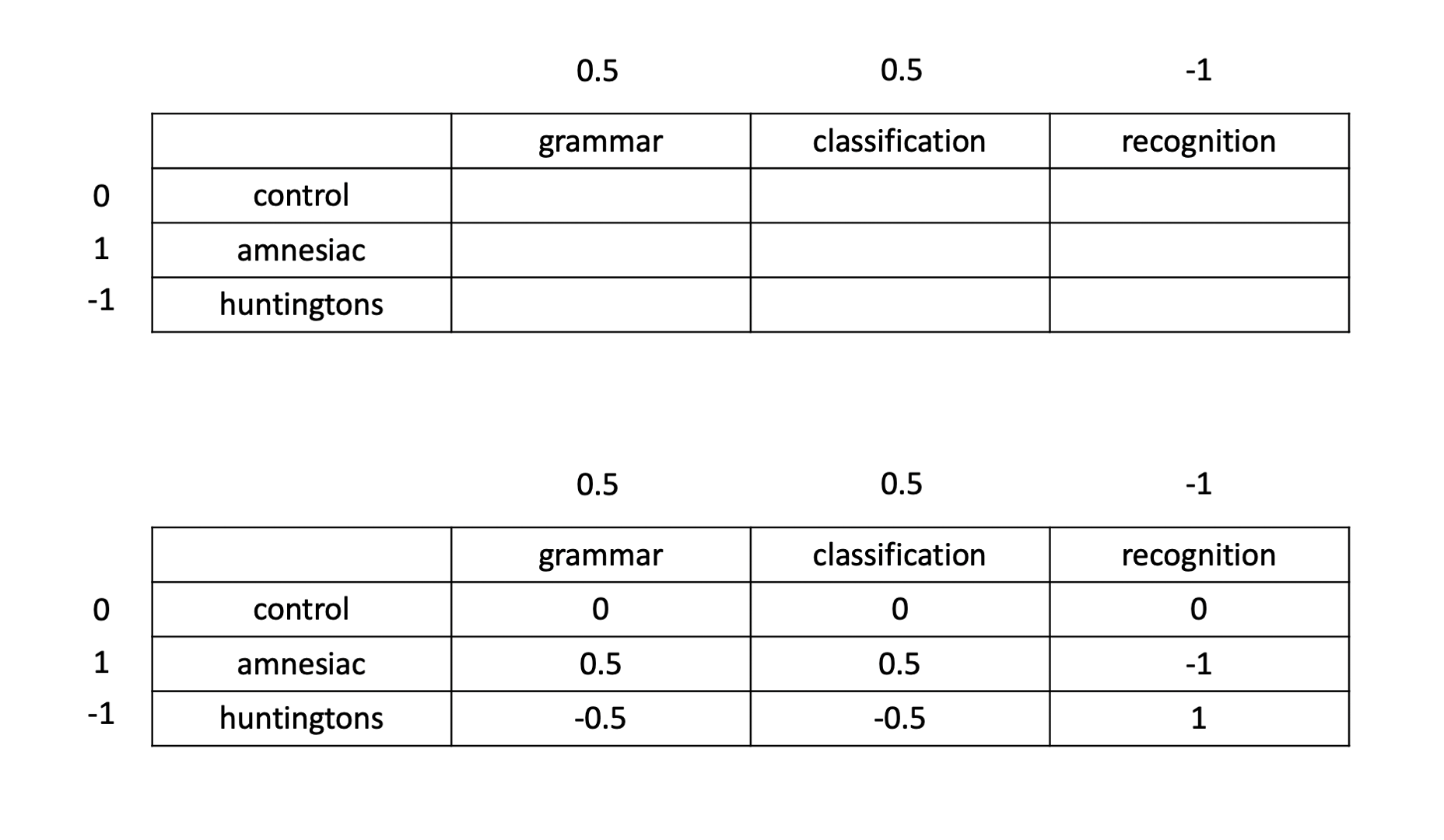

In terms of the diagnostic groups, we want to compare the individuals with amnesia to those with Huntingtons. This corresponds to a contrast with coefficients of 0, 1, and −1, for control, amnesic, and Huntingtons, respectively.

Similarly, in terms of the tasks, we want to compare the average of the two implicit memory tasks with the explicit memory task. This corresponds to a contrast with coefficients of 0.5, 0.5, and −1 for the three tasks.

When we are in presence of a significant interaction, the coefficients for a contrast between the means are found by multiplying each row coefficient with all column coefficients as shown below:

Specify the coefficients to be used in the contrast analysis, and present in a table.

Next, formally state the contrast that the researchers were interested in as testable hypotheses.

For an overview and example, review the manual contrasts flashcards.

Firstly, use emmeans() to obtain the estimated means and uncertainties for your factors.

Next, specify the coefficients of the comparison and run the contrast analysis, obtaining 95% confidence intervals.

Report the results of the contrast analysis in full.

For an overview and example, review the manual contrasts flashcards.

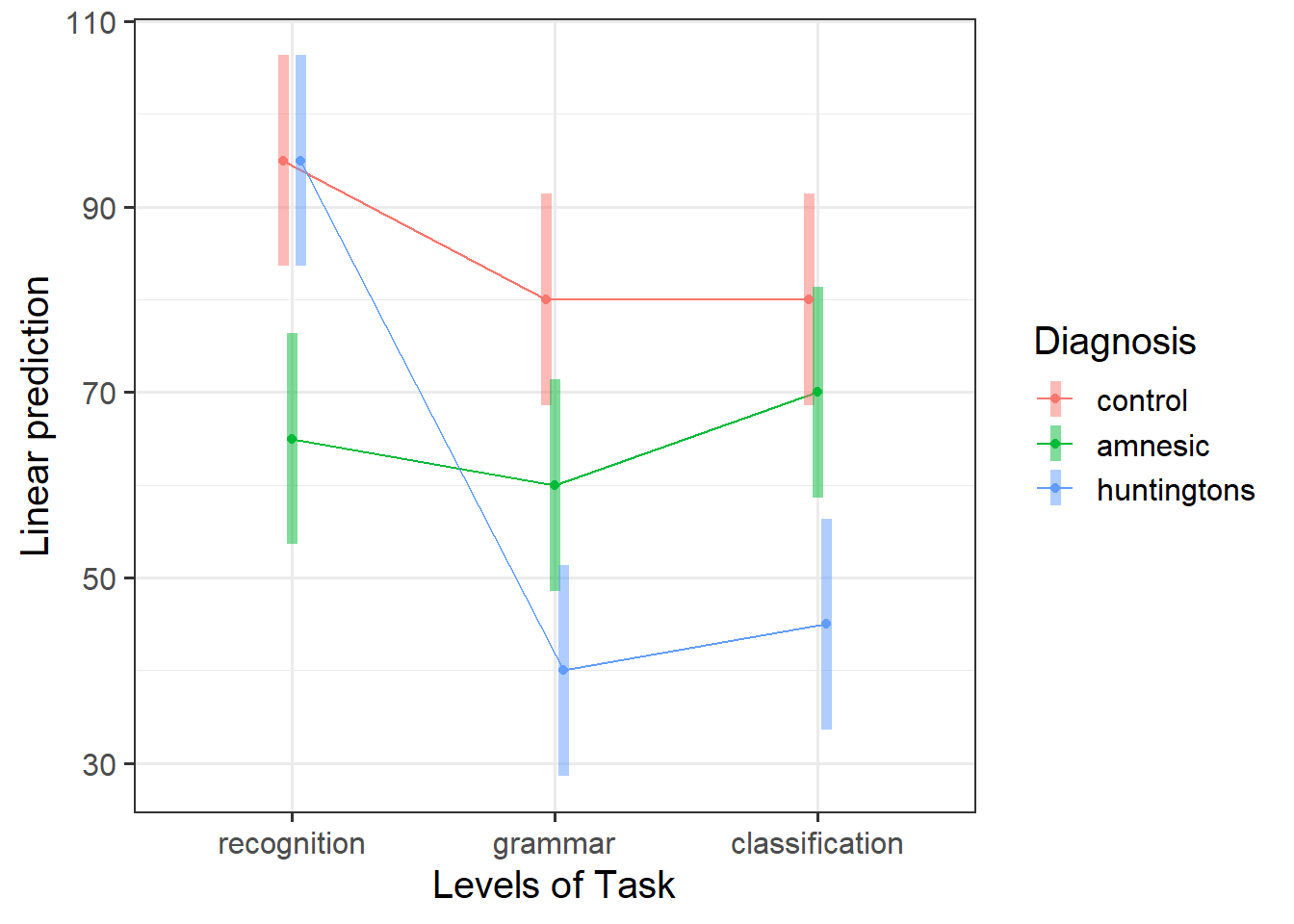

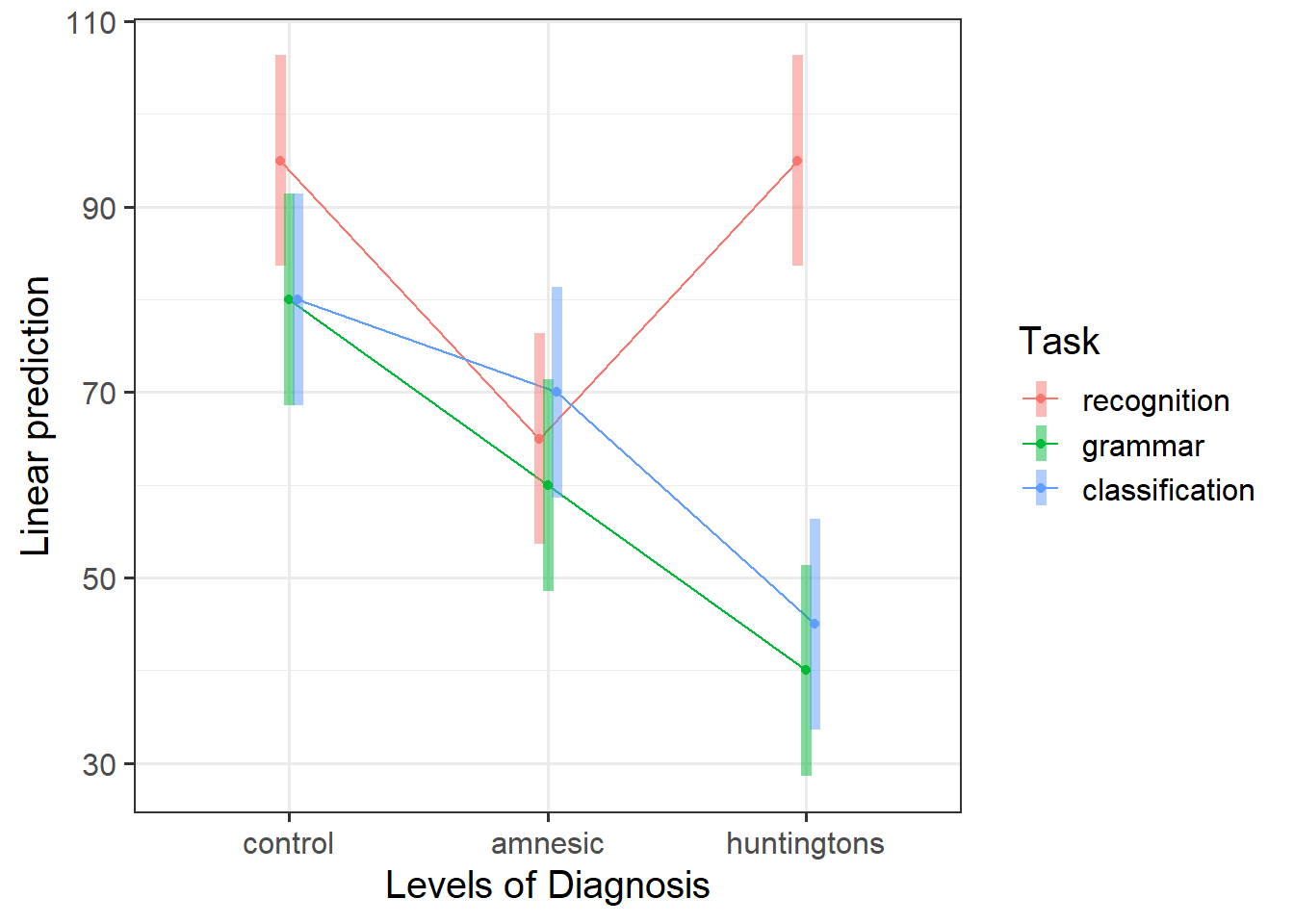

Simple Effects

Examine the simple effects for Task at each level of Diagnosis; and then the simple effects for Diagnosis at each level of Task.

For an overview and example, review the simple effects flashcards.

Visualise the interaction, displaying two plots - one with Diagnosis on the x-axis, and the other with Task on the x-axis.

Considering the simple effects that you noted above, identify the significant effects and match them to the corresponding points of your interaction plot.

For an overview and example, review the simple effects flashcards.

Recall that the patchwork package allows us to arrange multiple plots using either / or | or +.

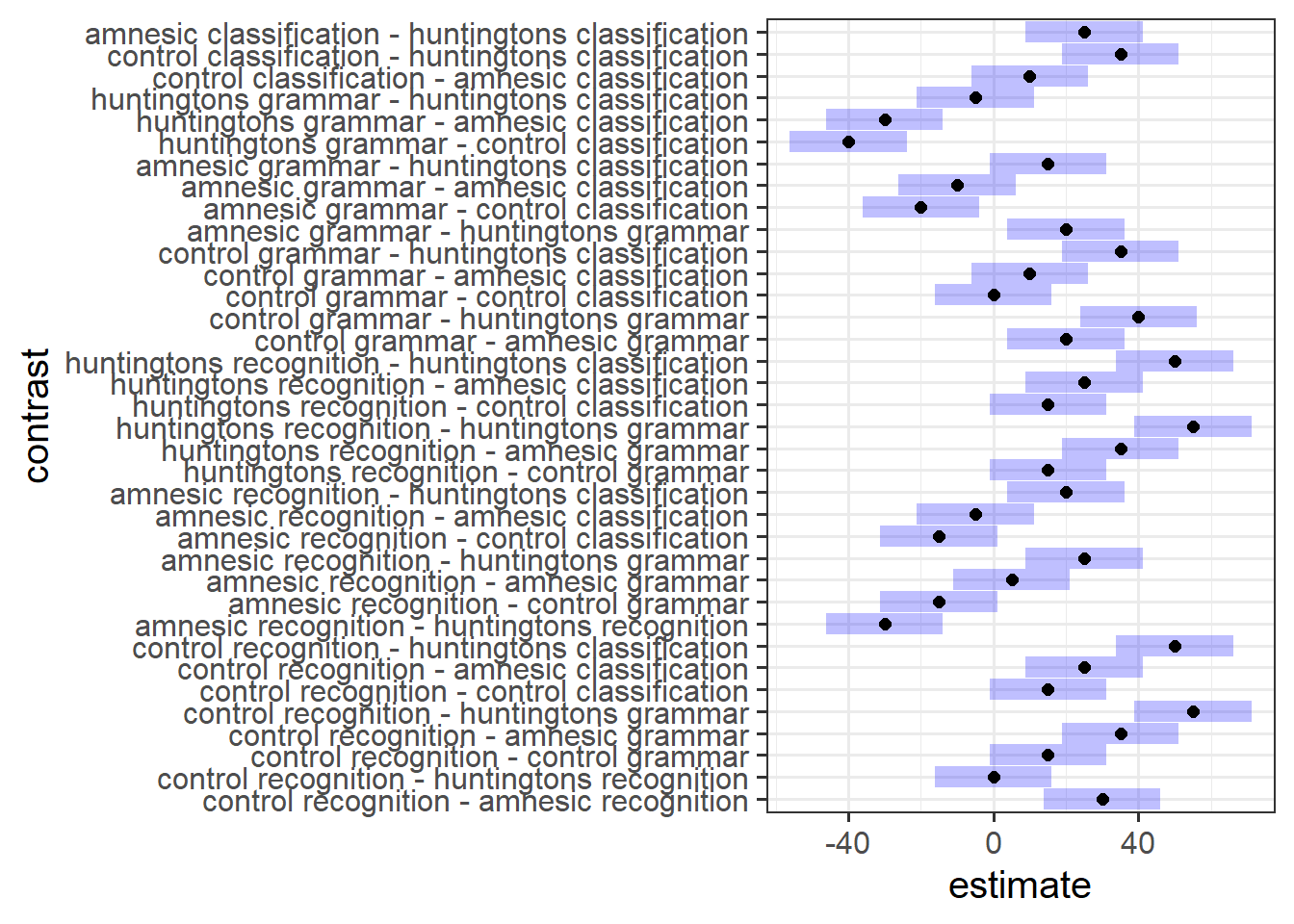

Pairwise Comparisons & Multiple Corrections

Conduct exploratory pairwise comparisons to compare all levels of Diagnosis with all levels of Task, applying no correction (note that Tukey will be automatically applied since we are comparing groups of means, so you will need to overwrite this).

Without adjusting our \(\alpha\) (or \(p\)-value), why might any inferences drawn from your output be problematic?

For an overview, review the multiple comparisons flashcards.

Select an appropriate method to adjust for multiple comparisons, and then obtain confidence intervals.

Comment on how these \(p\)-values differ from your raw (i.e., unadjusted) \(p\)-values.

For an overview, review the multiple comparisons flashcards.

Compile Report

Knit your report to PDF, and check over your work. To do so, you should make sure:

- Only the output you want your reader to see is visible (e.g., do you want to hide your code?)

- Check that the tinytex package is installed

- Ensure that the ‘yaml’ (bit at the very top of your document) looks something like this:

---

title: "this is my report title"

author: "B1234506"

date: "07/09/2024"

output: bookdown::pdf_document2

---If you are having issues knitting directly to PDF, try the following:

- Knit to HTML file

- Open your HTML in a web-browser (e.g. Chrome, Firefox)

- Print to PDF (Ctrl+P, then choose to save to PDF)

- Open file to check formatting

To not show the code of an R code chunk, and only show the output, write:

```{r, echo=FALSE}

# code goes here

```To show the code of an R code chunk, but hide the output, write:

```{r, results='hide'}

# code goes here

```To hide both code and output of an R code chunk, write:

```{r, include=FALSE}

# code goes here

```You must make sure you have tinytex installed in R so that you can “Knit” your Rmd document to a PDF file:

install.packages("tinytex")

tinytex::install_tinytex()

Footnotes

the differences between the group means for the comparison as labelled↩︎