| variable | description |

|---|---|

| age | Age in years of respondent |

| outdoor_time | Self report estimated number of hours per week spent outdoors |

| social_int | Self report estimated number of social interactions per week (both online and in-person) |

| routine | Binary 1=Yes/0=No response to the question 'Do you follow a daily routine throughout the week?' |

| wellbeing | Warwick-Edinburgh Mental Wellbeing Scale (WEMWBS), a self-report measure of mental health and well-being. The scale is scored by summing responses to each item, with items answered on a 1 to 5 Likert scale. The minimum scale score is 14 and the maximum is 70 |

| location | Location of primary residence (City, Suburb, Rural) |

| steps_k | Average weekly number of steps in thousands (as given by activity tracker if available) |

Multiple Linear Regression & Standardization

Learning Objectives

At the end of this lab, you will:

- Extend the ideas of single linear regression to consider regression models with two or more predictors

- Understand how to interpret significance tests for \(\beta\) coefficients

- Understand how to standardize model coefficients and when this is appropriate to do

- Understand how to interpret standardized model coefficients in multiple linear regression models

Requirements

Required R Packages

Remember to load all packages within a code chunk at the start of your RMarkdown file using library(). If you do not have a package and need to install, do so within the console using install.packages(" "). For further guidance on installing/updating packages, see Section C here.

For this lab, you will need to load the following package(s):

- tidyverse

- patchwork

- sjPlot

- ppcor

- kableExtra

Presenting Results

All results should be presented following APA guidelines.If you need a reminder on how to hide code, format tables/plots, etc., make sure to review the rmd bootcamp.

The example write-up sections included as part of the solutions are not perfect - they instead should give you a good example of what information you should include and how to structure this. Note that you must not copy any of the write-ups included below for future reports - if you do, you will be committing plagiarism, and this type of academic misconduct is taken very seriously by the University. You can find out more here.

Lab Data

You can download the data required for this lab here or read it in via this link https://uoepsy.github.io/data/wellbeing_rural.csv

Study Overview

Research Question

Is there an association between wellbeing and time spent outdoors after taking into account the association between wellbeing and social interactions?

Setup

- Create a new RMarkdown file

- Load the required package(s)

- Read the wellbeing dataset into R, assigning it to an object named

mwdata

Exercises

In the first section of this lab, you will focus on the statistics contained within the highlighted sections of the summary() output below. You will be both calculating these by hand and deriving via R code before interpreting these values in the context of the research question following APA guidelines. In the second section of this lab, you will focus on standardization. We will be building on last weeks lab example throughout these exercises.

Lab 2 Recap

Fit the following multiple linear regression model, and assign the output to an object called mdl, and examine the summary output.

\[ \text{Wellbeing} = \beta_0 + \beta_1 \cdot Social~Interactions + \beta_2 \cdot Outdoor~Time + \epsilon \]

We can fit our multiple regression model using the lm() function. For a recap, see the statistical models flashcards, specifically the multiple linear regression models - description & specification card.

Significance Tests for \(\beta\) Coefficients

Test the hypothesis that the population slope for outdoor time is zero — that is, that there is no linear association between wellbeing and outdoor time (after controlling for the number of social interactions) in the population.

See the t value flashcard (within simple & multiple regression models - extracting Information > model coefficients > t value).

Review this weeks lecture slides for an example of how to do this by-hand and in R.

Obtain 95% confidence intervals for the regression coefficients, and write a sentence about each one.

Recall the formula for obtaining a confidence interval:

A confidence interval for the population slope is \[ \hat \beta_j \pm t^* \cdot SE(\hat \beta_j) \] where \(t^*\) denotes the critical value chosen from t-distribution with \(n-k-1\) degrees of freedom (where \(k\) = number of predictors and \(n\) = sample size) for a desired \(\alpha\) level of confidence.

Review this weeks lecture slides for an example of how to do this by-hand and in R.

Standardization

Fit two regression models using the standardized response and explanatory variables. For demonstration purposes, fit one model using z-scored variables, and the other using the scale() function.

Both of these methods - z-scoring and scale() - will give us a standardized model.

Examine the estimates from both standardized models - what do you notice?

Review the simple & multiple regression models - extracting information > model coefficients flashcards.

Consider whether the values the same, or different? What would you expect them to be and why?

Examine the ‘Coefficients’ section of the summary() output from the standardized and unstandardized models - what do you notice? In other words, what is the same / different?

How do these standardized estimates relate to the semi-partial correlation coefficients?

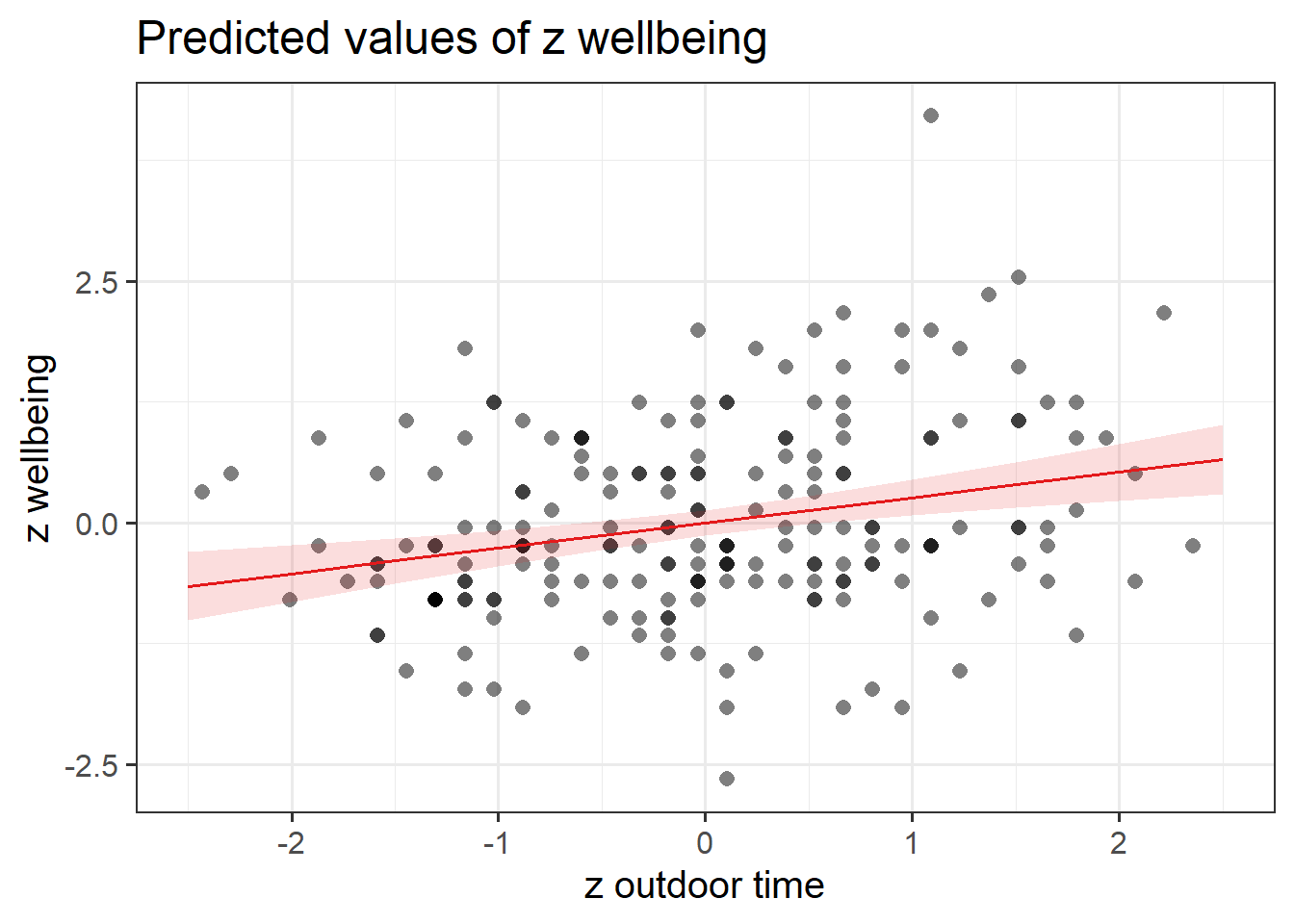

Produce a visualisation of the association between wellbeing and outdoor time, after accounting for social interactions.

Semi-partial (part) correlation coefficient

Firstly, think about what semi-partial correlation coefficients and standardized \(\beta\) coefficients represent:

- Semi-partial correlation coefficients (which you may also see referred to as part correlations) estimate the unique contribution of each predictor variable to the explained variance in the dependent variable, while controlling for the influence of all other predictors in the model.

- Standardized \(\beta\) estimates represent the change in the dependent variable in standard deviation units for a one-standard-deviation change in the predictor variable, whilst holding all other predictors constant.

To calculate semi-partial (part) correlation coefficients, you will need to use the spcor.test() from the ppcor package.

Recall that you can look at the estimates from either ‘mdl_s’ or ‘mdl_z’ - they contain the same standardized model estimates.

Note this is quite a difficult question (really it could be optional), and the exercise is designed to get you to think about how semi-partial correlation coefficients and standardized \(\beta\) coefficients are related.

Plotting

To visualise just one association, you need to specify the terms argument in plot_model(). Don’t forget you can look up the documentation by typing ?plot_model in the console.

Since using plot_model(), We need to use ‘mdl_z’ here not ‘mdl_s’ - it won’t work with a model that’s used the scale() function.

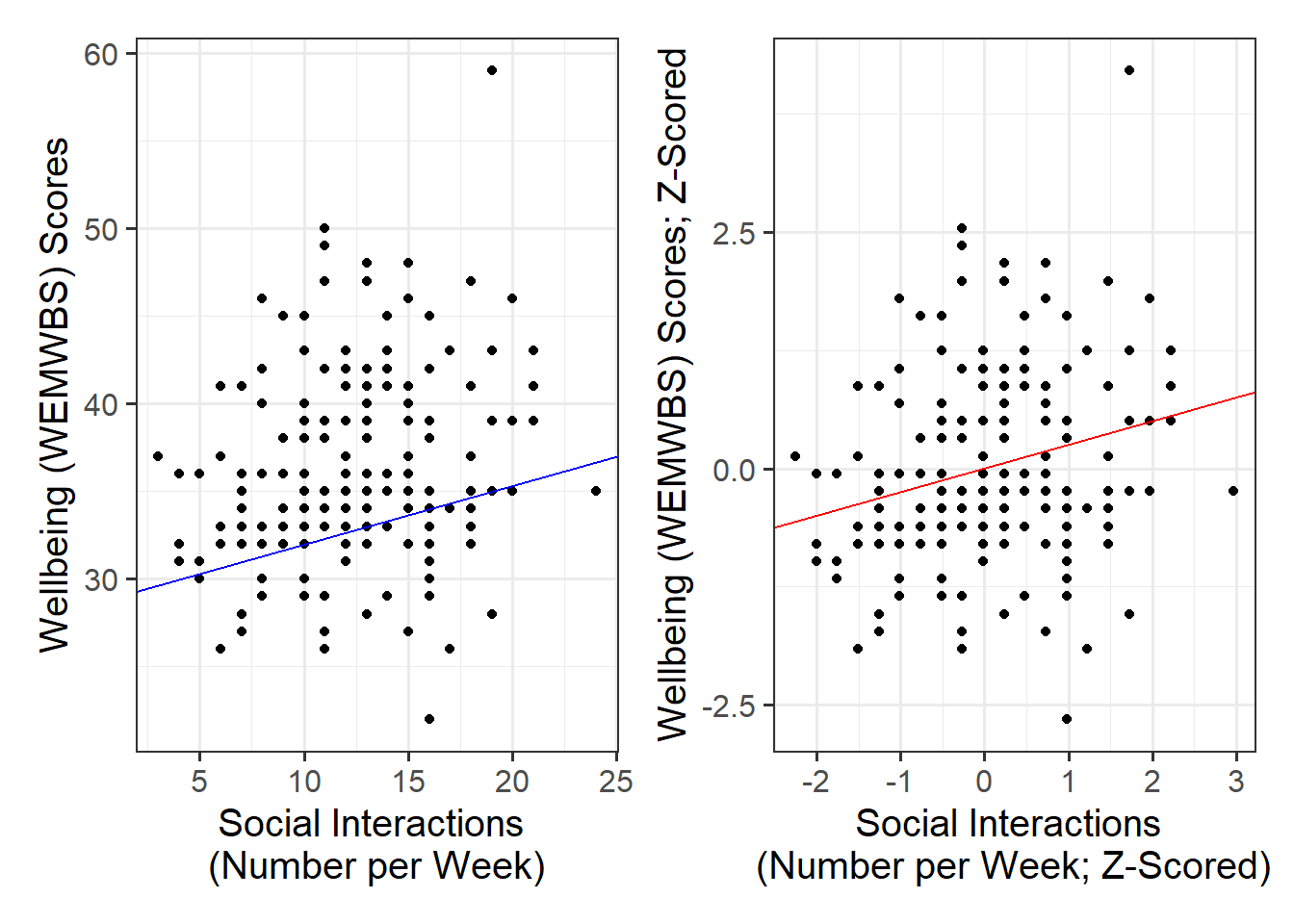

Plot the data and the fitted regression line from both the unstandardized and standardized models. To do so, for each model:

- Extract the estimated regression coefficients e.g., via

betas <- coef(mdl) - Extract the first entry of

betas(i.e., the intercept) viabetas[1] - Extract the second entry of

betas(i.e., the slope) viabetas[2] - Provide the intercept and slope to the function

Note down what you observe from the plots - what is the same / different?

This is very similar to Lab 1 Q7.

Extracting values

The function coef() returns a vector (a sequence of numbers all of the same type). To get the first element of the sequence you append [1], and [2] for the second.

Plotting

In your ggplot(), you will need to specify geom_abline(). This might help get you started:

geom_abline(intercept = intercept, slope = slope)

You may also want to plot these side by side to more easily compare, so consider using | from patchwork. For further ggplot() guidance, see the how to visualise data flashcard.

Writing Up & Presenting Results

Provide key model results from the standardized model in a formatted table.

Use tab_model() from the sjPlot package. For a quick guide, review the tables flashcard.

Since using tab_model(), We need to use ‘mdl_z’ here not ‘mdl_s’ - it won’t work with a model that’s used the scale() function.

Interpret the results from the standardized model the context of the research question.

Make reference to the your regression table.

Remember to inform the reader of the scale of your variables.

Compile Report

Knit your report to PDF, and check over your work. To do so, you should make sure:

- Only the output you want your reader to see is visible (e.g., do you want to hide your code?)

- Check that the tinytex package is installed

- Ensure that the ‘yaml’ (bit at the very top of your document) looks something like this:

---

title: "this is my report title"

author: "B1234506"

date: "07/09/2024"

output: bookdown::pdf_document2

---If you are having issues knitting directly to PDF, try the following:

- Knit to HTML file

- Open your HTML in a web-browser (e.g. Chrome, Firefox)

- Print to PDF (Ctrl+P, then choose to save to PDF)

- Open file to check formatting

To not show the code of an R code chunk, and only show the output, write:

```{r, echo=FALSE}

# code goes here

```To show the code of an R code chunk, but hide the output, write:

```{r, results='hide'}

# code goes here

```To hide both code and output of an R code chunk, write:

```{r, include=FALSE}

# code goes here

```You must make sure you have tinytex installed in R so that you can “Knit” your Rmd document to a PDF file:

install.packages("tinytex")

tinytex::install_tinytex()