| variable | description |

|---|---|

| ID | Participant ID |

| stress1 | acute stress |

| stress2 | chronic stress |

| stress3 | environmental stress |

| stress4 | psychological stress |

| stress5 | physiological stress |

| IQ1 | verbal ability |

| IQ2 | verbal memory |

| IQ3 | inductive reasoning |

| IQ4 | spatial orientiation |

| IQ5 | perceptual speed |

Week 11 Exercises: More SEM!

Prenatal Stress & IQ data

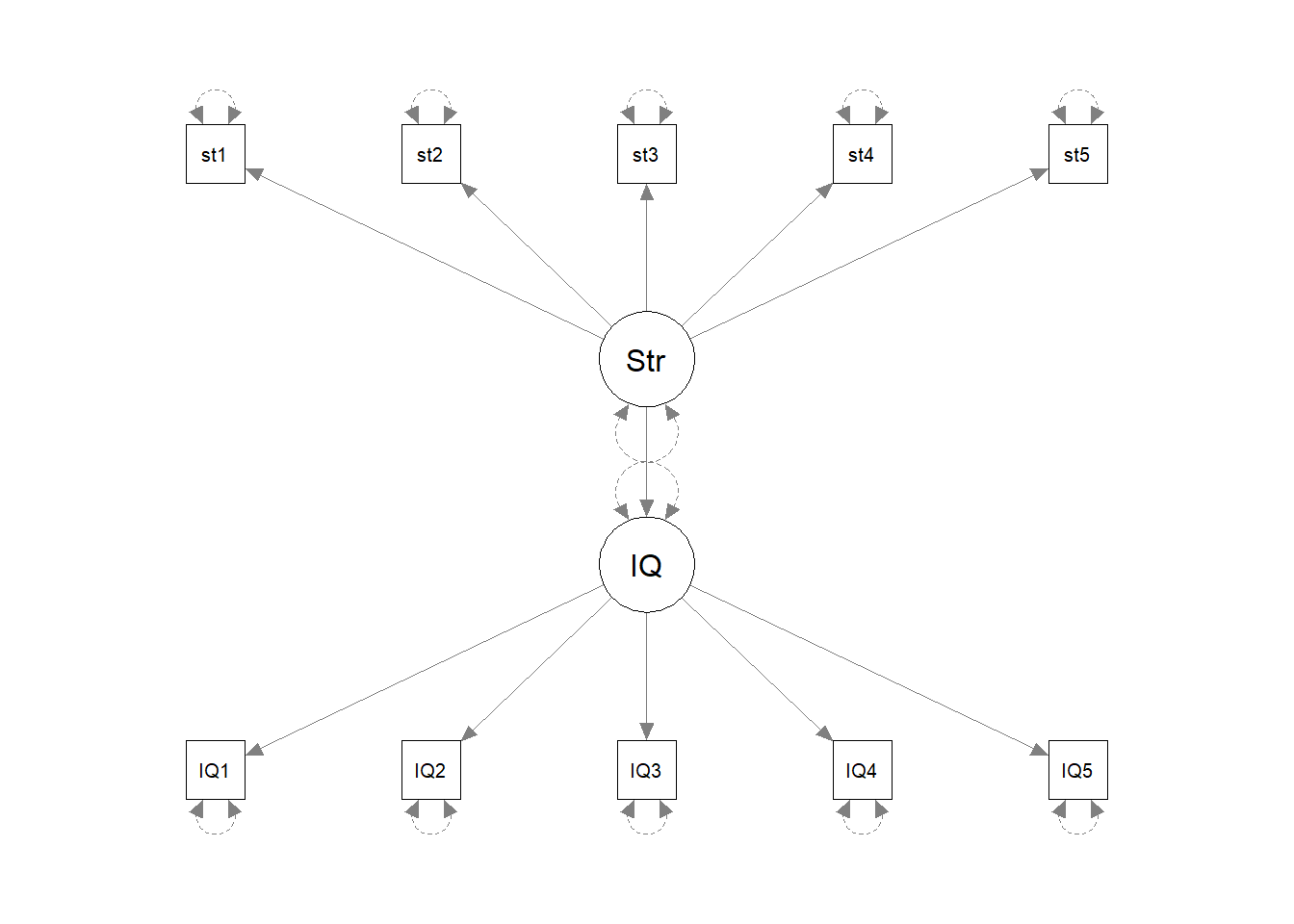

A researcher is interested in the effects of prenatal stress on child cognitive outcomes.

She has a 5-item measure of prenatal stress and a 5 subtest measure of child cognitive ability, collected for 500 mother-infant dyads.

- The data is available as a .csv file here: https://uoepsy.github.io/data/stressIQ.csv

Before we do anything with the data, grab some paper and sketch out the full model that you plan to fit to address the researcher’s question.

Tip: everything you need is in the description of the data. Start by drawing the specific path(s) of interest. Are these between latent variables? If so, add in the paths to the indicators for each latent variable.

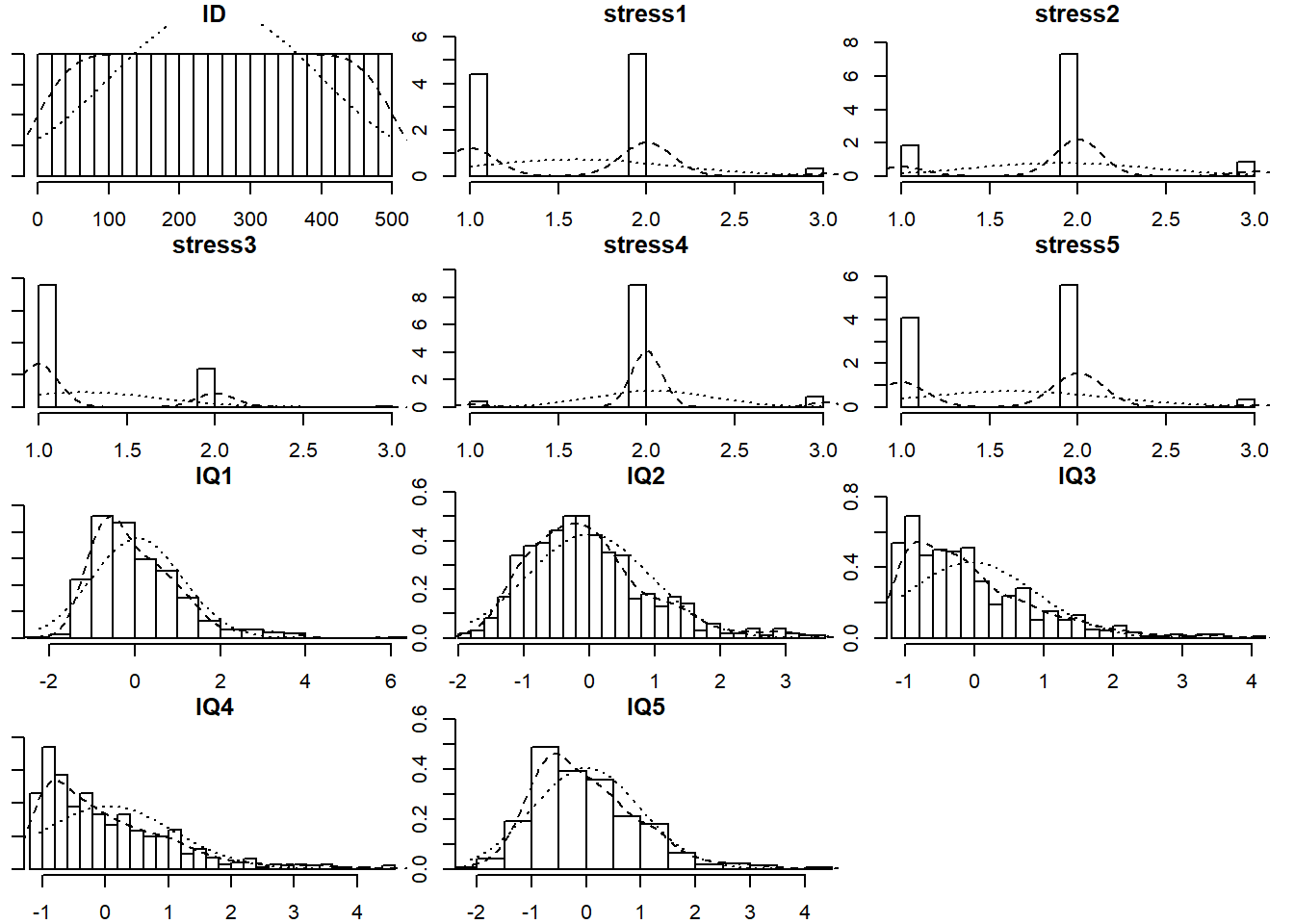

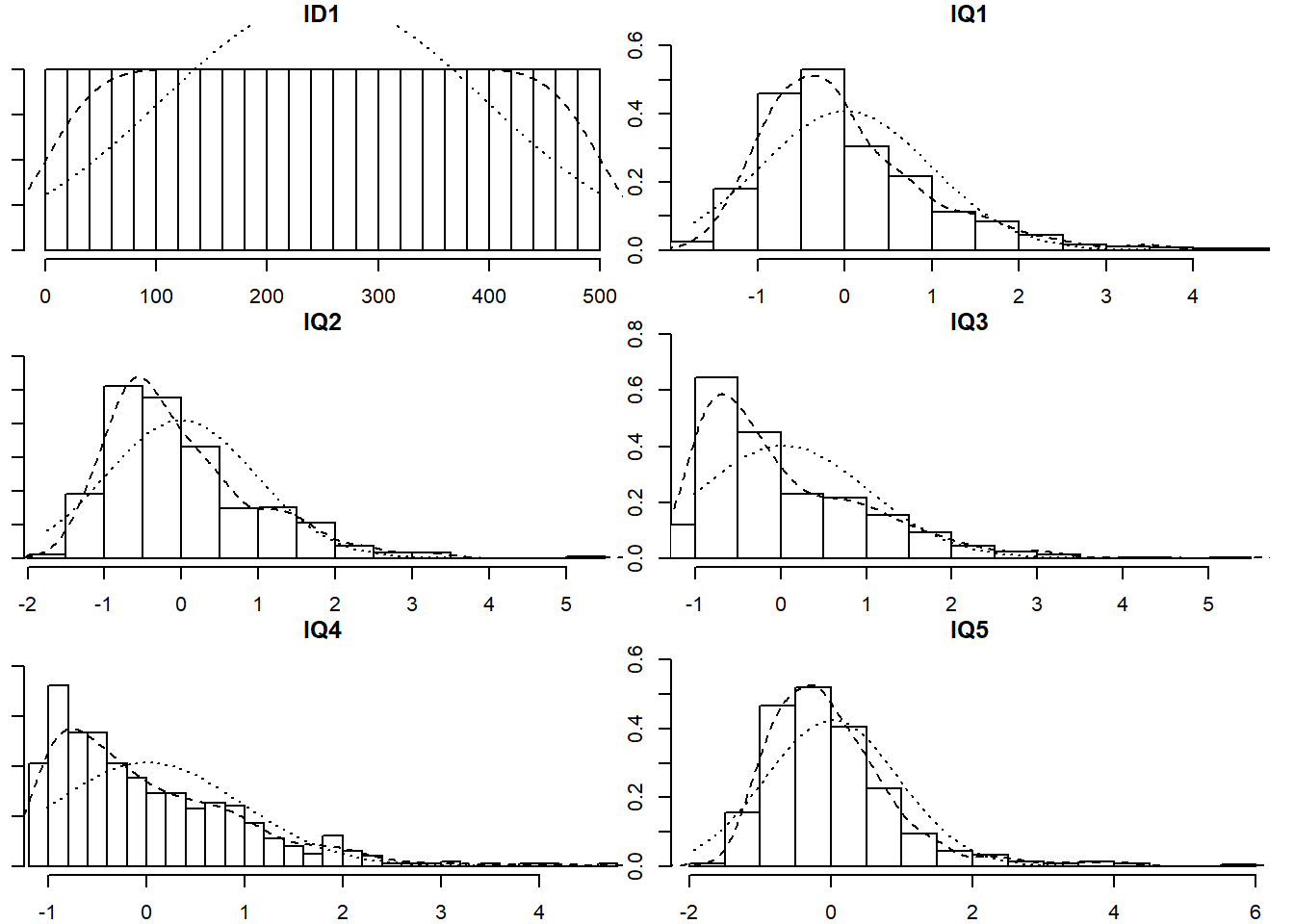

Read in the data and explore it. Look at the individual distributions of each variable to get a sense of univariate normality, as well as the number of response options each item has.

The multi.hist() function from the psych package is pretty useful for getting quick histograms of multiple variables. If necessary, you can set multi.hist(data, global = FALSE) to let each histogram’s x-axis be on a different scale.

Non-normality

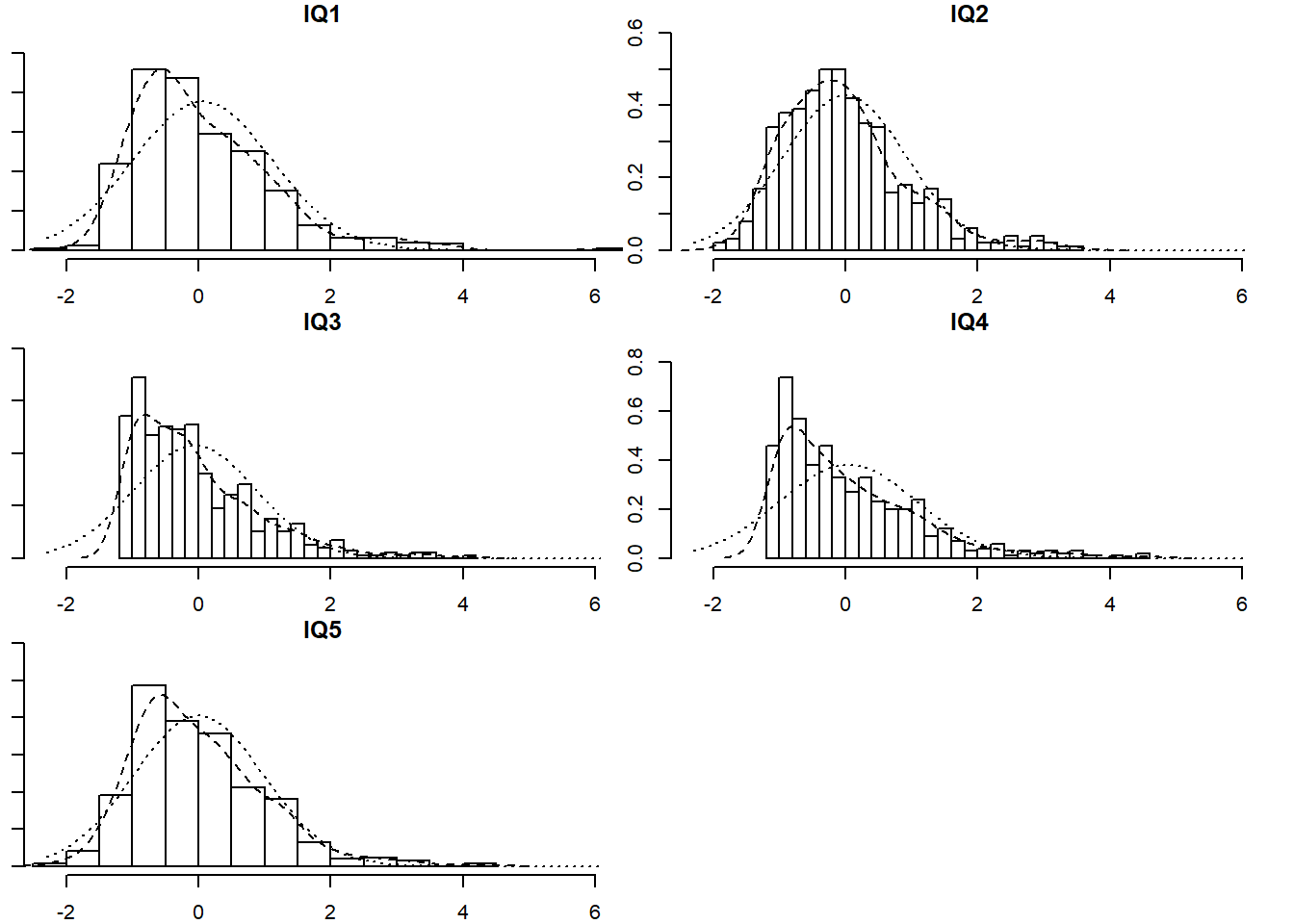

Fit a one factor CFA for the IQ items using an appropriate estimation method.

Be sure to first check their distributions (both numerically and visually). The describe() function from the psych package will give you measures of skew and kurtosis.

One of the key assumptions of the models we have been fitting so far is that our variables are all normally distributed, and are continuous. Why?

Recall how we first introduced the idea of estimating a SEM: comparing the observed covariance matrix with our model implied covariance matrix. This heavily relies on the assumption that our observed covariance matrix provides a good representation of our data - i.e., that \(var(x_i)\) and \(cov(x_i,x_j)\) are adequate representations of the relations between variables. While this is true if we have a “multivariate normal distribution”, things become more difficult when our variables are either not continuous or not normally distributed.

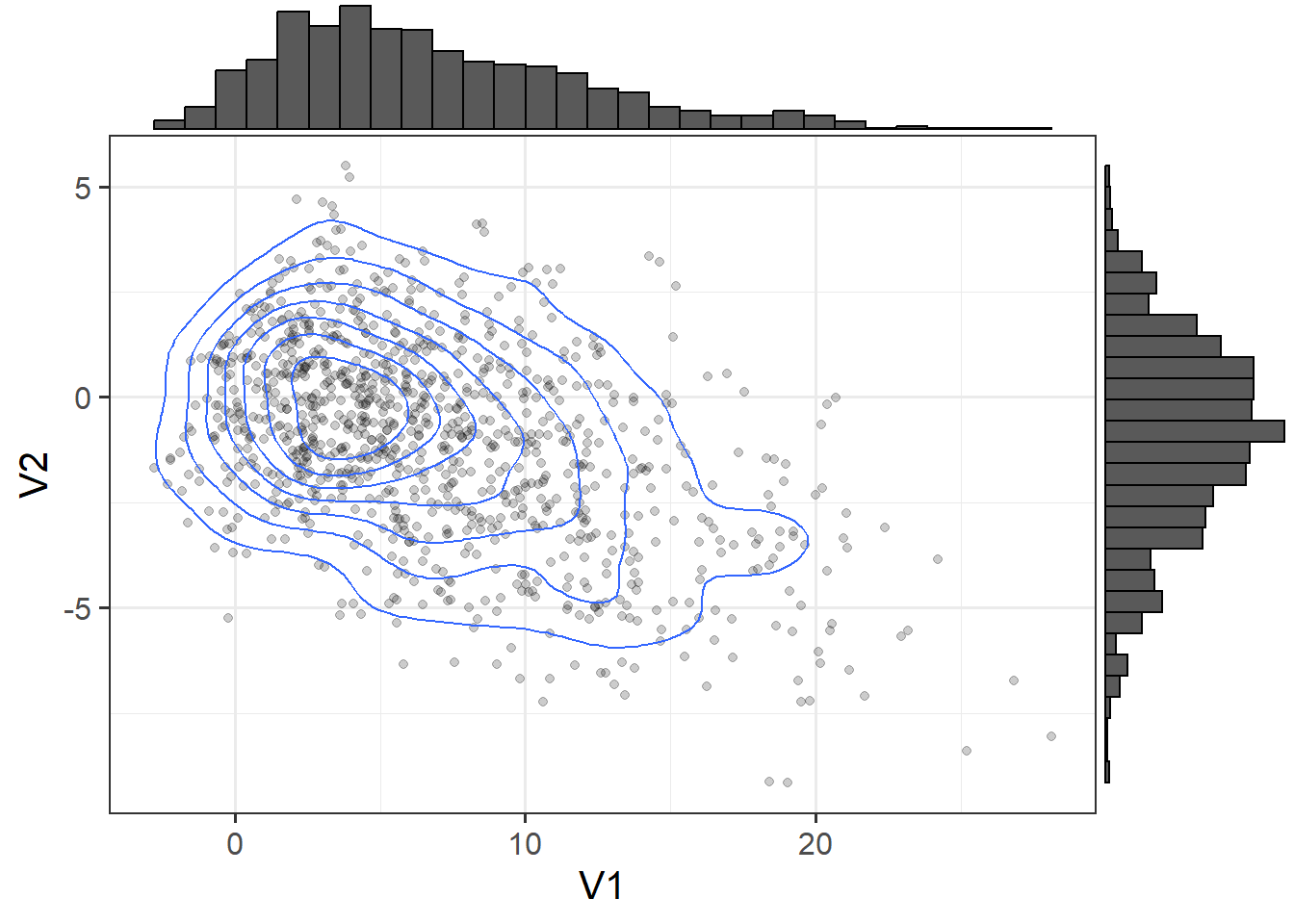

The multivariate normal distribution is fundamentally just the extension of our univariate normal (the bell-shaped curve we are used to seeing) to more dimensions. The condition which needs to be met is that every linear combination of the \(k\) variables has a univariate normal distribution. It is denoted by \(N(\boldsymbol{\mu}, \boldsymbol{\Sigma})\) (note that the bold font indicates that \(\boldsymbol{\mu}\) and \(\boldsymbol{\Sigma}\) are, respectively, a vector of means and a matrix of covariances).1

The idea of the multivariate normal can be a bit difficult to get to grips with in part because there’s not an intuitive way to visualise it once we move beyond 2 or 3 variables.

With 2 variables, you can think of it just like a density plot but in 3 dimensions, as in Figure 1. You can also represent this as a “contour” plot, which is like looking down on Figure 1 from above (just like a map!)

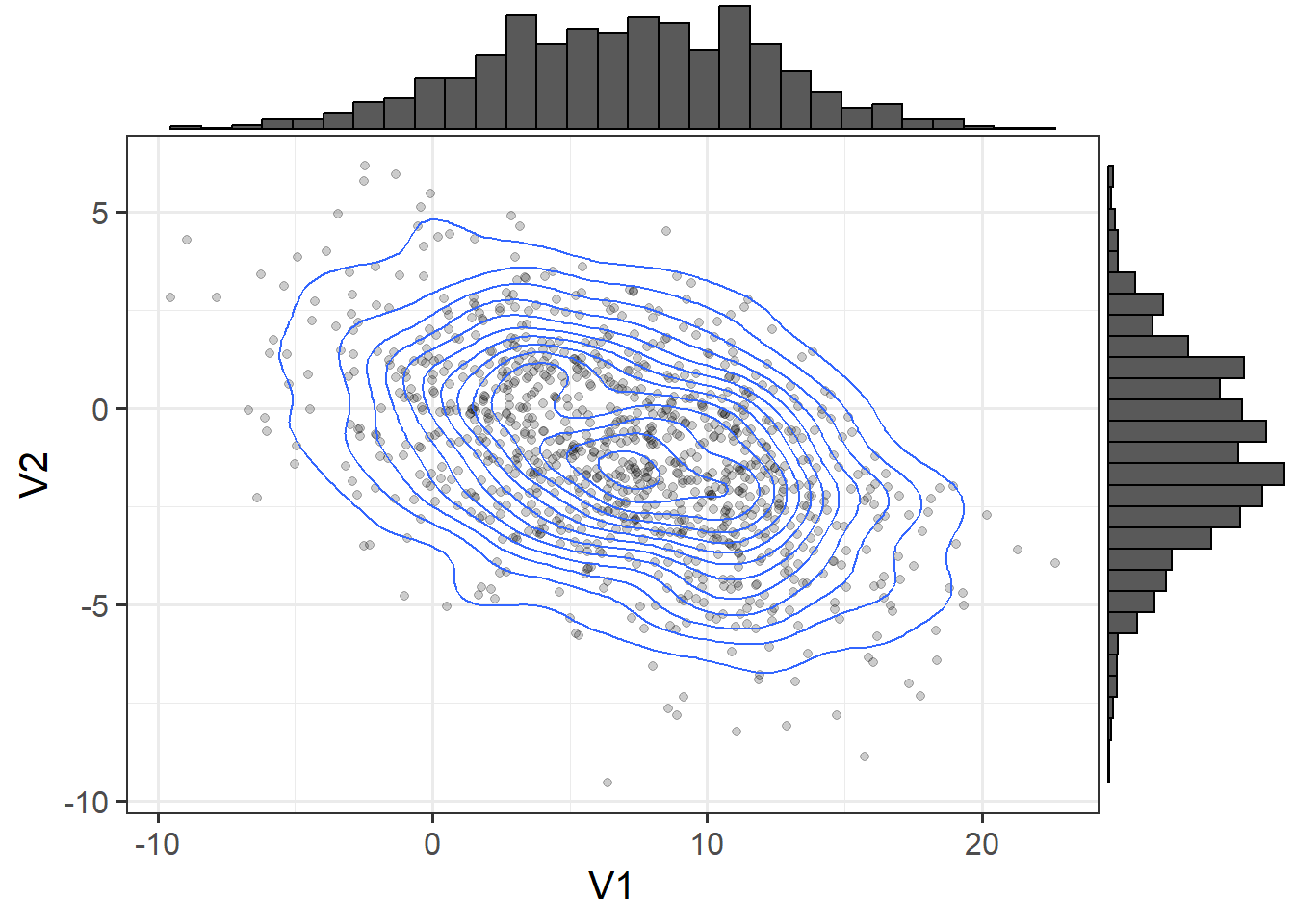

Now let’s look at a situation where we have some skewed variables. Figure 2 shows such a distribution, and Figure 3 shows the contour plot (i.e. it is like Figure 2 viewed from above).

3D

Contour

If we compute our covariance matrix from the 2 skewed variables, we get:

V1 V2

V1 27.822839 -5.770942

V2 -5.770942 5.542513But this fails to capture information about important properties of the data relating to features such as skew (lop-sided-ness) and kurtosis (pointiness). As an example, if we were to simulate data based on this covariance matrix, we get something like Figure 4, which is clearly limited in its reflection of our actual data.

3D

Contour

For determining what constitutes deviations from normality, there are various ways to calculate the skewness and kurtosis of univariate distributions (see Joanes, D.N. and Gill, C.A (1998). Comparing measures of sample skewness and kurtosis if you’re interested). In addition, there are various suggested rules of thumb we can follow. Below are the most common:

Skewness rules of thumb:

- \(|skew| < 0.5\): fairly symmetrical

- \(0.5 < |skew| < 1\): moderately skewed

- \(1 < |skew|\): highly skewed

Kurtosis rule of thumb:

- \(Kurtosis > 3\): Heavier tails than the normal distribution. Possibly problematic

What we can do

In the event of non-normality, we can still use maximum likelihood to find our estimated factor loadings, coefficients and covariances. However, our standard errors (and our \(\chi^2\) model fit) will be biased. We can use a robust maximum likelihood estimator that essentially applies corrections to the standard errors2 and \(\chi^2\) statistic.

There are a few robust estimators in lavaan, but one of the more frequently used ones is “MLR” (maximum likelihood with robust SEs). You can find all the other options at https://lavaan.ugent.be/tutorial/est.html.

We can make use of these with: sem(model, estimator="MLR") or cfa(model, estimator="MLR").

Examine the fit of the CFA model of IQ.

If it doesn’t fit very well, consider checking for areas of local misfit (i.e., check your modindices()), and adjust your model accordingly.

Ordered Categoricals

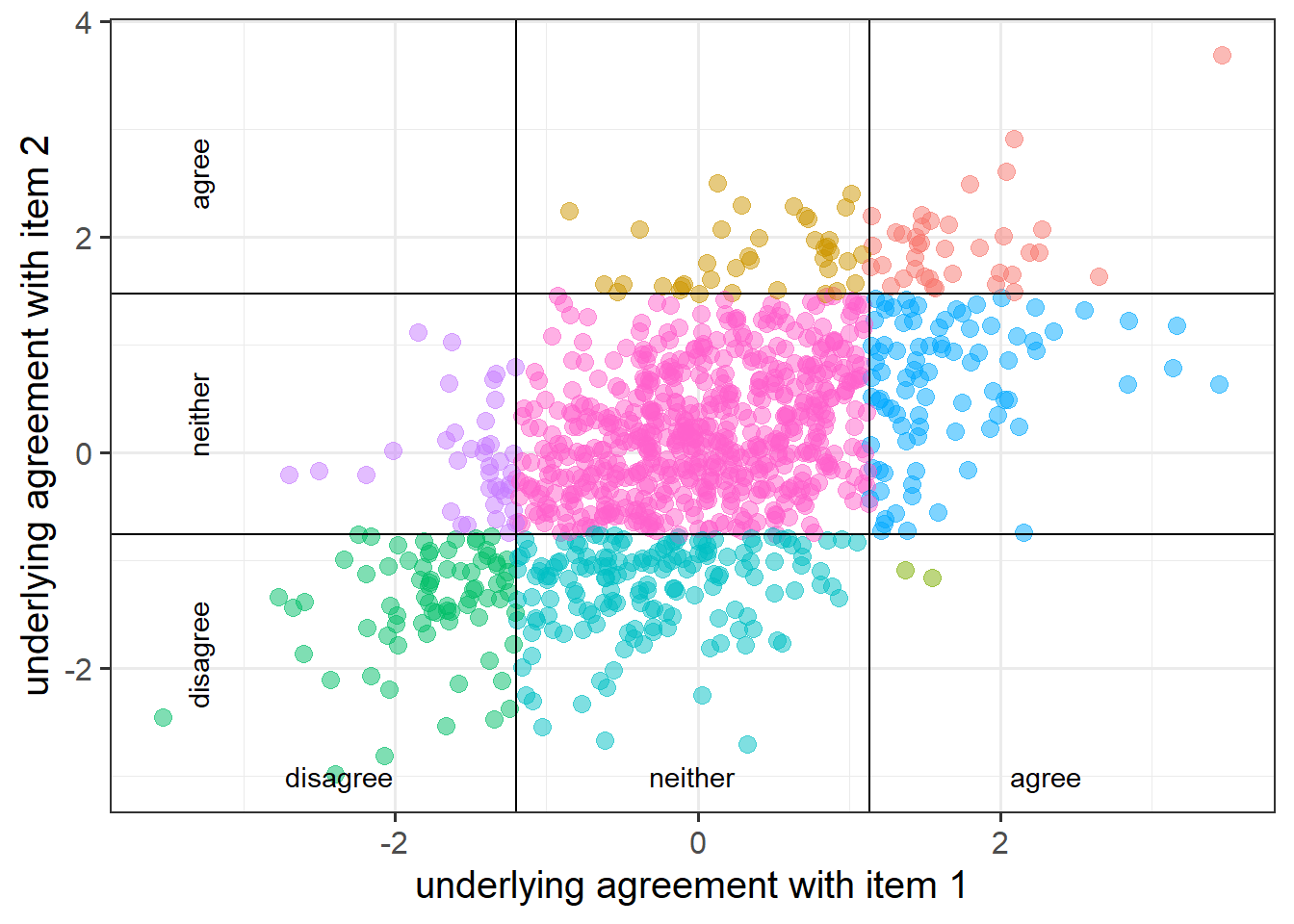

Fit a one-factor confirmatory factor analysis for the latent factor of Stress. Note that the items are measured on a 3-point scale!

Sometimes we can treat ordinal data as if it is continuous. When exactly this is appropriate is a contentious issue - some statisticians might maintain that it is never appropriate to treat ordinal data as continuous. In psychology, much research using SEM centers around questionnaire data, which lends itself to likert data (for instance, “strongly agree”,“agree”,“neither agree nor disagree”,“disagree”,“strongly disagree”). An often used rule of thumb is that likert data with \(\geq 5\) levels can be treated as if they are continuous without unduly influencing results (see Johnson, D.R., & Creech, J.C. (1983). Ordinal measures in multiple indicator models: A simulation study of categorization error).

But what if we don’t have 5+ levels? An important note is that even if your questionnaire included 5 response options for people to choose from, if participants only used 3 of them, then you actually have a variable with just 3 levels.

Estimation techniques exist that allow us to model such variables by considering the set of ordered responses to correspond to portions of an underlying continuum. This involves estimating the ‘thresholds’ of that underlying distribution at which people switch from giving one response to the next.

Rather than working with correlations, this method involves using “polychoric correlations”, which are estimates of the correlation between two theorized normally distributed continuous variables, based on their observed ordinal manifestations.

What we can do

In R, lavaan will automatically switch to a categorical estimator (called “DWLS”3) if we tell it that we have some ordered-categorical variables. We can use the ordered = c("item1name","item2name","item3name", ...) argument.

This is true for both the cfa() and sem() functions.

Inspect your summary model output. Notice that we have a couple of additional things - we have ‘scaled’ and ‘robust’ values for the fit statistics (we have a second column for all the fit indices but using a scaled version of the \(\chi^2\) statistic, and then we also have some extra rows of ‘robust’ measures), and we have the estimated ‘thresholds’ in our output (there are two thresholds per item in this example because we have a three-point response scale). The estimates themselves are not of great interest to us.

Now its time to build the full SEM.

Estimate the effect of prenatal stress on IQ.

Remember: We know that IQ is non-normal, so we would like to use a robust estimator (e.g. MLR). However, as lavaan will tell you if you try using estimator="MLR", this is not supported for ordered data (i.e., the Stress items). It suggests instead using the WLSMV (weighted least square mean and variance adjusted) estimator.

As it happens, the WLSMV estimator is just DWLS with a correction to return robust standard errors. If you specify estimator="WLSMV" then your standard errors will be corrected, but don’t be misled by the fact that the summary here will still say that the estimator is DWLS.

Missingness

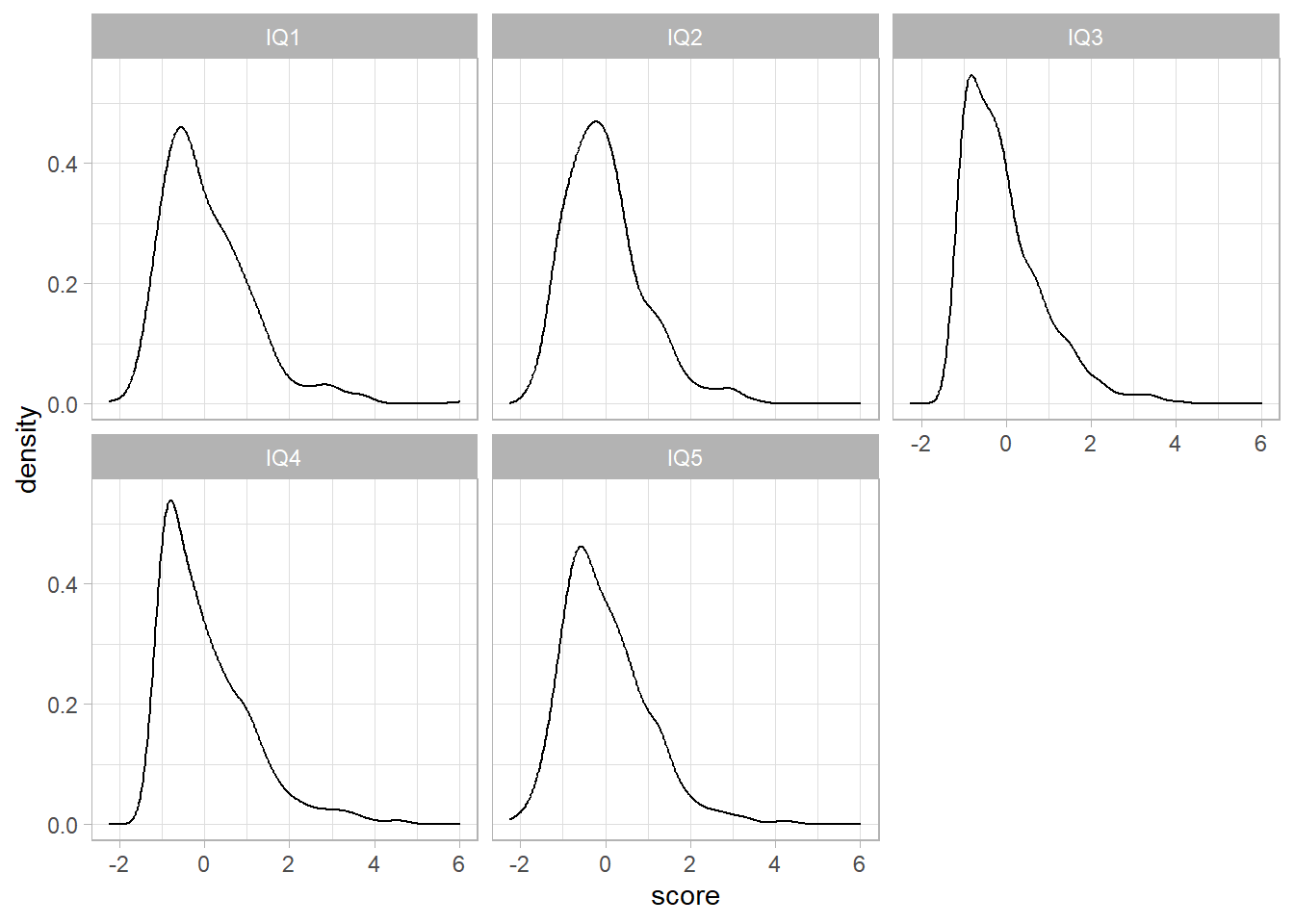

In order to try and replicate the IQ CFA, our researcher collects a new sample of size \(n=500\). However, she has some missing data (specifically, those who scored poorly on earlier tests tended to feel discouraged and chose not to complete further tests).

Read in the new dataset, plot and numerically summarise the univariate distributions of the measured variables, and then conduct a CFA using the new data, taking account of the missingness (don’t forget to also use an appropriate estimator to account for any non-normality). Does the model fit well?

- The data can be found at https://uoepsy.github.io/data/IQdatam.csv, and is in .csv format.

It is very common to have missing data. Participants may stop halfway through the study, may decline to be followed up (if it is longitudinal) or may simply decline to answer certain sections. In addition, missing data can occur for all sorts of technical reasons (e.g, website crash and auto-submit a questionnaire, etc.).

It is important to understand the possible reasons for missing data in order to appropriately consider what data you do have. If missing data are missing completely random, then the data you do have should still be representative of the population. But suppose you are studying cognitive decline in an aging population, and people who are suffering from cognitive impairment are less likely to attend the follow-up assessments. Is this missingness random? No. Does it affect how you should consider your available data to represent the population of interest? Yes.

There are three main reasons that we certain data may be missing, and the acronyms are a nightmare:4

MCAR: Missing Completely At Random. Data which are MCAR are missing data for which the propensity for missingness is completely independent of any observed or unobserved variables. It is truly random if the data are missing or not.

MAR: Missing At Random. Data which are MAR are missing data for which the propensity for missingness is not random, but it can be fully explained by some variables for which there is complete data. In other words, there is a systematic relationship between missing values and observed values. For example, people who are unemployed at time of assessment will likely not respond to questions on work-life satisfaction. Missing values on work-life satisfaction is unrelated to the levels of work-life satisfaction, but related to their employment status.

MNAR: Missing Not At Random. Data which are MNAR are missing data for which the propensity for missingness is related to the value which is missing. For example, suppose an employer tells employees that there are a limited number of bonuses to hand out, and then employees are asked to fill out a questionnaire. Thos who are less satisfied with their job may not respond to questions on job satisfaction (if they believe it will negatively impact their chances of receiving a bonus).

What we can do

In SEM, there is an extremely useful method known as full information maximum likelihood (FIML) which, allows us to use all our available data (including those that have only some values present).

FIML separates data into the different patterns of missingness (i.e. those that are missing on IQ1, those missing on IQ1 and IQ2, those missing on just IQ2, and so on). The likelihood of each group can be estimated from the parameters that are available for that pattern. The estimation process then finds the set of parameters that maximise the likelihood of the full dataset (we can sum up the loglikelihoods of the datsets with each missingness pattern). This way it utilises all observed variables (hence “full information”) to estimate the model parameters.

A downside, one could argue, is that you may have specific theoretical considerations about which observed variables should weigh in on estimating missingness in variable \(x\) (rather than all variables), in which case imputation techniques may be preferable. FIML only provides unbiased estimates if the data are MAR (i.e. if the missingness depends on other variables present in the data).

In lavaan, we can make use of full information maximum likelihood by using either missing = "FIML" or missing = "ML" (they’re the same) in the functions cfa() and sem().

Thus far in our analyses, we have been simply excluding any row for which there is missingness. This is typically known as “listwise deletion”, and is the default for many modelling approaches. Any person who is missing some data on a variable in our model will simply not be included. This method assumes that the data are “Missing Completely At Random”, because we make the assumption that studying only the people who have complete data does not bias our results in any way.

Other traditional approaches are to use basic imputation methods such as “mean/median imputation” (replace missing values with the average of that variable). These can be okay, but they assume that the imputed values are exactly what we would have observed had it not been missing, rather than estimates. The lack of variability around this imputed value will shrink the standard errors because it is assumed that no deviations exist among the substituted values. Another option is “regression imputation”, where we replace missing values with the predicted values from a regression model of the variable with missingness onto some relevant predictors. This is better because our estimates of the values of missing observations could be much better, but we still make an assumption that these estimates are perfect, when we know they are just estimates.

A more sophisticated approach to this is “Multiple Imputation” (MI). Multiple imputation is similar to the regression imputation approach described above in that it predicts the value of missing observations based on the covariates, but instead of predicting just one value, it predicts many. Rather than saying “the predicted value for this missing observation is 5”, we say “the predicted value for this missing observation is from the distribution \(\sim N(5,2)\)”. So we simulate \(m\) draws from that distribution, and we end up with \(m\) predicted values. We make \(m\) copies of our data and replace the missing observations with each of our \(m\) predicted values. We then do our analysis on each \(m\) imputed dataset, and pool the results!

Note that the summary of the model output when we used FIML also told us that there are 3 patterns of missingness.

Can you find out a) what the patterns are, and b) how many people are in each pattern?

is.na() will help a lot here, as will distinct() and count().

Extras: Equivalent models

Returning to our full SEM, adjust your model so that instead of IQ ~ Stress we are fitting Stress ~ IQ (i.e. child cognitive ability \(\rightarrow\) prenatal stress).

Does this model fit better or worse than our first one?

Extras: Simulating SEM data

An extremely useful approach to learning both R and statistics is to create yourself some fake data on which you can try things out. Because you create the data, you can control any relationships, group differences etc. In doing so, you essentially make yourself a target to aim for.

Many of you will currently be in the process of collecting/acquiring data for your thesis. If you are yet to obtain your data, we strongly recommend that you start to simulate some data with the expected distributions in order to play around and test how your analyses works, and how to interpret the results.

I estimate that I generate fake data at least a couple of times every week just to help me work out things I don’t understand. It also enables for easy sharing of reproducible chunks of code which can perfectly replicate issues and help discussions.

While structural equation models are a fairly complex method, simulating data from a pre-defined SEM is actually pretty straightforward. We just specify a population model (i.e. giving it some values of each parameter) and then sample from it.

For example:

popmod <- "

biscuits =~ .7*oreo + .6*digestive + .7*custardcream +

.6*jammydodger + .8*bourbon + .3*jaffacake

"

newdata <- simulateData(popmod, sample.nobs = 300)

head(newdata) oreo digestive custardcream jammydodger bourbon jaffacake

1 0.06992848 -1.96401881 -0.6930186 0.9031730 -3.7925025 0.24151604

2 1.14049039 0.08674750 1.3059567 0.6697633 -1.2861885 1.07859713

3 1.52744333 0.97322962 -0.1676939 0.8226969 0.9266996 -2.55767075

4 -0.08882437 0.19439799 1.1692537 0.2738211 3.1872644 0.01498641

5 -0.18472543 -0.06220265 0.7107427 0.9779674 1.1212930 -0.87409201

6 -0.68054395 -1.80212884 0.4449138 -2.0590937 0.8689626 -2.29479125- Do a little bit of research and find a paper that fits a structural equation model. Simulate some data from it.

- Save the dataset (

write_csv()will help), and share it with someone else, along with a description of the theoretical model. Can they estimate a model that fits well?

Extras: Extensions of SEM

We have really only scraped the surface of the different things we can do with SEM. If you are interested in taking you learning further, then some of the next things to start looking into:

- Multigroup analysis (testing the model across two or more populations)

- Jöreskog, K. G. (1971). Simultaneous factor analysis in several populations. Psychometrika, 36(4), 409-426.

- Sorbom, D. (1974). A general method for studying differences in factor means and factor structures between groups. British Journal of Mathematical and Statistical Psychology, 27, 229-239.

- Jöreskog, K. G. (1971). Simultaneous factor analysis in several populations. Psychometrika, 36(4), 409-426.

- Latent Growth Curves (actually just the same as a multilevel model!! 🤯 )

- Michael Clark has a great lot of resources on this, and it makes the link between random effects and latent variables super clear.

Footnotes

We’ve actually seen this previously in multilevel modelling, when we had random intercepts, random slopes, and the covariance between the two!↩︎

These are similar corrections to ones that we apply in a regression world, see LM Troubleshooting↩︎

Diagonally Weighted Least Squares↩︎

To me, these are some of the most confusing terms in statistics, because “at random” is used to mean “not completely random”!?? It might be easier to think of “missing at random” as “missing conditionally at random”, but then it gives the same acronym as “completely at random”!↩︎