Bootstrap for regression

When our research hypothesis involves a population slope, for example, we wish to test whether in a model such as \[ y = \beta_0 + \beta_1 x + \epsilon \] the slope coefficient is significantly different from 0 or not.

However, the population slope is an example of a parameter, a numerical summary of the population. Typically, we do not have data for the entire population, so we can only compute a numerical summary for a sample, called a statistic. We use the statistic \(\widehat \beta_1\) (= estimated regression slope) to estimate the unknown population parameter \(\beta_1\) (= true regression slope).

In the past weeks we have used the results from the t-test and corresponding p-values returned by the summary() function. However, such test results are valid only when the regression assumptions are satisfied.

For example, recall the Riverview data from Semester 1:

library(tidyverse)

riverview <- read_csv(file = "https://uoepsy.github.io/data/riverview.csv")

mdl <- lm(income ~ 1 + education, data = riverview)

summary(mdl)##

## Call:

## lm(formula = income ~ 1 + education, data = riverview)

##

## Residuals:

## Min 1Q Median 3Q Max

## -15.809 -5.783 2.088 5.127 18.379

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 11.3214 6.1232 1.849 0.0743 .

## education 2.6513 0.3696 7.173 5.56e-08 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 8.978 on 30 degrees of freedom

## Multiple R-squared: 0.6317, Adjusted R-squared: 0.6194

## F-statistic: 51.45 on 1 and 30 DF, p-value: 5.562e-08Another key question we were able to answer by looking at the summary() output, was “How accurate can we expect the estimates to be?” This was given by the standard error (SE in short), reported in the Std. Error column. The SE tells us the size of a typical “estimation error,” i.e. a typical distance between an estimate for a given sample and the true but unknown population value.

Clearly, now you might wonder, what should we do then if there is no way to satisfy the regression assumptions by transforming variables?

Here’s the answer: bootstrap!

This week we examine bootstrapping, which is another technique for drawing inferences to the population from a regression model. This method is assumption-free and does not rely on conditions such as normality of the residuals.

Before continuing, this table summarises the new terms that will be explained throughout the exercises.

Bootstrap Terminology

- A parameter is a numerical summary for the population, e.g. the population slope \(\beta_1\)

- A statistic is a numerical summary calculated from the sample data, e.g. the estimated slope in the sample \(\widehat \beta_1\)

- A bootstrap sample is chosen with replacement from an existing sample, using the same sample size.

- A bootstrap statistic is a statistic computed for each bootstrap sample.

- A bootstrap distribution collects bootstrap statistics for many bootstrap samples.

Age, Knowledge and Attitudes About Science

This week’s lab explores whether attitudes towards science and faith are related to knowledge about science and to age, using a subset of data from the 2005 Eurobarometer 63.1 survey.

The research question guiding this example is:

Is age and knowledge about science associated with attitudes towards science and faith?

We can also state this in the form of two research hypotheses:

- There is a significant linear relationship between people’s age and their attitudes to science and faith after accounting for their scientific knowledge.

- There is a significant linear relationship between people’s scientific knowledge and their attitudes to science and faith after accounting for their age.

The Data

Download the data here: https://uoepsy.github.io/data/science-faith-attitude.csv

This example uses three variables from the Eurobarometer 63.1 survey:

| Variable | Description |

|---|---|

kstot

|

Score on a science “quiz” composed of 13 true/false items. |

toomuchscience

|

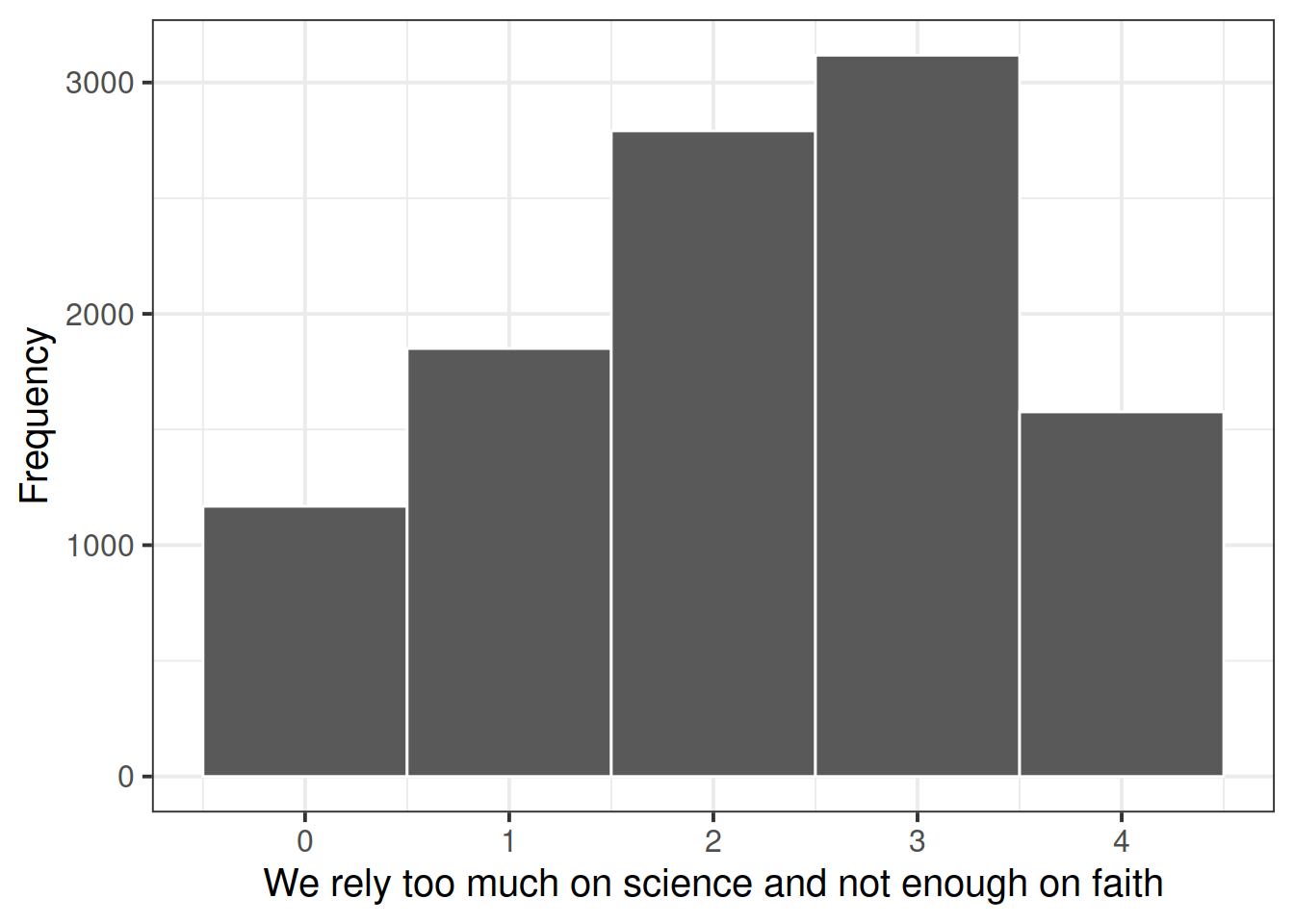

Attitude to science and faith (question wording: “We rely too much on science and not enough on faith”; responses on a 5-point scale from strongly disagree to strongly agree). |

age

|

Age measured in years. |

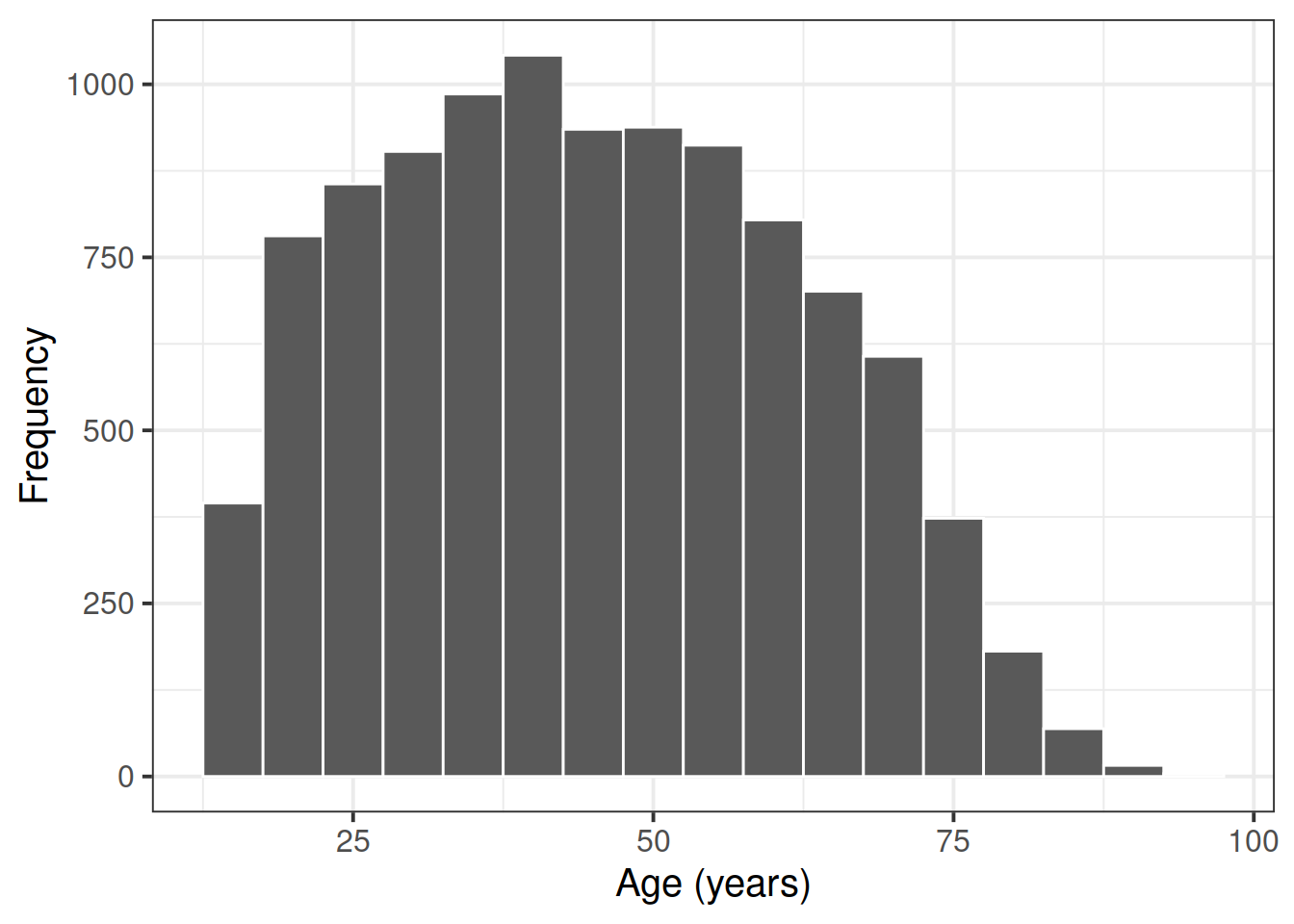

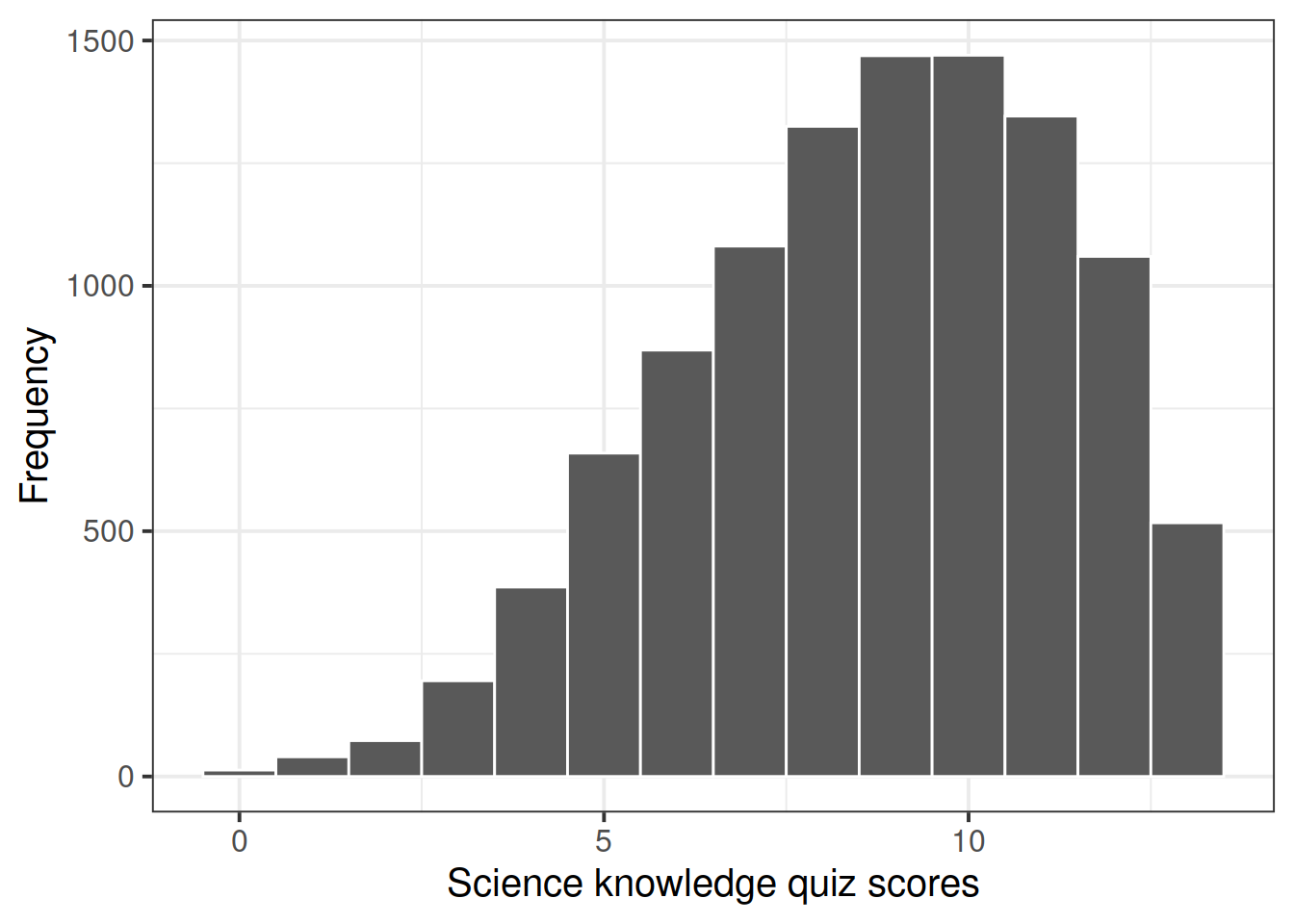

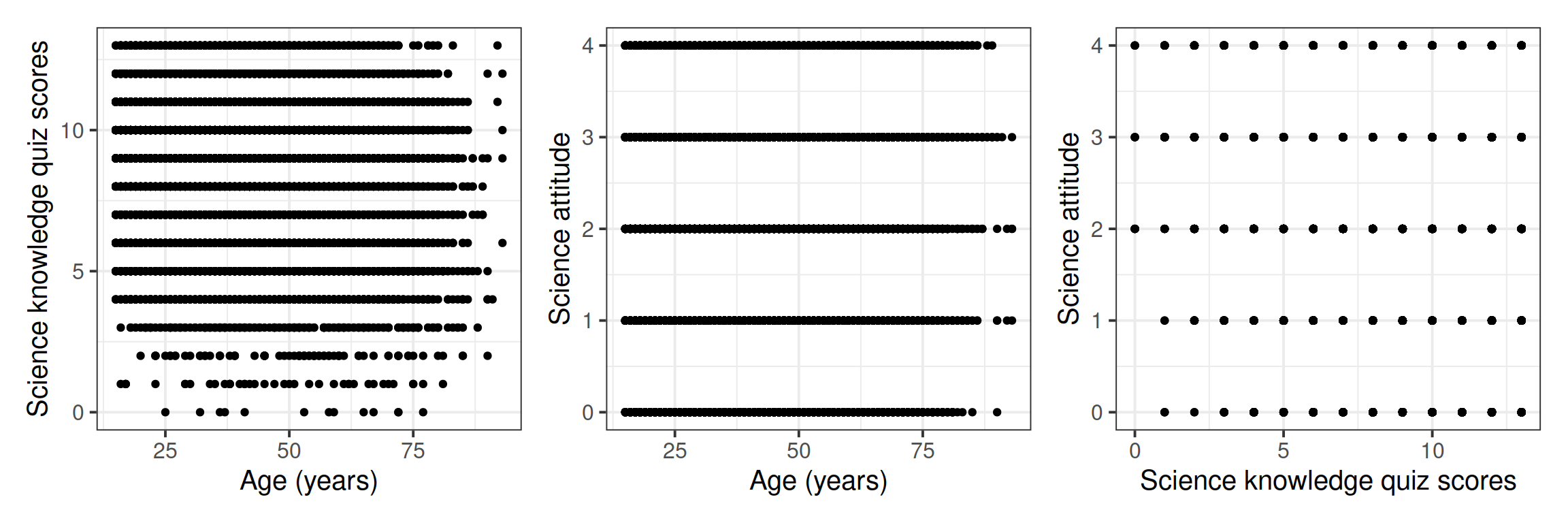

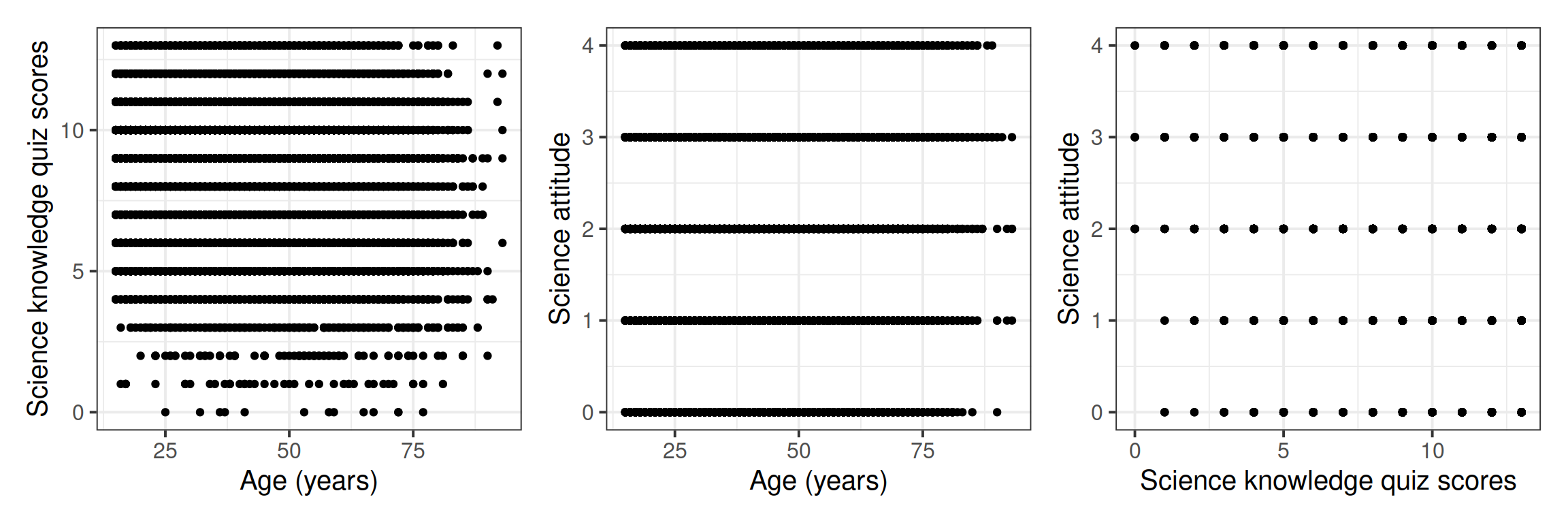

The science knowledge quiz has a range of 0 to 13. Its mean is about 8.7. The attitude to science and faith question has five categories, ranging from 0 to 4, with a mean of about 2.5. Age has a range of 15–93, with a mean of about 45. This is formally an ordinal variable but, in line with common practice in applied research, we regard it as continuous, as we do the other two variables as well.

Read the data into R, and call the data

ebsurvey. Remember, you can download the data here: https://uoepsy.github.io/data/science-faith-attitude.csvHow many observations and how many variables are there?

Today we will be only using the

kstot,age, andtoomuchsciencecolumns. Subset the data to only have those 3 columns.Is there any missing values? If yes, remove the rows with missing values.

Give the variables more meaningful names. Rename kstot to science_knowledge and rename toomuchscience to attitude.

Hint: have a look at the help page of the function rename.

Before producing multiple regression models, it is a good idea to look at each variable separately.

Explore the distribution of age in years, age.

Explore the distribution of science knowledge quiz scores, science_knowledge.

Explore the distribution of attitude to science and faith scores, attitude.

Visualise the pairwise relationships between your variables and explore the possible correlations.

Now that we have explored each variable by itself, we can estimate the multiple regression model.

\[ \texttt{attitude}_i = \beta_0 + \beta_1 \ \texttt{science_knowledge}_i + \beta_2 + \texttt{age}_i + \epsilon_i \]

Regression results are often presented in a table that reports the coefficient estimates, their estimated standard errors, t-scores, and levels of statistical significance.

- Fit the model specified above using R

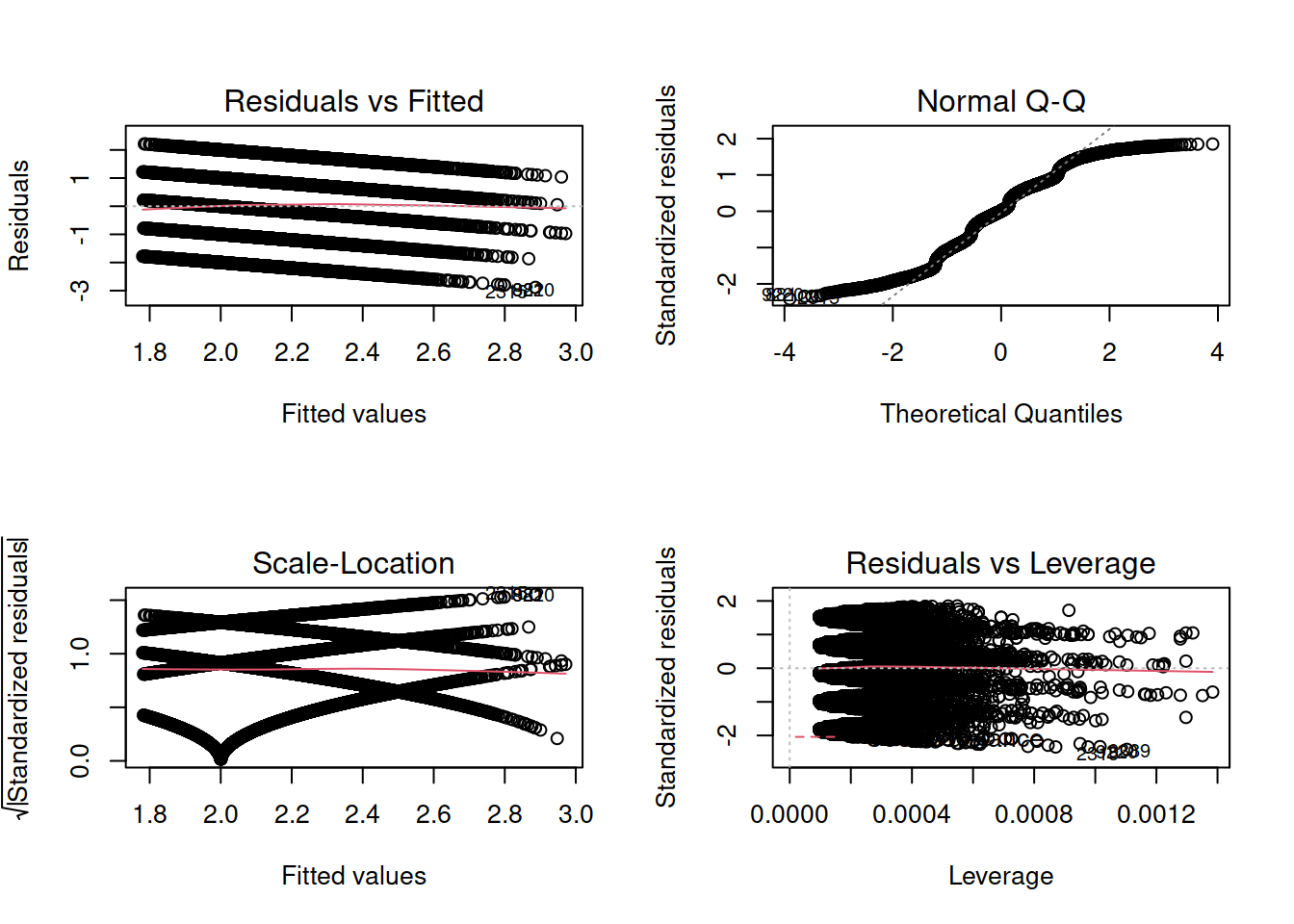

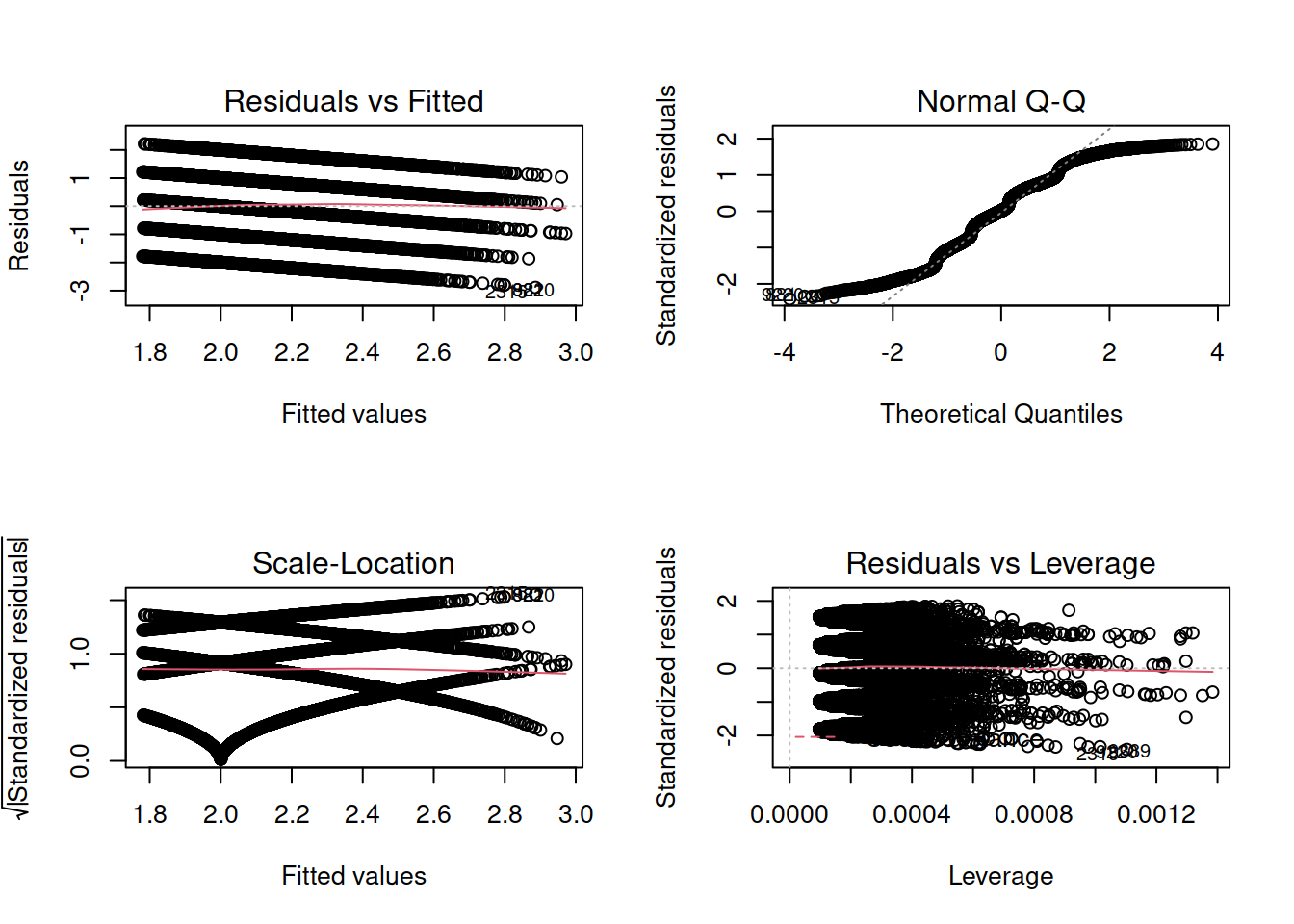

- Check for violations of the model assumptions.

If you are doubting that the model assumptions are satisfied, don’t throw your PC up in the air, but rather keep reading!

The Bootstrap

The bootstrap is a general approach to assessing whether the sample results are statistically significant or not, which does not rely on specific distributional assumptions such as normality of the errors. It is based on sampling repeatedly with replacement (to avoid always getting the original sample exactly) from the data at hand, and then computing the regression coefficients from each resample. We will equivalently use the word bootstrap sample or resample (for SAMPLE with REplacement).

The basic principle is:

The population is to the original sample

as

the original sample is to the bootstrap samples.

Because we only have one sample of size \(n\), and we do not have access to the data for the entire population, we consider our original sample as our best approximation to the population. To be more precise, we assume that the population is made up of many, many copies of our original sample. Then, we take multiple samples each of size \(n\) from this assumed population. This is equivalent to sampling with replacement from the original sample.

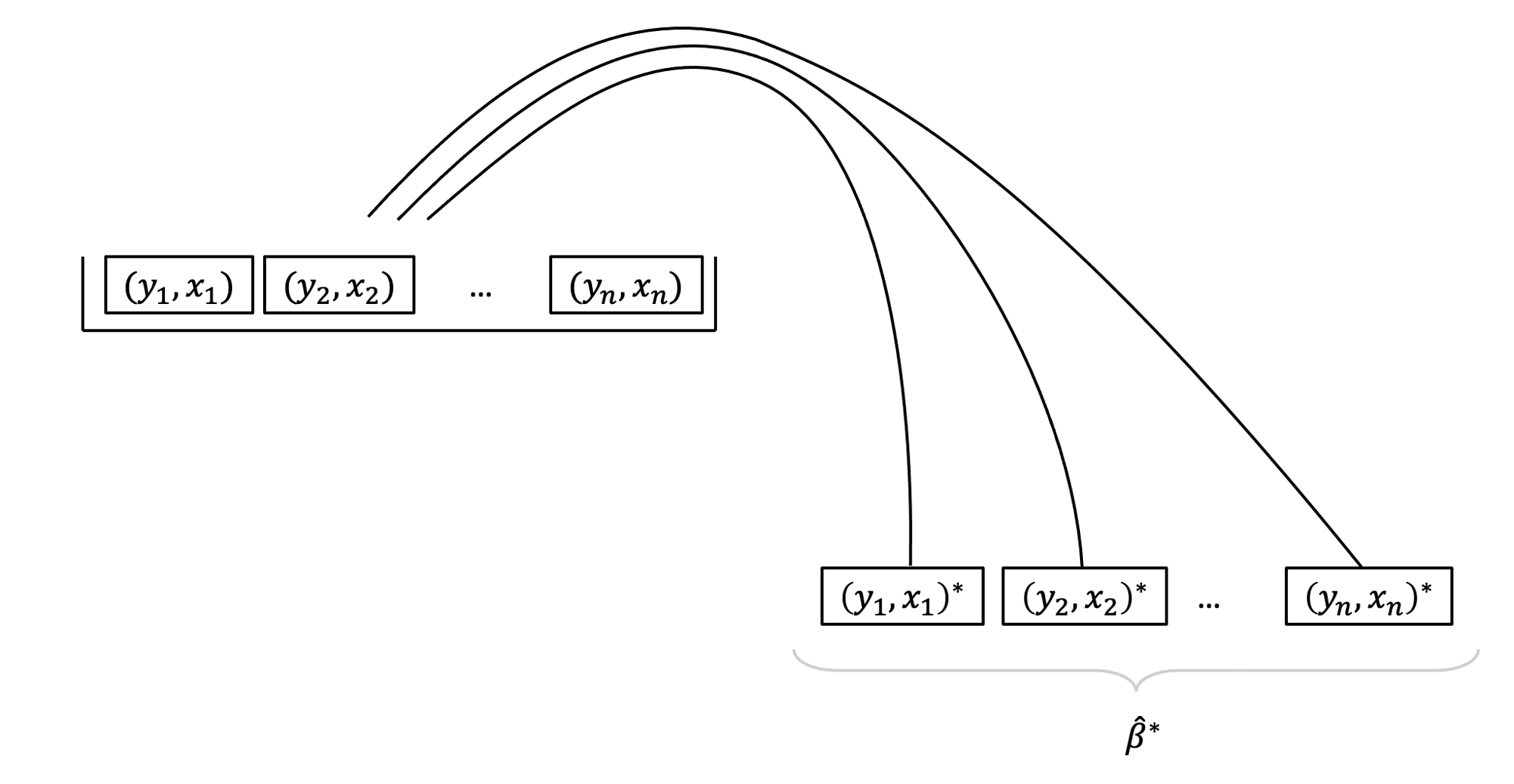

We will explain, without loss of generality, the bootstrap for regression in the simple case where our sample data consist of measurements on a response \(y\) and predictor \(x\) for a sample of \(n\) individuals (or units):

\[ \begin{matrix} \text{Individual, }i & \text{Response, }y & \text{Predictor, }x \\ 1 & y_1 & x_1 \\ 2 & y_2 & x_2 \\ \vdots & \vdots & \vdots\\ n & y_n & x_n \\ \end{matrix} \]

We can write this in compact form by saying that we have sample data comprising \(n\) pairs of (response, predictor) data. This is our original sample: \[ (y_1, x_1), (y_2, x_2), \dots, (y_n, x_n) \]

For the original sample, we can obtain estimated intercept and slope: \[ \begin{aligned} \widehat \beta_0 &= \text{estimated intercept for original sample } (y_1, x_1), (y_2, x_2), \dots, (y_n, x_n)\\ \widehat \beta_1 &= \text{estimated slope for original sample } (y_1, x_1), (y_2, x_2), \dots, (y_n, x_n) \end{aligned} \]

To obtain one bootstrap sample from the original sample, sample \(n\) pairs with replacement from the original sample. Denote the new \(n\) pairs with an asterisk. Note that we can have repetitions of pairs from the original sample: \[ (y_1, x_1)^*, (y_2, x_2)^*, \dots, (y_n, x_n)^* \]

Then, we can fit the linear model to the data in the bootstrap sample, and compute the regression coefficients in the bootstrap sample, \(\widehat \beta_0^*\) and \(\widehat \beta_1^*\). These two regression coefficients are examples of bootstrap statistics. We call bootstrap statistic any numerical summary of the bootstrap sample, in the same way that a statistic is a numerical summary of a sample.

\[ \begin{aligned} \widehat \beta_0^* &= \text{estimated intercept for resample } (y_1, x_1)^*, (y_2, x_2)^*, \dots, (y_n, x_n)^*\\ \widehat \beta_1^* &= \text{estimated slope for resample } (y_1, x_1)^*, (y_2, x_2)^*, \dots, (y_n, x_n)^* \end{aligned} \]

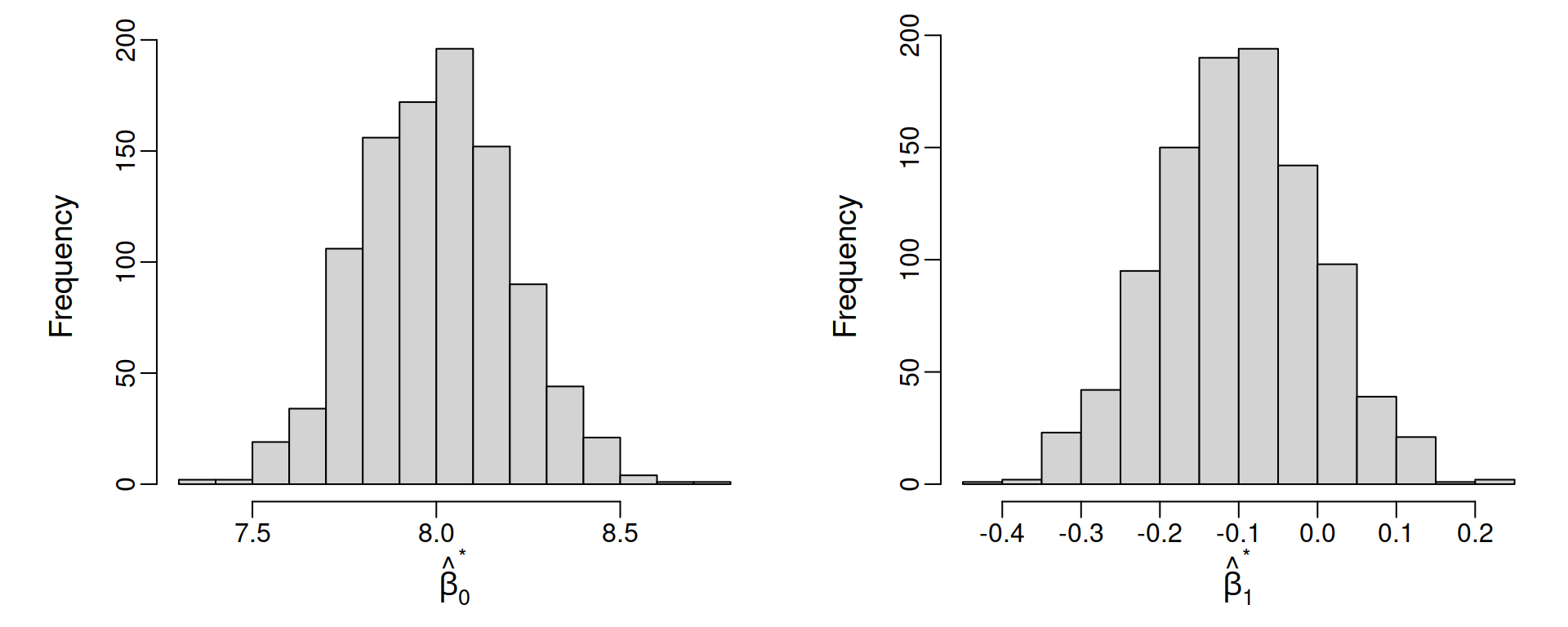

Now, imagine doing this many times. That is, taking many bootstrap samples (say \(R = 1,000\)), each of size \(n\) individuals, and computing the regression intercept and slope for each bootstrap sample.

You will obtain \(R\) bootstrap intercepts and \(R\) bootstrap slopes. Denote by \(\widehat \beta_{0}^{(5)}\) the bootstrap intercept in the 5th bootstrap sample. Similarly, \(\widehat \beta_{1}^{(5)}\) is the bootstrap slope in the 5th bootstrap sample.

\[

\widehat \beta_{0}^{(1)}, \

\widehat \beta_{0}^{(2)}, \dots, \

\widehat \beta_{0}^{(R)} \\

\widehat \beta_{1}^{(1)}, \

\widehat \beta_{1}^{(2)}, \dots, \

\widehat \beta_{1}^{(R)}

\]

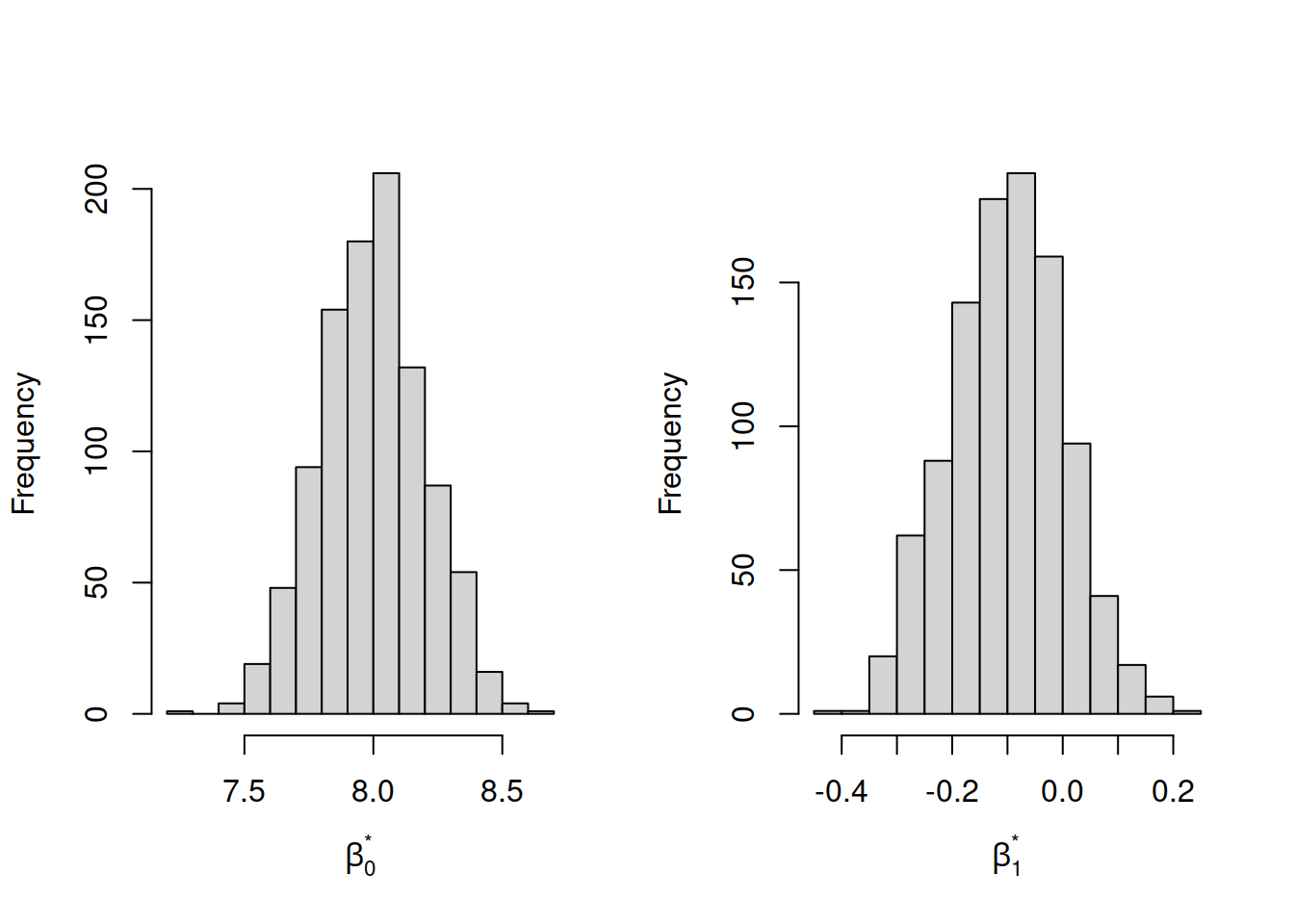

You can visualise the distribution of the \(R = 1,000\) bootstrap intercepts and slopes with histograms:

That sounds all nice in theory! But how do I actually do this in R? Is it difficult???

No, it’s super easy! Follow these steps:

Step 1. Load the car library

library(car)Step 2. Use the Boot() function (do not forget the uppercase B!) which takes as arguments:

- the fitted model

f, saying which bootstrap statistics to compute on each bootstrap sample. By defaultf = coef, returning the regression coefficients.R, saying how many bootstrap samples to compute. By defaultR = 999.ncores, saying if to perform the calculations in parallel (and more efficiently). However, this will depend on your PC, and you need to find how many cores you have by runningparallel::detectCores()on your PC. By default the function usesncores = 1.

Step 3. Run the code. However, please remember that the Boot() function does not want a model which was fitted using data with NAs. In our case we are fine because we already removed them with na.omit.

boot_mdl <- Boot(mdl, R = 999)Step 4. Look at the summary of the bootstrap results:

summary(boot_mdl)##

## Number of bootstrap replications R = 999

## original bootBias bootSE bootMed

## (Intercept) 2.7882487 1.8311e-03 0.05545034 2.7909036

## science_knowledge -0.0802763 -5.1728e-05 0.00442885 -0.0802218

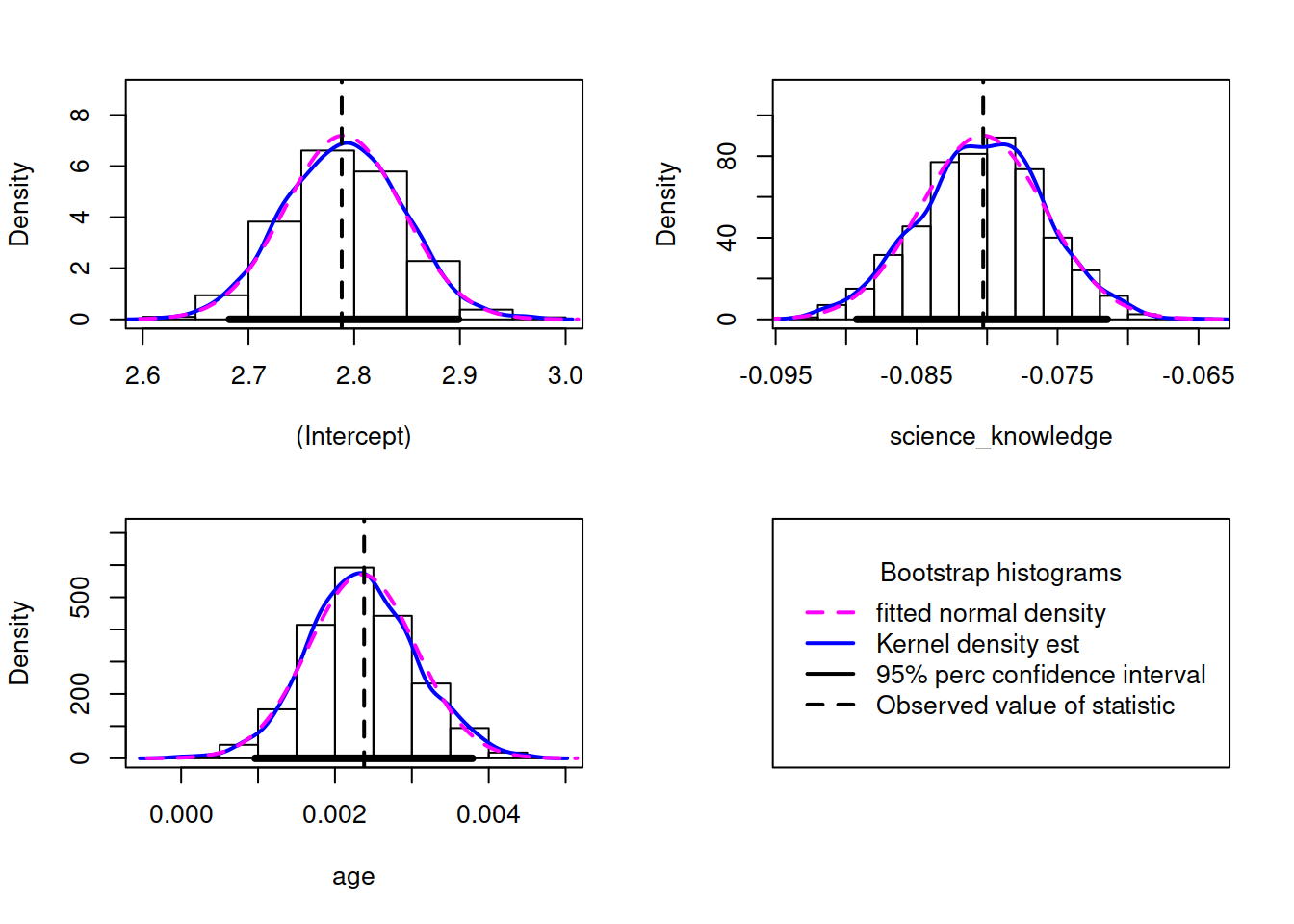

## age 0.0023794 -2.5833e-05 0.00069909 0.0023376The above output shows, for each regression coefficient, the value in the original sample in the column original, and then we will focus on the bootSE column, which estimates the variability of the coefficient from bootstrap sample to bootstrap sample. The bootSE provides us the bootstrap standard error, or bootstrap SE in short. We use this to answer the key question of how accurate our estimate is.

Step 5. Compute confidence intervals. Use your preferred confidence level, by default this is 95%:

Confint(boot_mdl, level = 0.95, type = "perc")## Bootstrap percent confidence intervals

##

## Estimate 2.5 % 97.5 %

## (Intercept) 2.788248712 2.682016848 2.898655860

## science_knowledge -0.080276256 -0.089240855 -0.071479349

## age 0.002379446 0.000962663 0.003786739The type = "perc" argument tells R to return the values that comprise 95% of all values in between them, i.e. the value with 2.5% of observations below it and the value with 2.5% of observations above it and 97.5% of observations below it.

If you want to make it into a nice table:

Confint(boot_mdl, type = "perc") %>%

kable(digits = 3, caption = 'Bootstrap 95% CIs') %>%

kable_styling(full_width = FALSE)| Estimate | 2.5 % | 97.5 % | |

|---|---|---|---|

| (Intercept) | 2.788 | 2.682 | 2.899 |

| science_knowledge | -0.080 | -0.089 | -0.071 |

| age | 0.002 | 0.001 | 0.004 |

We are 95% confident that the population intercept is between 2.68 and 2.9. We are 95% confident that the population slope for science knowledge is between -0.09 and -0.07. We are 95% confident that the population slope for age is between 0.001 and 0.004.

The results in Table 4 report an estimate of the intercept (or constant) as equal to approximately 2.8. The constant of a multiple regression model can be interpreted as the average expected value of the dependent variable when all of the independent variables equal zero. In this case, the independent variable science knowledge has only a handful of respondents that score zero, and no one is aged zero, so the constant by itself does not tell us much. Researchers do not often have predictions based on the intercept, so it often receives little attention. A better choice would be to mean centre age, and refit the model with a mean centred age variable!

The estimated value for the slope coefficient linking knowledge to attitude is estimated to be approximately -0.08. This represents the average marginal effect of knowledge on attitude, and can be interpreted as the expected change in the dependent variable on average for a one-unit increase in the independent variable, controlling for age. In this example, every increase in quiz score by one point is associated with a decrease in attitude score of about –0.08, adjusted for age. Bearing in mind the valence of the question wording, this means that those who are more knowledgeable tend to be more favourable towards science – i.e. disagreeing with the statement.

The slope coefficient linking age to attitude is estimated to be approximately 0.002. This represents the average marginal effect of each additional year on attitude, and can be interpreted as the expected change in the dependent variable on average for a one-unit increase in the independent variable, controlling for science knowledge. For this example, that means that for every year older a person is, their attitude score is expected to increase by 0.002, controlling for science knowledge. This may seem like a very small effect, but remember that this is the effect of only one additional year. Bearing in mind the valence of the question wording, this means that older people tend to be less favourable towards science – i.e. agreeing with the statement.

The bootstrap confidence intervals table also reports that the 95% confidence intervals for both slope estimates do not include 0. This leads us to reject both null hypotheses at the 5% significance level, and conclude that there appear to be relationships for both age and science knowledge with attitude to science and faith.

Remember the summary of them model:

summary(mdl)##

## Call:

## lm(formula = attitude ~ science_knowledge + age, data = ebsurvey)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.88643 -0.97484 0.00913 0.88427 2.21489

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 2.7882487 0.0537759 51.849 < 2e-16 ***

## science_knowledge -0.0802763 0.0045095 -17.802 < 2e-16 ***

## age 0.0023794 0.0006792 3.503 0.000461 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.196 on 10500 degrees of freedom

## Multiple R-squared: 0.03223, Adjusted R-squared: 0.03204

## F-statistic: 174.8 on 2 and 10500 DF, p-value: < 2.2e-16The R-squared for the model is 0.031, which means that approximately 3% of the variance in attitude is explained by science knowledge and age.

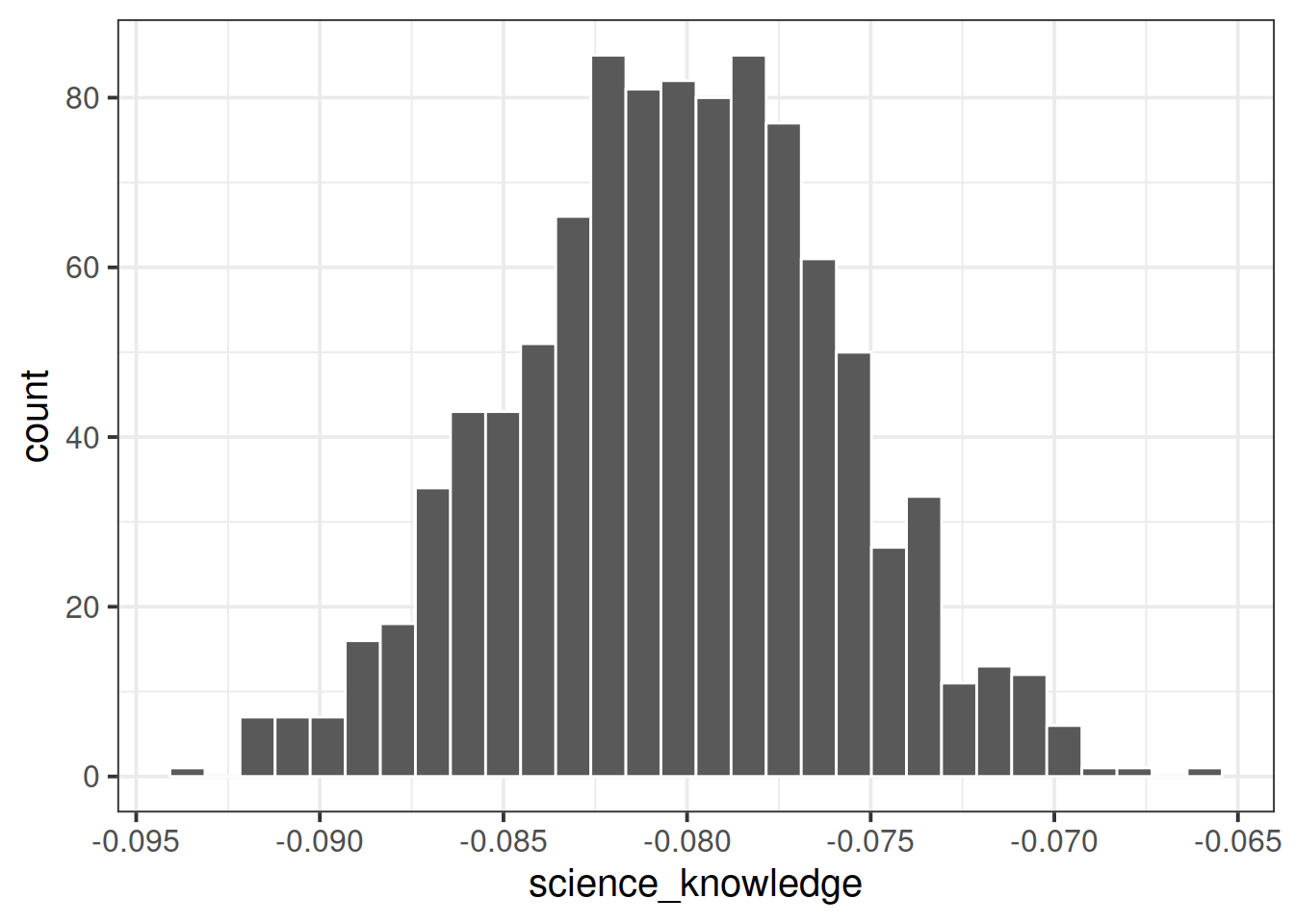

How can we visualise the bootstrap intercepts and slopes? They are stored in the boot_mdl object.

This will show you the \(R = 999\) estimates, using head to show only the top 6.

head(boot_mdl$t)## (Intercept) science_knowledge age

## [1,] 2.860584 -0.07946250 0.0009295118

## [2,] 2.729586 -0.07512774 0.0024392332

## [3,] 2.789480 -0.08029869 0.0025441840

## [4,] 2.749536 -0.07630859 0.0024819148

## [5,] 2.913322 -0.09170225 0.0012816341

## [6,] 2.803966 -0.07888200 0.0020855342While this shows you the estimated intercept and slope in the original sample

boot_mdl$t0## (Intercept) science_knowledge age

## 2.788248712 -0.080276256 0.002379446You can visualise the uncertainty in the estimates by plotting histograms either manually:

plot_data <- as_tibble(boot_mdl$t)

plot_data## # A tibble: 999 x 3

## `(Intercept)` science_knowledge age

## <dbl> <dbl> <dbl>

## 1 2.86 -0.0795 0.000930

## 2 2.73 -0.0751 0.00244

## 3 2.79 -0.0803 0.00254

## 4 2.75 -0.0763 0.00248

## 5 2.91 -0.0917 0.00128

## 6 2.80 -0.0789 0.00209

## 7 2.76 -0.0803 0.00311

## 8 2.75 -0.0787 0.00308

## 9 2.69 -0.0733 0.00286

## 10 2.77 -0.0795 0.00259

## # … with 989 more rowsggplot(plot_data, aes(science_knowledge)) +

geom_histogram(color = 'white')

Or using the built-in function from the car package, which simply takes the bootstrap results boot_mdl:

hist(boot_mdl, ci = "perc", legend = "separate")

Presenting results

Write a short paragraph summarising the results of this lab.

Include:

- A brief description of which data were used to answer which questions.

- A plot of your variables and summary statistics.

- Describe your model and report results

- Interpret results in context.

References

Adapted from:

- Allum, N. (2015). Learn about multiple regression in SPSS with data from the eurobarometer (63.1, jan–feb 2005). In SAGE Research Methods Datasets Part 1. SAGE Publications, Ltd.