Sampling distributions

LEARNING OBJECTIVES

- Recognise the difference between parameters and statistics

- Be able to use a sample statistic to estimate an unknown parameter

- Understand what a sampling distribution is

- Understand the concept of standard error

- Recognise why sample size matters

Fundamentals of inference

This section contains essential terminology and functions that are needed to complete the exercises provided.

Population vs sample

Typically:

- We do not have data for the entire population. There are different possible reasons:

- It’s too expensive to collect them

- Because of deadlines, there is not sufficient time

- It’s not possible to reach the entire population

- It’s much easier to obtain data by taking a sample from that population of interest and measuring only the units chosen in the sample.

- Note that units do not necessarily have to be individuals, but they could be schools, companies, etc.

- We wish to use the sample data to:

- Investigate a claim about the whole population.

- Test an hypothesis about the entire population.

- Answer a question about the whole population.

The process of using information from a sample (the part) in order to draw conclusions about the entire population (the whole) is known as statistical inference.

Parameters vs statistics

As we do not typically have the data for the entire population, the population is considered as “unknown” with respect the data that we wish to investigate. We could shortly say that the population data are unknown.

For example, if we are interested in the average IQ in the population, we don’t have the resources to go to every single individual and test their IQ score. So, in this respect, the population IQ scores are unknown.

As a consequence of this, any numerical summary of the population data is also unknown. In the above example, the population mean IQ score is unknown and needs to be estimated.

Sample data are more readily available or feasible to collect. Imagine collecting a sample of 50 individuals, chosen at random from the population, and testing each to obtain their IQ score. If you performed the random experiment, you would then obtain a sequence of 50 IQ measurements.

At the same time, it is also feasible to compute any numerical summary of the sample data. For example, you can compute the mean IQ score for those 50 individuals in the sample.

We typically use this terminology to distinguish a numerical summary when computed in the population (unknown) or in the collected sample (known).

A parameter is a numerical summary of a population.

A statistic is a numerical summary of the sample.

A statistic is often used as a “best guess” or “estimate” for the unknown parameter. That is, we use the (sample) statistic to estimate a (population) parameter.

In the above example, the population mean IQ score is the parameter of interest, while the sample mean IQ score is the statistic.

It is typical to use special notation to distinguish between parameters and statistics in order to convey with a single letter: (1) which numerical summary is being computed, and (2) if it is computed on the population or on the sample data.

The following table summarizes standard notation for some population parameters, typically unknown, and the corresponding estimates computed on a sample.

| Numerical summary | Population parameter | Sample statistic |

|---|---|---|

| Mean | \(\mu\) | \(\bar{x}\) or \(\hat{\mu}\) |

| Standard deviation | \(\sigma\) | \(s\) or \(\hat{\sigma}\) |

| Proportion | \(p\) | \(\hat{p}\) |

The Greek letter \(\mu\) (mu) represents the population mean (parameter), while \(\bar{x}\) (x-bar) or \(\hat{\mu}\) (mu-hat) is the mean computed from the sample data (sample statistic).

The Greek letter \(\sigma\) (sigma) represents the population standard deviation (parameter), while \(s\) or \(\hat{\sigma}\) (sigma-hat) is the standard deviation computed from the sample data (sample statistic).

The Greek letter \(p\) represents the population proportion (parameter), while \(\hat{p}\) (p-hat) is the proportion computed from the sample data (sample statistic).

(Optional) Statistics are random variables

The process of sampling \(n\) people at random from the population is a random experiment, as it leads to an uncertain outcome. Hence, a statistic is a numerical summary of a random experiment and for this reason it is a random variable (random number).

Before actually performing the random experiment and picking individuals for the sample, the sample mean is a random number, as its value is uncertain. Once we actually perform the random experiment and measure the individuals in the sample, we have an observed value for the sample mean, i.e. a known number.

We will then distinguish between:

- a statistic - a random variable (random number) that is uncertain because it involves a random experiment.

- an observed statistic - the actual number that is computed once the sample data have been collected by performing the chance experiment.

Notation-wise we distinguish between a statistic (random variable) and an observed statistic (observed number) by using, respectively, an uppercase letter or a lowercase letter.

Uppercase letters refer to random variables. Recall that a random variable represents a well defined number, but whose value is uncertain as it involves an experiment of chance in order to reach to an actual value.

For example, \(\bar X\) = “mean IQ score in a random sample of 50 individuals” is a random variable. It is clearly defined in operational terms by: (1) take a sample of 50 individuals at random, (2) measure their IQ score, and (3) compute the mean of their 50 IQ scores. However, the actual value that we can obtain is uncertain as it is the result of an experiment of chance involving random sampling from a population. There are many possible values we could obtain, so we are not sure which one we will see.

Another example: \(X\) = “number of heads in 10 flips of a coin”. This is also a clearly defined experiment: (1) flip a coin 10 times and (2) count the number of heads. However, the result is a random number, which is uncertain and will be unknown until we actually perform the experiment and flip a coin 10 times.

Lowercase letters refer to observed (i.e., realised) values of the random variable. An observed value of a random variable is just a number.

For example, once we actually collect 50 individuals and measure their IQ scores, we can sum those 50 numbers and divide the sum by 50 to obtain the observed sample mean. Say the sample mean IQ score is 102.3, we would write \(\bar x = 102.3\).

In short, we would use for a sample mean:

- Statistic (sample mean): an uppercase letter before the value is actually known: \(\bar X\)

- Observed statistic (observed sample mean): a lowercase letter once the value is actually known: \(\bar x\)

Avoiding bias due to sampling

Sampling bias occurs when the method used to select which units enter the sample causes the sample to not be a good representation of the population.

If sampling bias exists, we cannot generalise our sample conclusions to the population.

To be able to draw conclusions about the population, we need a representative sample. The key in choosing a representative sample is random sampling. Imagine an urn with tickets, where each ticket has the name of each population unit. Random sampling would involve mixing the urn and blindly drawing out some tickets from the urn. Random sampling is a strategy to avoid sampling bias.

Simple random sampling

When we select the units entering the sample via simple random sampling, each unit in the population has an equal chance of being selected, meaning that we avoid sampling bias.

When instead some units have a higher chance of entering the same, we have misrepresentation of the population and sampling bias.

In general, we have bias when the method of collecting data causes the data to inaccurately reflect the population.

Sampling distribution

The natural gestation period (in days) for human births is normally distributed in the population with mean 266 days and standard deviation 16 days. This is a special case which rarely happens in practice: we actually know what the distribution looks like in the population.

We will use this unlikely example to study how well does the sample mean estimate the population mean and, to do so, we need to know what the population mean is so that we can compare the estimate and the true value. Remember, however, that in practice the population parameter would not be known.

We will consider data about the gestation period of the 49,863 women who gave birth in Scotland in 2019. These can be found at the following address: https://uoepsy.github.io/data/pregnancies.csv

First, we read the population data:

library(tidyverse)

gest <- read_csv('https://uoepsy.github.io/data/pregnancies.csv')

dim(gest)## [1] 49863 2The data set contains information about 49,863 cases. For each case an identifier and the length of pregnancy Look at the top six rows of the data set (the “head”):

head(gest)## # A tibble: 6 × 2

## id gest_period

## <dbl> <dbl>

## 1 1 256.

## 2 2 269.

## 3 3 253.

## 4 4 292.

## 5 5 271.

## 6 6 253.We now want to investigate how much the sample means will vary from sample to sample. To do so, we will take many samples from the population of all gestation periods, and compute for each sample the mean.

To do so, we will load a function which we prepared for you called rep_sample_n(). This function is used to take a sample of \(n\) units from the population, and it lets you repeat this process many times.

To get the function in your computer, run this code:

source('https://uoepsy.github.io/files/rep_sample_n.R')NOTE

You need to copy and paste the line

source('https://uoepsy.github.io/files/rep_sample_n.R')at the top of each file in which you want to use the rep_sample_n() function.

The function takes the following arguments:

rep_sample_n(data, n = <sample size>, samples = <how many samples>)datais the populationnis the sample sizesamplesis how many samples of size \(n\) you want to take

Before doing anything involving random sampling, it is good practice to set the random seed. This is to ensure reproducibility of the results. Random number generation in R works by specifying a starting seed, and then numbers are generated starting from there.

Set the random seed to any number you wish. Depending on the number, you will get the same results as me or not:

set.seed(1234)Obtain 10 samples of \(n = 5\) individuals each:

samples <- rep_sample_n(gest, n = 5, samples = 10)

samples## # A tibble: 50 × 3

## sample id gest_period

## <dbl> <dbl> <dbl>

## 1 1 40784 255.

## 2 1 40854 275.

## 3 1 41964 281.

## 4 1 15241 246.

## 5 1 33702 247.

## 6 2 35716 267.

## 7 2 17487 289.

## 8 2 15220 266.

## 9 2 19838 238.

## 10 2 2622 281.

## # … with 40 more rowsThe samples data frame contains 3 columns:

sample, telling us which sample each row refers toid, telling us the units chosen to enter each samplegest_period, telling us the gestation period (in days) of each individual

Note that the tibble samples has 50 rows, which is given by 5 individuals in each sample * 10 samples.

You can inspect the sample data in the following interactive table in which the data corresponding to each sample have been colour-coded so that you can distinguish the rows belonging to the 1st, 2nd, …, and 10th sample:

Now, imagine computing the mean of the five observation in each sample. This will lead to 10 means, one for each of the 10 samples (of 5 individuals each).

sample_means <- samples %>%

group_by(sample) %>%

summarise(mean_gest = mean(gest_period))

sample_means## # A tibble: 10 × 2

## sample mean_gest

## <dbl> <dbl>

## 1 1 261.

## 2 2 268.

## 3 3 273.

## 4 4 269.

## 5 5 261.

## 6 6 271.

## 7 7 252.

## 8 8 262.

## 9 9 258.

## 10 10 267.As you can see this leads to a tibble having 10 rows (one for each sample), where each row is a mean computed from the 5 individuals which were chosen to enter the sample.

The gestation period (in days) for the first five women sampled wereThis sample has a mean of \(\bar x\) = 260.93 days.

The second sample of 5 women had gestation periodsThe second sample has a mean gestation period of \(\bar x\) = 267.98 days.

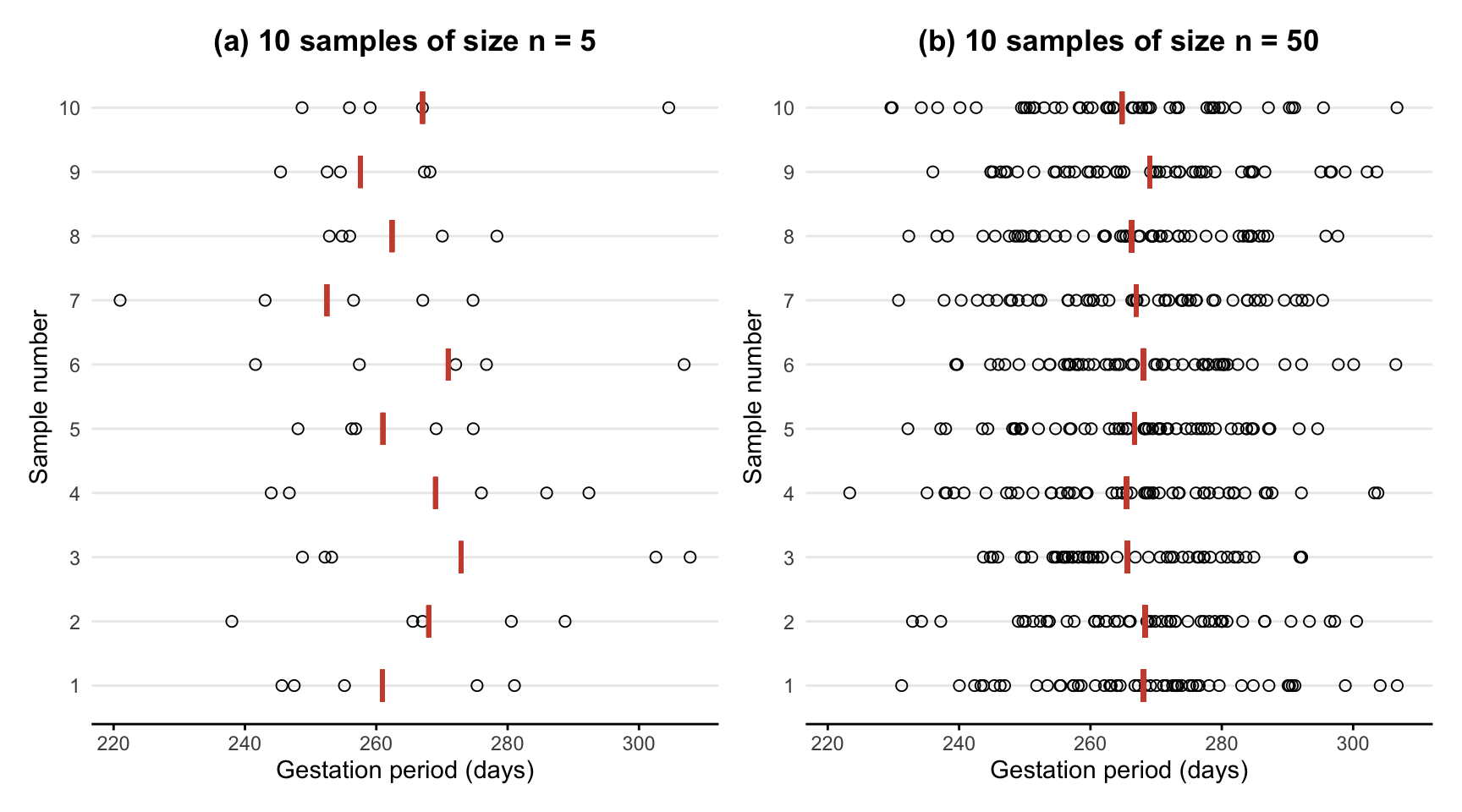

In Figure 1 we display the individual gestation periods in each sample as dots, along with the means gestation period \(\bar x\) of each sample. The position of the sample mean is given by a red vertical bar.

We then increased the sample size to 50 women and took 10 samples each of 50 individuals. This set of samples together with their means is also plotted in Figure 1.

Figure 1: Gestation period (in days) of samples of individuals.

Two important points need to be made from Figure 1. First, each sample (and therefore each sample mean) is different. This is due to the randomness of which individuals end up being in each sample. The sample means vary in an unpredictable way, illustrating the fact that \(\bar X\) is a summary of a random experiment (randomly choosing a sample) and hence is a random variable. Secondly, as we increase the sample size from 5 to 50, there appears to be a decrease in the variability of sample means (compare the variability in the vertical bars in panel (a) and panel (b)). That is, with a larger sample size, the sample means fluctuate less and are more “consistent”.

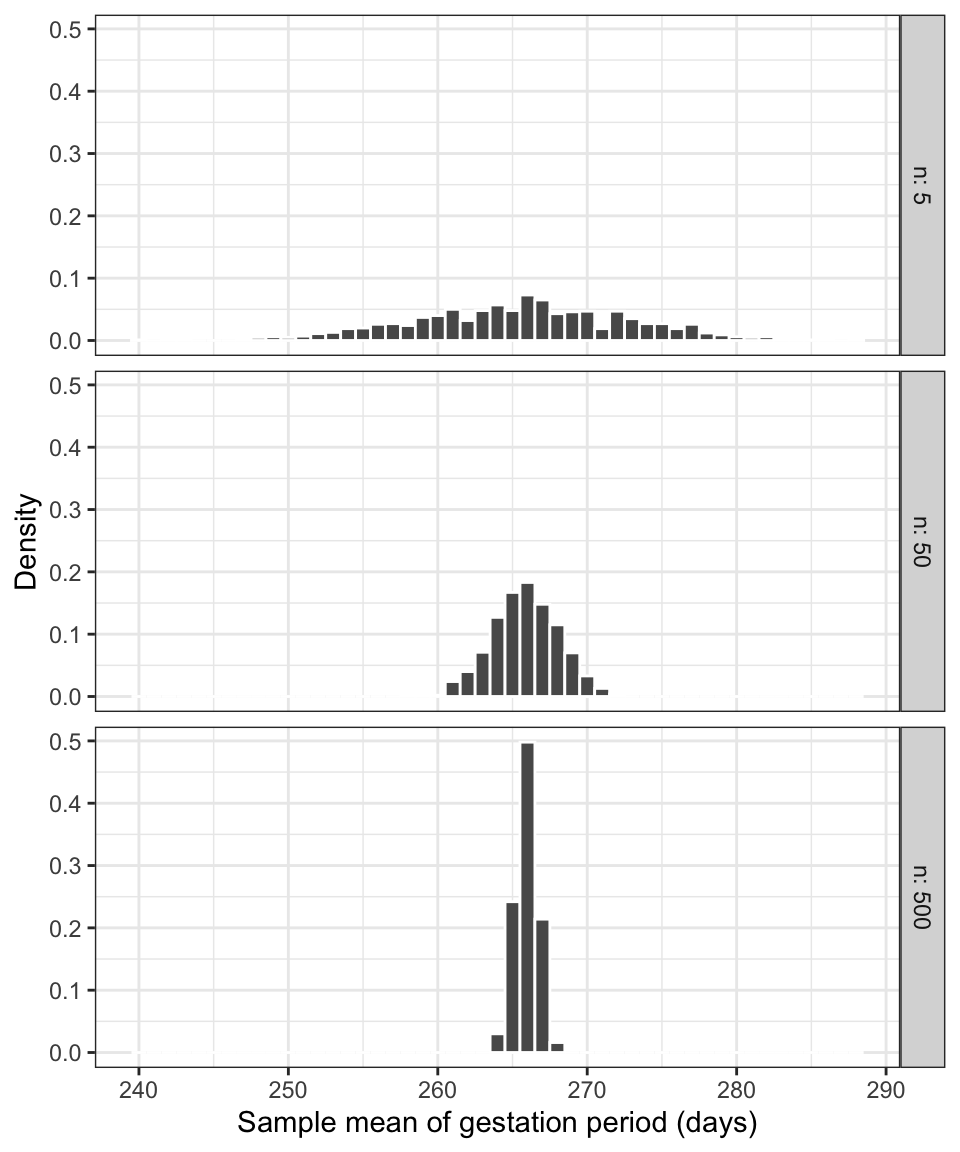

To further investigate the variability of sample means, we will now generate many more sample means computed on:

- 1,000 samples of \(n = 5\) women

- 1,000 samples of \(n = 50\) women

- 1,000 samples of \(n = 500\) women

We will also add at the end of each tibble a column specifying the sample size. In the first tibble, mutate(n = 5) creates a column called n where all values will be 5, to remind ourselves that those means were computed with samples of size \(n = 5\). Remember that mutate() takes a tibble and creates a new column or changes an existing one.

# (a) 1,000 means from 1,000 samples of 5 women each

sample_means_5 <- rep_sample_n(gest, n = 5, samples = 1000) %>%

group_by(sample) %>%

summarise(mean_gest = mean(gest_period)) %>%

mutate(n = 5)

head(sample_means_5)## # A tibble: 6 × 3

## sample mean_gest n

## <dbl> <dbl> <dbl>

## 1 1 267. 5

## 2 2 263. 5

## 3 3 255. 5

## 4 4 269. 5

## 5 5 267. 5

## 6 6 266. 5# (b) 1,000 means from 1,000 samples of 50 women each

sample_means_50 <- rep_sample_n(gest, n = 50, samples = 1000) %>%

group_by(sample) %>%

summarise(mean_gest = mean(gest_period)) %>%

mutate(n = 50)

head(sample_means_50)## # A tibble: 6 × 3

## sample mean_gest n

## <dbl> <dbl> <dbl>

## 1 1 263. 50

## 2 2 262. 50

## 3 3 269. 50

## 4 4 266. 50

## 5 5 267. 50

## 6 6 268. 50# (c) 1,000 means from 1,000 samples of 500 women each

sample_means_500 <- rep_sample_n(gest, n = 500, samples = 1000) %>%

group_by(sample) %>%

summarise(mean_gest = mean(gest_period)) %>%

mutate(n = 500)

head(sample_means_500)## # A tibble: 6 × 3

## sample mean_gest n

## <dbl> <dbl> <dbl>

## 1 1 265. 500

## 2 2 265. 500

## 3 3 267. 500

## 4 4 266. 500

## 5 5 265. 500

## 6 6 265. 500We now combine the above datasets of sample means for different sample sizes into a unique tibble. The function bind_rows() takes multiple tibbles and stacks them under each other.

sample_means_n <- bind_rows(sample_means_5,

sample_means_50,

sample_means_500)We now plot three different density histograms showing the distribution of 1,000 sample means computed from samples of size 5, 50, and 500.

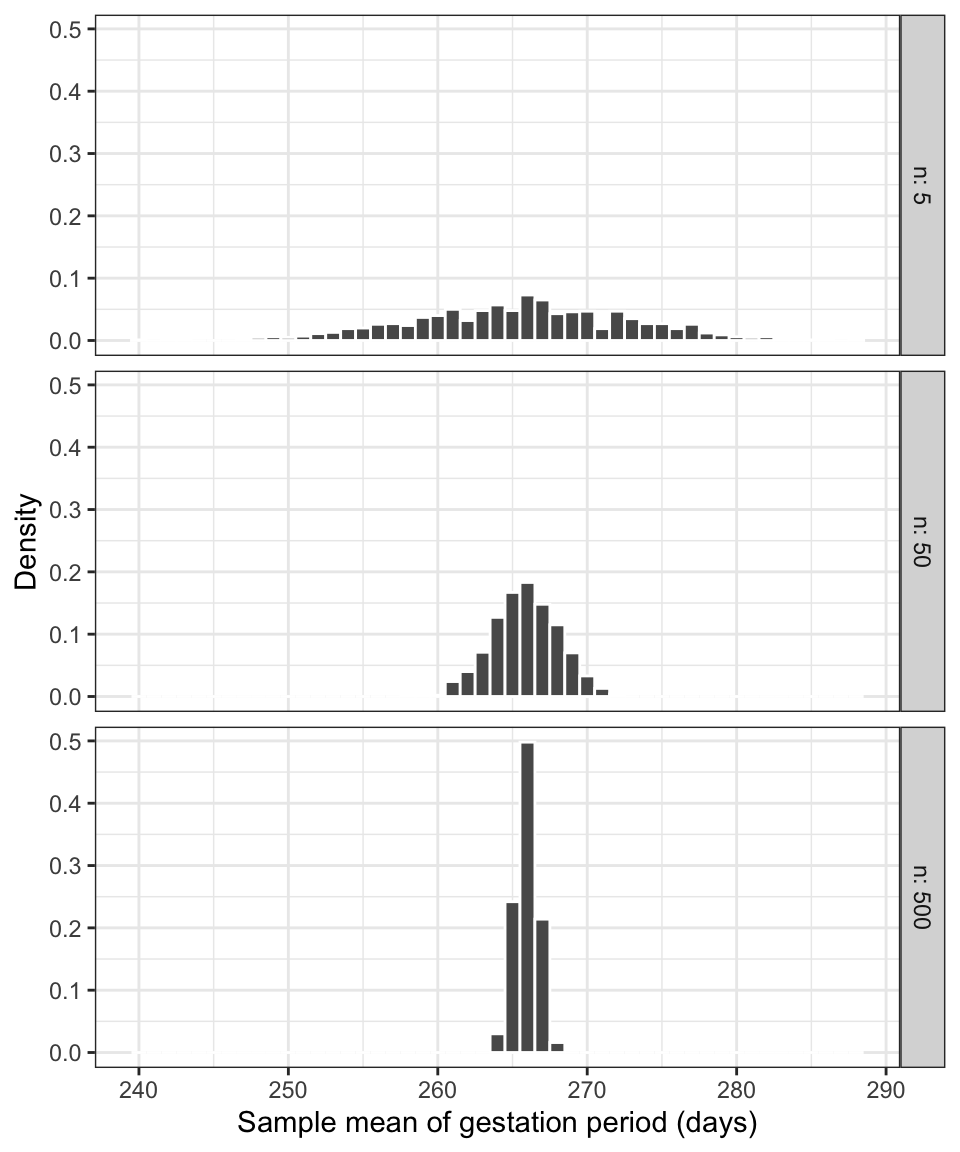

This would correspond to creating a histogram of the “red vertical bars” from Figure 1, the only difference is that we have many more samples (1,000).

ggplot(sample_means_n) +

geom_histogram(aes(mean_gest, after_stat(density)),

color = 'white', binwidth = 1) +

facet_grid(n ~ ., labeller = label_both) +

theme_bw() +

labs(x = 'Sample mean of gestation period (days)', y = 'Density')

Figure 2: Density histograms of the sample means from 1,000 samples of women (\(n\) women per sample).

Each of the density histograms above displays the distribution of the sample mean, computed on samples of the same size and from the same population.

Such a distribution is called the sampling distribution of the sample mean.

Sampling distribution

The sampling distribution of a statistic is the distribution of a sample statistic computed on many different samples of the same size from the same population.

A sampling distribution shows how the statistic varies from sample to sample due to sampling variation.

Centre and spread of the sampling distribution

What is the mean and standard deviation of each histogram?

sample_means_n %>%

group_by(n) %>%

summarise(mean_xbar = mean(mean_gest),

sd_xbar = sd(mean_gest))## # A tibble: 3 × 3

## n mean_xbar sd_xbar

## <dbl> <dbl> <dbl>

## 1 5 266. 7.38

## 2 50 266. 2.23

## 3 500 266. 0.734Compare these quantities to the population mean and standard deviation: \(\mu\) = 266 and \(\sigma\) = 16.1.

Regardless of the size of the samples we were drawing (5, 50, or 500), the average of the sample means was equal to the population mean. However, the standard deviation of the sample means was smaller than the population mean. The variability in sample means also decreases as the sample size increases.

There is an interesting patter in the decrease, which we will now verify. It can be proved that the standard deviation of the sample mean \(\sigma_{\bar X} = \frac{\sigma}{\sqrt{n}}\), i.e. the population standard deviation divided by \(\sqrt{n}\) with \(n\) being the sample size.

Obtain the population standard deviation. Remember the entire population data were called gest and in this case we are very lucky to have the data for the entire population, typically we wouldn’t have those and neither the population standard deviation.

sigma <- sd(gest$gest_period)Now compute add a column that compares the SD from sampling with the theory-based one:

sample_means_n %>%

group_by(n) %>%

summarise(mean_xbar = mean(mean_gest),

sd_xbar = sd(mean_gest)) %>%

mutate(sd_theory = sigma / sqrt(n))## # A tibble: 3 × 4

## n mean_xbar sd_xbar sd_theory

## <dbl> <dbl> <dbl> <dbl>

## 1 5 266. 7.38 7.20

## 2 50 266. 2.23 2.28

## 3 500 266. 0.734 0.720The last two columns will be closer and closer as we increase the number of different samples we take from the population (e.g. 5,000 or 10,000 or even more samples.)

The following result holds: \[ \begin{aligned} \mu_{\bar X} &= \mu = \text{Population mean} \\ \sigma_{\bar X} &= \frac{\sigma}{\sqrt{n}} = \frac{\text{Population standard deviation}}{\sqrt{\text{Sample size}}} \end{aligned} \]

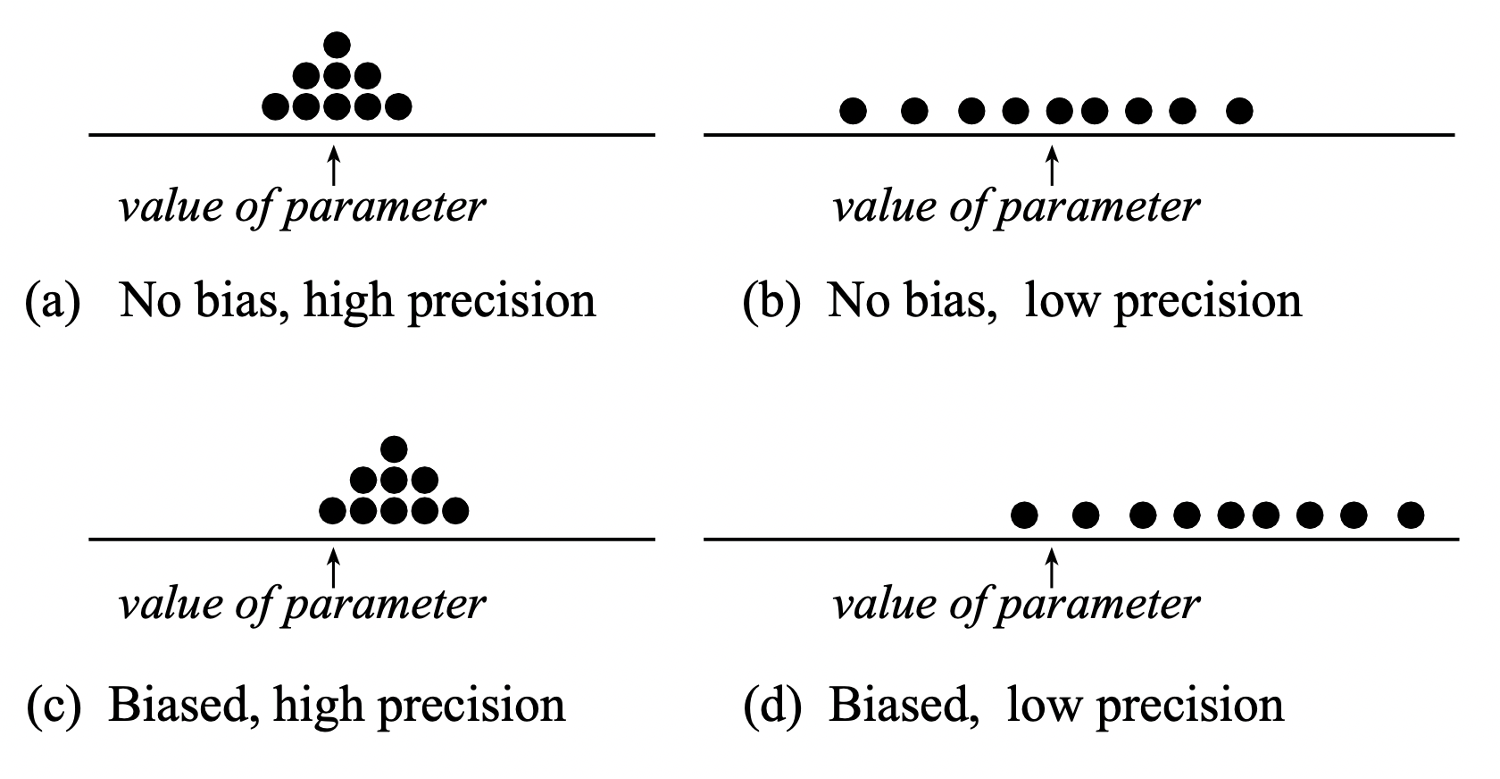

Because on average the sample mean (i.e. the estimate) is equal to the population mean (i.e. the parameter), the sample mean \(\bar X\) is an unbiased estimator of the population mean. In other words, it does not consistently “miss” the target. (However, if your sampling method is biased, the sample mean will be biased too.)

The standard deviation of the sample means tells us that the variability in the sample means gets smaller smaller as the sample size increases. Because \(\sqrt{4} = 2\) we halve \(\sigma_{\bar X}\) by making the sample size 4 times as large. Similarly, as \(\sqrt{9} = 3\), we reduce \(\sigma_{\bar X}\) by one third by making the sample size 9 times as large.

The variability, or spread, of the sampling distribution shows how much the sample statistics tend to vary from sample to sample. This is key in understanding how accurate our estimate of the population parameter, based on just one sample, will be.

Recall that the standard deviation tells us the size of a typical deviation from the mean. Here, the mean is the population parameter \(\mu\), and a deviation of \(\bar x\) from \(\mu\) is called an estimation error. Hence, the standard deviation of the sample mean is called the standard error of the mean. This tells us the typical estimation error that we commit when we estimate a population mean with a sample mean.

Standard error

The standard error of a statistic, denoted \(SE\), is the standard deviation of its sampling distribution.

So, the standard error of the mean can be either computed as the standard deviation of the sampling distribution, or using the formula \[ SE = \sigma_{\bar X} = \frac{\sigma}{\sqrt{n}} \]

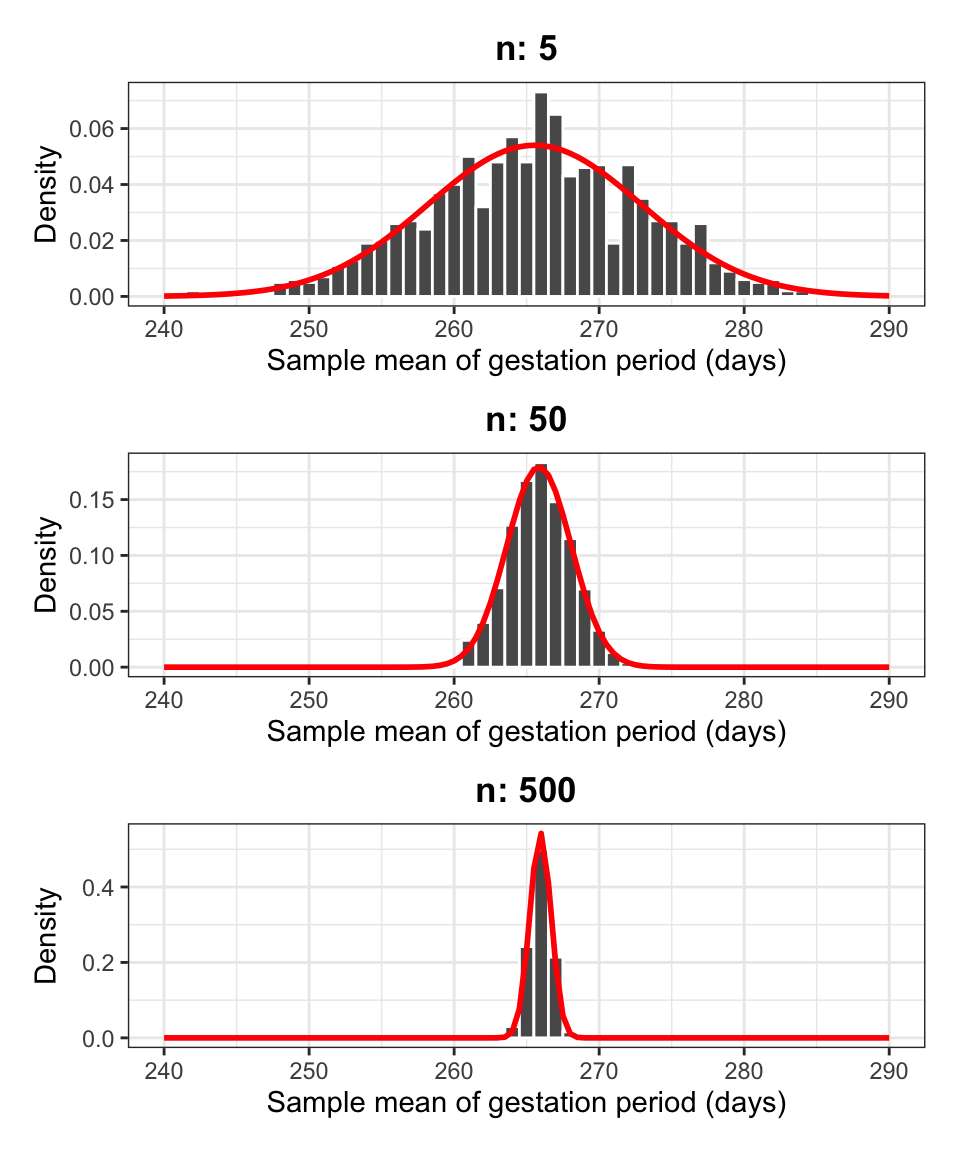

The sample mean is normally distributed

We also notice that the density histograms in Figure 2 are symmetric and bell-shaped. Hence, they follow the shape of the normal curve.

The random variable \(\bar X\) follows a normal distribution: \[ \bar X \sim N(\mu,\ SE) \]

We can also compute a z-score. We have that: \[ Z = \frac{\bar X - \mu}{SE} \sim N(0, 1) \]

We know that for a normally distributed random variable, approximately 95% of all values fall within two standard deviations of its mean. Thus, for approximately 95% of all samples, the sample means falls within \(\pm 2 SE\) of the population mean \(\mu\). Similarly, since \(P(-3 < Z < 3) = 0.997\), it is even more rare to get a sample mean which is more than three standard errors away from the population mean (only 0.3% of the times).

This suggests that:

The standard error \(SE\) is a measure of precision of \(\bar x\) as an estimate of \(\mu\).

If is a pretty safe bet to say that the true value of \(\mu\) lies somewhere between \(\bar x - 2 SE\) and \(\bar x + 2 SE\).

We will doubt any hypothesis specifying that the population mean is \(\mu\) when the value \(\mu\) is more than \(2 SE\) away from the sample mean we got from our data, \(\bar x\). We shall be even more suspicious when the hypothesised value \(\mu\) is more than \(3 SE\) away from \(\bar x\).

Centre and shape of a sampling distribution

Centre: If samples are randomly selected, the sampling distribution will be centred around the population parameter. (No bias)

Shape: For most of the statistics we consider, if the sample size is large enough, the sampling distribution will follow a normal distribution, i.e. it is symmetric and bell-shaped. (Central Limit Theorem)

Clearly, we can compute sampling distributions for other statistics too: the proportion, the standard deviation, …

This requires the following steps:

Obtaining multiple samples, all of the same size, from the same population;

For each sample, calculate the value of the statistic;

Plot the distribution of the computed statistics.

Why sample size matters

You might be wondering: why did we take multiple samples of size \(n\) from the population when, in practice, we can only afford to take one?

This is a good question. We have taken multiple samples to show how the estimation error varies with the sample size. We saw in Figure 2, shown again below, that smaller sample sizes lead to more variable statistics, while larger sample sizes lead to more precise statistics, i.e. the estimates are more concentrated around the true parameter value.

Figure 3: Density histograms of the sample means from 5,000 samples of women (\(n\) women per sample).

This teaches us that, when we have to design a study, it is better to obtain just one sample with size \(n\) as large as we can afford.

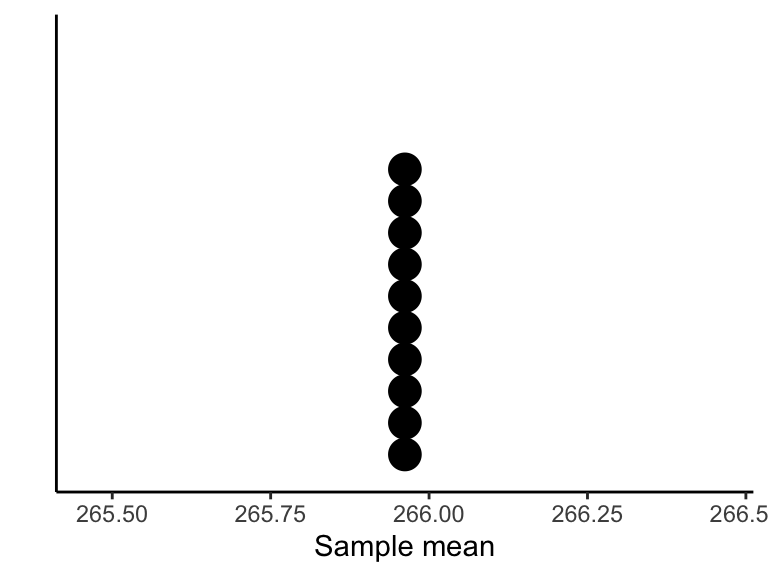

What would the sampling distribution of the mean look like if we could afford to take samples as big as the entire population, i.e. of size \(n = N\)?

If you can, it is best to measure the entire population.

If we could afford to measure the entire population, then we would find the exact value of the parameter all the time. By taking multiple samples of size equal to the entire population, every time we would obtain the population parameter exactly, so the distribution would look like a histogram with a single bar on top of the true value: we would find the true parameter with a probability of one, and the estimation error would be 0.

pop_means <- gest %>%

rep_sample_n(n = nrow(gest), samples = 10) %>%

group_by(sample) %>%

summarise(mean_gest = mean(gest_period))

pop_means## # A tibble: 10 × 2

## sample mean_gest

## <dbl> <dbl>

## 1 1 266.

## 2 2 266.

## 3 3 266.

## 4 4 266.

## 5 5 266.

## 6 6 266.

## 7 7 266.

## 8 8 266.

## 9 9 266.

## 10 10 266.The following is a dotplot of the means computed above:

To summarize:

We have high precision when the estimates are less variable, and this happens for a large sample size.

We have no bias when we select samples that are representative of the population, and this happens when we do random sampling. No bias means that the estimates will be centred at the true population parameter to be estimated.

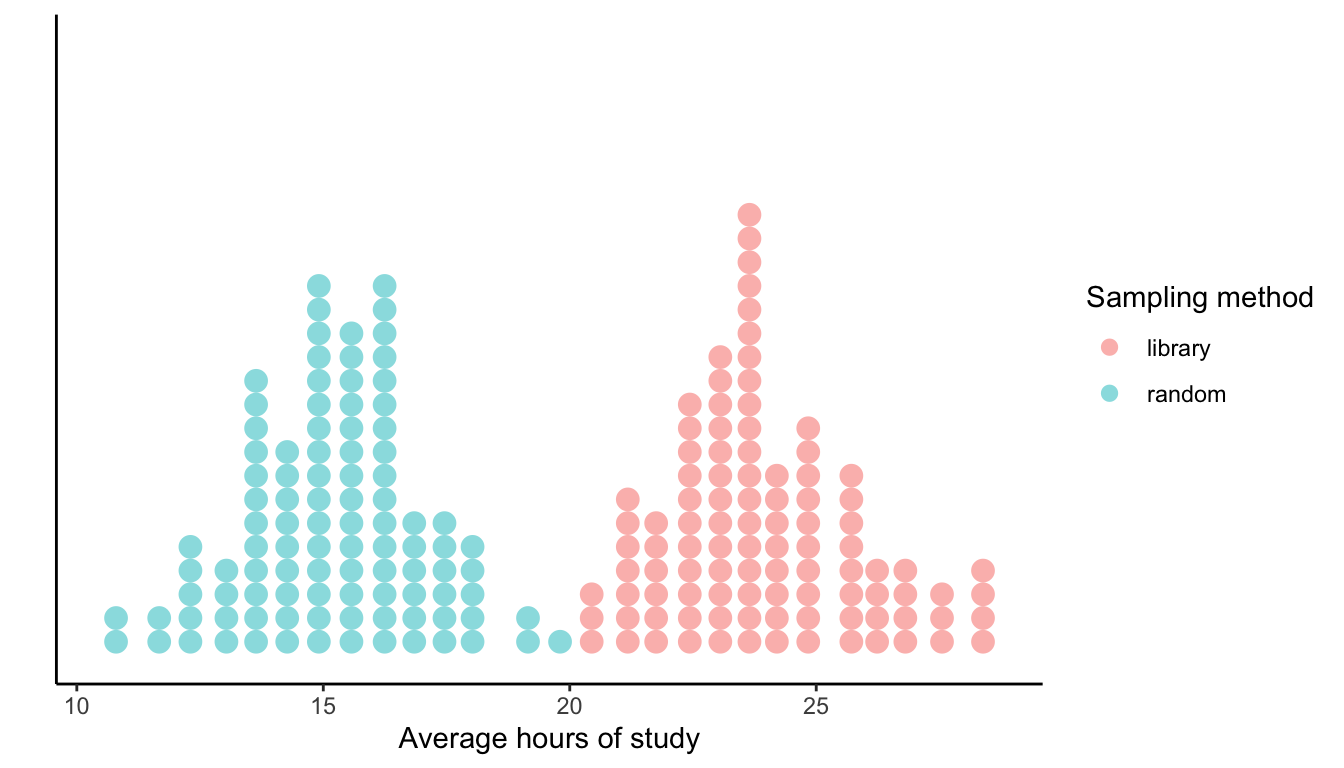

Exercises

Two students, Mary and Alex, wanted to investigate the average hours of study per week among students in their university. Each were given the task to sample \(n = 20\) students many times, and compute the mean of each sample of size 20. Mary sampled the students at random, while Alex asked students from the library. The distribution of sample means computed by Mary and Alex are shown in the dotplot below in green and red, respectively.

What do you notice in the distributions above? Why did Mary and Alex get so different results?

Mary selected samples using random sampling, so we expect the samples to be representative of the population of interest. Hence, we will believe that Mary’s sampling distribution of the mean is centred at the true population average of 15 hours of study per week.

On the other hand, Alex selected the most readily available people and took convenience samples. This leads to samples which are not a good representation of the population as a part of the population is missing. We notice that Alex got consistently higher estimates of the population mean study time than Mary did. This tendency to overestimate the population parameter shows that the sampling method is biased. Students in the library perhaps tend to study more.

Average price of goods sold by ACME Corporation

Suppose you work for a company that is interested in buying ACME Corporation1 and your boss wants to know within the next 30 minutes what is the average price of goods sold by that company and how the prices of the goods they sell differ from each other.

Since ACME Corporation has such a big mail order catalogue, see Figure 4, we will assume that the company sells many products. Furthermore, we only have the catalogue in paper-form and no online list of prices is available.

Figure 4: Product catalogue of ACME corporation.

- Identify the population of interest and the population parameters.

- Can we compute the parameters within the next 30 minutes?

- How would you proceed in estimating the population parameters if you just had time to read through 100 item descriptions? Would you pick the first 100 items or would you pick 100 random page numbers?

- State which statistics you would use to estimate the population parameters.

- The population of interest is all products sold by ACME Corporation. The population parameters are the mean price \(\mu\) and the standard deviation \(\sigma\).

- Because the catalogue has so many pages, we can not compute the population parameters within the next 30 minutes.

- We must estimate the population mean and standard deviation from a sample of size \(n = 100\). We should choose the items entering the sample at random, to avoid sampling bias. If we were to choose 100 consecutive items, we might end up with a very good estimate of the average price for the category those consecutive items belong to (e.g. gardening). However, this would not be a good estimate of the overall price across the multiple categories of products sold.

- Our best guess of the population mean would be the sample mean, \(\bar{x}\), and our best guess of the population standard deviation would be the sample standard deviation, denoted \(s\) or \(\hat{\sigma}\).

What is a parameter? Give two examples of parameters.

What is a statistic? Give two example of statistics.

What is an estimate?

A parameter is a numerical summary of the population. An example could be average blood pressure in Scotland, or the proportion of people with a car.

A statistic is a numerical summary of the sample data. Examples are the mean blood pressure in a sample of 20 people, or the proportion of people with a car in a sample of 20 people.

We call “estimate” the value of a statistic which is used to estimate an unknown population parameter.

What is the difference between a statistic before and after collecting the sample data?

Why is it made? What notational device is used to communicate the distinction?

Before we actually collect the sample, we are not sure which value the statistic will take, hence the statistic in this case is a random variable.

Once we actually collect the sample data and have computed the mean, we have an observed value for the statistic in the sample, called the observed statistic. This is just a number that we use as an estimate for the unknown population parameter.

We denote the estimate (observed statistic) with a lowercase letter and the statistic (random variable) with an uppercase letter.

Sampling distributions

What is a sampling distribution?

The sampling distribution is the distribution of the values that a statistic takes on different samples of the same size and from the same population.

Download link

Download the data here.

Description

The data set stores information about 970 movies produced in Hollywood between 2007 and 2013. It can be considered as the entire population of movies produced in Hollywood in that time period.

Among the recorded variables, three will be of interest:

Movie: title of movieGenre: one of 14 possible genresBudget: production budget (in $ millions)

Preview

| Movie | LeadStudio | RottenTomatoes | AudienceScore | Story | Genre | TheatersOpenWeek | OpeningWeekend | BOAvgOpenWeekend | DomesticGross | ForeignGross | WorldGross | Budget | Profitability | OpenProfit | Year |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Spider-Man 3 | Sony | 61 | 54 | Metamorphosis | Action | 4252 | 151.1 | 35540 | 336.53 | 554.34 | 890.87 | 258 | 345.30 | 58.57 | 2007 |

| Shrek the Third | Paramount | 42 | 57 | Quest | Animation | 4122 | 121.6 | 29507 | 322.72 | 476.24 | 798.96 | 160 | 499.35 | 76.00 | 2007 |

| Transformers | Paramount | 57 | 89 | Monster Force | Action | 4011 | 70.5 | 17577 | 319.25 | 390.46 | 709.71 | 150 | 473.14 | 47.00 | 2007 |

| Pirates of the Caribbean: At World's End | Disney | 45 | 74 | Rescue | Action | 4362 | 114.7 | 26302 | 309.42 | 654.00 | 963.42 | 300 | 321.14 | 38.23 | 2007 |

| Harry Potter and the Order of the Phoenix | Warner Bros | 78 | 82 | Quest | Adventure | 4285 | 77.1 | 17998 | 292.00 | 647.88 | 939.89 | 150 | 626.59 | 51.40 | 2007 |

| I Am Legend | Warner Bros | 69 | 69 | Quest | Thriller | 3606 | 77.2 | 21411 | 256.39 | 328.96 | 585.35 | 150 | 390.23 | 51.47 | 2007 |

Reading data into R

Read the Hollywood movies data into R, and call it hollywood.

Check that the data were read into R correctly.

hollywood <- read_csv('https://uoepsy.github.io/data/HollywoodMovies.csv')

glimpse(hollywood)## Rows: 970

## Columns: 16

## $ Movie <chr> "Spider-Man 3", "Shrek the Third", "Transformers", "P…

## $ LeadStudio <chr> "Sony", "Paramount", "Paramount", "Disney", "Warner B…

## $ RottenTomatoes <dbl> 61, 42, 57, 45, 78, 69, 93, 31, 26, 60, 97, 90, 14, 9…

## $ AudienceScore <dbl> 54, 57, 89, 74, 82, 69, 91, 72, 73, 90, 84, 78, 72, 8…

## $ Story <chr> "Metamorphosis", "Quest", "Monster Force", "Rescue", …

## $ Genre <chr> "Action", "Animation", "Action", "Action", "Adventure…

## $ TheatersOpenWeek <dbl> 4252, 4122, 4011, 4362, 4285, 3606, 3660, 3832, 3475,…

## $ OpeningWeekend <dbl> 151.1, 121.6, 70.5, 114.7, 77.1, 77.2, 69.3, 44.8, 44…

## $ BOAvgOpenWeekend <dbl> 35540, 29507, 17577, 26302, 17998, 21411, 18929, 1168…

## $ DomesticGross <dbl> 336.53, 322.72, 319.25, 309.42, 292.00, 256.39, 227.4…

## $ ForeignGross <dbl> 554.34, 476.24, 390.46, 654.00, 647.88, 328.96, 215.3…

## $ WorldGross <dbl> 890.87, 798.96, 709.71, 963.42, 939.89, 585.35, 442.8…

## $ Budget <dbl> 258.0, 160.0, 150.0, 300.0, 150.0, 150.0, 110.0, 130.…

## $ Profitability <dbl> 345.30, 499.35, 473.14, 321.14, 626.59, 390.23, 402.5…

## $ OpenProfit <dbl> 58.57, 76.00, 47.00, 38.23, 51.40, 51.47, 63.00, 34.4…

## $ Year <dbl> 2007, 2007, 2007, 2007, 2007, 2007, 2007, 2007, 2007,…

Extracting relevant variables

Extract from the hollywood tibble the three variables of interest (Movie, Genre, Budget) and keep the movies for which we have all information (no missing entries).

Hint: Check the help page for the function drop_na() or na.omit().

We can extract variables using the function select(), while to keep the rows for which we have all measurements we use na.omit():

hollywood <- hollywood %>%

select(Movie, Genre, Budget) %>%

drop_na()

hollywood## # A tibble: 665 × 3

## Movie Genre Budget

## <chr> <chr> <dbl>

## 1 Spider-Man 3 Action 258

## 2 Shrek the Third Animation 160

## 3 Transformers Action 150

## 4 Pirates of the Caribbean: At World's End Action 300

## 5 Harry Potter and the Order of the Phoenix Adventure 150

## 6 I Am Legend Thriller 150

## 7 The Bourne Ultimatum Thriller 110

## 8 National Treasure: Book of Secrets Thriller 130

## 9 Alvin and the Chipmunks Animation 70

## 10 300 Action 65

## # … with 655 more rows

Proportion of comedy movies

What is the population proportion of comedy movies? What is an estimate of the proportion of comedy movies using a sample of size 20? Using the appropriate notation, report your results in one or two sentences.

prop_comedy <- hollywood %>%

summarise(prop = sum(Genre == "Comedy") / n())

prop_comedy## # A tibble: 1 × 1

## prop

## <dbl>

## 1 0.254sample_prop_comedy <- hollywood %>%

rep_sample_n(n = 20, samples = 1) %>%

group_by(sample) %>%

summarise(prop = sum(Genre == "Comedy") / n())

sample_prop_comedy## # A tibble: 1 × 2

## sample prop

## <dbl> <dbl>

## 1 1 0.35The population proportion of comedy movies is \(p =\) 0.25, while the proportion of comedy movies in the sample is \(\hat{p} =\) 0.35.

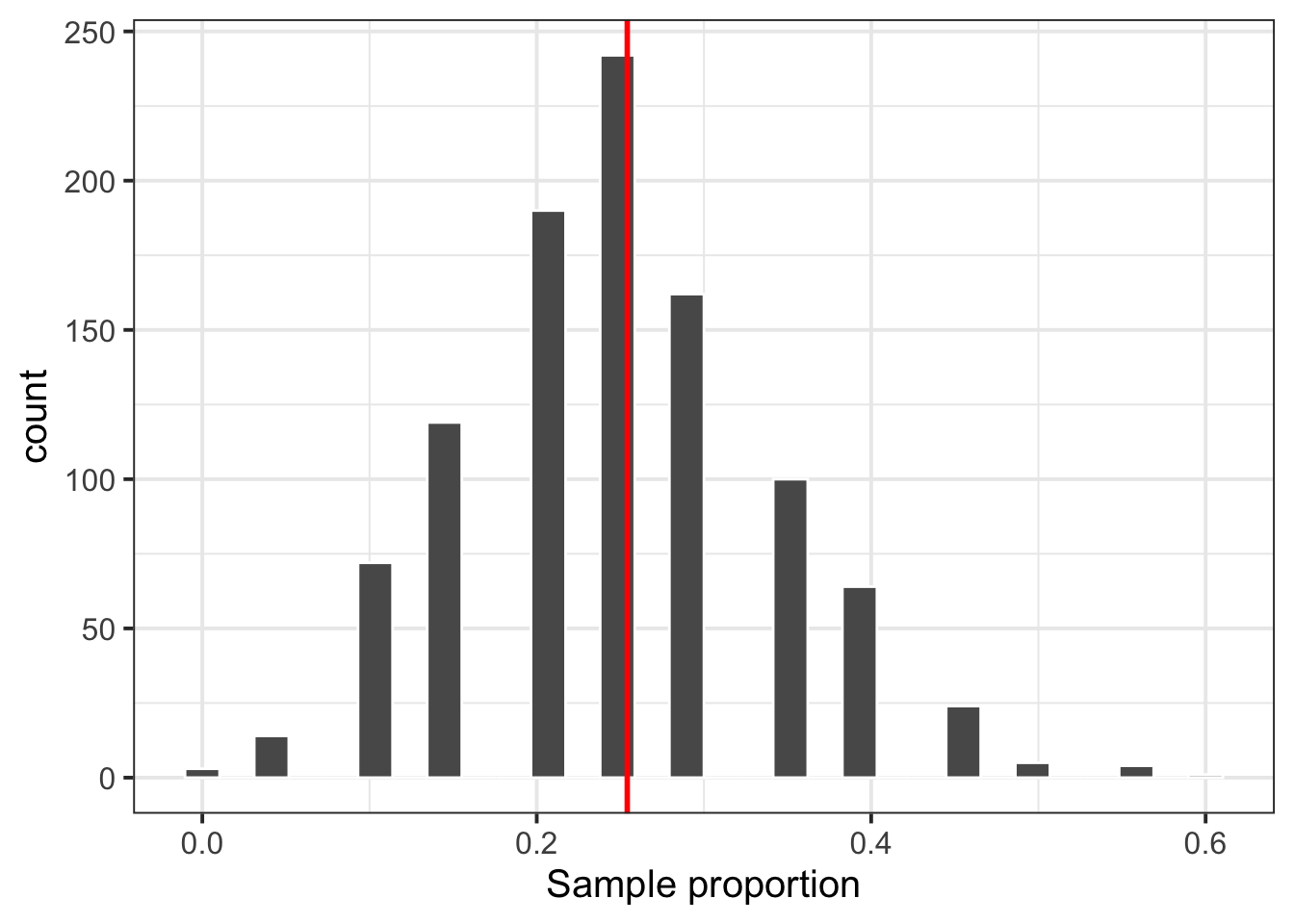

Sampling distribution of the proportion

Compute the sampling distribution of the proportion of comedy movies for samples of size \(n = 20\), using 1000 different samples.

Is it centred at the population value?

sample_props <- hollywood %>%

rep_sample_n(n = 20, samples = 1000) %>%

group_by(sample) %>%

summarise(prop = sum(Genre == 'Comedy') / n())

ggplot(sample_props, aes(x = prop)) +

geom_histogram(color = 'white') +

geom_vline(xintercept = prop_comedy$prop, color = 'red', size = 1) +

labs(x = 'Sample proportion')

Figure 5: Sampling distribution of the proportion for \(n = 20\) with population parameter \(p\) marked by a red vertical line.

Yes, Figure 5 shows that the distribution is almost bell-shaped and centred at the population parameter.

Standard error

Using the replicated samples from the previous question, what is the standard error of the sample proportion of comedy movies?

The standard error of the sample proportion is simply the standard deviation of the distribution of sample proportions for many samples. Since we have already computed the proportions for 1000 samples in the previous question, we just have to compute their variability using the standard deviation:

se_prop <- sample_props %>%

summarise(SE = sd(prop))

se_prop## # A tibble: 1 × 1

## SE

## <dbl>

## 1 0.0930The standard error of the sample proportion for sample size \(n = 20\), based on 1000 samples, is \(SE\) = 0.09.

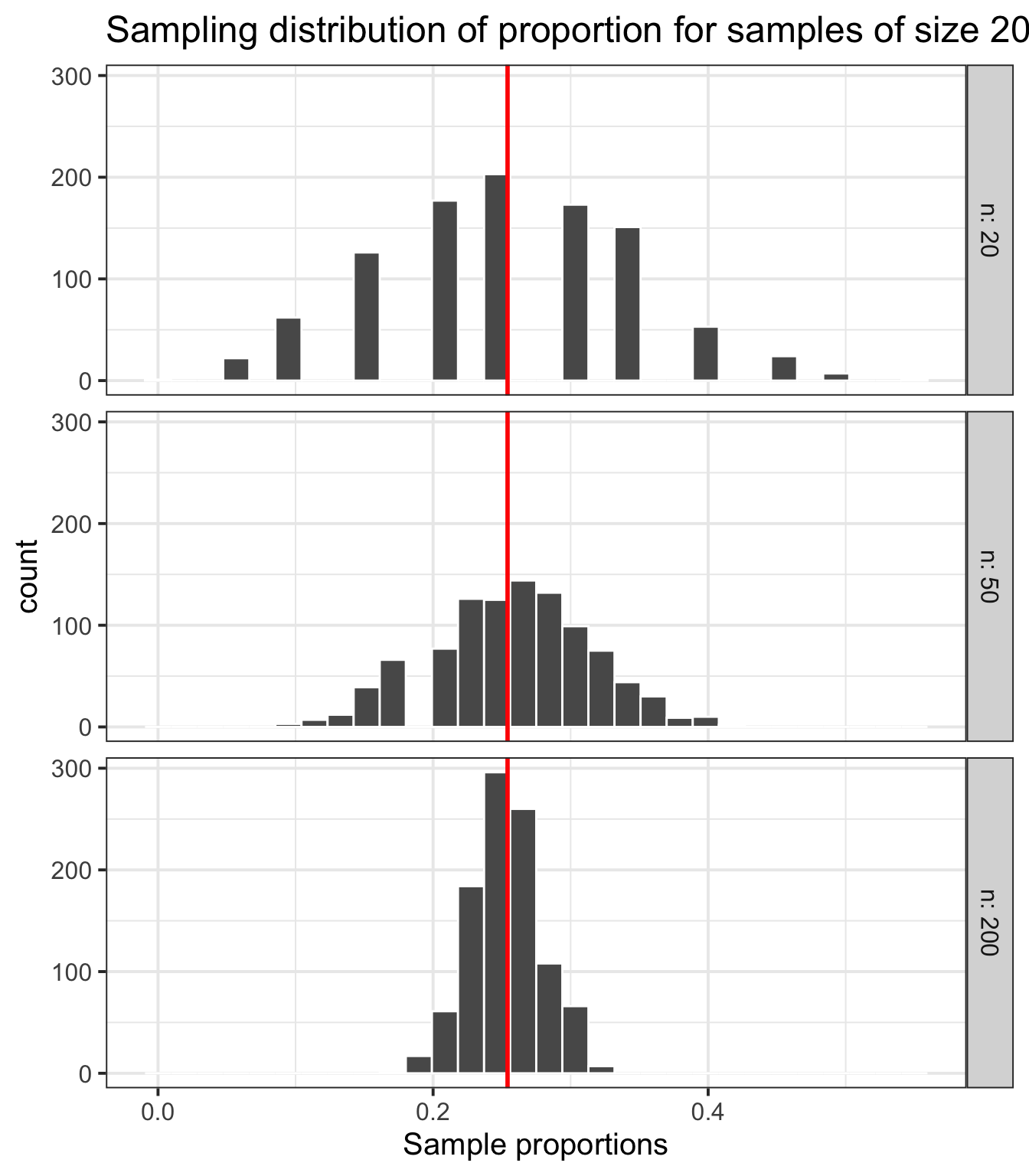

The effect of sample size on the standard error of the sample proportion

How does the sample size affect the standard error of the sample proportion? Compute the sampling distribution for the proportion of comedy movies using 1,000 samples each of size \(n = 20\), \(n = 50\), and \(n = 200\) respectively.

sample_props_20 <- hollywood %>%

rep_sample_n(n = 20, samples = 1000) %>%

group_by(sample) %>%

summarise(prop = mean(Genre == 'Comedy'))

sample_props_50 <- hollywood %>%

rep_sample_n(n = 50, samples = 1000) %>%

group_by(sample) %>%

summarise(prop = mean(Genre == 'Comedy'))

sample_props_200 <- hollywood %>%

rep_sample_n(n = 200, samples = 1000) %>%

group_by(sample) %>%

summarise(prop = mean(Genre == 'Comedy'))

sample_props_vary_n <- bind_rows(

sample_props_20 %>% mutate(n = 20),

sample_props_50 %>% mutate(n = 50),

sample_props_200 %>% mutate(n = 200)

)

ggplot(sample_props_vary_n, aes(x = prop)) +

geom_histogram(color = 'white') +

geom_vline(xintercept = prop_comedy$prop, color = 'red', size = 1) +

facet_grid(n ~ ., labeller = label_both) +

labs(x = 'Sample proportions',

title = "Sampling distribution of proportion for samples of size 20, 50, 200")

Figure 6: Three sampling distributions of the proportion, with population parameter \(p\) marked by a red vertical line.

From Figure 6 we can see that, as the sample size increases, the standard error of the sample proportion decreases. Increasing the sample size, the spread of the statistic values is reduced.

Comparing the budget for action and comedy movies

What is the population average budget (in millions of dollars) allocated for making action vs comedy movies? And the standard deviation?

budgets <- hollywood %>%

filter(Genre == 'Action' | Genre == 'Comedy') %>%

group_by(Genre) %>%

summarise(avg_budget = mean(Budget),

sd_budget = sd(Budget))

budgets## # A tibble: 2 × 3

## Genre avg_budget sd_budget

## <chr> <dbl> <dbl>

## 1 Action 85.9 64.0

## 2 Comedy 36.9 26.7From the above tibble we see that action movies have been allocated a higher budget (\(\mu_{Action} =\) 85.9) than comedy movies (\(\mu_{Comedy} =\) 36.9). At the same time, action movies have a higher variability of budgets around the mean value (\(\sigma_{Action} =\) 64 vs \(\sigma_{Comedy} =\) 26.7). Note that those numbers are population parameters as they summarise the entire population of Hollywood movies rather than just a sample.a

Glossary

- Statistical inference. The process of drawing conclusions about the population from the data collected in a sample.

- Population. The entire collection of units of interest.

- Sample. A subset of the entire population.

- Random sample. A subset of the entire population, picked at random, so that any conclusion made from the sample data can be generalised to the entire population.

- Representation bias. Happens when some units of the population are systematically underrepresented in samples.

- Generalisability. When information from the sample can be used to draw conclusions about the entire population. This is only possible if the sampling procedure leads to samples that are representative of the entire population (such as those drawn at random).

- Parameter. A fixed but typically unknown quantity describing the population.

- Statistic. A quantity computed on a sample.

- Sampling distribution. The distribution of the values that a statistic takes on different samples of the same size and from the same population.

- Standard error. The standard error of a statistic is the standard deviation of the sampling distribution of the statistic.

You might remember it from the cartoon Wile E. Coyote and the Road Runner.↩︎