Correlations

Univariate Statistics and Methodology using R

Psychology, PPLS

University of Edinburgh

Correlation

Blood Alcohol and Reaction Time

- data from 100 drivers

- are blood alcohol and RT (linearly) related?

A Simplified Case

- does \(y\) vary linearly with \(x\)?

- equivalent to asking “does \(y\) differ from its mean in the same way that \(x\) does?”

Covariance

- it’s likely the variables are related if observations differ proportionately from their means

Covariance

variance

\[ s^2 = \frac{\sum{(x-\bar{x})^2}}{n} = \frac{\sum{(x-\bar{x})(x-\bar{x})}}{n} \]

covariance

\[ \textrm{cov}(x,y) = \frac{\sum{\color{blue}{(x-\bar{x})}\color{red}{(y-\bar{y})}}}{n} \]

Covariance

| \(\color{blue}{x-\bar{x}}\) | \(\color{red}{y-\bar{y}}\) | \(\color{blue}{(x-\bar{x})}\color{red}{(y-\bar{y})}\) |

|---|---|---|

| -0.3 | 1.66 | -0.5 |

| 0.81 | 2.21 | 1.79 |

| -1.75 | -2.85 | 4.99 |

| -0.14 | -3.58 | 0.49 |

| 1.37 | 2.56 | 3.52 |

| 10.29 |

\[\textrm{cov}(x,y) = \frac{\sum{(x-\bar{x})(y-\bar{y})}}{n} = \frac{10.29}{5} \simeq \color{red}{2.06}\]

The Problem With Covariance

miles

| \(x-\bar{x}\) | \(y-\bar{y}\) | \((x-\bar{x})(y-\bar{y})\) |

|---|---|---|

| -0.3 | 1.66 | -0.5 |

| 0.81 | 2.21 | 1.79 |

| -1.75 | -2.85 | 4.99 |

| -0.14 | -3.58 | 0.49 |

| 1.37 | 2.56 | 3.52 |

| 10.29 |

\[\textrm{cov}(x,y)=\frac{10.29}{5}\simeq 2.06\]

kilometres

| \(x-\bar{x}\) | \(y-\bar{y}\) | \((x-\bar{x})(y-\bar{y})\) |

|---|---|---|

| -0.48 | 2.68 | -1.29 |

| 1.3 | 3.56 | 4.64 |

| -2.81 | -4.59 | 12.91 |

| -0.22 | -5.77 | 1.27 |

| 2.21 | 4.12 | 9.12 |

| 26.65 |

\[ \textrm{cov}(x,y)=\frac{26.65}{5}\simeq 5.33 \]

Correlation Coefficient (\(r\))

\[r = \frac{\textrm{covariance}(x,y)}{\textrm{standard deviation}(x)\cdot\textrm{standard deviation}(y)}\]

\[r=\frac{\frac{\sum{(x-\bar{x})(y-\bar{y})}}{n}}{\sqrt{\frac{\sum{(x-\bar{x})^2}}{n}}\sqrt{\frac{\sum{(y-\bar{y})^2}}{n}}}\]

\[r=\frac{\frac{\sum{(x-\bar{x})(y-\bar{y})}}{\color{red}{n}}}{\sqrt{\frac{\sum{(x-\bar{x})^2}}{\color{red}{n}}}\sqrt{\frac{\sum{(y-\bar{y})^2}}{\color{red}{n}}}}\]

\[r=\frac{\sum{(x-\bar{x})(y-\bar{y})}}{\sqrt{\sum{(x-\bar{x})^2}}\sqrt{\sum{(y-\bar{y})^2}}}\]

Correlation Coefficient

measure of how related two variables are

\(-1 \le r \le 1\) (\(\pm 1\) = perfect fit; \(0\) = no fit)

\[ r=0.4648 \]

\[ r=-0.4648 \]

What Does the Value of r Mean?

Significance

Significance of a Correlation

\[ r = 0.4648 \]

Significance of a Correlation

we can measure a correlation using \(r\)

we want to know whether that correlation is significant

- i.e., whether the probability of finding it by chance is low enough

cardinal rule in NHST: compare everything to chance

let’s investigate…

Random Correlations

pick some pairs of numbers at random, return correlation

arbitrarily, I’ve picked numbers uniformly distributed between 0 and 100

Random Correlations

pick some pairs of numbers at random, return correlation

- repeat 1000 times

Random Correlations

Calculating Probability

- distribution of random \(r\)s is \(t\) distribution, with \(n-2\) df

\[t= r\sqrt{\frac{n-2}{1-r^2}}\]

- makes it “easy” to calculate probability of getting \(\ge{}r\) for sample size \(n\) by chance

Calculating Probability

calculate \(t\)

[1] 3.637Correlation++

Back on the Road

\[r= 0.4648, p = 0.0007\]

reaction time is positively associated with blood alcohol

not a very complete picture

how much does alcohol affect RT?

The Only Equation You Will Ever Need

\[\color{red}{\textrm{outcome}_i} = (\textrm{model})_i + \textrm{error}_i\]

The Only Equation You Will Ever Need

\[\color{red}{\textrm{outcome}_i} = \color{blue}{(\textrm{model})_i} + \textrm{error}_i\]

The Only Equation You Will Ever Need

\[\color{red}{\textrm{outcome}_i} = \color{blue}{(\textrm{model})_i} + \textrm{error}_i\]

The Only Equation You Will Ever Need

\[\color{red}{\textrm{outcome}_i} = \color{blue}{(\textrm{model})_i} + \textrm{error}_i\]

The Aim of the Game

\[\color{red}{\textrm{outcome}_i} = \color{blue}{(\textrm{model})_i} + \textrm{error}_i\]

maximise the explanatory worth of the model

minimise the amount of unexplained error

explain more than one outcome!

Many Outcomes

so far, have been talking about the ith observation

we want to generalise (“for any i”)

\[\color{red}{\textrm{outcomes}}=\color{blue}{\textrm{(model)}}+\textrm{errors}\]

We need to make assumptions

- model is linear

- errors are from a normal distribution

A Linear Model

- defined by two properties

- height of the line (intercept)

- slope of the line

A Linear Model

\[\color{red}{\textrm{outcome}_i} = \color{blue}{(\textrm{model})_i} + \textrm{error}_i\] \[\color{red}{y_i} = \color{blue}{\textrm{intercept}\cdot{}1+\textrm{slope}\cdot{}x_i}+\epsilon_i\]

\[\color{red}{y_i} = \color{blue}{b_0 \cdot{} 1 + b_1 \cdot{} x_i} + \epsilon_i\] so the linear model itself is…

\[\hat{y}_i = \color{blue}{b_0 \cdot{} 1 + b_1 \cdot{} x_i}\]

\[\color{blue}{b_0=5}, \color{blue}{b_1=2}\] \[\color{orange}{x_i=1.2},\color{red}{y_i=9.9}\] \[\hat{y}_i=7.4\]

A Linear Model

\[\hat{y}_i = \color{blue}{b_0 \cdot{}}\color{orange}{1} \color{blue}{+b_1 \cdot{}} \color{orange}{x_i}\]

values of the linear model (coefficients)

values we provide (inputs)

- maps directly to R “formula” notation

A Linear Model

\[\color{red}{\textrm{outcome}_i} = \color{blue}{(\textrm{model})_i} + \textrm{error}_i\] \[\color{red}{y_i} = \color{blue}{\textrm{intercept}}\cdot{}\color{orange}{1}+\color{blue}{\textrm{slope}}\cdot{}\color{orange}{x_i}+\epsilon_i\] \[\color{red}{y_i} = \color{blue}{b_0} \cdot{} \color{orange}{1} + \color{blue}{b_1} \cdot{} \color{orange}{x_i} + \epsilon_i\] so the linear model itself is…

\[\hat{y}_i = \color{blue}{b_0} \cdot{} \color{orange}{1} + \color{blue}{b_1} \cdot{} \color{orange}{x_i}\]

\[\hat{y}_i = \color{blue}{b_0} + \color{blue}{b_1} \cdot{} \color{orange}{x_i}\]

Back on the Road 2

Linear Model Output

Call:

lm(formula = RT ~ BloodAlc, data = ourDat)

Residuals:

Min 1Q Median 3Q Max

-115.92 -40.42 1.05 42.93 126.64

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 321.24 91.05 3.53 0.00093 ***

BloodAlc 32.28 8.88 3.64 0.00067 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 55.8 on 48 degrees of freedom

Multiple R-squared: 0.216, Adjusted R-squared: 0.2

F-statistic: 13.2 on 1 and 48 DF, p-value: 0.000673Possibly Back on the Road

for every extra 0.01% blood alcohol, reaction time slows down by around 32 ms

- remember that one unit is 0.01%, because we multiplied by 100

Significance

The Null Hypotheses

b0 won’t be significantly different from zero

- not (always) interesting

b1 won’t be significantly different from zero

- “knowing x doesn’t tell you anything about y”

Let’s Simulate!

Many Regressions

Significance

- we’ve already seen something in the model summary

...

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 321.24 91.05 3.53 0.00093 ***

BloodAlc 32.28 8.88 3.64 0.00067 ***

...the logic is the same as for \(t\) tests

\(t\) value is \(\frac{\textrm{estimate}}{\textrm{standard error}}\)

standard errors are calculated from model like for \(t\)-tests

Degrees of Freedom

Degrees of Freedom

Degrees of Freedom

we subtract 2 df because we “know” two things

intercept (b0)

slope (b1)

the remaining df are the residual degrees of freedom

...

BloodAlc 32.28 8.88 3.64 0.00067 ***

...

F-statistic: 13.2 on 1 and 48 DF, p-value: 0.000673reaction time slowed by 32.3 ms for every additional 0.01% blood alcohol by volume (t(48)=3.64, p=.0007)

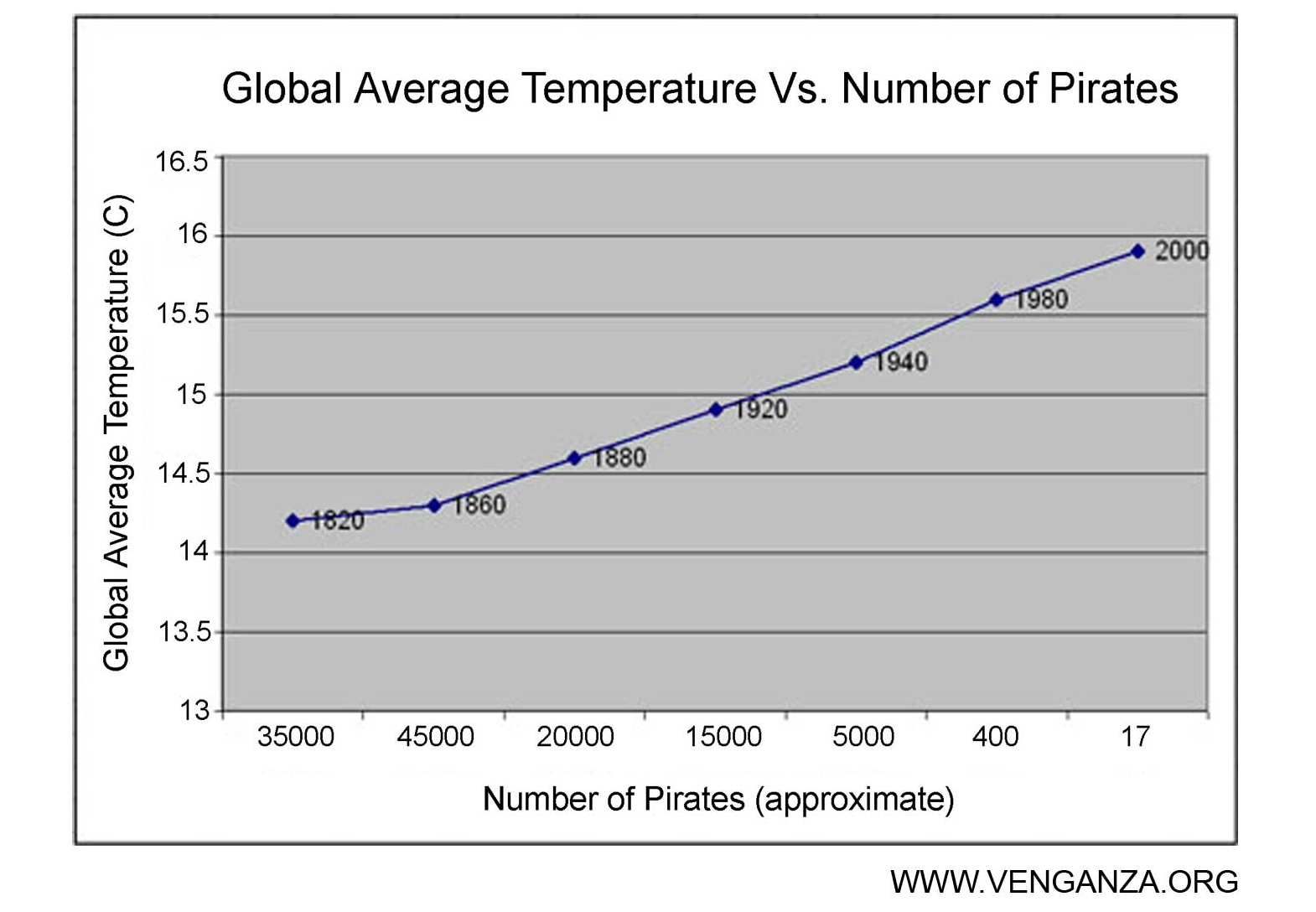

Pirates and Global Warming