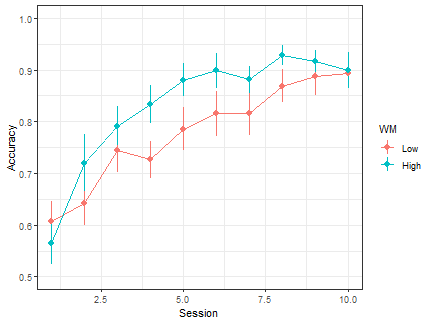

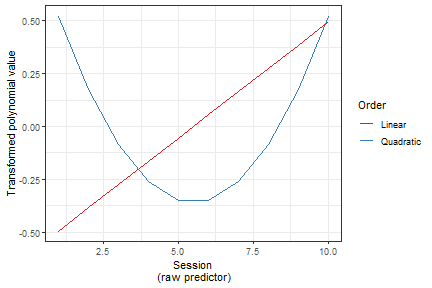

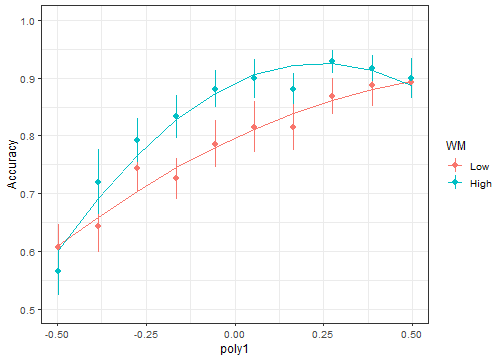

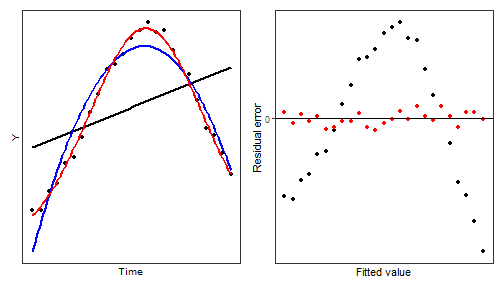

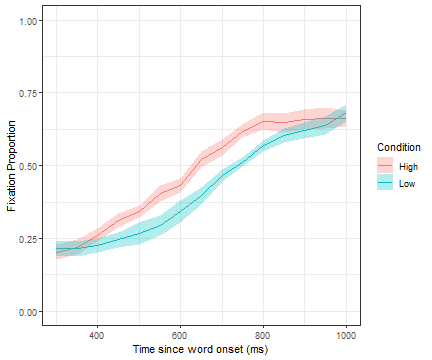

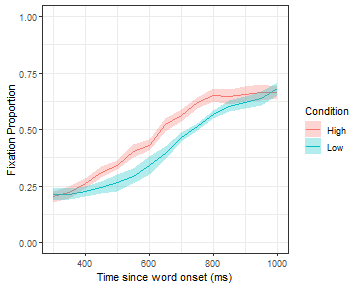

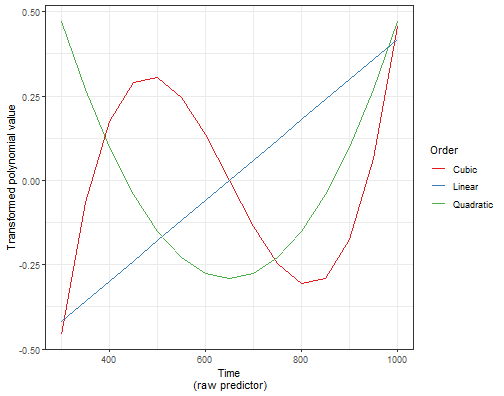

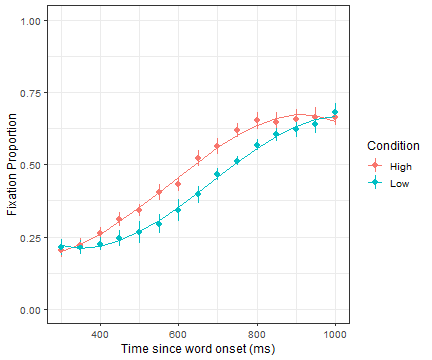

class: center, middle, inverse, title-slide .title[ # <b>Week 3: Longitudinal Data Analysis using Multilevel Modeling - Nonlinear Change</b> ] .subtitle[ ## Multivariate Statistics and Methodology using R (MSMR)<br><br> ] .author[ ### Dan Mirman ] .institute[ ### Department of Psychology<br>The University of Edinburgh ] --- # Longitudinal data are a natural application domain for MLM * Longitudinal measurements are *nested* within subjects (by definition) * Longitudinal measurements are related by a continuous variable, spacing can be uneven across participants, and data can be missing + These are problems rmANOVA * **Trajectories of longitudinal change can be nonlinear** -- This application of MLM is sometimes called "Growth Curve Analysis" (GCA) --- # Example: Word Learning Effect of working memory (high vs low) on L2 vocabulary acquisition (word learing) ```r load("./data/WordLearnEx.rda") ggplot(WordLearnEx, aes(Session, Accuracy, color=WM)) + stat_summary(fun.data = mean_se, geom="pointrange") + stat_summary(fun = mean, geom="line") + theme_bw() + expand_limits(y=c(0.5,1)) ``` <!-- --> --- # Linear model ```r m1 <- lmer(Accuracy ~ Session*WM + (Session | Subject), data = WordLearnEx, REML=F) sjPlot::tab_model(m1, show.re.var=F, show.icc=F, show.r2=F) ``` <table style="border-collapse:collapse; border:none;"> <tr> <th style="border-top: double; text-align:center; font-style:normal; font-weight:bold; padding:0.2cm; text-align:left; "> </th> <th colspan="3" style="border-top: double; text-align:center; font-style:normal; font-weight:bold; padding:0.2cm; ">Accuracy</th> </tr> <tr> <td style=" text-align:center; border-bottom:1px solid; font-style:italic; font-weight:normal; text-align:left; ">Predictors</td> <td style=" text-align:center; border-bottom:1px solid; font-style:italic; font-weight:normal; ">Estimates</td> <td style=" text-align:center; border-bottom:1px solid; font-style:italic; font-weight:normal; ">CI</td> <td style=" text-align:center; border-bottom:1px solid; font-style:italic; font-weight:normal; ">p</td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; ">(Intercept)</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.61</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.54 – 0.67</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; "><strong><0.001</strong></td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; ">Session</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.03</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.02 – 0.04</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; "><strong><0.001</strong></td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; ">WM [High]</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.05</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">-0.04 – 0.15</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.277</td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; ">Session × WM [High]</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.00</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">-0.01 – 0.01</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.984</td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; padding-top:0.1cm; padding-bottom:0.1cm;">N <sub>Subject</sub></td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="3">56</td> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; padding-top:0.1cm; padding-bottom:0.1cm; border-top:1px solid;">Observations</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left; border-top:1px solid;" colspan="3">560</td> </tr> </table> -- Groups started and ended at the same level, linear model can't detect the subtle difference in learning rate. Need to model the curvature... --- # Quadratic model ```r WordLearnEx$Session2 <- WordLearnEx$Session^2 m2 <- lmer(Accuracy ~ (Session+Session2)*WM + (Session+Session2 | Subject), data = WordLearnEx, REML=F) sjPlot::tab_model(m2, show.re.var = F, show.r2=F) ``` <table style="border-collapse:collapse; border:none;"> <tr> <th style="border-top: double; text-align:center; font-style:normal; font-weight:bold; padding:0.2cm; text-align:left; "> </th> <th colspan="3" style="border-top: double; text-align:center; font-style:normal; font-weight:bold; padding:0.2cm; ">Accuracy</th> </tr> <tr> <td style=" text-align:center; border-bottom:1px solid; font-style:italic; font-weight:normal; text-align:left; ">Predictors</td> <td style=" text-align:center; border-bottom:1px solid; font-style:italic; font-weight:normal; ">Estimates</td> <td style=" text-align:center; border-bottom:1px solid; font-style:italic; font-weight:normal; ">CI</td> <td style=" text-align:center; border-bottom:1px solid; font-style:italic; font-weight:normal; ">p</td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; ">(Intercept)</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.56</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.46 – 0.65</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; "><strong><0.001</strong></td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; ">Session</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.06</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.02 – 0.09</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; "><strong>0.001</strong></td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; ">Session2</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">-0.00</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">-0.01 – 0.00</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.126</td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; ">WM [High]</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">-0.06</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">-0.19 – 0.08</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.389</td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; ">Session × WM [High]</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.06</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.01 – 0.10</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; "><strong>0.023</strong></td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; ">Session2 × WM [High]</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">-0.01</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">-0.01 – -0.00</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; "><strong>0.013</strong></td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; padding-top:0.1cm; padding-bottom:0.1cm;">N <sub>Subject</sub></td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="3">56</td> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; padding-top:0.1cm; padding-bottom:0.1cm; border-top:1px solid;">Observations</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left; border-top:1px solid;" colspan="3">560</td> </tr> </table> --- # Quadratic model Linear term became significant after we added quadratic term? -- ```r performance::check_collinearity(m2) ``` ``` ## Model has interaction terms. VIFs might be inflated. ## You may check multicollinearity among predictors of a model without ## interaction terms. ``` ``` ## # Check for Multicollinearity ## ## Moderate Correlation ## ## Term VIF VIF 95% CI Increased SE Tolerance Tolerance 95% CI ## WM 5.28 [ 4.57, 6.14] 2.30 0.19 [0.16, 0.22] ## ## High Correlation ## ## Term VIF VIF 95% CI Increased SE Tolerance Tolerance 95% CI ## Session 36.49 [31.08, 42.88] 6.04 0.03 [0.02, 0.03] ## Session2 36.49 [31.08, 42.88] 6.04 0.03 [0.02, 0.03] ## Session:WM 66.34 [56.43, 78.01] 8.14 0.02 [0.01, 0.02] ## Session2:WM 50.07 [42.61, 58.86] 7.08 0.02 [0.02, 0.02] ``` --- # Polynomial collinearity <img src="./figs/orth-poly.png" width="40%" /> .pull-left[ ### Natural Polynomials * Correlated time terms * Very different scales ] .pull-right[ ### Orthogonal Polynomials * Uncorrelated time terms * A version on variable centering * Same scale * Need to specify range and order ] --- # Interpreting orthogonal polynomial terms Intercept ( `\(\beta_0\)` ): Overall average <img src="./figs/VisSearchOrth.png" width="30%" /> --- # Interpreting orthogonal polynomial terms .pull-left[ * Intercept ( `\(\beta_0\)` ): Overall average * Linear ( `\(\beta_1\)` ): Overall slope * Quadratic ( `\(\beta_2\)` ): Centered rise and fall rate * Cubic, Quartic, ... ( `\(\beta_3, \beta_4, ...\)` ): Inflection steepness ] .pull-right[ <img src="./figs/polys-scale.png" width="100%" /> ] --- # Back to the example Need to create an orthogonal polynomial version of `Session` Helper function `code_poly` does this ```r source("code_poly.R") # or from online version: source("https://uoepsy.github.io/msmr/functions/code_poly.R") WordLearnEx.gca <- code_poly(WordLearnEx, predictor="Session", poly.order=2) ``` <!-- --> --- # New orth poly variables added to data frame ```r summary(WordLearnEx.gca) ``` ``` ## Subject WM Session Accuracy Session2 ## 244 : 10 Low :280 Min. : 1.0 Min. :0.000 Min. : 1.0 ## 253 : 10 High:280 1st Qu.: 3.0 1st Qu.:0.667 1st Qu.: 9.0 ## 302 : 10 Median : 5.5 Median :0.833 Median : 30.5 ## 303 : 10 Mean : 5.5 Mean :0.805 Mean : 38.5 ## 305 : 10 3rd Qu.: 8.0 3rd Qu.:1.000 3rd Qu.: 64.0 ## 306 : 10 Max. :10.0 Max. :1.000 Max. :100.0 ## (Other):500 ## Session.Index poly1 poly2 ## Min. : 1.0 Min. :-0.495 Min. :-0.348 ## 1st Qu.: 3.0 1st Qu.:-0.275 1st Qu.:-0.261 ## Median : 5.5 Median : 0.000 Median :-0.087 ## Mean : 5.5 Mean : 0.000 Mean : 0.000 ## 3rd Qu.: 8.0 3rd Qu.: 0.275 3rd Qu.: 0.174 ## Max. :10.0 Max. : 0.495 Max. : 0.522 ## ``` --- # Fit model with orthogonal predictors ```r m2.orth <- lmer(Accuracy ~ (poly1+poly2)*WM + (poly1+poly2 | Subject), data = WordLearnEx.gca, REML=F) ``` <table style="border-collapse:collapse; border:none;"> <tr> <th style="border-top: double; text-align:center; font-style:normal; font-weight:bold; padding:0.2cm; text-align:left; "> </th> <th colspan="3" style="border-top: double; text-align:center; font-style:normal; font-weight:bold; padding:0.2cm; ">Accuracy</th> </tr> <tr> <td style=" text-align:center; border-bottom:1px solid; font-style:italic; font-weight:normal; text-align:left; ">Predictors</td> <td style=" text-align:center; border-bottom:1px solid; font-style:italic; font-weight:normal; ">Estimates</td> <td style=" text-align:center; border-bottom:1px solid; font-style:italic; font-weight:normal; ">CI</td> <td style=" text-align:center; border-bottom:1px solid; font-style:italic; font-weight:normal; ">p</td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; ">(Intercept)</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.78</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.74 – 0.82</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; "><strong><0.001</strong></td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; ">poly1</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.29</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.21 – 0.36</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; "><strong><0.001</strong></td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; ">poly2</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">-0.05</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">-0.12 – 0.01</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.126</td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; ">WM [High]</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.05</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">-0.01 – 0.11</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.085</td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; ">poly1 × WM [High]</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.00</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">-0.10 – 0.11</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">0.984</td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; ">poly2 × WM [High]</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">-0.12</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; ">-0.21 – -0.02</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:center; "><strong>0.013</strong></td> </tr> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; padding-top:0.1cm; padding-bottom:0.1cm;">N <sub>Subject</sub></td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left;" colspan="3">56</td> <tr> <td style=" padding:0.2cm; text-align:left; vertical-align:top; text-align:left; padding-top:0.1cm; padding-bottom:0.1cm; border-top:1px solid;">Observations</td> <td style=" padding:0.2cm; text-align:left; vertical-align:top; padding-top:0.1cm; padding-bottom:0.1cm; text-align:left; border-top:1px solid;" colspan="3">560</td> </tr> </table> --- # Plot model fit ```r ggplot(augment(m2.orth), aes(poly1, Accuracy, color=WM)) + stat_summary(fun.data = mean_se, geom="pointrange") + stat_summary(aes(y=.fitted), fun = mean, geom="line") + theme_bw(base_size=12) + expand_limits(y=c(0.5,1)) ``` <!-- --> --- # What about more complex curve shapes? ### Function must be adequate to data <!-- --> -- Use `broom.mixed::augment()` to make a quick plot of residuals vs. fitted: ```r ggplot(augment(m), aes(.fitted, .resid)) + geom_point() ``` --- # Using higher-order polynomials * <span style="color:blue">Can model any curve shape</span> * But not practical for very complex curves: Use GAMMs or another modeling framework * <span style="color:blue">Easy to implement in MLM framework (dynamically consistent, aka "collapsible")</span> * <span style="color:red">Bad at capturing asymptotic behaviour</span> * Try to avoid long flat sections * Don't extrapolate -- **How to choose polynomial order?** * Curve shape * Statistical: include only terms that statistically improve model fit * Theoretical: include only terms that are predicted to matter --- # Example: Target fixation during spoken word-to-picture matching (VWP) .pull-left[ ```r load("./data/TargetFix.rda") ``` * More complex curve shape * Within-subject `Condition` ] .pull-right[ <!-- --> ] --- # Random effects <img src="./figs/RandomEffectsLinDemo.png" width="75%" /> -- Extend to polynomial terms: individual differences in "slope" (curvature) of quadratic, cubic, etc. components. -- **Keep it maximal**: Incomplete random effects can inflate false alarms, but full random effects can produce convergence problems. If/when need to simplify random effects: consider which random effects are most expendable; that is, which individual differences are least important to your research questions or inferences. --- # Target fixation during spoken word-to-picure matching (VWP) <!-- --> ``` ## Subject Time timeBin Condition meanFix ## 708 : 30 Min. : 300 Min. : 1 High:150 Min. :0.0286 ## 712 : 30 1st Qu.: 450 1st Qu.: 4 Low :150 1st Qu.:0.2778 ## 715 : 30 Median : 650 Median : 8 Median :0.4558 ## 720 : 30 Mean : 650 Mean : 8 Mean :0.4483 ## 722 : 30 3rd Qu.: 850 3rd Qu.:12 3rd Qu.:0.6111 ## 725 : 30 Max. :1000 Max. :15 Max. :0.8286 ## (Other):120 ## sumFix N ## Min. : 1.0 Min. :33.0 ## 1st Qu.:10.0 1st Qu.:35.8 ## Median :16.0 Median :36.0 ## Mean :15.9 Mean :35.5 ## 3rd Qu.:21.2 3rd Qu.:36.0 ## Max. :29.0 Max. :36.0 ## ``` --- # Prep for analysis Create a 3rd-order orthogonal polynomial ```r TargetFix.gca <- code_poly(TargetFix, predictor="Time", poly.order=3) ``` <!-- --> --- # Prep for analysis Create a 3rd-order orthogonal polynomial ```r str(TargetFix.gca) ``` ``` ## 'data.frame': 300 obs. of 11 variables: ## $ Subject : Factor w/ 10 levels "708","712","715",..: 1 1 1 1 1 1 1 1 1 1 ... ## $ Time : num 300 300 350 350 400 400 450 450 500 500 ... ## $ timeBin : num 1 1 2 2 3 3 4 4 5 5 ... ## $ Condition : Factor w/ 2 levels "High","Low": 1 2 1 2 1 2 1 2 1 2 ... ## $ meanFix : num 0.1944 0.0286 0.25 0.1143 0.2778 ... ## $ sumFix : num 7 1 9 4 10 5 13 5 14 6 ... ## $ N : int 36 35 36 35 36 35 36 35 36 35 ... ## $ Time.Index: num 1 1 2 2 3 3 4 4 5 5 ... ## $ poly1 : num -0.418 -0.418 -0.359 -0.359 -0.299 ... ## $ poly2 : num 0.4723 0.4723 0.2699 0.2699 0.0986 ... ## $ poly3 : num -0.4563 -0.4563 -0.0652 -0.0652 0.1755 ... ``` --- # Fit full GCA model ```r m.full <- lmer(meanFix ~ (poly1+poly2+poly3)*Condition + #fixed effects (poly1+poly2+poly3 | Subject) + #random effects of Subject (poly1+poly2+poly3 | Subject:Condition), #random effects of Subj by Cond data=TargetFix.gca, REML=F) ``` ``` ## Warning in checkConv(attr(opt, "derivs"), opt$par, ctrl = control$checkConv, : ## Model failed to converge with max|grad| = 0.00280522 (tol = 0.002, component 1) ``` ```r #summary(m.full) coef(summary(m.full)) ``` ``` ## Estimate Std. Error df t value Pr(>|t|) ## (Intercept) 0.4773228 0.01386 19.86 34.450610 3.462e-19 ## poly1 0.6385604 0.05993 17.29 10.655308 5.097e-09 ## poly2 -0.1095979 0.03849 19.98 -2.847254 9.964e-03 ## poly3 -0.0932612 0.02330 18.08 -4.002397 8.291e-04 ## ConditionLow -0.0581122 0.01879 16.18 -3.091999 6.926e-03 ## poly1:ConditionLow 0.0003188 0.06580 15.70 0.004845 9.962e-01 ## poly2:ConditionLow 0.1635455 0.05393 19.06 3.032514 6.831e-03 ## poly3:ConditionLow -0.0020869 0.02705 16.67 -0.077160 9.394e-01 ``` --- # Plot model fit ```r ggplot(TargetFix.gca, aes(Time, meanFix, color=Condition)) + stat_summary(fun.data=mean_se, geom="pointrange") + stat_summary(aes(y=fitted(m.full)), fun=mean, geom="line") + theme_bw(base_size=12) + expand_limits(y=c(0,1)) + labs(y="Fixation Proportion", x="Time since word onset (ms)") ``` <!-- --> --- # The random effects .pull-left[ ```r head(ranef(m.full)$"Subject") ``` ``` ## (Intercept) poly1 poly2 poly3 ## 708 -0.0001155 0.01084 -0.0035503 -0.004907 ## 712 0.0110129 0.10686 -0.0094179 -0.036523 ## 715 0.0113842 0.11471 -0.0110318 -0.039634 ## 720 -0.0020541 -0.01293 -0.0003802 0.003715 ## 722 0.0139054 0.16216 -0.0201988 -0.058159 ## 725 -0.0179260 -0.20721 0.0254773 0.074161 ``` ] .pull-right[ ```r head(ranef(m.full)$"Subject:Condition") ``` ``` ## (Intercept) poly1 poly2 poly3 ## 708:High 0.012238 -0.13120 -0.12985 0.01515 ## 708:Low -0.061258 0.17041 0.06226 0.01230 ## 712:High 0.021239 0.08296 0.02812 0.02003 ## 712:Low -0.014447 0.04490 0.03262 -0.01731 ## 715:High 0.012355 0.05981 0.05525 -0.02501 ## 715:Low -0.008693 0.10609 0.13038 -0.03950 ``` ] --- # The random effects ```r VarCorr(m.full) ``` ``` ## Groups Name Std.Dev. Corr ## Subject:Condition (Intercept) 0.0405 ## poly1 0.1405 -0.43 ## poly2 0.1124 -0.33 0.72 ## poly3 0.0417 0.13 -0.49 -0.43 ## Subject (Intercept) 0.0124 ## poly1 0.1194 0.91 ## poly2 0.0166 -0.43 -0.76 ## poly3 0.0421 -0.86 -0.99 0.83 ## Residual 0.0438 ``` What is being estimated? * Random variance and covariance * Unit-level random effects (but constrained to have mean = 0) -- **This is why df for parameter estimates are poorly defined in MLM** --- # Alternative random effects structure ```r m.left <- lmer(meanFix ~ (poly1+poly2+poly3)*Condition + #fixed effects ((poly1+poly2+poly3)*Condition | Subject), #random effects data=TargetFix.gca, REML=F) ``` ``` ## boundary (singular) fit: see help('isSingular') ``` ```r coef(summary(m.left)) ``` ``` ## Estimate Std. Error df t value Pr(>|t|) ## (Intercept) 0.4773228 0.01679 9.991 28.425276 6.858e-11 ## poly1 0.6385604 0.05146 10.005 12.409768 2.119e-07 ## poly2 -0.1095979 0.03718 9.993 -2.947552 1.461e-02 ## poly3 -0.0932612 0.02062 9.997 -4.523792 1.103e-03 ## ConditionLow -0.0581122 0.02111 9.993 -2.753071 2.038e-02 ## poly1:ConditionLow 0.0003188 0.07479 9.993 0.004263 9.967e-01 ## poly2:ConditionLow 0.1635455 0.06274 9.998 2.606654 2.620e-02 ## poly3:ConditionLow -0.0020869 0.03334 10.669 -0.062597 9.512e-01 ``` --- # Alternative random effects structure ```r # str(ranef(m.left)) # head(ranef(m.left)$"Subject") VarCorr(m.left) ``` ``` ## Groups Name Std.Dev. Corr ## Subject (Intercept) 0.0519 ## poly1 0.1569 0.18 ## poly2 0.1095 -0.29 0.03 ## poly3 0.0490 -0.28 -0.37 0.63 ## ConditionLow 0.0649 -0.89 -0.13 0.49 0.34 ## poly1:ConditionLow 0.2286 0.38 -0.46 -0.62 0.10 -0.56 ## poly2:ConditionLow 0.1889 0.20 0.08 -0.81 -0.22 -0.43 0.74 ## poly3:ConditionLow 0.0862 -0.08 -0.40 -0.07 -0.47 0.06 -0.27 -0.43 ## Residual 0.0430 ``` This random effect structure makes fewer assumptions: * Allows unequal variances across conditions * Allows more flexible covariance structure between random effect terms --- # Alternative random effects structure requires more parameters <img src="./figs/RandomParams.png" width="50%" /> --- # Convergence problems <span style="color:red">`Model failed to converge with max|grad| = 0.00280522 (tol = 0.002, component 1)`</span> -- ## Consider simplifying random effects **Remove random effects of higher-order terms** ```r Outcome ~ (poly1+poly2+poly3)*Condition + (poly1+poly2+poly3 | Subject) Outcome ~ (poly1+poly2+poly3)*Condition + (poly1+poly2 | Subject) ``` -- **Remove correlation between random effects** ```r Outcome ~ (poly1+poly2+poly3)*Condition + (1 | Subject) + (0+poly1 | Subject) + (0+poly2 | Subject) + (0+poly3 | Subject) ``` Alternatively: double-pipe ```r Outcome ~ (poly1+poly2+poly3)*Condition + (poly1+poly2+poly3 || Subject) ``` --- # Key points .pull-left[ **Modeling non-linear change over time** * Choose an adequate functional form + Polynomials are mathematically nice, but not practical for very complex curve shapes and be careful with extrapolation * Random effect structure + Keep it maximal, but be ready to deal with convergence problems + For within-subject variables: "left" side of pipe (random slopes) is more flexible, but requires more data to estimate; "right" side of pipe (nested) is a good alternative ] .pull-right[ <img src="./figs/max_grad_tombstone.jpg" /> ] --- # Brief break ## Next up: Live R Fixations are binary - a participant is either looking at the target object or they are not - so let's revisit the target fixation example, this time using logistic MLM to analyse the data.