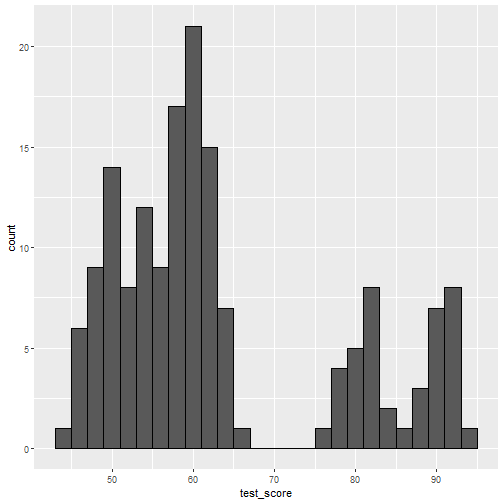

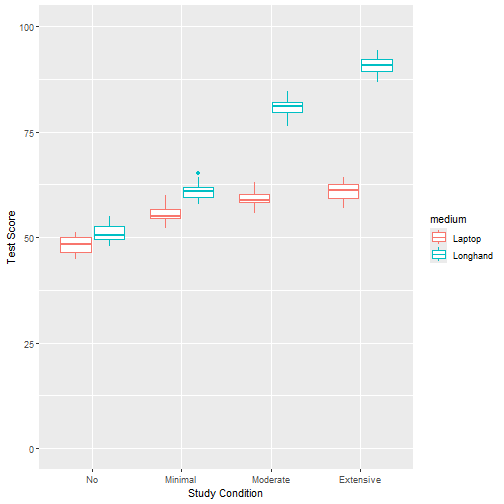

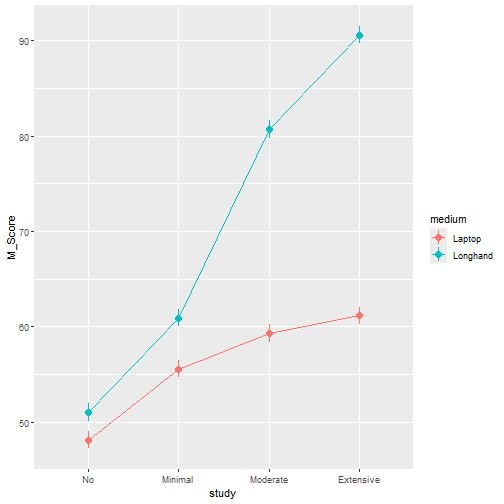

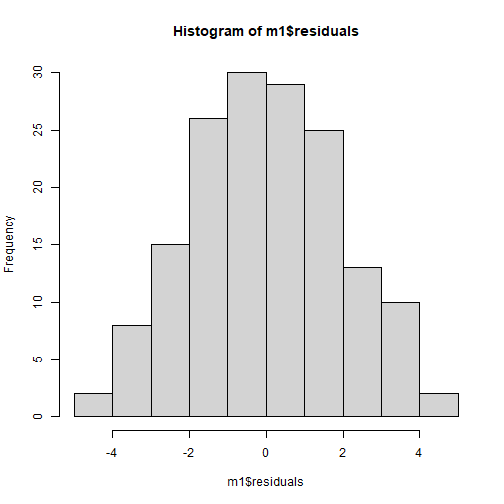

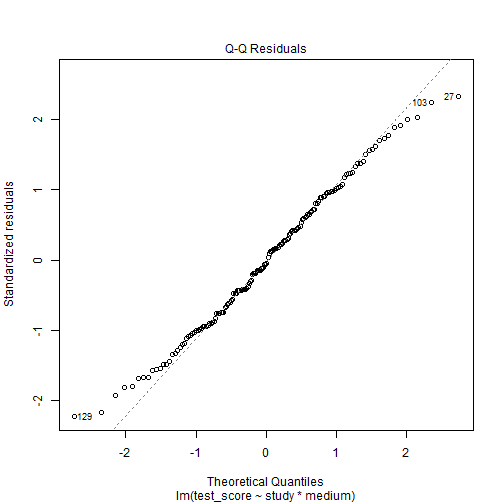

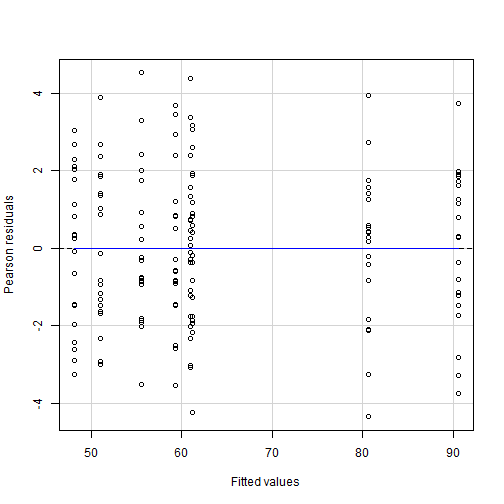

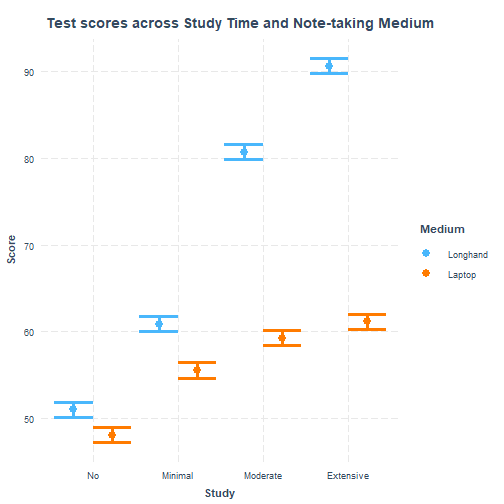

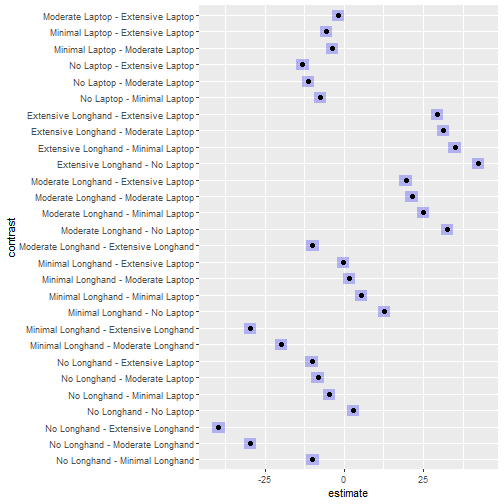

class: center, middle, inverse, title-slide

.title[
# <b> Interaction Analysis </b>
]
.subtitle[
## Data Analysis for Psychology in R 2<br><br>
]
.author[
### dapR2 Team
]
.institute[
### Department of Psychology<br>The University of Edinburgh
]

---
# Course Overview

.pull-left[

<!--- I've just copied the output of the Sem 1 table here and removed the bolding on the last week, so things look consistent with the trailing opacity produced by the course_table.R script otherwise. -->

<table style="border: 1px solid black;">
<tr style="padding: 0 1em 0 1em;"><td rowspan="5" style="border: 1px solid black;padding: 0 1em 0 1em;opacity:1;text-align:center;vertical-align: middle"> <b>Introduction to Linear Models</b></td><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:1"> Intro to Linear Regression</td></tr>
<tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:1"> Interpreting Linear Models</td></tr>
<tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:1"> Testing Individual Predictors</td></tr>
<tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:1"> Model Testing & Comparison</td></tr>
<tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:1"> Linear Model Analysis</td></tr>
<tr style="padding: 0 1em 0 1em;"><td rowspan="5" style="border: 1px solid black;padding: 0 1em 0 1em;opacity:1;text-align:center;vertical-align: middle"> <b>Analysing Experimental Studies</b></td><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:1"> Categorical Predictors & Dummy Coding</td></tr>
<tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:1"> Effects Coding & Coding Specific Contrasts</td></tr>
<tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:1"> Assumptions & Diagnostics</td></tr>
<tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:1"> Bootstrapping</td></tr>
<tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:1"> Categorical Predictor Analysis</td></tr>
</table>
]
.pull-right[
<table style="border: 1px solid black;">
<tr style="padding: 0 1em 0 1em;"><td rowspan="5" style="border: 1px solid black;padding: 0 1em 0 1em;opacity:1;text-align:center;vertical-align: middle"> <b>Interactions</b></td><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:1"> Interactions I</td></tr>
<tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:1"> Interactions II</td></tr>
<tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:1"> Interactions III</td></tr>
<tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:1"> Analysing Experiments</td></tr>
<tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:1"> <b>Interaction Analysis</b></td></tr>
<tr style="padding: 0 1em 0 1em;"><td rowspan="5" style="border: 1px solid black;padding: 0 1em 0 1em;opacity:0.4;text-align:center;vertical-align: middle"> <b>Advanced Topics</b></td><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:0.4"> Power Analysis</td></tr>
<tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:0.4"> Binary Logistic Regression I</td></tr>
<tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:0.4"> Binary Logistic Regression II</td></tr>
<tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:0.4"> Logistic Regression Analysis</td></tr>
<tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:0.4"> Exam Prep and Course Q&A</td></tr>
</table>
]

---
## Overview of the Week

This week, we'll be applying what we have learned to a practical example. Specifically, we'll cover:

1. Interpretation of an interaction model with categorical variables
2. Multiple pairwise comparisons with corrections

**In the lectures:**

+ Follow along in RStudio to develop your analysis code
+ Take note of any additional analyses or checks you may want to perform ahead of the lab (the lectures will not cover everything)

**In the lab:**

+ Writeup of the analysis
+ Some analysis code also provided
+ Make sure your writeup file knits to a pdf: this is an opportunity to troubleshoot with tutors in preparation for your Assessed Report

---
## Our example based on a paper

<b> Mueller, P. A., & Oppenheimer, D. M. (2014). The pen is mightier than the keyboard: Advantages of longhand over laptop note taking. *Psychological Science, 25*(6), 1159–1168. [https://doi.org/10.1177/0956797614524581](https://doi.org/10.1177/0956797614524581) </b>

+ Participants were invited to take part in a study investigating the effects of note-taking medium and study time on test scores.
+ The sample comprised 160 students who took notes on a lecture via one of two mediums: either on a laptop or longhand (i.e., using pen and paper).
+ After watching the lecture and taking notes, they were randomly allocated to one of four study time conditions, engaging in no, minimal, moderate, or extensive study of the notes taken on their assigned medium.
+ After studying for their allocated time, participants took a test on the lecture content. The test involved a series of questions, where participants could score a maximum of 100 points.

---
### Research Aim

Explore how study time and note-taking medium are associated with test scores

### Research Questions

**RQ1.** Do differences in test scores between study conditions differ by the note-taking medium used?

**RQ2.** Explore the differences between each pair of levels of each factor to determine which conditions significantly differ from each other

---
### Setup

Loading all the necessary packages and the data:

``` r
library(tidyverse)
library(psych)
library(emmeans)
library(kableExtra)
library(sjPlot)
library(interactions)
library(car)

data <- read_csv("https://uoepsy.github.io/data/laptop_vs_longhand.csv")
```

---
### Checking the Data

+ First look: What kind of columns do we have in our dataframe?
+ Let's look at the first few rows:

``` r
head(data)
```

```
## # A tibble: 6 × 3
##   test_score medium study
##        <dbl> <chr>  <chr>
## 1       47.5 Laptop No   
## 2       50.4 Laptop No   
## 3       49.9 Laptop No   
## 4       48.5 Laptop No   
## 5       48.4 Laptop No   
## 6       48.0 Laptop No   
```

---
### Checking the Data

+ Are there any missing values?

``` r
table(is.na(data))
```

```
## 
## FALSE 
##   480 
```

---
### Checking the Data

+ What type of variables do we have?
+ Are numerical variables within the expected range?

We can look at the dataframe using the `summary` function:

``` r
summary(data)
```

```
##    test_score       medium             study          
##  Min.   :44.86   Length:160         Length:160        
##  1st Qu.:53.63   Class :character   Class :character  
##  Median :59.27   Mode  :character   Mode  :character  
##  Mean   :63.42                                        
##  3rd Qu.:68.05                                        
##  Max.   :94.32                                        
```

---
### Checking the Data

+ We have a continuous outcome variable, `test_score`, and two categorical predictor variables, `medium` and `study`
+ We need to turn `medium` and `study` into factors

``` r
data$medium <- as_factor(data$medium)
data$study <- as_factor(data$study)
```

+ Let's check that this worked:

``` r
summary(data)
```

```
##    test_score         medium          study   
##  Min.   :44.86   Laptop  :80   No       :40  
##  1st Qu.:53.63   Longhand:80   Minimal  :40  
##  Median :59.27                 Moderate :40  
##  Mean   :63.42                 Extensive:40  
##  3rd Qu.:68.05                               
##  Max.   :94.32                               
```

---
### Checking the Data

+ 80 participants took notes on a laptop and 80 took notes using pen and paper
+ 40 participants in each of the four study conditions
+ Let's check whether the 8 groups are balanced (i.e., equal in size):

``` r
data %>%
  group_by(medium, study) %>%
  summarise(Count = n())
```

```
## # A tibble: 8 × 3
## # Groups:   medium [2]
##   medium   study     Count
##   <fct>    <fct>     <int>
## 1 Laptop   No           20
## 2 Laptop   Minimal      20
## 3 Laptop   Moderate     20
## 4 Laptop   Extensive    20
## 5 Longhand No           20
## 6 Longhand Minimal      20
## 7 Longhand Moderate     20
## 8 Longhand Extensive    20
```

---
### Checking the Data: Distribution of the numerical variable

``` r
ggplot(data, aes(test_score)) +
  geom_histogram(colour = 'black', binwidth = 2)
```

<!-- -->

---
### Data description

``` r
descript <- data %>%
  group_by(study, medium) %>%
  summarise(
    M_Score = round(mean(test_score), 2),
    SD_Score = round(sd(test_score), 2),
    SE_Score = round(sd(test_score)/sqrt(n()), 2),
    Min_Score = round(min(test_score), 2),
    Max_Score = round(max(test_score), 2)
  )
```

---
### Data description

``` r
descript
```

```
## # A tibble: 8 × 7
## # Groups:   study [4]
##   study     medium   M_Score SD_Score SE_Score Min_Score Max_Score
##   <fct>     <fct>      <dbl>    <dbl>    <dbl>     <dbl>     <dbl>
## 1 No        Laptop      48.1        2     0.45      44.9      51.2
## 2 No        Longhand    51.0        2     0.45      48.0      54.9
## 3 Minimal   Laptop      55.6        2     0.45      52.1      60.1
## 4 Minimal   Longhand    60.9        2     0.45      57.8      65.3
## 5 Moderate  Laptop      59.3        2     0.45      55.8      63.0
## 6 Moderate  Longhand    80.7        2     0.45      76.3      84.6
## 7 Extensive Laptop      61.2        2     0.45      56.9      64.3
## 8 Extensive Longhand    90.6        2     0.45      86.8      94.3
```

+ This is useful information, but we cannot include direct R output in our writeup
+ Remember we can use the `kable` function to format our table

---
### A prettier descriptives table

``` r
kable(descript)
```

|study     |medium   | M_Score| SD_Score| SE_Score| Min_Score| Max_Score|
|:---------|:--------|-------:|--------:|--------:|---------:|---------:|
|No        |Laptop   |   48.12|        2|     0.45|     44.86|     51.16|
|No        |Longhand |   51.02|        2|     0.45|     48.02|     54.92|
|Minimal   |Laptop   |   55.57|        2|     0.45|     52.07|     60.10|
|Minimal   |Longhand |   60.91|        2|     0.45|     57.84|     65.29|
|Moderate  |Laptop   |   59.30|        2|     0.45|     55.77|     62.98|
|Moderate  |Longhand |   80.69|        2|     0.45|     76.34|     84.64|
|Extensive |Laptop   |   61.16|        2|     0.45|     56.93|     64.32|
|Extensive |Longhand |   90.58|        2|     0.45|     86.82|     94.32|

<br>

+ Consider: What additional changes might you want to make before including this table in a writeup?

---
### Boxplot

+ Let's visualise data from each group, showing medians, quartiles and ranges
+ Test scores on the y-axis
+ Study duration on the x-axis
+ Different colours for the two note-taking mediums
+ y-axis limits set to the theoretically possible range of the outcome variable (0 to 100)

``` r
p1 <- ggplot(data = data, aes(x = study, y = test_score, color = medium)) +
  geom_boxplot() +
  ylim(0, 100) +
  labs(x = "Study Condition", y = "Test Score")
```

---
### Boxplot

``` r
p1
```

<!-- -->

---
### Plot means, add lines between group means

+ Using `geom_point` to produce points for each mean
+ Using `geom_linerange` to plot 2 `\(\times\)` SEs around the means
+ Using `geom_path` to connect the means with lines in the order they appear
+ Note we are coercing the factor levels into a numerical variable for this purpose
+ Not specifying y-axis limits here, so R will "zoom in" to our data
+ This makes it easier to see any potential interactions

``` r
p2 <- ggplot(descript, aes(x = study, y = M_Score, color = medium)) +
  geom_point(size = 3) +
  geom_linerange(aes(ymin = M_Score - 2 * SE_Score, ymax = M_Score + 2 * SE_Score)) +
  geom_path(aes(x = as.numeric(study)))
```

---
### Plot means, add lines between group means

``` r
p2
```

<!-- -->

---
### ggplot

+ Consider: What changes might you make to the plots before including them in the writeup?
+ How would you go about this?

---
## Investigating RQ1

#### "Do differences in test scores between study conditions differ by the note-taking medium used?"
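
The two coding schemes weighed in the points that follow can be made concrete with a short sketch. It prints the contrast matrices that base R's `contr.treatment()` (dummy coding) and `contr.sum()` (effects coding) produce for a four-level factor like `study`, then fits a one-factor model under each scheme to show how the meaning of the intercept changes. The `toy` data frame and its scores are hypothetical and invented for illustration; they are not the Mueller & Oppenheimer data, and the sketch leaves out the second factor and the interaction.

``` r
# Minimal sketch (base R only): dummy vs effects coding for a
# four-level factor like `study`. The scores below are invented.
study_levels <- c("No", "Minimal", "Moderate", "Extensive")

# Dummy (treatment) coding: the first level ("No") is the reference,
# so its row is all zeros and each column flags one other level
dummy_coding <- contr.treatment(study_levels)
print(dummy_coding)

# Effects (sum-to-zero) coding: the last level ("Extensive") is coded -1
# in every column, so each column sums to zero
effects_coding <- contr.sum(study_levels)
print(effects_coding)

# Hypothetical toy data: two scores per study condition
toy <- data.frame(
  study = factor(rep(study_levels, each = 2), levels = study_levels),
  score = c(50, 52, 60, 62, 80, 82, 90, 92)
)

# Fit the same one-factor model under each coding scheme
m_dummy <- lm(score ~ study, data = toy,
              contrasts = list(study = "contr.treatment"))
m_effects <- lm(score ~ study, data = toy,
                contrasts = list(study = "contr.sum"))

# Dummy coding: intercept = mean of the reference group ("No")
coef(m_dummy)[["(Intercept)"]]    # 51
# Effects coding: intercept = unweighted mean of the four group means
coef(m_effects)[["(Intercept)"]]  # 71
```

Under effects coding, each remaining coefficient is a level's deviation from that grand mean (here `study1` = 51 - 71 = -20 for the `No` level), which is why effects-coded betas are read as main effects, whereas dummy-coded betas are differences from the reference group.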

+ This RQ requires a model that includes an interaction
+ Would a dummy-coded or an effects-coded model be more appropriate?

**Dummy coding:**

+ When an interaction is included in the model, a dummy-coded model captures marginal/conditional effects (the effect of one variable is estimated while the other variable is fixed at its reference level)
+ The interaction pertains to differences in group differences across the levels of a second factor

**Effects coding:**

+ Captures main effects (the effect of one variable averaged over the levels of the other variable), even when an interaction is included in the model
+ The interaction pertains to the effect of the combination of the two variables over and above the two main effects

---
### Investigating RQ1: Dummy Coding

+ We will go with dummy coding here, based on the RQ
+ We now have to choose reference levels for both of our predictors
+ Let's use `No` as a baseline for the `study` variable:

``` r
data$study <- fct_relevel(data$study, "No", "Minimal", "Moderate", "Extensive")
```

+ There is no principled reason for choosing one level of note-taking medium over the other as the baseline
+ Let's go with `Longhand` as the reference level so we can assess whether the more technologically advanced approach (`Laptop`) improves student performance

``` r
data$medium <- fct_relevel(data$medium, "Longhand")
```

+ Check that this worked:

``` r
summary(data)
```

---
### Investigating RQ1

We'll use the following model to investigate our RQ:

$$
`\begin{align}
\text{Test Score} &= \beta_0 \\
&+ \beta_1 \cdot S_\text{Minimal} \\
&+ \beta_2 \cdot S_\text{Moderate} \\
&+ \beta_3 \cdot S_\text{Extensive} \\
&+ \beta_4 \cdot M_\text{Laptop} \\
&+ \beta_5 \cdot (S_\text{Minimal} \cdot M_\text{Laptop}) \\
&+ \beta_6 \cdot (S_\text{Moderate} \cdot M_\text{Laptop}) \\
&+ \beta_7 \cdot (S_\text{Extensive} \cdot M_\text{Laptop}) \\
&+ \epsilon
\end{align}`
$$

---
### Running our model:

``` r
m1 <- lm(test_score ~ study*medium, data = data)
summary(m1)
```

```
## 
## Call:
## lm(formula = test_score ~ study * medium, data = data)
## 
## Residuals:
##     Min      1Q  Median      3Q     Max 
## -4.3485 -1.4764 -0.1018  1.4039  4.5321 
## 
## Coefficients:
##                             Estimate Std. Error t value Pr(>|t|)    
## (Intercept)                  51.0200     0.4472 114.084  < 2e-16 ***
## studyMinimal                  9.8900     0.6325  15.637  < 2e-16 ***
## studyModerate                29.6700     0.6325  46.912  < 2e-16 ***
## studyExtensive               39.5600     0.6325  62.550  < 2e-16 ***
## mediumLaptop                 -2.9000     0.6325  -4.585 9.41e-06 ***
## studyMinimal:mediumLaptop    -2.4400     0.8944  -2.728  0.00712 ** 
## studyModerate:mediumLaptop  -18.4900     0.8944 -20.672  < 2e-16 ***
## studyExtensive:mediumLaptop -26.5200     0.8944 -29.650  < 2e-16 ***
## ---
## Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
## 
## Residual standard error: 2 on 152 degrees of freedom
## Multiple R-squared:  0.9803, Adjusted R-squared:  0.9794 
## F-statistic:  1081 on 7 and 152 DF,  p-value: < 2.2e-16
```

---
### Checking assumptions before interpreting the model

#### Linearity

+ We can assume linearity when our model does not include any numerical predictors or covariates (see [here](https://www.bookdown.org/rwnahhas/RMPH/mlr-linearity.html))

#### Independence of Errors

+ We are using between-subjects data, so we'll also assume independence of our error terms

---
#### Normality of Residuals (histogram)

``` r
hist(m1$residuals)
```

<!-- -->

---
#### Normality of Residuals (QQ plot)

``` r
plot(m1, which = 2)
```

<!-- -->

---
#### Equality of Variance (Homoscedasticity)

Using a plot of residuals against predicted (fitted) values: `residualPlot` from the `car` package

``` r
residualPlot(m1)
```

<!-- -->

---
### Checking model diagnostics

+ Are there any high-influence cases in our data?
+ Consider: Which model diagnostics would be useful here?

--

+ Let's check DFBeta values for the present purposes
+ DFBeta: how much the estimate for a coefficient changes when a particular observation is removed

``` r
dfbeta(m1)
summary(dfbeta(m1))
```

---

```
##   (Intercept)       studyMinimal      studyModerate     studyExtensive   
##  Min.   :-0.1577   Min.   :-0.2054   Min.   :-0.2289   Min.   :-0.2054  
##  1st Qu.: 0.0000   1st Qu.: 0.0000   1st Qu.: 0.0000   1st Qu.: 0.0000  
##  Median : 0.0000   Median : 0.0000   Median : 0.0000   Median : 0.0000  
##  Mean   : 0.0000   Mean   : 0.0000   Mean   : 0.0000   Mean   : 0.0000  
##  3rd Qu.: 0.0000   3rd Qu.: 0.0000   3rd Qu.: 0.0000   3rd Qu.: 0.0000  
##  Max.   : 0.2054   Max.   : 0.2306   Max.   : 0.2077   Max.   : 0.1969  
##   mediumLaptop     studyMinimal:mediumLaptop studyModerate:mediumLaptop
##  Min.   :-0.2054   Min.   :-0.23065          Min.   :-0.20766          
##  1st Qu.: 0.0000   1st Qu.:-0.01337          1st Qu.:-0.01611          
##  Median : 0.0000   Median : 0.00000          Median : 0.00000          
##  Mean   : 0.0000   Mean   : 0.00000          Mean   : 0.00000          
##  3rd Qu.: 0.0000   3rd Qu.: 0.00000          3rd Qu.: 0.00000          
##  Max.   : 0.1602   Max.   : 0.23853          Max.   : 0.22887          
##  studyExtensive:mediumLaptop
##  Min.   :-0.22278           
##  1st Qu.:-0.01396           
##  Median : 0.00000           
##  Mean   : 0.00000           
##  3rd Qu.: 0.00000           
##  Max.   : 0.20539           
```

+ What do the minimum and maximum values for each coefficient tell us?
+ Consider: What might be some possible next steps here, if we wanted to understand any potentially influential cases better?

---
### Back to the model

+ We are generally happy with our model assumption checks and diagnostic checks here
+ Let's return to the model to interpret it

---

``` r
summary(m1)
```

```
## 
## Call:
## lm(formula = test_score ~ study * medium, data = data)
## 
## Residuals:
##     Min      1Q  Median      3Q     Max 
## -4.3485 -1.4764 -0.1018  1.4039  4.5321 
## 
## Coefficients:
##                             Estimate Std. Error t value Pr(>|t|)    
## (Intercept)                  51.0200     0.4472 114.084  < 2e-16 ***
## studyMinimal                  9.8900     0.6325  15.637  < 2e-16 ***
## studyModerate                29.6700     0.6325  46.912  < 2e-16 ***
## studyExtensive               39.5600     0.6325  62.550  < 2e-16 ***
## mediumLaptop                 -2.9000     0.6325  -4.585 9.41e-06 ***
## studyMinimal:mediumLaptop    -2.4400     0.8944  -2.728  0.00712 ** 
## studyModerate:mediumLaptop  -18.4900     0.8944 -20.672  < 2e-16 ***
## studyExtensive:mediumLaptop -26.5200     0.8944 -29.650  < 2e-16 ***
## ---
## Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
## 
## Residual standard error: 2 on 152 degrees of freedom
## Multiple R-squared:  0.9803, Adjusted R-squared:  0.9794 
## F-statistic:  1081 on 7 and 152 DF,  p-value: < 2.2e-16
```

---
### Interpretation with dummy coding

.pull-left[
```
##                             Estimate Std. Error
## (Intercept)                    51.02       0.45
## studyMinimal                    9.89       0.63
## studyModerate                  29.67       0.63
## studyExtensive                 39.56       0.63
## mediumLaptop                   -2.90       0.63
## studyMinimal:mediumLaptop      -2.44       0.89
## studyModerate:mediumLaptop    -18.49       0.89
## studyExtensive:mediumLaptop   -26.52       0.89
```
]
.pull-right[

|          |Laptop |Longhand |
|:---------|:------|:--------|
|No Study  |48.12  |51.02    |
|Minimal   |55.57  |60.91    |
|Moderate  |59.30  |80.69    |
|Extensive |61.16  |90.58    |

]

+ **Longhand** is the reference level in **medium**
+ **No** is the reference level in **study**
+ Marginal effects betas: differences in group means within the reference level of the other factor (e.g., `mediumLaptop` = 48.12 - 51.02 = -2.90 in the No study condition)
+ Interaction betas: differences in differences (e.g., `studyModerate:mediumLaptop` = (59.30 - 48.12) - (80.69 - 51.02) = -18.49)

<br>

+ Note: See the use of `tab_model` (from `sjPlot`) in the lab's analysis code for formatting regression output for the writeup

---

``` r
plot_m1 <- cat_plot(model = m1, pred = study, modx = medium,
                    main.title = "Test scores across Study Time and Note-taking Medium",
                    x.label = "Study", y.label = "Score", legend.main = "Medium")
plot_m1
```

<!-- -->

---
### Model comparison

+ What if we wanted to know whether the interaction between note-taking medium and study time explains significantly more variance in test scores than note-taking medium and study time alone?
+ An incremental F-test is needed
+ Run a model with study time and note-taking medium as additive predictors (this reduced model is nested within our full model)
+ Use the `anova` function to compare the reduced model and the full model
+ The result will tell us whether the interaction (the three interaction terms jointly) significantly improves model fit

---
### Model comparison

``` r
m1r <- lm(test_score ~ study + medium, data = data)
anova(m1r, m1)
```

```
## Analysis of Variance Table
## 
## Model 1: test_score ~ study + medium
## Model 2: test_score ~ study * medium
##   Res.Df    RSS Df Sum of Sq      F    Pr(>F)    
## 1    155 5490.7                                  
## 2    152  608.0  3    4882.7 406.89 < 2.2e-16 ***
## ---
## Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
```

<br>

+ Consider: How would you find out how much additional variance the full model explains compared to the reduced model?

---
## Investigating RQ2

#### Explore the differences between each pair of levels of each factor to determine which conditions significantly differ from each other

+ Pairwise comparisons with Tukey corrections

**Step 1:** Use `emmeans` to estimate group means

``` r
m1_emm <- emmeans(m1, ~study*medium)
```

**Step 2:** Use the `pairs` function to obtain pairwise comparisons (Note: Tukey correction applied by default)

``` r
pairs_res <- pairs(m1_emm)
```

**Step 3:** Examine the output from the pairwise comparisons and interpret it

``` r
pairs_res
```

**Step 4:** Plot the pairwise differences between groups

``` r
plot(pairs_res)
```

---

```
##  contrast                               estimate    SE  df t.ratio p.value
##  No Longhand - Minimal Longhand            -9.89 0.632 152 -15.637  <.0001
##  No Longhand - Moderate Longhand          -29.67 0.632 152 -46.912  <.0001
##  No Longhand - Extensive Longhand         -39.56 0.632 152 -62.550  <.0001
##  No Longhand - No Laptop                    2.90 0.632 152   4.585  0.0002
##  No Longhand - Minimal Laptop              -4.55 0.632 152  -7.194  <.0001
##  No Longhand - Moderate Laptop             -8.28 0.632 152 -13.092  <.0001
##  No Longhand - Extensive Laptop           -10.14 0.632 152 -16.033  <.0001
##  Minimal Longhand - Moderate Longhand     -19.78 0.632 152 -31.275  <.0001
##  Minimal Longhand - Extensive Longhand    -29.67 0.632 152 -46.912  <.0001
##  Minimal Longhand - No Laptop              12.79 0.632 152  20.223  <.0001
##  Minimal Longhand - Minimal Laptop          5.34 0.632 152   8.443  <.0001
##  Minimal Longhand - Moderate Laptop         1.61 0.632 152   2.546  0.1847
##  Minimal Longhand - Extensive Laptop       -0.25 0.632 152  -0.395  0.9999
##  Moderate Longhand - Extensive Longhand    -9.89 0.632 152 -15.637  <.0001
##  Moderate Longhand - No Laptop             32.57 0.632 152  51.498  <.0001
##  Moderate Longhand - Minimal Laptop        25.12 0.632 152  39.718  <.0001
##  Moderate Longhand - Moderate Laptop       21.39 0.632 152  33.821  <.0001
##  Moderate Longhand - Extensive Laptop      19.53 0.632 152  30.880  <.0001
##  Extensive Longhand - No Laptop            42.46 0.632 152  67.135  <.0001
##  Extensive Longhand - Minimal Laptop       35.01 0.632 152  55.356  <.0001
##  Extensive Longhand - Moderate Laptop      31.28 0.632 152  49.458  <.0001
##  Extensive Longhand - Extensive Laptop     29.42 0.632 152  46.517  <.0001
##  No Laptop - Minimal Laptop                -7.45 0.632 152 -11.779  <.0001
##  No Laptop - Moderate Laptop              -11.18 0.632 152 -17.677  <.0001
##  No Laptop - Extensive Laptop             -13.04 0.632 152 -20.618  <.0001
##  Minimal Laptop - Moderate Laptop          -3.73 0.632 152  -5.898  <.0001
##  Minimal Laptop - Extensive Laptop         -5.59 0.632 152  -8.839  <.0001
##  Moderate Laptop - Extensive Laptop        -1.86 0.632 152  -2.941  0.0717
## 
## P value adjustment: tukey method for comparing a family of 8 estimates 
```

---
### Visualising pairwise differences

<!-- -->

---
## In the lab this week

Writeup in three parts:

+ Analysis strategy
+ Results
+ Discussion

<br>

Excellent practice for the upcoming Assessed Report!

---
## Reminders

**Next Week**

+ No lectures, labs or office hours during Flexible Learning Week

--

**Labs this week**

+ Make sure your analysis writeup file successfully knits to a pdf and does not include visible R code
+ A good opportunity to troubleshoot technical issues ahead of the Assessed Report

--

**The Assessed Report and academic integrity**

+ Working on the assessed report during the lab sessions is not allowed
+ Other groups should not overhear your discussion of your report
+ Make arrangements to meet and work on the report outside of class time
+ Your tutors and instructors will not answer questions directly pertaining to the content of the assessed report
+ Clarification questions about the instructions are welcome
+ For content questions, you may ask about related material from lectures and past labs

---
## This week

.pull-left[

### Tasks

<img src="figs/labs.svg" width="10%" />

**Attend your lab and work together on the exercises**

<br>

<img src="figs/exam.svg" width="10%" />

**Complete the weekly quiz**

]

.pull-right[

### Support

<img src="figs/forum.svg" width="10%" />

**Help each other on the Piazza forum**

<br>

<img src="figs/oh.png" width="10%" />

**Attend office hours (see Learn page for details)**

]