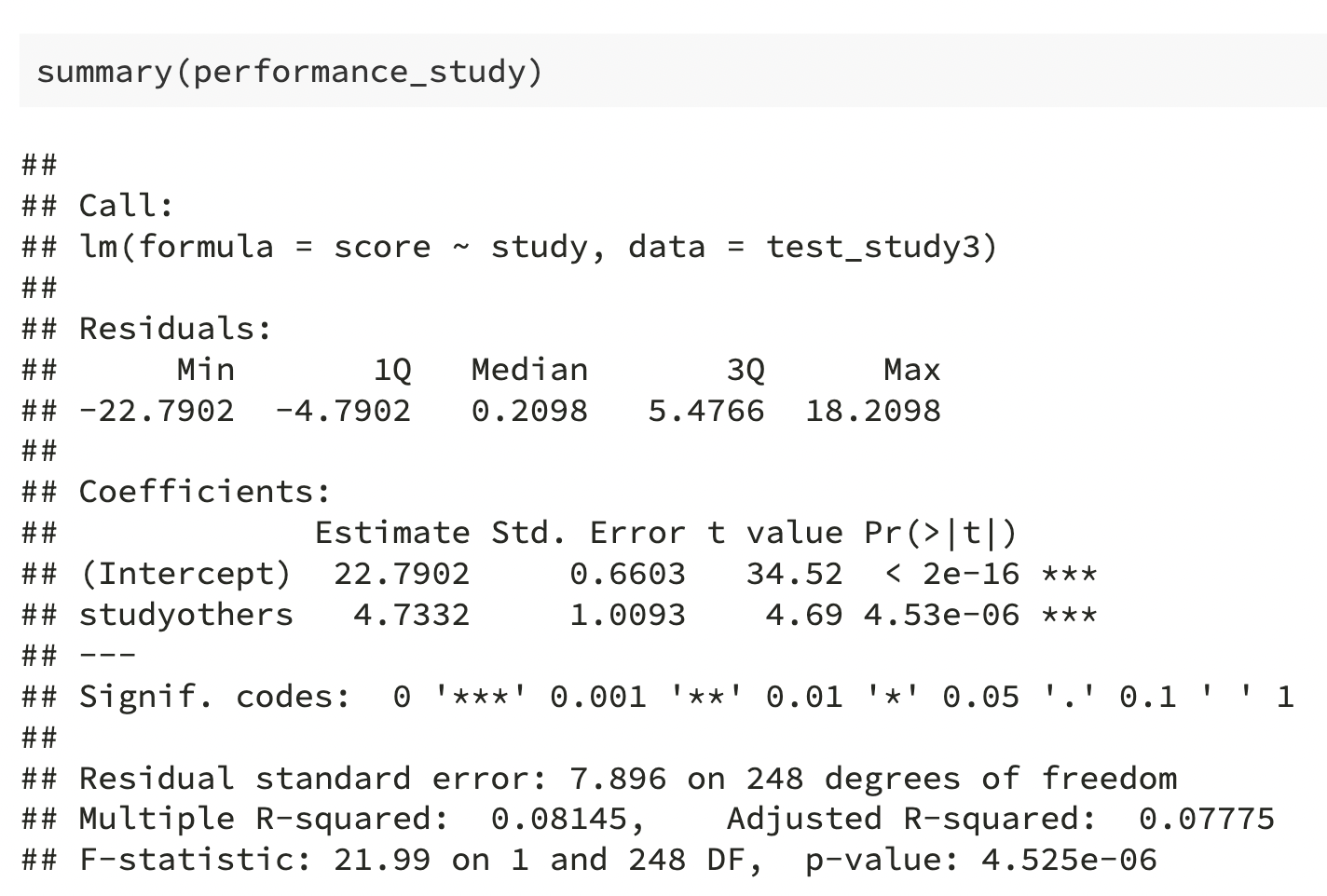

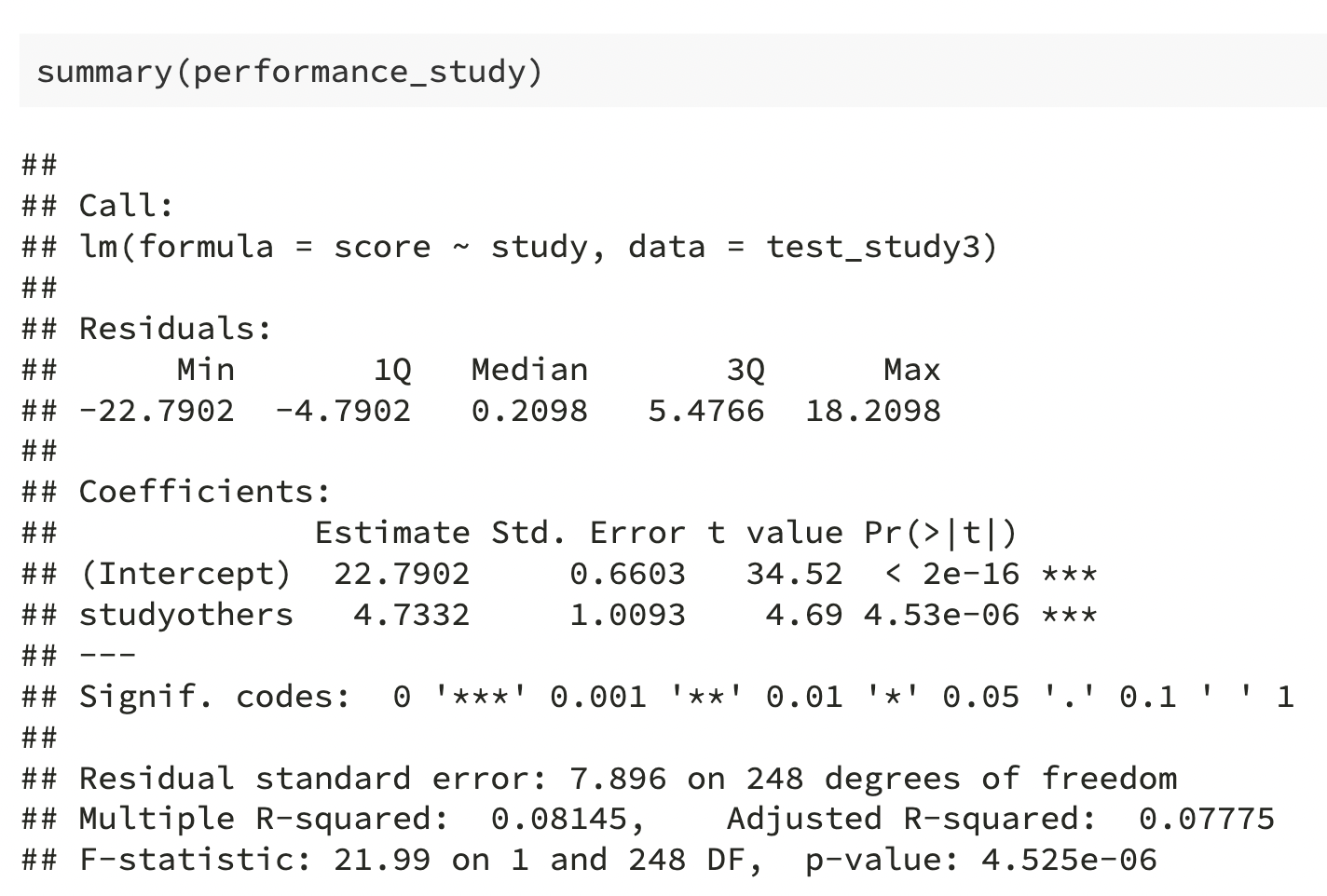

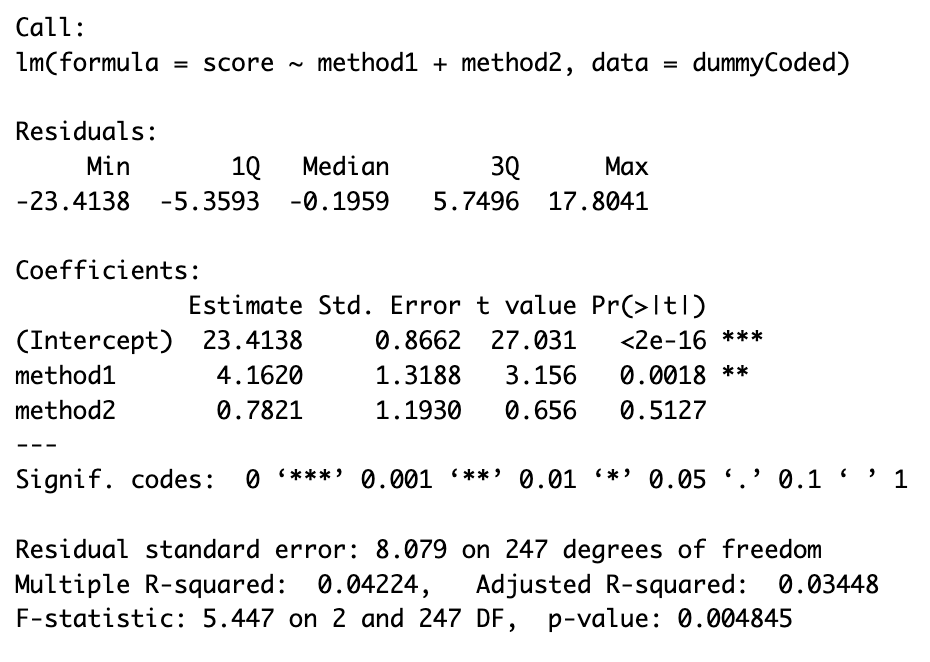

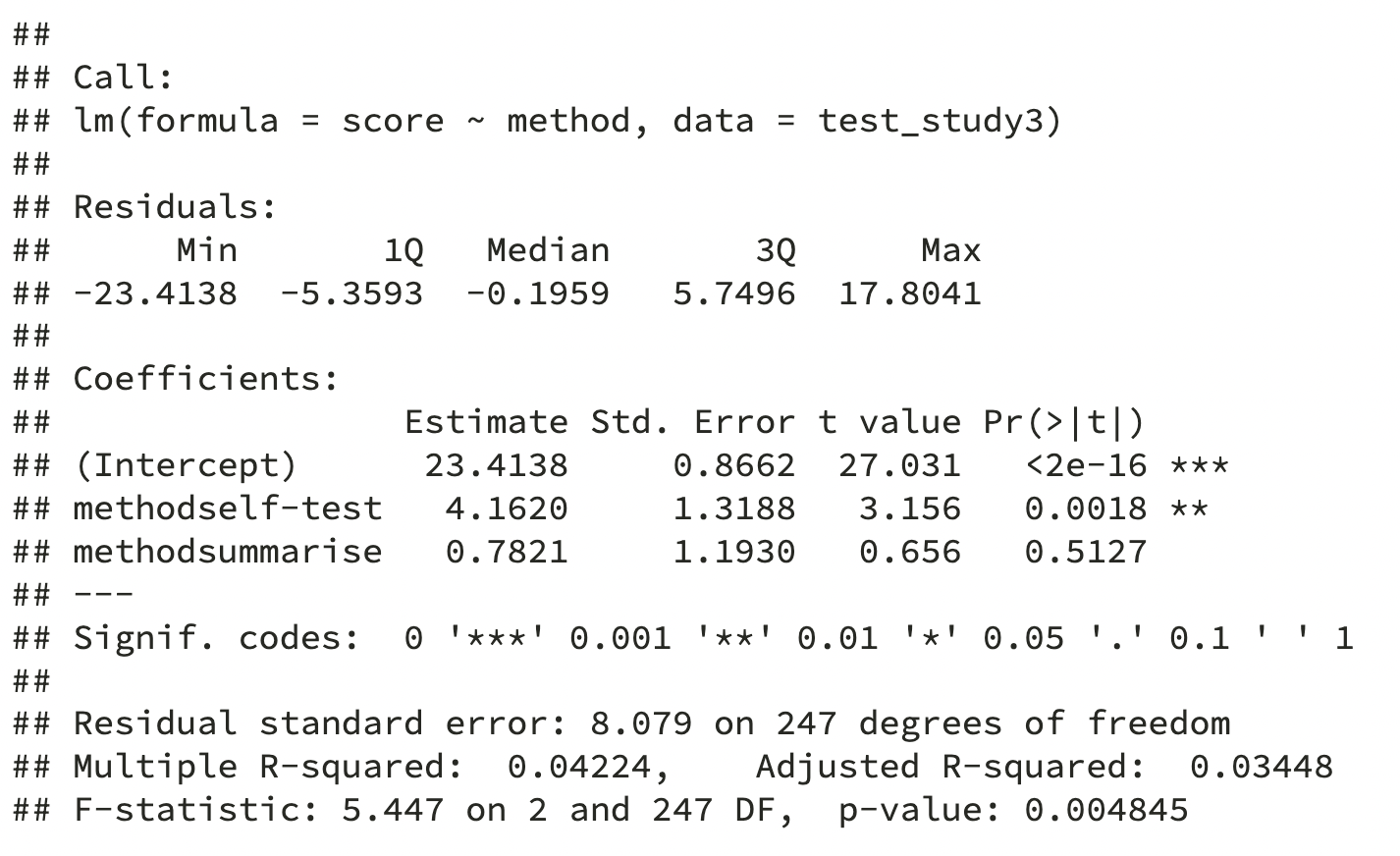

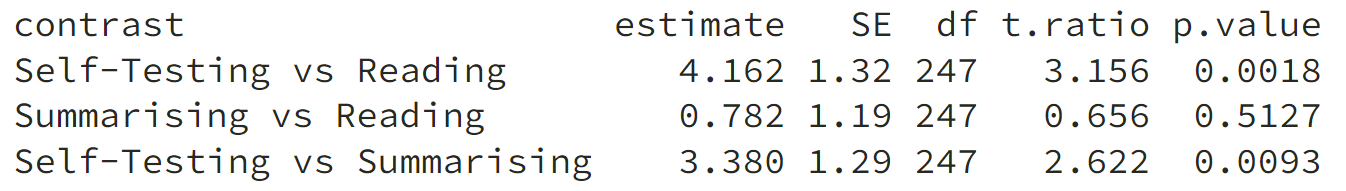

class: center, middle, inverse, title-slide .title[ # <b>Categorical Predictors </b> ] .subtitle[ ## Data Analysis for Psychology in R 2<br><br> ] .author[ ### dapR2 Team ] .institute[ ### Department of Psychology<br>The University of Edinburgh ] --- # Course Overview .pull-left[ <table style="border: 1px solid black;"> <tr style="padding: 0 1em 0 1em;"> <td rowspan="5" style="border: 1px solid black;padding: 0 1em 0 1em;opacity:1;text-align:center;vertical-align: middle"> <b>Introduction to Linear Models</b></td> <td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:1"> Intro to Linear Regression</td> </tr> <tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:1"> Interpreting Linear Models</td></tr> <tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:1"> Testing Individual Predictors</td></tr> <tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:1"> Model Testing & Comparison</td></tr> <tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:1"> Linear Model Analysis</td></tr> <tr style="padding: 0 1em 0 1em;"> <td rowspan="5" style="border: 1px solid black;padding: 0 1em 0 1em;opacity:1;text-align:center;vertical-align: middle"> <b>Analysing Experimental Studies</b></td> <td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:1"> <b>Categorical Predictors & Dummy Coding</b></td> </tr> <tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:0.4"> Effects Coding & Coding Specific Contrasts</td></tr> <tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:0.4"> Assumptions & Diagnostics</td></tr> <tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:0.4"> Bootstrapping</td></tr> <tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:0.4"> Categorical Predictor Analysis</td></tr> </table> ] .pull-right[ <table style="border: 1px solid black;"> <tr style="padding: 0 1em 0 1em;"> <td rowspan="5" style="border: 1px solid black;padding: 0 1em 0 
1em;opacity:0.4;text-align:center;vertical-align: middle"> <b>Interactions</b></td> <td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:0.4"> Interactions I</td> </tr> <tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:0.4"> Interactions II</td></tr> <tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:0.4"> Interactions III</td></tr> <tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:0.4"> Analysing Experiments</td></tr> <tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:0.4"> Interaction Analysis</td></tr> <tr style="padding: 0 1em 0 1em;"> <td rowspan="5" style="border: 1px solid black;padding: 0 1em 0 1em;opacity:0.4;text-align:center;vertical-align: middle"> <b>Advanced Topics</b></td> <td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:0.4"> Power Analysis</td> </tr> <tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:0.4"> Binary Logistic Regression I</td></tr> <tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:0.4"> Binary Logistic Regression II</td></tr> <tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:0.4"> Logistic Regression Analysis</td></tr> <tr><td style="border: 1px solid black;padding: 0 1em 0 1em;opacity:0.4"> Exam Prep and Course Q&A</td></tr> </table> ] --- # This Week's Learning Objectives 1. Understand the meaning of model coefficients in the case of a binary predictor 2. Understand how to apply dummy coding to include categorical predictors with 2+ levels 3. Be able to include categorical predictors in an `lm` model in R 4. Introduce `emmeans` as a tool for testing different effects in models with categorical predictors --- class: inverse, center, middle # Part 1: Categorical variables --- # Categorical variables (recap dapR1) + Categorical variables can only take discrete values + E.g., animal type: 1 = duck, 2 = cow, 3 = wasp + The categories are mutually exclusive + No duck-wasps or cow-ducks! 
+ In R, categorical variables should be of class `factor` + The discrete values are `levels` + Levels can have numeric values (1, 2, 3) and labels (e.g. "duck", "cow", "wasp") + All that these numbers represent is category membership --- # Understanding category membership + With a continuous variable like hours of study, each additional unit (1 hour) means more time spent studying + As values change (and they do not need to change by whole hours), we get a sense of more or less time being spent studying + Across our whole sample, that gives us variation in hours of study + When we have a categorical variable, we do not have this level of precision + Suppose we split hours of study into the following groups: + 0 to 2.99 hours = low study time + 3 to 9.99 hours = medium study time + 10+ hours = high study time + **Side note**: This is for demonstration only; it is generally not a good idea to split a continuous measure into groups for the purposes of analysis --- # Understanding category membership .pull-left[ <table class="table" style="width: auto !important; margin-left: auto; margin-right: auto;"> <thead> <tr> <th style="text-align:left;"> ID </th> <th style="text-align:right;"> score </th> <th style="text-align:right;"> hours </th> <th style="text-align:left;"> hours_cat </th> <th style="text-align:left;"> hours_catNum </th> </tr> </thead> <tbody> <tr> <td style="text-align:left;"> ID102 </td> <td style="text-align:right;"> 36 </td> <td style="text-align:right;"> 12 </td> <td style="text-align:left;"> high </td> <td style="text-align:left;"> 3 </td> </tr> <tr> <td style="text-align:left;"> ID104 </td> <td style="text-align:right;"> 29 </td> <td style="text-align:right;"> 13 </td> <td style="text-align:left;"> high </td> <td style="text-align:left;"> 3 </td> </tr> <tr> <td style="text-align:left;"> ID106 </td> <td style="text-align:right;"> 29 </td> <td style="text-align:right;"> 12 </td> <td style="text-align:left;"> high </td> <td style="text-align:left;"> 3 </td> </tr> 
<tr> <td style="text-align:left;"> ID107 </td> <td style="text-align:right;"> 18 </td> <td style="text-align:right;"> 11 </td> <td style="text-align:left;"> high </td> <td style="text-align:left;"> 3 </td> </tr> <tr> <td style="text-align:left;"> ID110 </td> <td style="text-align:right;"> 41 </td> <td style="text-align:right;"> 15 </td> <td style="text-align:left;"> high </td> <td style="text-align:left;"> 3 </td> </tr> <tr> <td style="text-align:left;"> ID103 </td> <td style="text-align:right;"> 15 </td> <td style="text-align:right;"> 3 </td> <td style="text-align:left;"> medium </td> <td style="text-align:left;"> 2 </td> </tr> <tr> <td style="text-align:left;"> ID105 </td> <td style="text-align:right;"> 18 </td> <td style="text-align:right;"> 9 </td> <td style="text-align:left;"> medium </td> <td style="text-align:left;"> 2 </td> </tr> <tr> <td style="text-align:left;"> ID101 </td> <td style="text-align:right;"> 18 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:left;"> low </td> <td style="text-align:left;"> 1 </td> </tr> <tr> <td style="text-align:left;"> ID108 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 2 </td> <td style="text-align:left;"> low </td> <td style="text-align:left;"> 1 </td> </tr> <tr> <td style="text-align:left;"> ID109 </td> <td style="text-align:right;"> 17 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:left;"> low </td> <td style="text-align:left;"> 1 </td> </tr> </tbody> </table> ] .pull-right[ ``` ## hours hours_cat hours_catNum ## Min. : 0.00 low : 45 1: 45 ## 1st Qu.: 4.00 medium: 96 2: 96 ## Median : 9.00 high :109 3:109 ## Mean : 8.02 ## 3rd Qu.:12.00 ## Max. :15.00 ``` ] --- # Binary variable (recap) + A binary variable is a categorical variable with two levels + Traditionally coded as 0 and 1 + In `lm`, binary variables are often referred to as dummy variables + When we use multiple dummy variables, we call the general procedure dummy coding + Why 0 and 1? 
-- Quick version: It has some nice properties when it comes to interpretation -- > **Have a guess:** > What is the interpretation of the intercept if the predictor is binary? -- > What about the slope? --- class: center, middle # Questions? --- class: inverse, center, middle # Part 2: lm with a single binary predictor --- # Extending our example .pull-left[ + Our in-class example so far has used *test scores*, *revision time* and *motivation* + Let's say we also collected data on who they studied with (`study`): + 0 = alone; 1 = with others + And on which of three revision methods they used for the test (`method`) + 1 = Notes re-reading; 2 = Notes summarising; 3 = Self-testing ([see here](https://www.psychologicalscience.org/publications/journals/pspi/learning-techniques.html)) + We collect a new sample of 250 students ] .pull-right[ ``` r head(test_study3) %>% kable() ``` |ID | score| hours| motivation|study |method | |:-----|-----:|-----:|----------:|:------|:---------| |ID101 | 18| 1| -0.42|others |self-test | |ID102 | 36| 12| 0.15|alone |summarise | |ID103 | 15| 3| 0.35|alone |summarise | |ID104 | 29| 13| 0.16|others |summarise | |ID105 | 18| 9| -1.12|alone |read | |ID106 | 29| 12| -0.64|others |read | ] --- # LM with binary predictors + Now let's ask the question: + **Do students who study with others score better than students who study alone?** + Our equation looks familiar: `$$score_i = \beta_0 + \beta_1 study_{i} + \epsilon_i$$` + And in R: ``` r performance_study <- lm(score ~ study, data = test_study3) ``` --- # Model results ``` r summary(performance_study) ``` ``` ## ## Call: ## lm(formula = score ~ study, data = test_study3) ## ## Residuals: ## Min 1Q Median 3Q Max ## -22.7902 -4.7902 0.2098 5.4766 18.2098 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 22.7902 0.6603 34.52 < 2e-16 *** ## studyothers 4.7332 1.0093 4.69 4.53e-06 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 
0.1 ' ' 1 ## ## Residual standard error: 7.896 on 248 degrees of freedom ## Multiple R-squared: 0.08145, Adjusted R-squared: 0.07775 ## F-statistic: 21.99 on 1 and 248 DF, p-value: 4.525e-06 ``` --- # Interpretation .pull-left[ + As before, the intercept `\(\hat \beta_0\)` is the expected value of `\(y\)` when `\(x=0\)` + What is `\(x=0\)` here? + It is the students who study alone + So what about `\(\hat \beta_1\)`? + **Look at the output on the right hand side** + What do you notice about the difference in averages? + Let's look back at the summary results again... ] .pull-right[ ``` r test_study3 %>% group_by(study) %>% summarise( Average = round(mean(score),4) ) ``` ``` ## # A tibble: 2 × 2 ## study Average ## <fct> <dbl> ## 1 alone 22.8 ## 2 others 27.5 ``` ] --- # Model results .pull-left[ <!-- --> ] .pull-right[ ``` ## # A tibble: 2 × 2 ## study Average ## <fct> <dbl> ## 1 alone 22.8 ## 2 others 27.5 ``` ] --- # Interpretation + `\(\hat \beta_0\)` = expected value of `\(y\)` when `\(x = 0\)` + Or, the mean of the group coded 0 (those who study alone) + `\(\hat \beta_1\)` = estimated difference between the means of the two groups + Group 1 - Group 0 (mean `score` of those who study with others - mean `score` of those who study alone) + Notice how this maps to our question + Do students who study with others score better than students who study alone? --- class: center, middle # Questions? 
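---
# Check it yourself: binary predictor (sketch)

To check the interpretation of `\(\hat \beta_0\)` and `\(\hat \beta_1\)` on data you control, here is a minimal base-R sketch. It assumes simulated data: the data frame `sim`, its group sizes and its group means are hypothetical, not the course dataset `test_study3`.

``` r
# Hypothetical simulated data (NOT test_study3): 50 students per group
set.seed(1)
sim <- data.frame(
  # "alone" is the first factor level, so lm() codes it 0 (the baseline)
  study = factor(rep(c("alone", "others"), each = 50)),
  score = c(rnorm(50, mean = 23, sd = 8), rnorm(50, mean = 27.5, sd = 8))
)

fit <- lm(score ~ study, data = sim)

# Group means computed directly from the data
group_means <- tapply(sim$score, sim$study, mean)

# Intercept = mean of the group coded 0 ("alone")
all.equal(unname(coef(fit)["(Intercept)"]), unname(group_means["alone"]))  # TRUE

# Slope = mean of the group coded 1 minus mean of the group coded 0
all.equal(unname(coef(fit)["studyothers"]),
          unname(group_means["others"] - group_means["alone"]))          # TRUE
```

The numbers themselves depend on the random seed; the two `TRUE` results do not, because with a single 0/1 predictor the least-squares fit always passes through both group means.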
--- class: inverse, center, middle # Part 3: Prediction equations for groups & significance --- # Equations for each group <br> `$$\widehat{score} = \hat \beta_0 + \hat \beta_1 study$$` <br> + For those who study alone ( `\(study = 0\)` ): `$$\widehat{score}_{alone} = \hat \beta_0 + \hat \beta_1 \times 0$$` + So: `$$\widehat{score}_{alone} = \hat \beta_0$$` --- # Equations for each group + For those who study with others ( `\(study = 1\)` ): `$$\widehat{score}_{others} = \hat \beta_0 + \hat \beta_1 \times 1$$` + So: `$$\widehat{score}_{others} = \hat \beta_0 + \hat \beta_1$$` + And if we re-arrange: `$$\hat \beta_1 = \widehat{score}_{others} - \hat \beta_0$$` + Remembering that `\(\hat \beta_0 = \widehat{score}_{alone}\)`, we finally obtain: `$$\hat \beta_1 = \widehat{score}_{others} - \widehat{score}_{alone}$$` --- # Visualise the model .center[ <img src="dapr2_06_LMcategorical1_files/figure-html/unnamed-chunk-13-1.png" width="504" /> ] --- count: false # Visualise the model .center[ <img src="dapr2_06_LMcategorical1_files/figure-html/unnamed-chunk-14-1.png" width="504" /> ] --- count: false # Visualise the model .center[ <img src="dapr2_06_LMcategorical1_files/figure-html/unnamed-chunk-15-1.png" width="504" /> ] --- count: false # Visualise the model .center[ <img src="dapr2_06_LMcategorical1_files/figure-html/unnamed-chunk-16-1.png" width="504" /> ] --- # Evaluation of model and significance of `\(\beta_1\)` + `\(R^2\)` and `\(F\)`-ratio interpretation are identical to their interpretation in models with only continuous predictors + And we assess the significance of predictors in the same way + We calculate `\(\hat \beta_1\)` = difference between groups + `\(t\)`-value using the SE of `\(\hat \beta_1\)`, and associated `\(p\)`-value + Or a confidence interval around the coefficient --- # Hold on... 
it's a t-test ``` r t.test(score ~ study, var.equal = TRUE, data = test_study3) ``` ``` ## ## Two Sample t-test ## ## data: score by study ## t = -4.6895, df = 248, p-value = 4.525e-06 ## alternative hypothesis: true difference in means between group alone and group others is not equal to 0 ## 95 percent confidence interval: ## -6.721058 -2.745251 ## sample estimates: ## mean in group alone mean in group others ## 22.79021 27.52336 ``` + The *t*-value, *df* and *p*-value match the `lm` output; *t* is negative only because `t.test` computes the difference as `alone` minus `others` --- # Model results + **Test your understanding:** How do we interpret our results? .pull-left[ <!-- --> ] -- .pull-right[ Study habits significantly predicted student test scores, *F*(1, 248) = 21.99, *p* < .001. Study habits explained 8.15% of the variance in test scores. Specifically, students who studied with others (*M* = 27.52, *SD* = 7.94) scored significantly higher than students who studied alone (*M* = 22.79, *SD* = 7.86), `\(\hat \beta_1\)` = 4.73, *SE* = 1.01, *t* = 4.69, *p* < .001. ] --- class: center, middle # Questions? --- class: inverse, center, middle # Part 4: Categorical variables with >2 levels --- # Including categorical predictors with >2 levels in a regression + The goal when analysing categorical data with 2 or more levels is that each of our `\(\beta\)` coefficients represents a specific difference between means + e.g. 
When using a single binary predictor, `\(\beta_1\)` is the difference between the means of the two groups + To be able to do this when we have 2+ levels, we need to apply a **coding scheme** + Two common coding schemes are: + Dummy coding (which we will discuss now) + Effects coding (or sum to zero, which we will discuss next week) + There are LOTS of ways to do this; if curious, [see here](https://stats.oarc.ucla.edu/r/library/r-library-contrast-coding-systems-for-categorical-variables/) --- # Dummy coding + Dummy coding uses 0's and 1's to represent group membership + One level is chosen as a baseline + All other levels are compared against that baseline + Notice that each dummy variable is a binary variable, exactly like the one we have already discussed + Dummy coding is simply the process of producing a set of binary coded variables + For any categorical variable, we will create `\(k\)`-1 dummy variables + `\(k\)` = number of levels --- # Choosing a baseline? + Each level of your categorical predictor will be compared against the baseline + Good baseline levels could be: + The control group in an experiment + The group expected to have the lowest score on the outcome + The largest group + The baseline should not be: + A poorly defined level, e.g. an `Other` group + Much smaller than the other groups --- # Steps in dummy coding by hand 1. Choose a baseline level 2. Assign everyone in the baseline group `0` for all `\(k\)`-1 dummy variables 3. Assign everyone in the next group a `1` for the first dummy variable and a `0` for all the other dummy variables 4. Assign everyone in the following group a `1` for the second dummy variable and a `0` for all the other dummy variables 5. Repeat until all `\(k\)`-1 dummy variables have had 0's and 1's assigned 6. 
Enter the `\(k\)`-1 dummy variables into your regression --- # Dummy coding by hand .pull-left[ + We start out with a dataset that looks like: ``` ## ID score method ## 1 ID101 18 self-test ## 2 ID102 36 summarise ## 3 ID103 15 summarise ## 4 ID104 29 summarise ## 5 ID105 18 read ## 6 ID106 29 read ## 7 ID107 18 summarise ## 8 ID108 0 read ## 9 ID109 17 read ## 10 ID110 41 read ``` ] .pull-right[ + And end up with one that looks like: ``` ## ID score method method1 method2 ## 1 ID101 18 self-test 1 0 ## 2 ID102 36 summarise 0 1 ## 3 ID103 15 summarise 0 1 ## 4 ID104 29 summarise 0 1 ## 5 ID105 18 read 0 0 ## 6 ID106 29 read 0 0 ## 7 ID107 18 summarise 0 1 ## 8 ID108 0 read 0 0 ## 9 ID109 17 read 0 0 ## 10 ID110 41 read 0 0 ``` ] --- # Dummy coding by hand + We would then enter the dummy coded variables into a regression model: .pull-left[ ``` ## ID score method method1 method2 ## 1 ID101 18 self-test 1 0 ## 2 ID102 36 summarise 0 1 ## 3 ID103 15 summarise 0 1 ## 4 ID104 29 summarise 0 1 ## 5 ID105 18 read 0 0 ## 6 ID106 29 read 0 0 ## 7 ID107 18 summarise 0 1 ## 8 ID108 0 read 0 0 ## 9 ID109 17 read 0 0 ## 10 ID110 41 read 0 0 ``` ] .pull-right[ <!-- --> ] -- > **Test your understanding:** Which method is our baseline? > How does our model know to treat it as the baseline? --- # Dummy coding with `lm` + `lm` automatically applies dummy coding when you include a variable of class `factor` in a model. 
+ It uses the first level of the factor as the baseline group (by default, `factor()` orders levels alphabetically) + It represents this as a contrast matrix which looks like: ``` r contrasts(test_study3$method) ``` ``` ## self-test summarise ## read 0 0 ## self-test 1 0 ## summarise 0 1 ``` --- # Dummy coding with `lm` + `lm` does all the dummy coding work for us: .pull-left[ .center[**Dummy Coding in lm**] ``` r summary(lm(score ~ method, data = dummyCoded)) ``` <!-- --> ] .pull-right[ .center[**Dummy Coding by Hand**] ``` r summary(lm(score ~ method1 + method2, data = dummyCoded)) ``` <img src="figs/dcResults.png" width="90%" /> ] --- # Dummy coding with `lm` .pull-left[ + The intercept is the mean of the baseline group (re-reading) + The coefficient for `methodself-test` is the difference between the mean of the self-testing group and the mean of the baseline group (re-reading) + The coefficient for `methodsummarise` is the difference between the mean of the note summarising group and the mean of the baseline group (re-reading) ] .pull-right[ ``` r mod1 <- lm(score ~ method, data = test_study3) mod1 ``` ``` ## ## Call: ## lm(formula = score ~ method, data = test_study3) ## ## Coefficients: ## (Intercept) methodself-test methodsummarise ## 23.4138 4.1620 0.7821 ``` ``` ## # A tibble: 3 × 2 ## method Group_Mean ## <fct> <dbl> ## 1 read 23.4 ## 2 self-test 27.6 ## 3 summarise 24.2 ``` ] --- # Dummy coding with `lm` (full results) ``` r summary(mod1) ``` ``` ## ## Call: ## lm(formula = score ~ method, data = test_study3) ## ## Residuals: ## Min 1Q Median 3Q Max ## -23.4138 -5.3593 -0.1959 5.7496 17.8041 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 23.4138 0.8662 27.031 <2e-16 *** ## methodself-test 4.1620 1.3188 3.156 0.0018 ** ## methodsummarise 0.7821 1.1930 0.656 0.5127 ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 
0.1 ' ' 1 ## ## Residual standard error: 8.079 on 247 degrees of freedom ## Multiple R-squared: 0.04224, Adjusted R-squared: 0.03448 ## F-statistic: 5.447 on 2 and 247 DF, p-value: 0.004845 ``` --- # Changing the baseline group + The level that `lm` chooses as its baseline may not always be the best choice + You can change it using: ``` r contrasts(test_study3$method) <- contr.treatment(3, base = 2) ``` + `contrasts()` is updated, replacing the factor's default coding scheme with the new one + `contr.treatment` specifies that you want dummy coding + `3` is the number of levels of your predictor + `base = 2` is the position (in `levels()`) of your new baseline --- # Results using the new baseline .pull-left[ ``` r contrasts(test_study3$method) <- contr.treatment(3, base = 2) ``` + The intercept is now the mean of the second group (self-testing) + `method1` is now the difference between the re-reading and self-testing means (re-reading minus self-testing) + `method3` is now the difference between the summarising and self-testing means (summarising minus self-testing) ] .pull-right[ ``` r mod2 <- lm(score ~ method, data = test_study3) mod2 ``` ``` ## ## Call: ## lm(formula = score ~ method, data = test_study3) ## ## Coefficients: ## (Intercept) method1 method3 ## 27.576 -4.162 -3.380 ``` ``` ## # A tibble: 3 × 2 ## method Group_Mean ## <fct> <dbl> ## 1 read 23.4 ## 2 self-test 27.6 ## 3 summarise 24.2 ``` ] --- # New baseline (full results) ``` r summary(mod2) ``` ``` ## ## Call: ## lm(formula = score ~ method, data = test_study3) ## ## Residuals: ## Min 1Q Median 3Q Max ## -23.4138 -5.3593 -0.1959 5.7496 17.8041 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 27.5758 0.9945 27.729 < 2e-16 *** ## method1 -4.1620 1.3188 -3.156 0.00180 ** ## method3 -3.3799 1.2892 -2.622 0.00929 ** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 8.079 on 247 degrees of freedom ## Multiple R-squared: 0.04224, Adjusted R-squared: 0.03448 ## F-statistic: 5.447 on 2 and 247 DF, p-value: 0.004845 ``` ??? 
+ Note that the choice of baseline does not affect `\(R^2\)` or the `\(F\)`-ratio: the fitted group means are the same, only the parameterisation changes --- class: center, middle # Questions? --- class: inverse, center, middle # Part 5: Testing manual contrasts using emmeans --- # Using `emmeans` to test contrasts + With dummy coding, each non-baseline level is compared to the baseline + But what if we also want to compare two non-baseline levels to each other? + We will use the package `emmeans` to test our contrasts + We will also be using this in the next few weeks to look at analysing experimental designs + **E**stimated + **M**arginal + **Means** + The package provides tools for estimating group means from a fitted model and for testing contrasts (linear combinations) of those means --- # Working with `emmeans` + We already have our model: ``` r mod1 <- lm(score ~ method, data = test_study3) ``` + Next we use `emmeans` to get the estimated means of our groups ``` r library(emmeans) method_mean <- emmeans(mod1, ~method) method_mean ``` ``` ## method emmean SE df lower.CL upper.CL ## read 23.4 0.866 247 21.7 25.1 ## self-test 27.6 0.994 247 25.6 29.5 ## summarise 24.2 0.820 247 22.6 25.8 ## ## Confidence level used: 0.95 ``` --- # Visualise estimated means .pull-left[ ``` r plot(method_mean) ``` + We then use these means and standard errors to test contrasts (differences between levels) ] .pull-right[ <img src="dapr2_06_LMcategorical1_files/figure-html/unnamed-chunk-41-1.png" width="504" /> ] --- # Defining the contrast + **NOTE**: The order of the levels of your categorical variable matters, because `emmeans` matches the contrast weights to the levels in this order ``` r levels(test_study3$method) ``` ``` ## [1] "read" "self-test" "summarise" ``` + For each pairwise comparison, assign `1` to the level you are interested in, `-1` to the level you want to compare it to, and `0` to any level not involved ``` r method_comp <- list("Self-Testing vs Reading" = c(-1, 1, 0), "Summarising vs Reading" = c(-1, 0, 1), "Self-Testing vs Summarising" = c(0, 1, -1)) ``` -- + The estimate for your `-1` level will be subtracted from your `1` level to obtain the difference between the levels + We will 
then look at the significance of that difference --- # Requesting the test + To test our contrasts, we use the `contrast` function from `emmeans` ``` r method_comp_test <- contrast(method_mean, method_comp) method_comp_test ``` ``` ## contrast estimate SE df t.ratio p.value ## Self-Testing vs Reading 4.162 1.32 247 3.156 0.0018 ## Summarising vs Reading 0.782 1.19 247 0.656 0.5127 ## Self-Testing vs Summarising 3.380 1.29 247 2.622 0.0093 ``` -- + We can see we have p-values, but we can also request confidence intervals ``` r confint(method_comp_test) ``` ``` ## contrast estimate SE df lower.CL upper.CL ## Self-Testing vs Reading 4.162 1.32 247 1.564 6.76 ## Summarising vs Reading 0.782 1.19 247 -1.568 3.13 ## Self-Testing vs Summarising 3.380 1.29 247 0.841 5.92 ## ## Confidence level used: 0.95 ``` --- # Interpreting the results + The estimate is the difference between the two group means in each comparison (the mean of the level weighted `1` minus the mean of the level weighted `-1`) .pull-left[ ``` r method_comp_test ``` <!-- --> ] .pull-right[ ``` r method_mean ``` <img src="figs/contrastMeans.png" width="75%" /> ] + So for `Self-Testing vs Summarising`: ``` r 27.6 - 24.2 ``` ``` ## [1] 3.4 ``` + (3.380 in the `emmeans` output, which uses the unrounded means) + Those who test themselves on the content of their notes have higher scores than those who summarise their notes + And this difference is statistically significant (*p* = .009) --- class: center, middle # Questions? --- # Key points from this week + When we have categorical predictors, we are modelling the means of groups + When the categorical predictor has more than two levels, we can represent it with dummy-coded binary variables + We have `\(k\)`-1 dummy variables, where `\(k\)` = number of levels (groups/categories) + Each dummy variable slope represents the difference between the mean of the group scored 1 and the mean of the reference group + In R, we need to make sure... 
+ R recognises the variable as a factor (`factor()`), and + We have the correct reference level (`contr.treatment()`) + We also looked at the use of `emmeans` to test specific contrasts + Run the model + Estimate the means + Define the contrast + Test the contrast --- ## This week .pull-left[ ### Tasks <img src="figs/labs.svg" width="10%" /> **Attend your lab and work together on the exercises** <br> <img src="figs/exam.svg" width="10%" /> **Complete the weekly quiz** ] .pull-right[ ### Support <img src="figs/forum.svg" width="10%" /> **Help each other on the Piazza forum** <br> <img src="figs/oh.png" width="10%" /> **Attend office hours (see Learn page for details)** ] --- class: inverse, center, middle # Thanks for listening
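---
# Check it yourself: dummy coding and contrasts (sketch)

The dummy coding, baseline change and pairwise contrast from Parts 4 and 5 can also be checked by hand. Below is a minimal base-R sketch under stated assumptions: `sim3` is hypothetical simulated data whose level names mirror `method` in the slides, the hand-made dummies `d_selftest` and `d_summarise` are illustrative names, and the contrast is computed directly from the coefficients and their covariance matrix (`vcov()`) rather than with `emmeans`, so it illustrates the kind of estimate, SE and *t*-ratio that `contrast()` reports but is not the `emmeans` implementation.

``` r
# Hypothetical simulated data (NOT test_study3); level names mirror the slides
set.seed(2)
sim3 <- data.frame(
  method = factor(rep(c("read", "self-test", "summarise"), times = c(40, 35, 45))),
  score  = c(rnorm(40, 23, 8), rnorm(35, 27.5, 8), rnorm(45, 24, 8))
)
means <- tapply(sim3$score, sim3$method, mean)   # observed group means

# 1. Dummy coding by hand: k - 1 = 2 binary variables, "read" is the baseline
sim3$d_selftest  <- ifelse(sim3$method == "self-test", 1, 0)
sim3$d_summarise <- ifelse(sim3$method == "summarise", 1, 0)

fit_factor <- lm(score ~ method, data = sim3)                    # R codes the factor
fit_hand   <- lm(score ~ d_selftest + d_summarise, data = sim3)  # we coded it
all.equal(unname(coef(fit_factor)), unname(coef(fit_hand)))      # TRUE

# 2. Make level 2 ("self-test") the baseline: coefficients change, R^2 does not
sim3_b <- sim3
contrasts(sim3_b$method) <- contr.treatment(3, base = 2)
fit_base2 <- lm(score ~ method, data = sim3_b)
all.equal(unname(coef(fit_base2)[1]), unname(means["self-test"]))        # TRUE
all.equal(summary(fit_factor)$r.squared, summary(fit_base2)$r.squared)   # TRUE

# 3. Pairwise contrast "self-test vs summarise" from the read-baseline model.
# Group means are b0 (read), b0 + b1 (self-test) and b0 + b2 (summarise), so
# self-test - summarise = b1 - b2, i.e. weights (0, 1, -1) on (b0, b1, b2)
b <- coef(fit_factor)
V <- vcov(fit_factor)
w <- c(0, 1, -1)
est <- sum(w * b)
se  <- sqrt(drop(t(w) %*% V %*% w))
t_ratio <- est / se
p_value <- 2 * pt(abs(t_ratio), df = df.residual(fit_factor), lower.tail = FALSE)
c(estimate = est, SE = se, t.ratio = t_ratio, p.value = p_value)

# Cross-check: with "summarise" as the baseline, the self-test coefficient
# (method2) is the same comparison, with the same SE
sim3_c <- sim3
contrasts(sim3_c$method) <- contr.treatment(3, base = 3)
fit_base3 <- lm(score ~ method, data = sim3_c)
coef(summary(fit_base3))["method2", c("Estimate", "Std. Error")]
```

The cross-check illustrates why a manual contrast is just a reparameterisation: refitting with a different baseline and reading off one coefficient gives the same estimate and standard error as combining the original coefficients.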