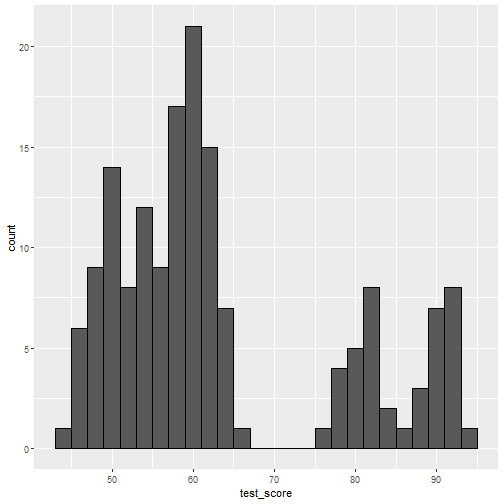

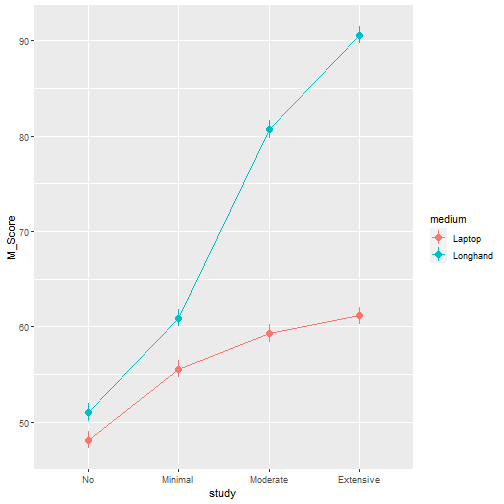

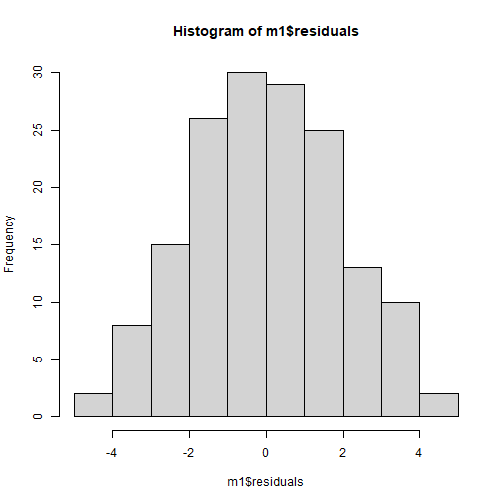

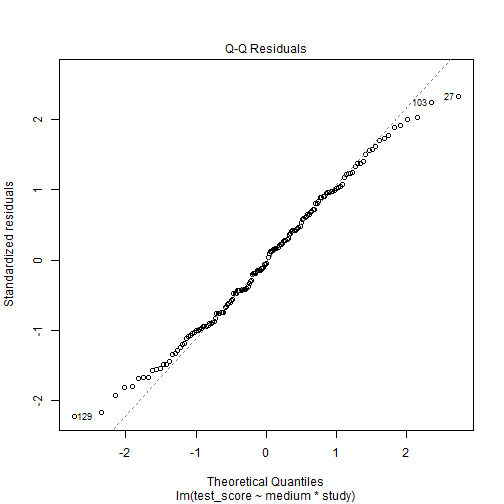

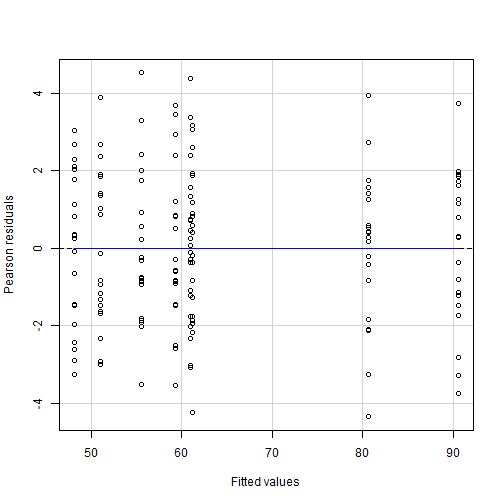

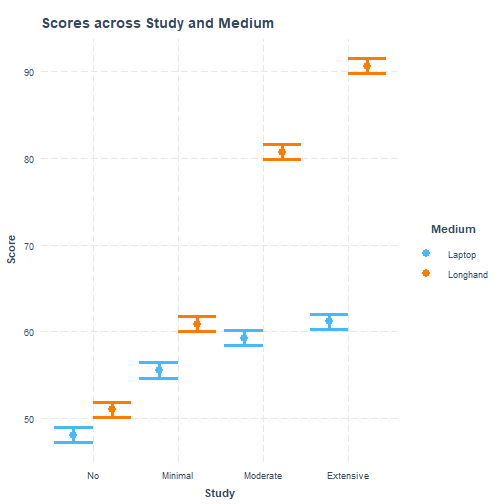

class: center, middle, inverse, title-slide .title[ # <b> Interaction Analysis </b> ] .subtitle[ ## Data Analysis for Psychology in R 2<br><br> ] .author[ ### dapR2 Team ] .institute[ ### Department of Psychology<br>The University of Edinburgh ] --- # Overview of the Week This week, we'll be reviewing what we've learned in weeks 1-4 and applying it to a practical example. Specifically, we'll cover: 1. Interpetation of an interaction model with categorical variables. 2. Multiple pairwise comparisons with corrections. --- ### Our example is based on the paper * Mueller, P. A., & Oppenheimer, D. M. (2014). The pen is mightier than the keyboard: Advantages of longhand over laptop note taking. *Psychological Science, 25*(6), 1159–1168. [https://doi.org/10.1177/0956797614524581](https://doi.org/10.1177/0956797614524581) In the current study, participants were invited to take part in a study investigating the medium of note taking and study time on test scores. The sample comprised of 160 students who took notes on a lecture via one of two mediums - either on a laptop or longhand (i.e., using pen and paper). After watching the lecture and taking notes, they were randomly allocated to one of four study time conditions, either engaging in no, minimal, moderate, or extensive study of the notes taken on their assigned medium. After engaging in study for their allocated time, participants took a test on the lecture content. The test involved a series of questions, where participants could score a maximum of 100 points. --- ### Research Aim + Explore the associations among study time and note-taking medium on test scores. ### Research Questions 1. Do differences in test scores between study conditions differ by the note-taking medium used? 2. Explore the differences between each pair of levels of each factor to determine which conditions significantly differ from each other. --- ### Setup Loading all the necessary packages and the data: ```r library(tidyverse) library(emmeans) library(kableExtra) library(car) library(sjPlot) library(interactions) data <- read_csv('laptop_vs_longhand.csv') ``` --- ### Checking the Data Looking at our data using the `summary` function: ```r data %>% summary(.) ``` ``` ## test_score medium study ## Min. :44.86 Length:160 Length:160 ## 1st Qu.:53.63 Class :character Class :character ## Median :59.27 Mode :character Mode :character ## Mean :63.42 ## 3rd Qu.:68.05 ## Max. :94.32 ``` --- ### Checking the Data You'll notice that we have a continuous outcome variable, `test_score`, and two categorical predictor variables, `medium`, `study`. We need to make `medium` and `study` factors: ```r data$medium <- as_factor(data$medium) data$study <- as_factor(data$study) summary(data) ``` ``` ## test_score medium study ## Min. :44.86 Laptop :80 No :40 ## 1st Qu.:53.63 Longhand:80 Minimal :40 ## Median :59.27 Moderate :40 ## Mean :63.42 Extensive:40 ## 3rd Qu.:68.05 ## Max. :94.32 ``` --- ### Next, looking at our continuous outcome (test scores) distribution and bar plots for factors (study and medium): --- ```r ggplot(data, aes(test_score)) + geom_histogram(colour = 'black', binwidth = 2) ``` <!-- --> --- ```r ggplot(data = data, aes(medium, fill = medium)) + geom_bar() + theme(legend.position = 'none') ``` <!-- --> --- ```r ggplot(data = data, aes(study, fill = study)) + geom_bar() + theme(legend.position = 'none') ``` <!-- --> --- ### Data description ```r descript <- data %>% group_by(study, medium) %>% summarise( M_Score = round(mean(test_score), 2), SD_Score = round(sd(test_score), 2), SE_Score = round(sd(test_score)/sqrt(n()), 2), Min_Score = round(min(test_score), 2), Max_Score = round(max(test_score), 2) ) ``` ``` ## `summarise()` has grouped output by 'study'. You can override using the ## `.groups` argument. ``` --- ### Data description ```r descript ``` ``` ## # A tibble: 8 × 7 ## # Groups: study [4] ## study medium M_Score SD_Score SE_Score Min_Score Max_Score ## <fct> <fct> <dbl> <dbl> <dbl> <dbl> <dbl> ## 1 No Laptop 48.1 2 0.45 44.9 51.2 ## 2 No Longhand 51.0 2 0.45 48.0 54.9 ## 3 Minimal Laptop 55.6 2 0.45 52.1 60.1 ## 4 Minimal Longhand 60.9 2 0.45 57.8 65.3 ## 5 Moderate Laptop 59.3 2 0.45 55.8 63.0 ## 6 Moderate Longhand 80.7 2 0.45 76.3 84.6 ## 7 Extensive Laptop 61.2 2 0.45 56.9 64.3 ## 8 Extensive Longhand 90.6 2 0.45 86.8 94.3 ``` --- ### Table of means <table> <thead> <tr> <th style="text-align:left;"> </th> <th style="text-align:left;"> Laptop </th> <th style="text-align:left;"> Longhand </th> </tr> </thead> <tbody> <tr> <td style="text-align:left;"> No Study </td> <td style="text-align:left;"> 48.12 </td> <td style="text-align:left;"> 51.02 </td> </tr> <tr> <td style="text-align:left;"> Minimal </td> <td style="text-align:left;"> 55.57 </td> <td style="text-align:left;"> 60.91 </td> </tr> <tr> <td style="text-align:left;"> Moderate </td> <td style="text-align:left;"> 59.30 </td> <td style="text-align:left;"> 80.69 </td> </tr> <tr> <td style="text-align:left;"> Extensive </td> <td style="text-align:left;"> 61.16 </td> <td style="text-align:left;"> 90.58 </td> </tr> </tbody> </table> --- ### All variables in a plot with mean scores for each condition: --- ```r p2 <- ggplot(descript, aes(x = study, y = M_Score, color = medium)) + geom_point(size = 3) + geom_linerange(aes(ymin = M_Score - 2 * SE_Score, ymax = M_Score + 2 * SE_Score)) + geom_path(aes(x = as.numeric(study))) p2 ``` <!-- --> --- ### Investigating RQ 1: Dummy Coding + The first research question we've specified, *"Do differences in test scores between study conditions differ by the note-taking medium used?"* + Before we run our model, we have to make a few decisions: coding and baseline comparisons to make across predictors + Dummy coding: `Laptop` as the baseline level for `medium` and `No` as the baseline level for `study` + We can use the `fct_relevel` function to order our levels accordingly: ```r data$medium <- fct_relevel(data$medium , "Laptop") data$study <- fct_relevel(data$study , "No", "Minimal", "Moderate", "Extensive") summary(data) ``` ``` ## test_score medium study ## Min. :44.86 Laptop :80 No :40 ## 1st Qu.:53.63 Longhand:80 Minimal :40 ## Median :59.27 Moderate :40 ## Mean :63.42 Extensive:40 ## 3rd Qu.:68.05 ## Max. :94.32 ``` --- ### Investigating RQ 1 Given the first research question we've specified, *"Do differences in test scores between study conditions differ by the note-taking medium used?"*, we'll use the following model: $$ `\begin{align} \text{Test Score} &= \beta_0 \\ &+ \beta_1 \cdot M_\text{Longhand} \\ &+ \beta_2 \cdot S_\text{Minimal} \\ &+ \beta_3 \cdot S_\text{Moderate} \\ &+ \beta_4 \cdot S_\text{Extensive} \\ &+ \beta_5 \cdot (M_\text{Longhand} \cdot S_\text{Minimal}) \\ &+ \beta_6 \cdot (M_\text{Longhand} \cdot S_\text{Moderate}) \\ &+ \beta_7 \cdot (M_\text{Longhand} \cdot S_\text{Extensive}) \\ &+ \epsilon \end{align}` $$ + Please note, the model, as specified here, is run as **medium*study** in R: + m1 <- lm(test_score ~ medium*study, data = data) --- ### Running our model: ```r m1 <- lm(test_score ~ medium*study, data = data) summary(m1) ``` ``` ## ## Call: ## lm(formula = test_score ~ medium * study, data = data) ## ## Residuals: ## Min 1Q Median 3Q Max ## -4.3485 -1.4764 -0.1018 1.4039 4.5321 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 48.1200 0.4472 107.600 < 2e-16 *** ## mediumLonghand 2.9000 0.6325 4.585 9.41e-06 *** ## studyMinimal 7.4500 0.6325 11.779 < 2e-16 *** ## studyModerate 11.1800 0.6325 17.677 < 2e-16 *** ## studyExtensive 13.0400 0.6325 20.618 < 2e-16 *** ## mediumLonghand:studyMinimal 2.4400 0.8944 2.728 0.00712 ** ## mediumLonghand:studyModerate 18.4900 0.8944 20.672 < 2e-16 *** ## mediumLonghand:studyExtensive 26.5200 0.8944 29.650 < 2e-16 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 2 on 152 degrees of freedom ## Multiple R-squared: 0.9803, Adjusted R-squared: 0.9794 ## F-statistic: 1081 on 7 and 152 DF, p-value: < 2.2e-16 ``` --- ### Checking assumptions before interpreting the model #### Linearity We can assume linearity when working with categorical predictors (see [here](https://www.bookdown.org/rwnahhas/RMPH/mlr-linearity.html)) #### Independence of Errors We are using between-subjects data, so we'll also assume independence of our error terms. --- #### Normality of Residuals (histogram) ```r hist(m1$residuals) ``` <!-- --> --- #### Normality of Residuals (QQ plots) ```r plot(m1, which = 2) ``` <!-- --> --- #### Equality of Variance (Homoskedasticity) Checkin for heteroskedasticity using residuals vs predicted values plots: `residualPlot` from the `car` package ```r residualPlot(m1) ``` <!-- --> --- ```r summary(m1) ``` ``` ## ## Call: ## lm(formula = test_score ~ medium * study, data = data) ## ## Residuals: ## Min 1Q Median 3Q Max ## -4.3485 -1.4764 -0.1018 1.4039 4.5321 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 48.1200 0.4472 107.600 < 2e-16 *** ## mediumLonghand 2.9000 0.6325 4.585 9.41e-06 *** ## studyMinimal 7.4500 0.6325 11.779 < 2e-16 *** ## studyModerate 11.1800 0.6325 17.677 < 2e-16 *** ## studyExtensive 13.0400 0.6325 20.618 < 2e-16 *** ## mediumLonghand:studyMinimal 2.4400 0.8944 2.728 0.00712 ** ## mediumLonghand:studyModerate 18.4900 0.8944 20.672 < 2e-16 *** ## mediumLonghand:studyExtensive 26.5200 0.8944 29.650 < 2e-16 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 2 on 152 degrees of freedom ## Multiple R-squared: 0.9803, Adjusted R-squared: 0.9794 ## F-statistic: 1081 on 7 and 152 DF, p-value: < 2.2e-16 ``` --- ### Interpretation with dummy coding .pull-left[ ``` ## Estimate Std. Error ## (Intercept) 48.12 0.45 ## mediumLonghand 2.90 0.63 ## studyMinimal 7.45 0.63 ## studyModerate 11.18 0.63 ## studyExtensive 13.04 0.63 ## mediumLonghand:studyMinimal 2.44 0.89 ## mediumLonghand:studyModerate 18.49 0.89 ## mediumLonghand:studyExtensive 26.52 0.89 ``` ] .pull-right[ <table> <thead> <tr> <th style="text-align:left;"> </th> <th style="text-align:left;"> Laptop </th> <th style="text-align:left;"> Longhand </th> </tr> </thead> <tbody> <tr> <td style="text-align:left;"> No Study </td> <td style="text-align:left;"> 48.12 </td> <td style="text-align:left;"> 51.02 </td> </tr> <tr> <td style="text-align:left;"> Minimal </td> <td style="text-align:left;"> 55.57 </td> <td style="text-align:left;"> 60.91 </td> </tr> <tr> <td style="text-align:left;"> Moderate </td> <td style="text-align:left;"> 59.30 </td> <td style="text-align:left;"> 80.69 </td> </tr> <tr> <td style="text-align:left;"> Extensive </td> <td style="text-align:left;"> 61.16 </td> <td style="text-align:left;"> 90.58 </td> </tr> </tbody> </table> ] + **Laptop** is the reference level in **medium** + **No** is the reference level in **study** + main conditional effects betas: the differences in means with the reference groups + interaction betas: differences in differences --- ```r plt_m1 <- cat_plot(model = m1, pred = study, modx = medium, main.title = "Scores across Study and Medium", x.label = "Study", y.label = "Score", legend.main = "Medium") plt_m1 ``` <!-- --> --- ### Investigating Research Question 2 + *Explore the differences between each pair of levels of each factor to determine which conditions significantly differ from each other.* + Pairwise comparisons with Tukey corrections ```r m1_emm <- emmeans(m1, ~study*medium) pairs_res <- pairs(m1_emm) ``` --- ```r pairs_res ``` ``` ## contrast estimate SE df t.ratio p.value ## No Laptop - Minimal Laptop -7.45 0.632 152 -11.779 <.0001 ## No Laptop - Moderate Laptop -11.18 0.632 152 -17.677 <.0001 ## No Laptop - Extensive Laptop -13.04 0.632 152 -20.618 <.0001 ## No Laptop - No Longhand -2.90 0.632 152 -4.585 0.0002 ## No Laptop - Minimal Longhand -12.79 0.632 152 -20.223 <.0001 ## No Laptop - Moderate Longhand -32.57 0.632 152 -51.498 <.0001 ## No Laptop - Extensive Longhand -42.46 0.632 152 -67.135 <.0001 ## Minimal Laptop - Moderate Laptop -3.73 0.632 152 -5.898 <.0001 ## Minimal Laptop - Extensive Laptop -5.59 0.632 152 -8.839 <.0001 ## Minimal Laptop - No Longhand 4.55 0.632 152 7.194 <.0001 ## Minimal Laptop - Minimal Longhand -5.34 0.632 152 -8.443 <.0001 ## Minimal Laptop - Moderate Longhand -25.12 0.632 152 -39.718 <.0001 ## Minimal Laptop - Extensive Longhand -35.01 0.632 152 -55.356 <.0001 ## Moderate Laptop - Extensive Laptop -1.86 0.632 152 -2.941 0.0717 ## Moderate Laptop - No Longhand 8.28 0.632 152 13.092 <.0001 ## Moderate Laptop - Minimal Longhand -1.61 0.632 152 -2.546 0.1847 ## Moderate Laptop - Moderate Longhand -21.39 0.632 152 -33.821 <.0001 ## Moderate Laptop - Extensive Longhand -31.28 0.632 152 -49.458 <.0001 ## Extensive Laptop - No Longhand 10.14 0.632 152 16.033 <.0001 ## Extensive Laptop - Minimal Longhand 0.25 0.632 152 0.395 0.9999 ## Extensive Laptop - Moderate Longhand -19.53 0.632 152 -30.880 <.0001 ## Extensive Laptop - Extensive Longhand -29.42 0.632 152 -46.517 <.0001 ## No Longhand - Minimal Longhand -9.89 0.632 152 -15.637 <.0001 ## No Longhand - Moderate Longhand -29.67 0.632 152 -46.912 <.0001 ## No Longhand - Extensive Longhand -39.56 0.632 152 -62.550 <.0001 ## Minimal Longhand - Moderate Longhand -19.78 0.632 152 -31.275 <.0001 ## Minimal Longhand - Extensive Longhand -29.67 0.632 152 -46.912 <.0001 ## Moderate Longhand - Extensive Longhand -9.89 0.632 152 -15.637 <.0001 ## ## P value adjustment: tukey method for comparing a family of 8 estimates ``` --- ### Modeling with effects coding + Recall how we ordered the levels of our factors for dummy coding ```r data$medium <- fct_relevel(data$medium , "Laptop") data$study <- fct_relevel(data$study , "No", "Minimal", "Moderate", "Extensive") ``` --- ### Run the model with the same order of levels using **effects coding** ```r m_effc <- lm(test_score ~ medium*study, data = data, contrasts = list(medium = contr.sum, study = contr.sum)) ``` --- ```r summary(m_effc) ``` ``` ## ## Call: ## lm(formula = test_score ~ medium * study, data = data, contrasts = list(medium = contr.sum, ## study = contr.sum)) ## ## Residuals: ## Min 1Q Median 3Q Max ## -4.3485 -1.4764 -0.1018 1.4039 4.5321 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 63.4187 0.1581 401.10 <2e-16 *** ## medium1 -7.3812 0.1581 -46.68 <2e-16 *** ## study1 -13.8488 0.2739 -50.57 <2e-16 *** ## study2 -5.1787 0.2739 -18.91 <2e-16 *** ## study3 6.5762 0.2739 24.01 <2e-16 *** ## medium1:study1 5.9312 0.2739 21.66 <2e-16 *** ## medium1:study2 4.7113 0.2739 17.20 <2e-16 *** ## medium1:study3 -3.3138 0.2739 -12.10 <2e-16 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 2 on 152 degrees of freedom ## Multiple R-squared: 0.9803, Adjusted R-squared: 0.9794 ## F-statistic: 1081 on 7 and 152 DF, p-value: < 2.2e-16 ``` --- ### Interpretation with effects coding + Please see *"s2 w5 calculations sheet.xlsx"* file on Learn --- ### Recoding levels of interest + What if we are more interested in the **Extensive** level of the **study** factor rather than in **No** + And in the **Longhand** level of **medium** rather than in **Laptop** + Recode our levels as follows: ```r data$medium <- fct_relevel(data$medium , "Longhand") data$study <- fct_relevel(data$study , "Minimal", "Moderate", "Extensive", "No") ``` --- ### Re-running the model ```r m_effc2 <- lm(test_score ~ medium*study, data = data, contrasts = list(medium = contr.sum, study = contr.sum)) ``` --- ```r summary(m_effc2) ``` ``` ## ## Call: ## lm(formula = test_score ~ medium * study, data = data, contrasts = list(medium = contr.sum, ## study = contr.sum)) ## ## Residuals: ## Min 1Q Median 3Q Max ## -4.3485 -1.4764 -0.1018 1.4039 4.5321 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 63.4187 0.1581 401.10 <2e-16 *** ## medium1 7.3812 0.1581 46.68 <2e-16 *** ## study1 -5.1787 0.2739 -18.91 <2e-16 *** ## study2 6.5762 0.2739 24.01 <2e-16 *** ## study3 12.4513 0.2739 45.47 <2e-16 *** ## medium1:study1 -4.7113 0.2739 -17.20 <2e-16 *** ## medium1:study2 3.3138 0.2739 12.10 <2e-16 *** ## medium1:study3 7.3287 0.2739 26.76 <2e-16 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 2 on 152 degrees of freedom ## Multiple R-squared: 0.9803, Adjusted R-squared: 0.9794 ## F-statistic: 1081 on 7 and 152 DF, p-value: < 2.2e-16 ``` ### Pairwise comparisons with Tukey corrections ```r m_effc2_emm <- emmeans(m_effc2, ~study*medium) pairs_res2 <- pairs(m_effc2_emm) ``` --- ```r pairs_res2 ``` ``` ## contrast estimate SE df t.ratio p.value ## Minimal Longhand - Moderate Longhand -19.78 0.632 152 -31.275 <.0001 ## Minimal Longhand - Extensive Longhand -29.67 0.632 152 -46.912 <.0001 ## Minimal Longhand - No Longhand 9.89 0.632 152 15.637 <.0001 ## Minimal Longhand - Minimal Laptop 5.34 0.632 152 8.443 <.0001 ## Minimal Longhand - Moderate Laptop 1.61 0.632 152 2.546 0.1847 ## Minimal Longhand - Extensive Laptop -0.25 0.632 152 -0.395 0.9999 ## Minimal Longhand - No Laptop 12.79 0.632 152 20.223 <.0001 ## Moderate Longhand - Extensive Longhand -9.89 0.632 152 -15.637 <.0001 ## Moderate Longhand - No Longhand 29.67 0.632 152 46.912 <.0001 ## Moderate Longhand - Minimal Laptop 25.12 0.632 152 39.718 <.0001 ## Moderate Longhand - Moderate Laptop 21.39 0.632 152 33.821 <.0001 ## Moderate Longhand - Extensive Laptop 19.53 0.632 152 30.880 <.0001 ## Moderate Longhand - No Laptop 32.57 0.632 152 51.498 <.0001 ## Extensive Longhand - No Longhand 39.56 0.632 152 62.550 <.0001 ## Extensive Longhand - Minimal Laptop 35.01 0.632 152 55.356 <.0001 ## Extensive Longhand - Moderate Laptop 31.28 0.632 152 49.458 <.0001 ## Extensive Longhand - Extensive Laptop 29.42 0.632 152 46.517 <.0001 ## Extensive Longhand - No Laptop 42.46 0.632 152 67.135 <.0001 ## No Longhand - Minimal Laptop -4.55 0.632 152 -7.194 <.0001 ## No Longhand - Moderate Laptop -8.28 0.632 152 -13.092 <.0001 ## No Longhand - Extensive Laptop -10.14 0.632 152 -16.033 <.0001 ## No Longhand - No Laptop 2.90 0.632 152 4.585 0.0002 ## Minimal Laptop - Moderate Laptop -3.73 0.632 152 -5.898 <.0001 ## Minimal Laptop - Extensive Laptop -5.59 0.632 152 -8.839 <.0001 ## Minimal Laptop - No Laptop 7.45 0.632 152 11.779 <.0001 ## Moderate Laptop - Extensive Laptop -1.86 0.632 152 -2.941 0.0717 ## Moderate Laptop - No Laptop 11.18 0.632 152 17.677 <.0001 ## Extensive Laptop - No Laptop 13.04 0.632 152 20.618 <.0001 ## ## P value adjustment: tukey method for comparing a family of 8 estimates ``` --- ### Summary + Investigating interactions in a 4x2 data set + Coding factors with dummy coding + Running a linear model + Checking assumptions + Interpreting the model with dummy coding + Coding factors with effects coding + Re-coding the same factors with different levels of interest + Investigating pairwise comparisons with Tukey corrections --- class: center, middle # Thanks for listening and interacting!