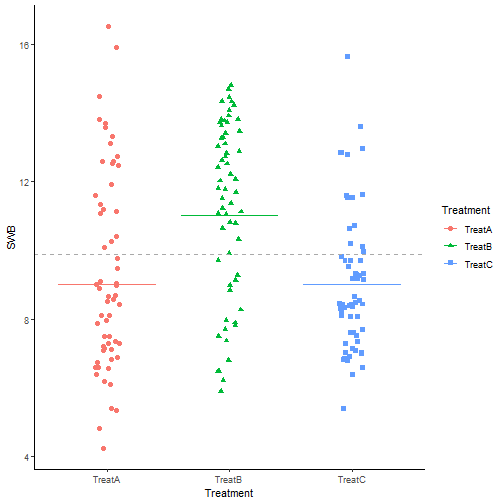

class: center, middle, inverse, title-slide .title[ # <b> Categorical Predictors: Effects (sum to zero) coding </b> ] .subtitle[ ## Data Analysis for Psychology in R 2<br><br> ] .author[ ### dapR2 Team ] .institute[ ### Department of Psychology<br>The University of Edinburgh ] --- # Weeks Learning Objectives 1. Understand the difference between dummy and sum-to-zero coding. 2. Understand the core principle of different coding schemes. 3. Interpret the output from a model using sum-to-zero coding. --- # Overview of next 3 weeks + The next few weeks will focus on how we can work with linear models with categorical predictors to test different questions. + In particular, we will look at the type of questions typically asked as part of experiments. + We will see that the tools we saw in discussing model comparisons will be important. + As well the principles of categorical data coding discussed when talking about binary and dummy variables. + We are going to generalise some of these concepts, and then show how we can use the general principles to test questions. --- # `Hospital` & `Treatment` data + **Condition 1**: `Treatment` (Levels: TreatA, TreatB, TreatC). + **Condition 2**: `Hospital` (Levels: Hosp1, Hosp2). + Total sample n = 180 (30 patients in each of 6 groups). + Between person design. + **Outcome**: Subjective well-being (`SWB`) + An average of multiple raters (the patient, a member of their family, and a friend). + SWB score ranged from 0 to 20. --- # Recap on dummy coding (reference group) + Create `\(k\)`-1 dummy variables/contrasts + where `\(k\)` is the number of levels of the categorical predictor. + Assign reference group 0 on all dummies. + Assign 1 to the focal group for a particular dummy. + Enter the dummies into the linear model and they code the difference in means between the focal group/level and the reference. --- # Model results ```r summary(lm(SWB ~ Treatment + Hospital + Treatment*Hospital, data = hosp_tbl)) ``` ``` ## ## Call: ## lm(formula = SWB ~ Treatment + Hospital + Treatment * Hospital, ## data = hosp_tbl) ## ## Residuals: ## Min 1Q Median 3Q Max ## -6.6000 -1.2533 0.1083 1.2650 5.7000 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 10.8000 0.3699 29.195 < 2e-16 *** ## TreatmentTreatB -1.3700 0.5232 -2.619 0.0096 ** ## TreatmentTreatC -0.6967 0.5232 -1.332 0.1847 ## HospitalHosp2 -2.9467 0.5232 -5.632 7.02e-08 *** ## TreatmentTreatB:HospitalHosp2 6.6333 0.7399 8.966 4.74e-16 *** ## TreatmentTreatC:HospitalHosp2 0.8233 0.7399 1.113 0.2673 ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 2.026 on 174 degrees of freedom ## Multiple R-squared: 0.4476, Adjusted R-squared: 0.4317 ## F-statistic: 28.2 on 5 and 174 DF, p-value: < 2.2e-16 ``` --- class: center, middle # Principle behind model constraints --- # Why do we need a reference group? + Consider our example and dummy coding. + We have three groups each given a specific Treatment A, B or C + We want a model that represents our data (observations), but all we "know" is what group an observation belongs to. So; `$$y_{ij} = \mu_i + \epsilon_{ij}$$` + Where + `\(y_{ij}\)` are the individual observations + `\(\mu_i\)` is the mean of group `\(i\)` and + `\(\epsilon_{ij}\)` is the individual deviation from that mean. ??? + And this hopefully makes sense. + Given we know someone's group, our best guess is the mean + But people wont all score the mean, so there is some deviation for every person. --- # Why do we need a reference group? + An alternative way to present this idea looks much more like our linear model: `$$y_{ij} = \beta_0 + \underbrace{(\mu_{i} - \beta_0)}_{\beta_i} + \epsilon_{ij}$$` + Where + `\(y_{ij}\)` are the individual observations + `\(\beta_0\)` is an estimate of reference/overall average + `\(\mu_i\)` is the mean of group `\(i\)` + `\(\beta_1\)` is the difference between the reference and the mean of group `\(i\)`, and + `\(\epsilon_{ij}\)` is the individual deviation from that mean. --- # Why do we need a reference group? + We can write this equation more generally as: $$\mu_i = \beta_0 + \beta_i $$ + or for the specific groups (in our case 3): `$$\mu_{treatmentA} = \beta_0 + \beta_{1A}$$` `$$\mu_{treatmentB} = \beta_0 + \beta_{2B}$$` `$$\mu_{treatmentC} = \beta_0 + \beta_{3C}$$` + **The problem**: we have four parameters ( `\(\beta_0\)` , `\(\beta_{1A}\)` , `\(\beta_{2B}\)` , `\(\beta_{3C}\)` ) to model three group means ( `\(\mu_{TreatmentA}\)` , `\(\mu_{TreatmentB}\)` , `\(\mu_{TreatmentC}\)` ) + We are trying to estimate too much with too little. + This is referred to as under-identification. + We need to estimate at least 1 parameter less --- # Constraints fix identification + Consider dummy coding. + Suppose we make Treatment A the reference. Then, `$$\mu_{treatmentA} = \beta_0$$` `$$\mu_{treatmentB} = \beta_0 + \beta_{1B}$$` `$$\mu_{treatmentC} = \beta_0 + \beta_{2C}$$` + **Fixed** ! + We now only have three parameters ( `\(\beta_0\)` , `\(\beta_{1B}\)` , `\(\beta_{2C}\)` ) for the three group means ( `\(\mu_{TreatmentA}\)` , `\(\mu_{TreatmentB}\)` , `\(\mu_{TreatmentC}\)` ). > So when we code categorical variables, we need a constraint so that we can estimate our models. --- class: center, middle # Any questions? --- # Why not always use dummy coding? + We might not always want to compare against a reference group. + We might want to compare to: + The overall or grand mean + Group 1 vs groups 2, 3, 4 combined + and on we go! + Let's consider the example of the grand mean... --- # Effects coding (sum to zero coding) .pull-left[ <!-- --> ] .pull-right[ + The coloured points represent the individual SWB values for the 30 individuals in each group. + The solid coloured lines are the group means. + The dashed grey line is the grand mean (the mean of all the observations) ] --- # Model with the grand mean + If we write our model including the grand mean, we get: `$$y_{ij} = \mu + \beta_j + \epsilon_{ij}$$` + where + `\(y_{ij}\)` is the score for a given individual ( `\(i\)` ) in a given group ( `\(j\)` ) + `\(\mu\)` is the grand mean + `\(\beta_j\)` is a group specific effect + `\(\epsilon_{ij}\)` is the individual deviation from the group mean --- # Model with the grand mean + This means that each group mean is: `$$\mu_{TreatmentA} = \mu + \beta_{TreatmentA}$$` `$$\mu_{TreatmentB} = \mu + \beta_{TreatmentB}$$` `$$\mu_{TreatmentC} = \mu + \beta_{TreatmentC}$$` + And as with dummy coding, this means we have 4 things to estimate, but only 3 group means. --- # Sum to zero constraint + In sum to zero coding, we fix this with the following constraint: `$$\sum_{j=1}^m \beta_j = 0$$` + Or alternatively written for the 3 group case: `$$\beta_1 + \beta_2 + \beta_3 = 0$$` --- # Sum to zero constraint + This constraints leads to the following interpretations: + `\(\beta_0\)` is the grand mean (mean of all observations) or `\(\mu\)` + `\(\beta_j\)` are the differences between the coded group and the grand mean: `$$\beta_j = \mu_j - \mu$$` --- # Why the grand mean? `$$\beta_1 + \beta_2 + \beta_3 = 0$$` + Substitute `\(\beta_0\)` : `$$(\mu_1 - \beta_0) + (\mu_2 - \beta_0) + (\mu_3 - \beta_0) = 0$$` `$$\mu_1 + \mu_2 + \mu_3 = 3\beta_0$$` $$\beta_0 = \frac{\mu_1 + \mu_2 + \mu_3}{3} $$ `$$\beta_0 = \mu$$` --- # Sum to zero constraint + Finally, we can get back to our group means from the coefficients as follows: `$$\mu_1 = \beta_0 + \beta_1$$` `$$\mu_2 = \beta_0 + \beta_2$$` `$$\mu_3 = \beta_0 - (\beta_1 + \beta_2)$$` --- class: center, middle # Pause for breath... --- # OK, but how do we apply the constraint? + Answer, in the same way as we did with dummy coding. + We can create a set of sum to zero (sometimes called effect, or deviation) variables + Or the equivalent contrast matrix. + For effect code variables we: + Create `\(k-1\)` variables + For observations in the focal group, assign 1 + For observations in the last group, assign -1 + For all other groups assign 0 --- # Comparing coding matrices .pull-left[ <table class="table" style="width: auto !important; margin-left: auto; margin-right: auto;"> <thead> <tr> <th style="text-align:left;"> Level </th> <th style="text-align:right;"> D1 </th> <th style="text-align:right;"> D2 </th> </tr> </thead> <tbody> <tr> <td style="text-align:left;"> Treatment A </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> </tr> <tr> <td style="text-align:left;"> Treatment B </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 0 </td> </tr> <tr> <td style="text-align:left;"> Treatment C </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> </tr> </tbody> </table> `$$y_{ij} = \beta_0 + \beta_1D_1 + \beta_2D_2 + \epsilon_{ij}$$` ] .pull-right[ <table class="table" style="width: auto !important; margin-left: auto; margin-right: auto;"> <thead> <tr> <th style="text-align:left;"> Level </th> <th style="text-align:right;"> E1 </th> <th style="text-align:right;"> E2 </th> </tr> </thead> <tbody> <tr> <td style="text-align:left;"> Treatment A </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 0 </td> </tr> <tr> <td style="text-align:left;"> Treatment B </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> </tr> <tr> <td style="text-align:left;"> Treatment C </td> <td style="text-align:right;"> -1 </td> <td style="text-align:right;"> -1 </td> </tr> </tbody> </table> `$$y_{ij} = \beta_0 + \beta_1E_1 + \beta_2E_2 + \epsilon_{ij}$$` ] --- # Sum to zero/effects for group means .pull-left[ <table class="table" style="width: auto !important; margin-left: auto; margin-right: auto;"> <thead> <tr> <th style="text-align:left;"> Level </th> <th style="text-align:right;"> E1 </th> <th style="text-align:right;"> E2 </th> </tr> </thead> <tbody> <tr> <td style="text-align:left;"> Treatment A </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 0 </td> </tr> <tr> <td style="text-align:left;"> Treatment B </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> </tr> <tr> <td style="text-align:left;"> Treatment C </td> <td style="text-align:right;"> -1 </td> <td style="text-align:right;"> -1 </td> </tr> </tbody> </table> `$$\mu_1 = \beta_0 + \beta_1$$` `$$\mu_2 = \beta_0 + \beta_2$$` `$$\mu_3 = \beta_0 - (\beta_1 + \beta_2)$$` ] .pull-right[ `$$\mu_1 = \beta_0 + 1*\beta_1 + 0*\beta_2 = \beta_0 + \beta_1$$` `$$\mu_2 = \beta_0 + 0*\beta_1 + 1*\beta_2 = \beta_0 + \beta_2$$` `$$\mu_3 = \beta_0 -1*\beta_1 -1*\beta_2 = \beta_0 - \beta_1 -\beta_2$$` + Now we will look practically at the implementation and differences ] --- # Group Means ```r hosp_tbl %>% select(1:2) %>% group_by(Treatment) %>% summarise( mean = round(mean(SWB),3), sd = round(sd(SWB),1), N = n() ) ``` ``` ## # A tibble: 3 × 4 ## Treatment mean sd N ## <fct> <dbl> <dbl> <int> ## 1 TreatA 9.33 2.9 60 ## 2 TreatB 11.3 2.5 60 ## 3 TreatC 9.04 2 60 ``` --- # Effects (sum to zero) model + We need to change the contrast scheme from default. ```r contrasts(hosp_tbl$Treatment) <- contr.sum contrasts(hosp_tbl$Treatment) ``` ``` ## [,1] [,2] ## TreatA 1 0 ## TreatB 0 1 ## TreatC -1 -1 ``` --- # Effects (sum to zero) model ```r summary(lm(SWB ~ Treatment, data = hosp_tbl)) ``` ``` ## ## Call: ## lm(formula = SWB ~ Treatment, data = hosp_tbl) ## ## Residuals: ## Min 1Q Median 3Q Max ## -5.373 -1.987 -0.300 1.838 7.173 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 9.8806 0.1872 52.791 < 2e-16 *** ## Treatment1 -0.5539 0.2647 -2.093 0.0378 * ## Treatment2 1.3928 0.2647 5.262 4.09e-07 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 2.511 on 177 degrees of freedom ## Multiple R-squared: 0.1369, Adjusted R-squared: 0.1271 ## F-statistic: 14.04 on 2 and 177 DF, p-value: 2.196e-06 ``` --- # Effects (sum to zero) model .pull-left[ ``` ## (Intercept) Treatment1 Treatment2 ## 9.881 -0.554 1.393 ``` + Coefficients from group means `$$\beta_0 = \frac{\mu_1 + \mu_2 + \mu_3}{3}$$` `$$\beta_1 = \mu_1 - \mu$$` `$$\beta_2 = \mu_2 - \mu$$` ] .pull-right[ <table class="table" style="width: auto !important; margin-left: auto; margin-right: auto;"> <thead> <tr> <th style="text-align:left;"> Treatment </th> <th style="text-align:right;"> mean </th> <th style="text-align:right;"> Gmean </th> <th style="text-align:right;"> Coefficients </th> </tr> </thead> <tbody> <tr> <td style="text-align:left;"> TreatA </td> <td style="text-align:right;"> 9.327 </td> <td style="text-align:right;"> 9.881 </td> <td style="text-align:right;"> -0.554 </td> </tr> <tr> <td style="text-align:left;"> TreatB </td> <td style="text-align:right;"> 11.273 </td> <td style="text-align:right;"> 9.881 </td> <td style="text-align:right;"> 1.392 </td> </tr> <tr> <td style="text-align:left;"> TreatC </td> <td style="text-align:right;"> 9.042 </td> <td style="text-align:right;"> 9.881 </td> <td style="text-align:right;"> -0.839 </td> </tr> </tbody> </table> ] --- # Effects (sum to zero) model .pull-left[ ``` ## (Intercept) Treatment1 Treatment2 ## 9.881 -0.554 1.393 ``` + Group means from coefficients: `$$\mu_1 = \beta_0 + \beta_1$$` `$$\mu_2 = \beta_0 + \beta_2$$` `$$\mu_3 = \beta_0 - (\beta_1 + \beta_2)$$` ] .pull-right[ <table class="table" style="width: auto !important; margin-left: auto; margin-right: auto;"> <thead> <tr> <th style="text-align:left;"> Treatment </th> <th style="text-align:right;"> mean </th> <th style="text-align:right;"> Gmean </th> </tr> </thead> <tbody> <tr> <td style="text-align:left;"> TreatA </td> <td style="text-align:right;"> 9.327 </td> <td style="text-align:right;"> 9.881 </td> </tr> <tr> <td style="text-align:left;"> TreatB </td> <td style="text-align:right;"> 11.273 </td> <td style="text-align:right;"> 9.881 </td> </tr> <tr> <td style="text-align:left;"> TreatC </td> <td style="text-align:right;"> 9.042 </td> <td style="text-align:right;"> 9.881 </td> </tr> </tbody> </table> ```r 9.881 + -.554 ``` ``` ## [1] 9.327 ``` ```r 9.881 + 1.393 ``` ``` ## [1] 11.274 ``` ```r 9.881 - (-.554 + 1.393) ``` ``` ## [1] 9.042 ``` ] --- # The wide world of contrasts + We have now seen two examples of coding schemes (dummy and effect). + There are **lots** of different coding schemes we can use for categorical variables to make different comparisons. + If you are interested, see the excellent resource on [UCLA website](https://stats.idre.ucla.edu/r/library/r-library-contrast-coding-systems-for-categorical-variables/) + But always remember... **The data is the same, the tested contrast differs** --- class: center, middle # Questions --- # The data is the same, the tested contrasts differ + Run both models: ```r contrasts(hosp_tbl$Treatment) <- contr.treatment m_dummy <- lm(SWB ~ Treatment, data = hosp_tbl) # Change the contrasts and run again contrasts(hosp_tbl$Treatment) <- contr.sum m_zero <- lm(SWB ~ Treatment, data = hosp_tbl) ``` + Create a small data set: ```r treat <- tibble(Treatment = c("TreatA", "TreatB", "TreatC")) ``` --- # The data is the same, the tested contrasts differ + Add the predicted values from our models ```r treat %>% mutate( pred_dummy = predict(m_dummy, newdata = .), pred_zero = predict(m_zero, newdata = .) ) ``` ``` ## # A tibble: 3 × 3 ## Treatment pred_dummy pred_zero ## <chr> <dbl> <dbl> ## 1 TreatA 9.33 9.33 ## 2 TreatB 11.3 11.3 ## 3 TreatC 9.04 9.04 ``` + No matter what coding or contrasts we use, we are still modelling the group means! --- # Summary of today + We have considered different ways in which we can code categorical predictors. + Take home: + Use of coding matrices allows us to compare groups (or levels) in lots of ways. + Our `\(\beta\)`'s will represent differences in group means. + The scheme we use determines which group or combination of groups we are comparing. + **In all cases the underlying data is unchanged.** + This makes coding schemes a very flexible tool for testing hypotheses. --- class: center, middle # Thanks for listening! Any questions?