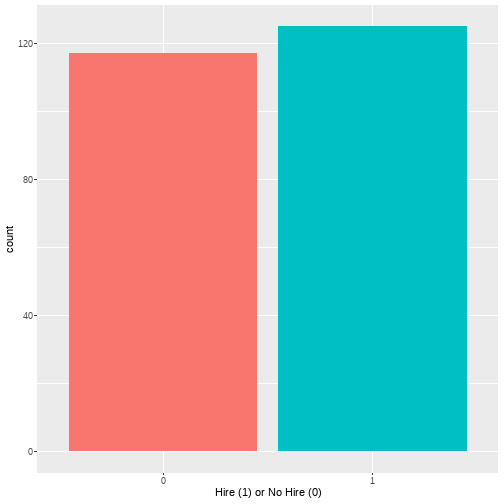

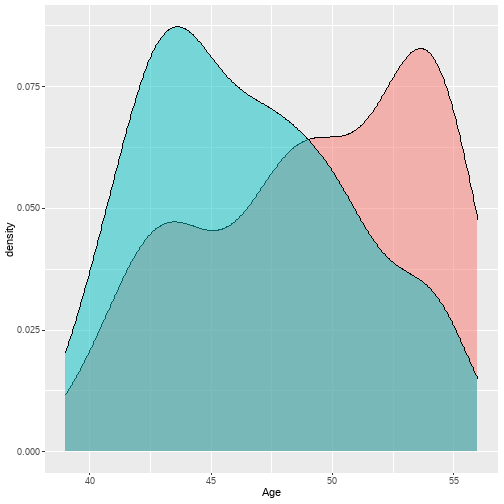

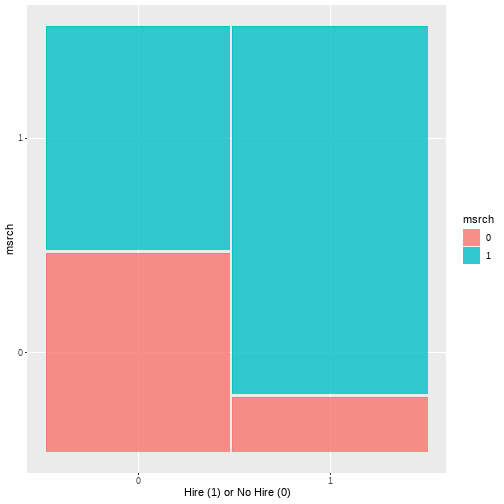

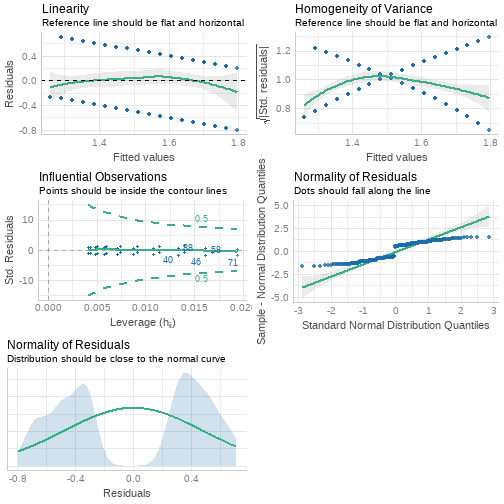

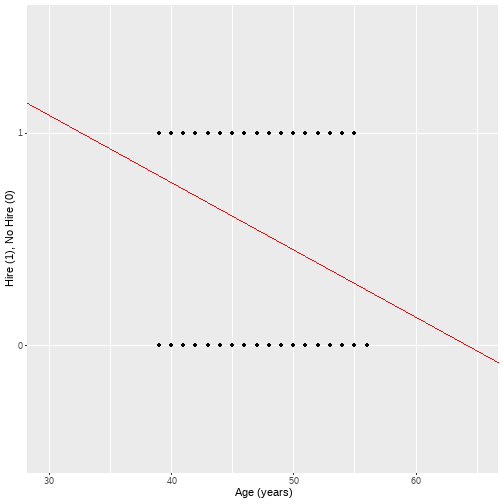

class: center, middle, inverse, title-slide # <b>Binary Logistic Model </b> ## Data Analysis for Psychology in R 2<br><br> ### dapR2 Team ### Department of Psychology<br>The University of Edinburgh ### AY 2022-2023 --- # Weeks Learning Objectives 1. Identify and provide examples of binary psychological outcomes. 2. Understand why a standard LM is not appropriate for binary data. 3. Fit and interpret a logistic model --- # Topics for today + Logistic regression + Why do we need logistic regression? + The logistic regression model + Overall model evaluation + Logistic regression in R --- # Binary outcomes + Thus far we have discussed: + linear regression with a continuous DV + linear regression with categorical (including binary) predictors + What if we have a binary outcome variable? + E.g.,: + Healthy vs diseased + Died vs survived + Hired vs not hired + Correct vs incorrect + When we have binary outcome variable, linear regression is no longer appropriate + Let's see what happens when we fit a linear regression model with a binary outcome variable? --- # Applying linear regression to binary outcomes + We can code our outcome in terms of whether or not an event happened + `\(Y=1\)` for a job offer (event occurred) + `\(Y=0\)` for no job offer (event did not occur) + If we then fit a linear regression model, our model predicts the probability of the event occurring `\(P(Y=1)\)` or `\(P(y_i)\)` --- # Some example data + Imagine we're interested in predicting hiring decisions. + We collect data on n=242 job-seekers + Age + Effort put into job application + Our variables: + DV: `work` (0 = did not get job; 1 = did get job) + IV1: `age` (in years) + IV2: `msrch` (effort into job application, 0=low effort, 1 = high effort) --- # Visualize Our Data .pull-left[ + Here we can see: 1. We have slightly more people being hired than not (light blue bar in left-hand plot) 2. Those who were hired (blue) are younger than those who were not (red) (density plot, top right) 3. There is a greater number of people who work hard (proportion of blue in bottom right plot) among those who were hired (right hand bar of this plot) ] .pull-right[ <!-- --> ] --- <!-- --> --- <!-- --> --- # Proportion hired ```r hire %>% group_by(work) %>% summarise( n = n()) %>% mutate( Prop = round(n/sum(n),2) ) ``` ``` ## # A tibble: 2 x 3 ## work n Prop ## <fct> <int> <dbl> ## 1 0 117 0.48 ## 2 1 125 0.52 ``` --- # Linear probability model + So let's just use `lm()` to predict our outcome (Hire vs no hire) + **Note**: R will actually try and stop us doing this if `work` is a factor + So we have to pretend to R that it is numeric. ```r hire <- hire %>% mutate( * work = as.numeric(work) ) m1 <- lm(work ~ age, data = hire) ``` --- # Linear probability model + Nothing looks too amiss here ``` ## ## Call: ## lm(formula = work ~ age, data = hire) ## ## Residuals: ## Min 1Q Median 3Q Max ## -0.7971 -0.4497 0.2345 0.4240 0.7082 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 3.028641 0.320801 9.441 < 2e-16 *** ## age -0.031578 0.006668 -4.736 3.74e-06 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 0.4799 on 240 degrees of freedom ## Multiple R-squared: 0.08545, Adjusted R-squared: 0.08164 ## F-statistic: 22.42 on 1 and 240 DF, p-value: 3.739e-06 ``` + But let's look at our assumptions checks? --- # Violated assumptions .pull-left[ + The plots here were produced using the `check_model()` function in the `performance()` package. + These are not ideally formatted plots for reports, but it is a nice quick way to look at all assumption plots. + Can also be used for different diagnostics like `vif` etc. ] .pull-right[ <!-- --> ] --- # Impossible probabilities .pull-left[ + And there is a further issue? + By definition, probabilities must be between 0 and 1. + But our model predicts some `\(P(Y=1)\)` values >1 within the plausible range of age values ] .pull-right[ <!-- --> ] --- # The problem with linear regression + In general, when we apply linear regression to binary outcomes: + The distribution of the residuals is bimodal (not normal) + The variance of the residuals is not constant + The relation between `\(x\)` and `\(y\)` is not linear + Probabilities are not constrained to be between 0 and 1 + The logistic regression model solves these issues with linear regression --- class: center, middle #Let's get some details on the logistic model --- # The logistic regression model + In logistic regression, we predict the probability that `\(Y=1\)`, `\(P(y_i)\)`, from our X's, using: `$$P(y_i) = \frac{1}{1+e^{-(\beta_0 + \beta_1x_1)}}$$` + `\(e\)` = exponential + `\(\beta_0 + \beta_1x_1\)` is a linear combination with: + a constant `\(\beta_0\)` (intercept), and + `\(\beta_1\)` capturing the effect of `\(x_1\)` on the outcome `\(y\)` --- # The logistic regression model <img src="logistic_model.png" width="70%" style="display: block; margin: auto;" /> --- # Probability, odds and log-odds + An alternative way to think about this is in terms of probability, odds and log-odds. + Our presentation above includes the exponential of the coefficients so that (a) predictions are bounded within 0 and 1, and (b) we can have a linear representation. + The alternative way to view it is to change what we are predicting... --- # Probability, odds and log-odds .pull-left[ + `\(Probability = P(Y_i)\)` + `\(odds = \frac{P(Y=1)}{1-P(Y=1)}\)` + `\(logodds = ln \left (\frac{P(Y=1)}{1-P(Y=1)} \right)\)` ] .pull-right[ <img src="MC_logistic.png" width="80%" style="display: block; margin: auto;" /> ] --- # Logistic models with different slopes + Given that we can see our log-odds provide a linear relation, we can think about predicting these: `$$ln \left (\frac{P(Y=1)}{1-P(Y=1)} \right) = \beta_0 + \beta_1x_1$$` + and we can easily extend to models with multiple predictors: `$$P(y_i) = \frac{1}{1+e^{-(\beta_0 + \beta_1x_1 + \beta_2x_2 ... + \beta_kx_k)}}$$` + Or `$$ln \left (\frac{P(Y=1)}{1-P(Y=1)} \right) = \beta_0 + \beta_1x_1 + \beta_2x_2 ... + \beta_kx_k$$` --- # Estimating logistic regression coefficients + Linear regression models can be estimated using least squares estimation + Logistic regression models are estimated using maximum likelihood estimation (MLE) + MLE finds the logistic regression coefficients that *maximise the likelihood of the observed data having occurred* + While least squares estimation minimises the SSE to find the coefficients for the line of best, MLE minimises the log-likelihood + Larger log-likelihood values indicate poorer fitting models --- # Overall model evaluation + To evaluate overall model fit in logistic regression we: + Compare our model to a baseline model with no predictors (null model) + Assess the improvement in fit + *Note we have already seen this idea when discussing the `\(F\)`-test and incremental `\(F\)`-test* + The baseline model is where the predicted values for the DV are based on the most frequent value of the DV (0 or 1) + Our best guess of the DV value in the absence of informative predictors. + Analogous to using the mean DV value as the baseline in linear regression. --- # Overall model evaluation + We compare our model with the baseline model using **deviance**: `$$deviance = -2*loglikelihood$$` + Deviance often denoted **-2LL** --- # Overall model evaluation + We calculate the -2LL differences between our model and the baseline model. + We assess the statistical significance of the -2LL difference to see if our model significantly improves on the baseline model + We compare our -2LL difference to a `\(\chi^2\)` distribution with df = k + k= number of predictors in model + Significant `\(p\)`-value indicates that our model improves on the baseline model. + Assessing the -2LL differences in this way is called a likelihood ratio test or chi-square difference test --- class: center, middle #Logistic regression in R --- # The `glm()` function + In R, we conduct logistic regression using the `glm()` function + `glm` stands for **g**eneralised **l**inear **m**odel + More on this next week + Very similar in structure to the `lm()` function --- # Run our model: a little more code ```r *m2 <- glm(work ~ age + msrch, data = hire, family = "binomial") ``` + `glm()`; R function for running generalised linear models. + Provide a formula in the same style as lm() + Provide the name of the dataset --- # Run our model: a little more code ```r m2 <- glm(work ~ age + msrch, data = hire, * family = "binomial") ``` + The new bit. + We need to state what family of probability distributions we want for our DV. + This relates to the type of variable our DV is. + We will take a little more about this next lecture. + For now, for a binary variable, we want `family = binomial` --- # Quick aside: Why binomial? + Binomial distribution is a discrete probability distribution. (you do not need to remember the probability mass function below - don't worry.) `$$f(k,n,p) = Pr(X = k) = \binom{n}{k}p^{k}q^{n-k}$$` + `\(k\)` = number of success + `\(n\)` = total trials, + `\(p\)` = probability success + `\(q\)` = `\(1-p\)` or probability of failure + `\(\binom{n}{k}\)` = `\(n\)` choose `\(k\)`, or the number of ways to select `\(k\)` unordered items from a set of `\(n\)` items. + Practically, we can think of every instance of a `Hire` in out example as a success, and every `no hire` as a failure. --- # `glm()` output ```r m2 <- glm(work ~ age + msrch, data = hire, family = "binomial") summary(m2) ``` --- # `glm()` output ``` ## ## Call: ## glm(formula = work ~ age + msrch, family = "binomial", data = hire) ## ## Deviance Residuals: ## Min 1Q Median 3Q Max ## -1.8834 -1.0496 0.6436 0.9204 2.0589 ## ## Coefficients: ## Estimate Std. Error z value Pr(>|z|) ## (Intercept) 4.52505 1.56972 2.883 0.003943 ** ## age -0.11848 0.03214 -3.687 0.000227 *** ## msrch1 1.68335 0.33446 5.033 4.83e-07 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## (Dispersion parameter for binomial family taken to be 1) ## ## Null deviance: 335.22 on 241 degrees of freedom ## Residual deviance: 285.36 on 239 degrees of freedom ## AIC: 291.36 ## ## Number of Fisher Scoring iterations: 4 ``` --- # Overall Model Test ```r m2_null <- glm(work ~ 1, family = "binomial", data = hire) anova(m2_null, m2, test="Chisq") ``` ``` ## Analysis of Deviance Table ## ## Model 1: work ~ 1 ## Model 2: work ~ age + msrch ## Resid. Df Resid. Dev Df Deviance Pr(>Chi) ## 1 241 335.22 ## 2 239 285.36 2 49.858 1.491e-11 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` --- # Recall our data and model + Imagine we're interested in predicting hiring decisions. + We collect data on n=242 job-seekers + Age + Effort put into job application + Our variables: + DV: `work` (0 = did not get job; 1 = did get job) + IV1: `age` (in years) + IV2: `msrch` (effort into job application, 0=low effort, 1 = high effort) ```r m2 <- glm(work ~ age + msrch, data = hire, family = "binomial") summary(m2) ``` --- # Job-seeking example ``` ## ## Call: ## glm(formula = work ~ age + msrch, family = "binomial", data = hire) ## ## Deviance Residuals: ## Min 1Q Median 3Q Max ## -1.8834 -1.0496 0.6436 0.9204 2.0589 ## ## Coefficients: ## Estimate Std. Error z value Pr(>|z|) ## (Intercept) 4.52505 1.56972 2.883 0.003943 ** ## age -0.11848 0.03214 -3.687 0.000227 *** ## msrch1 1.68335 0.33446 5.033 4.83e-07 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## (Dispersion parameter for binomial family taken to be 1) ## ## Null deviance: 335.22 on 241 degrees of freedom ## Residual deviance: 285.36 on 239 degrees of freedom ## AIC: 291.36 ## ## Number of Fisher Scoring iterations: 4 ``` --- # Model equation: job-seeking example + Below we have the general form with two `\(x\)`'s `$$P(y_i) = \frac{1}{1+e^{-(b_0 + b_1x_1 + b_2x_2)}}$$` + And we can insert the values from the previous slide with our model results: `$$P(y_i) = \frac{1}{1+e^{-(4.525 -0.118age + 1.683msrch)}}$$` --- # Interpreting logistic model coefficients + In linear regression, the `\(b\)` coefficients for each IV are the unit increase in `\(Y\)` for every unit increase in `\(X\)` (holding other IVs constant) + In logistic regression, the `\(b\)` coefficients for each IV are the **change in log odds of `\(Y\)` for every unit increase in `\(x\)`** (holding other IVs constant) --- # What are log odds? `\(b\)` = **the change in log odds of `\(Y\)` for every unit increase in `\(X\)`** + The odds of an event occurring (e.g., a job offer; Y=1) is defined as the ratio of the probability of the event occurring to the probability of the event not occurring: `$$odds = \frac{P(Y=1)}{1-P(Y=1)}$$` + `\(P(Y=1)\)` is the same as `\(P(y_i)\)` calculated in the logistic regression model + Think of a coin toss. + Odds of tails occurring = 0.5 + Odds of not tails = 0.5 + Odds = 1 --- # What are log odds? `\(b\)` = **the change in log odds of `\(Y\)` for every unit increase in `\(X\)`** + Log odds are then the natural logarithm of the odds: `$$log odds = ln \left (\frac{P(Y=1)}{1-P(Y=1)} \right)$$` --- # Probabilities, odds and log-odds ```r tibble( Probs = seq(0.1, 0.9, 0.1) ) %>% mutate( Odds = round(Probs/(1-Probs),2), Logits = round(log(Odds),2) ) ``` ``` ## # A tibble: 9 x 3 ## Probs Odds Logits ## <dbl> <dbl> <dbl> ## 1 0.1 0.11 -2.21 ## 2 0.2 0.25 -1.39 ## 3 0.3 0.43 -0.84 ## 4 0.4 0.67 -0.4 ## 5 0.5 1 0 ## 6 0.6 1.5 0.41 ## 7 0.7 2.33 0.85 ## 8 0.8 4 1.39 ## 9 0.9 9 2.2 ``` --- # For our job-seekers example + For every additional year of age, there was a decrease in the log odds of a job offer of 0.118 + Those who showed high effort in their application had a 1.683 greater log odds of a job offer than those who showed low effort --- # Odds ratio + Log odds don't provide an easily interpretable way of understanding how the DV changes with the IV's + The `\(b\)` coefficients from logistic regression are thus often converted to odds ratios + Odds ratios are a bit easier to interpret + Odds ratios are obtained by exponentiating the `\(b\)` coefficients + In R, we exponentiate coefficients using the `exp()` function. --- # Exponentiating `\(b\)` coefficients ```r exp(coef(m2)) ``` ``` ## (Intercept) age msrch1 ## 92.3001400 0.8882662 5.3835809 ``` --- # Interpreting odds ratios + When the coefficients are converted to odds ratios, they represent the **change in odds with a unit increase in X** + Specifically the *ratio of odds* at X=x and X=x+1 + An odds ratio of 1 indicates no effect + An odds ratio < 1 indicates a negative effect + An odds ratio of >1 indicates a positive effect --- # Interpreting odds ratios ```r exp(coef(m2)) ``` ``` ## (Intercept) age msrch1 ## 92.3001400 0.8882662 5.3835809 ``` + For every year of `age`, the odds of being hired decrease by 0.88. + For those who put high effort into applications, the odds of being hired increase by a factor of 5.38. --- class: center, middle #Now let's look at the significance of predictors --- # Statistical significance of predictors + We can also evaluate the statistical significance of the predictors + To do this we can use a `\(z\)`-test: `$$z = \frac{b}{SE(b)}$$` + However , we should be aware that the `\(z\)`-test is a little prone to Type II errors + We can supplement it using model selection procedures (see later) + The z-test and associated `\(p\)`-value is provided as part of the summary output for `glm()` --- # The z-test ``` ## ## Call: ## glm(formula = work ~ age + msrch, family = "binomial", data = hire) ## ## Deviance Residuals: ## Min 1Q Median 3Q Max ## -1.8834 -1.0496 0.6436 0.9204 2.0589 ## ## Coefficients: ## Estimate Std. Error z value Pr(>|z|) ## (Intercept) 4.52505 1.56972 2.883 0.003943 ** ## age -0.11848 0.03214 -3.687 0.000227 *** ## msrch1 1.68335 0.33446 5.033 4.83e-07 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## (Dispersion parameter for binomial family taken to be 1) ## ## Null deviance: 335.22 on 241 degrees of freedom ## Residual deviance: 285.36 on 239 degrees of freedom ## AIC: 291.36 ## ## Number of Fisher Scoring iterations: 4 ``` --- # Confidence intervals + We can also compute confidence intervals for our coefficients and associated odds ratios + For odds ratios, a value of 1= no effect + The question is, therefore, whether the confidence interval includes 1 or not --- # 95% confidence intervals for our job-seekers example .pull-left[ + We can use the `confint()` function to compute confidence intervals + We can embed this in the `exp()` function to convert our coefficients to odds ratios. + Neither 95% CI includes 1, therefore, both predictors are significant at `\(p\)`<.05. ] .pull-right[ ```r exp(confint(m2)) ``` ``` ## Waiting for profiling to be done... ``` ``` ## 2.5 % 97.5 % ## (Intercept) 4.3974299 2107.9649011 ## age 0.8328194 0.9449901 ## msrch1 2.8472535 10.6300211 ``` ] --- # Model selection + Just as in linear regression, we can compare logistic models differing in their predictors to choose a best fitting model + Methods we can use: + Likelihood ratio test + AIC + BIC --- # Likelihood ratio test + We already encountered this when we compared our model to a baseline model with no predictors. + We can compare any set of **nested** models using the likelihood ratio test + Including models differing in one predictor + This tests the statistical significance of the effect of that predictor + Provides an alternative to the z-test --- # Likelihood ratio test in R ```r m_null <- glm(work ~ 1, data = hire, family = "binomial") m_age <- glm(work ~ age , data = hire, family = "binomial") m_full <- glm(work ~ age + msrch, data = hire, family = "binomial") anova(m_null, m_age, m_full, test = "Chisq") ``` ``` ## Analysis of Deviance Table ## ## Model 1: work ~ 1 ## Model 2: work ~ age ## Model 3: work ~ age + msrch ## Resid. Df Resid. Dev Df Deviance Pr(>Chi) ## 1 241 335.22 ## 2 240 313.98 1 21.242 4.047e-06 *** ## 3 239 285.36 1 28.616 8.826e-08 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` --- # AIC and BIC + We met AIC and BIC in the model selection section in linear regression + Can be used to compare either nested or non-nested models + Smaller (more negative) AIC and BIC indicate better fitting models + BIC, in the context of regression, penalises extra predictors more heavily + BIC differences >10 indicate that one model is better than another to a practically significant extent --- # AIC and BIC in R ```r AIC(m_null, m_age, m_full) ``` ``` ## df AIC ## m_null 1 337.2187 ## m_age 2 317.9762 ## m_full 3 291.3604 ``` ```r BIC(m_null, m_age, m_full) ``` ``` ## df BIC ## m_null 1 340.7077 ## m_age 2 324.9541 ## m_full 3 301.8273 ``` --- # Summary of logistic regression + Use logistic regression for binary data +Logistic regression coefficients are converted to odds ratios to make them more interpretable + Odds ratios tell us how the odds of the event change with a unit increase in X + 1 is no effect + Less than 1 is a negative effect + More than 1 is a positive effect + Statistical significance of predictors can be assessed via: + z-test + Confidence intervals + Likelihood ratio test + Model selection uses + Likelihood ratio test + AIC and BIC