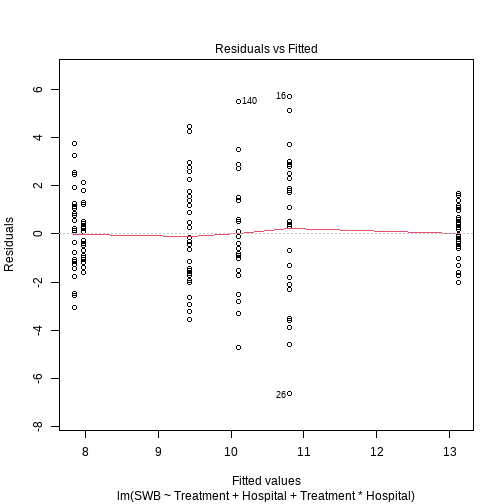

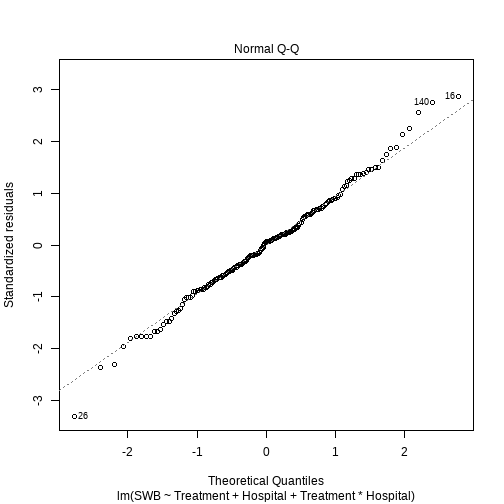

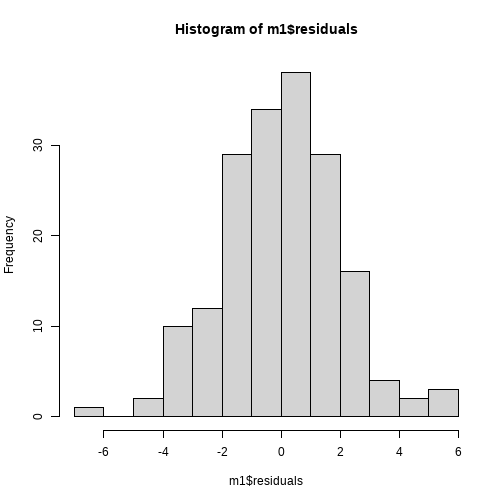

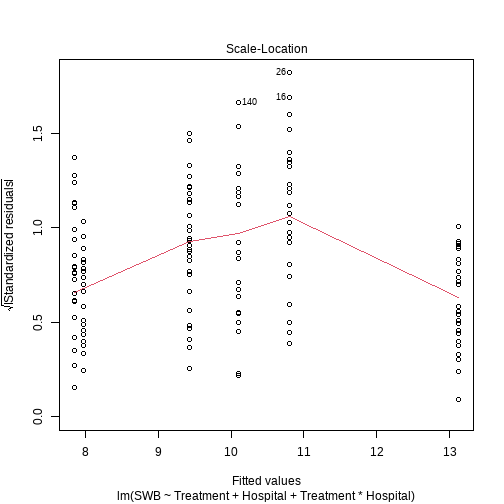

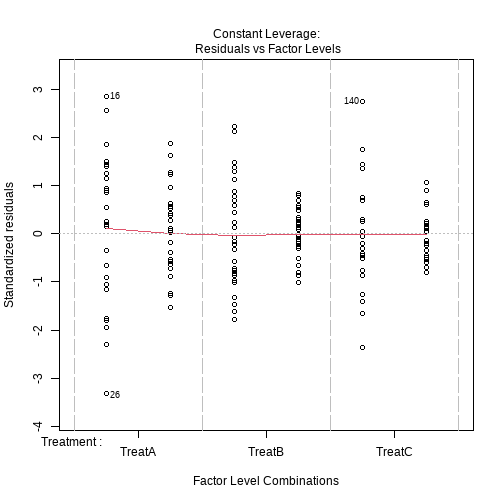

class: center, middle, inverse, title-slide # <b> Multiple Comparisons & Assumptions </b> ## Data Analysis for Psychology in R 2<br><br> ### dapR2 Team ### Department of Psychology<br>The University of Edinburgh --- # Week's Learning Objectives + Understand the issue of multiple comparisons + Understand the corrections available for multiple comparisons + Understand the specific application to pairwise comparisons in factorial designs + Recap assumptions --- # Topics for today + What is the issue with multiple comparisons? + How do we adjust for it? + Recapping assumptions. --- # Types of errors + Type I error = False Positive + Reject the null when the null is true. + `\(\alpha = P(\text{Type I Error})\)` + Type II error = False negative + Fail to reject the null when the null is false. + `\(\beta = P(\text{Type II Error})\)` --- # A single test + If we perform a single test, our Type I error rate is `\(\alpha\)`. + So if we set `\(\alpha = 0.05\)`, the probability of a false positive is 0.05 + However, what if we do multiple tests ( `\(m\)` ) each with the same `\(\alpha\)` level? + What is the probability of a false positive among `\(m\)` tests? --- # Multiple tests `$$P(\text{Type I error}) = \alpha$$` `$$P(\text{not making a Type I error}) = 1 - \alpha$$` `$$P(\text{Not making a Type I error in m tests}) = (1 - \alpha)^m$$` `$$P(\text{Making a Type I error in m tests}) = 1 - (1-\alpha)^m$$` --- # P(Making a Type I error in m tests) + Suppose `\(m=2\)` and `\(\alpha = 0.05\)` ```r 1 - ((1-0.05)^2) ``` ``` ## [1] 0.0975 ``` + Suppose `\(m=5\)` and `\(\alpha = 0.05\)` ```r 1 - ((1-0.05)^5) ``` ``` ## [1] 0.2262191 ``` + Suppose `\(m=10\)` and `\(\alpha = 0.05\)` ```r 1 - ((1-0.05)^10) ``` ``` ## [1] 0.4012631 ``` --- # Why does this matter? + The `\(P(\text{Making a Type I error in m tests}) = 1 - (1-\alpha)^m\)` is referred to as the family-wise error rate. + A "family" is a set of related tests. + When we analyse an experimental design, and we look at lots of specific comparisons, we can think of all these tests as a "family". + The larger the family, the more likely we are to find a false positive (see previous slide). --- # Corrections + There are various methods designed to control for the number of tests. + Here control means to keep the Type I Error rate at an intended `\(\alpha\)`. + Many options. Some of most common: + Bonferroni + Sidak + Tukey + Scheffe + Others you may see: + Holm's step-down + Hochberg's step-up --- # Example ```r hosp_tbl <- read_csv("hospital.csv", col_types = "dff") ``` + A researcher was interested in whether the subjective well-being of patients differed dependent on the post-operation treatment schedule they were given, and the hospital in which they were staying. + **Condition 1**: `Treatment` (Levels: TreatA, TreatB, TreatC). + **Condition 2**: `Hosp` (Levels: Hosp1, Hosp2). + Total sample n = 180 (30 patients in each of 6 groups). + Between person design. + **Outcome**: Subjective well-being (SWB). + Importantly, remember from last week, with this design, we have a lot of pairwise tests! --- # Our results ```r m1 <- lm(SWB ~ Treatment + Hospital + Treatment*Hospital, data = hosp_tbl) anova(m1) ``` ``` ## Analysis of Variance Table ## ## Response: SWB ## Df Sum Sq Mean Sq F value Pr(>F) ## Treatment 2 177.02 88.511 21.5597 4.315e-09 *** ## Hospital 1 9.57 9.568 2.3306 0.1287 ## Treatment:Hospital 2 392.18 196.088 47.7635 < 2.2e-16 *** ## Residuals 174 714.34 4.105 ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` --- class: center, middle # Time for a break --- class: center, middle # Welcome Back! --- # Bonferroni & Sidak + Both are considered "conservative" adjustments. + Each treats individual tests within the family as if they are independent. + Consider an `\(\alpha = 0.05\)` and `\(m=\text{number of tests}=15\)` + **Bonferroni**: `\(\alpha_{Bonferroni} = \frac{\alpha}{m}\)` ```r 0.05/15 ``` ``` ## [1] 0.003333333 ``` + **Sidak**: `\(\alpha_{Sidak} = 1 - (1- \alpha)^{\frac{1}{m}}\)` ```r 1-((1-0.05)^(1/15)) ``` ``` ## [1] 0.003413713 ``` --- # No adjustments (as last week) ```r m1_means <- emmeans(m1, ~Treatment*Hospital) pairs(m1_means, adjust="none") ``` ``` ## contrast estimate SE df t.ratio p.value ## TreatA Hosp1 - TreatB Hosp1 1.370 0.523 174 2.619 0.0096 ## TreatA Hosp1 - TreatC Hosp1 0.697 0.523 174 1.332 0.1847 ## TreatA Hosp1 - TreatA Hosp2 2.947 0.523 174 5.632 <.0001 ## TreatA Hosp1 - TreatB Hosp2 -2.317 0.523 174 -4.428 <.0001 ## TreatA Hosp1 - TreatC Hosp2 2.820 0.523 174 5.390 <.0001 ## TreatB Hosp1 - TreatC Hosp1 -0.673 0.523 174 -1.287 0.1998 ## TreatB Hosp1 - TreatA Hosp2 1.577 0.523 174 3.014 0.0030 ## TreatB Hosp1 - TreatB Hosp2 -3.687 0.523 174 -7.047 <.0001 ## TreatB Hosp1 - TreatC Hosp2 1.450 0.523 174 2.772 0.0062 ## TreatC Hosp1 - TreatA Hosp2 2.250 0.523 174 4.301 <.0001 ## TreatC Hosp1 - TreatB Hosp2 -3.013 0.523 174 -5.760 <.0001 ## TreatC Hosp1 - TreatC Hosp2 2.123 0.523 174 4.059 0.0001 ## TreatA Hosp2 - TreatB Hosp2 -5.263 0.523 174 -10.061 <.0001 ## TreatA Hosp2 - TreatC Hosp2 -0.127 0.523 174 -0.242 0.8090 ## TreatB Hosp2 - TreatC Hosp2 5.137 0.523 174 9.819 <.0001 ``` --- # Bonferroni in action: `emmeans` ```r pairs(m1_means, adjust="bonferroni") ``` ``` ## contrast estimate SE df t.ratio p.value ## TreatA Hosp1 - TreatB Hosp1 1.370 0.523 174 2.619 0.1441 ## TreatA Hosp1 - TreatC Hosp1 0.697 0.523 174 1.332 1.0000 ## TreatA Hosp1 - TreatA Hosp2 2.947 0.523 174 5.632 <.0001 ## TreatA Hosp1 - TreatB Hosp2 -2.317 0.523 174 -4.428 0.0003 ## TreatA Hosp1 - TreatC Hosp2 2.820 0.523 174 5.390 <.0001 ## TreatB Hosp1 - TreatC Hosp1 -0.673 0.523 174 -1.287 1.0000 ## TreatB Hosp1 - TreatA Hosp2 1.577 0.523 174 3.014 0.0445 ## TreatB Hosp1 - TreatB Hosp2 -3.687 0.523 174 -7.047 <.0001 ## TreatB Hosp1 - TreatC Hosp2 1.450 0.523 174 2.772 0.0928 ## TreatC Hosp1 - TreatA Hosp2 2.250 0.523 174 4.301 0.0004 ## TreatC Hosp1 - TreatB Hosp2 -3.013 0.523 174 -5.760 <.0001 ## TreatC Hosp1 - TreatC Hosp2 2.123 0.523 174 4.059 0.0011 ## TreatA Hosp2 - TreatB Hosp2 -5.263 0.523 174 -10.061 <.0001 ## TreatA Hosp2 - TreatC Hosp2 -0.127 0.523 174 -0.242 1.0000 ## TreatB Hosp2 - TreatC Hosp2 5.137 0.523 174 9.819 <.0001 ## ## P value adjustment: bonferroni method for 15 tests ``` --- # Sidak with `emmeans` ```r pairs(m1_means, adjust = "sidak") ``` ``` ## contrast estimate SE df t.ratio p.value ## TreatA Hosp1 - TreatB Hosp1 1.370 0.523 174 2.619 0.1348 ## TreatA Hosp1 - TreatC Hosp1 0.697 0.523 174 1.332 0.9533 ## TreatA Hosp1 - TreatA Hosp2 2.947 0.523 174 5.632 <.0001 ## TreatA Hosp1 - TreatB Hosp2 -2.317 0.523 174 -4.428 0.0003 ## TreatA Hosp1 - TreatC Hosp2 2.820 0.523 174 5.390 <.0001 ## TreatB Hosp1 - TreatC Hosp1 -0.673 0.523 174 -1.287 0.9647 ## TreatB Hosp1 - TreatA Hosp2 1.577 0.523 174 3.014 0.0436 ## TreatB Hosp1 - TreatB Hosp2 -3.687 0.523 174 -7.047 <.0001 ## TreatB Hosp1 - TreatC Hosp2 1.450 0.523 174 2.772 0.0889 ## TreatC Hosp1 - TreatA Hosp2 2.250 0.523 174 4.301 0.0004 ## TreatC Hosp1 - TreatB Hosp2 -3.013 0.523 174 -5.760 <.0001 ## TreatC Hosp1 - TreatC Hosp2 2.123 0.523 174 4.059 0.0011 ## TreatA Hosp2 - TreatB Hosp2 -5.263 0.523 174 -10.061 <.0001 ## TreatA Hosp2 - TreatC Hosp2 -0.127 0.523 174 -0.242 1.0000 ## TreatB Hosp2 - TreatC Hosp2 5.137 0.523 174 9.819 <.0001 ## ## P value adjustment: sidak method for 15 tests ``` --- # What about if there were less tests? ```r pairs(m1_means, simple="Treatment", adjust="bonferroni") ``` ``` ## Hospital = Hosp1: ## contrast estimate SE df t.ratio p.value ## TreatA - TreatB 1.370 0.523 174 2.619 0.0288 ## TreatA - TreatC 0.697 0.523 174 1.332 0.5541 ## TreatB - TreatC -0.673 0.523 174 -1.287 0.5993 ## ## Hospital = Hosp2: ## contrast estimate SE df t.ratio p.value ## TreatA - TreatB -5.263 0.523 174 -10.061 <.0001 ## TreatA - TreatC -0.127 0.523 174 -0.242 1.0000 ## TreatB - TreatC 5.137 0.523 174 9.819 <.0001 ## ## P value adjustment: bonferroni method for 3 tests ``` --- # Tukey & Scheffe + **Scheffe procedure** involves an adjustment to the critical value of `\(F\)`. + The adjustment relates to the number of comparisons being made. + And makes the critical value of `\(F\)` larger for a fixed `\(\alpha\)`. + The more tests, the larger `\(F\)`. + The square-root of the adjusted F provides and adjusted `\(t\)`. -- + **Tukey's HSD** (Honest significant Differences) + Compares all pairwise group means. + Each difference is divided by the `\(SE\)` of the sum of means. + This produces a `\(q\)` statistic for each comparison. + And is compared against a studentized range distribution. --- # With `emmeans` ```r pairs(m1_means, adjust = "tukey") ``` ``` ## contrast estimate SE df t.ratio p.value ## TreatA Hosp1 - TreatB Hosp1 1.370 0.523 174 2.619 0.0982 ## TreatA Hosp1 - TreatC Hosp1 0.697 0.523 174 1.332 0.7670 ## TreatA Hosp1 - TreatA Hosp2 2.947 0.523 174 5.632 <.0001 ## TreatA Hosp1 - TreatB Hosp2 -2.317 0.523 174 -4.428 0.0002 ## TreatA Hosp1 - TreatC Hosp2 2.820 0.523 174 5.390 <.0001 ## TreatB Hosp1 - TreatC Hosp1 -0.673 0.523 174 -1.287 0.7918 ## TreatB Hosp1 - TreatA Hosp2 1.577 0.523 174 3.014 0.0346 ## TreatB Hosp1 - TreatB Hosp2 -3.687 0.523 174 -7.047 <.0001 ## TreatB Hosp1 - TreatC Hosp2 1.450 0.523 174 2.772 0.0670 ## TreatC Hosp1 - TreatA Hosp2 2.250 0.523 174 4.301 0.0004 ## TreatC Hosp1 - TreatB Hosp2 -3.013 0.523 174 -5.760 <.0001 ## TreatC Hosp1 - TreatC Hosp2 2.123 0.523 174 4.059 0.0010 ## TreatA Hosp2 - TreatB Hosp2 -5.263 0.523 174 -10.061 <.0001 ## TreatA Hosp2 - TreatC Hosp2 -0.127 0.523 174 -0.242 0.9999 ## TreatB Hosp2 - TreatC Hosp2 5.137 0.523 174 9.819 <.0001 ## ## P value adjustment: tukey method for comparing a family of 6 estimates ``` --- # With `emmeans` ```r pairs(m1_means, adjust = "scheffe") ``` ``` ## contrast estimate SE df t.ratio p.value ## TreatA Hosp1 - TreatB Hosp1 1.370 0.523 174 2.619 0.2372 ## TreatA Hosp1 - TreatC Hosp1 0.697 0.523 174 1.332 0.8787 ## TreatA Hosp1 - TreatA Hosp2 2.947 0.523 174 5.632 <.0001 ## TreatA Hosp1 - TreatB Hosp2 -2.317 0.523 174 -4.428 0.0021 ## TreatA Hosp1 - TreatC Hosp2 2.820 0.523 174 5.390 0.0001 ## TreatB Hosp1 - TreatC Hosp1 -0.673 0.523 174 -1.287 0.8935 ## TreatB Hosp1 - TreatA Hosp2 1.577 0.523 174 3.014 0.1119 ## TreatB Hosp1 - TreatB Hosp2 -3.687 0.523 174 -7.047 <.0001 ## TreatB Hosp1 - TreatC Hosp2 1.450 0.523 174 2.772 0.1809 ## TreatC Hosp1 - TreatA Hosp2 2.250 0.523 174 4.301 0.0033 ## TreatC Hosp1 - TreatB Hosp2 -3.013 0.523 174 -5.760 <.0001 ## TreatC Hosp1 - TreatC Hosp2 2.123 0.523 174 4.059 0.0072 ## TreatA Hosp2 - TreatB Hosp2 -5.263 0.523 174 -10.061 <.0001 ## TreatA Hosp2 - TreatC Hosp2 -0.127 0.523 174 -0.242 1.0000 ## TreatB Hosp2 - TreatC Hosp2 5.137 0.523 174 9.819 <.0001 ## ## P value adjustment: scheffe method with rank 5 ``` --- class: center, middle # Time for a break --- class: center, middle # Welcome Back! --- # Assumptions and Checks + You will be pleased to hear, that essentially nothing changes. + Key difference is we do not worry about assessing linearity. + Without a continuous predictor, we do not really have slopes + Instead we look to see if the within group errors have a zero mean --- # Within group errors .pull-left[ ```r plot(m1, which = 1) ``` ] .pull-right[ <!-- --> ] --- # Normality of residuals .pull-left[ ```r plot(m1, which = 2) ``` ] .pull-right[ <!-- --> ] --- # Normality of residuals .pull-left[ ```r hist(m1$residuals) ``` ] .pull-right[ <!-- --> ] --- # Equal variances .pull-left[ ```r plot(m1, which = 3) ``` ] .pull-right[ <!-- --> ] --- # Equal variances .pull-left[ ```r plot(m1, which = 5) ``` ] .pull-right[ <!-- --> ] --- # Summary + We have discussed the issue of multiple comparisons + We have seen how to implement corrections using `emmeans` + We have recapped assumptions for linear models. --- class: center, middle # Thanks for listening!