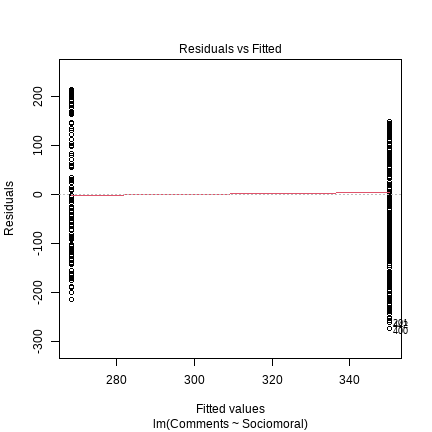

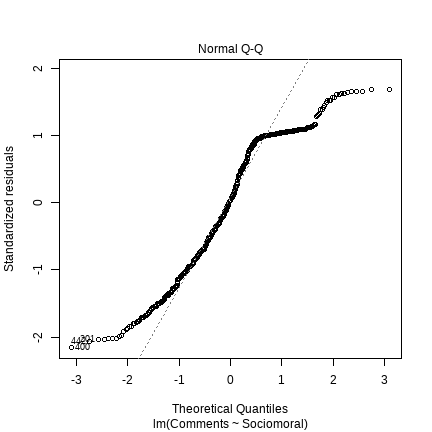

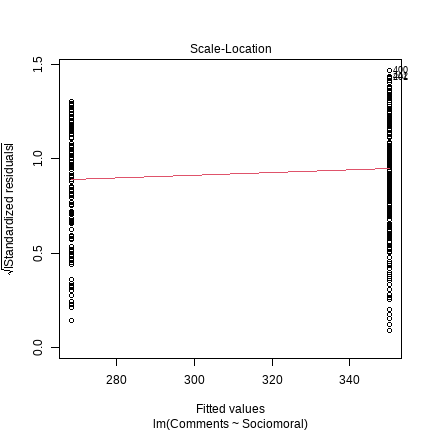

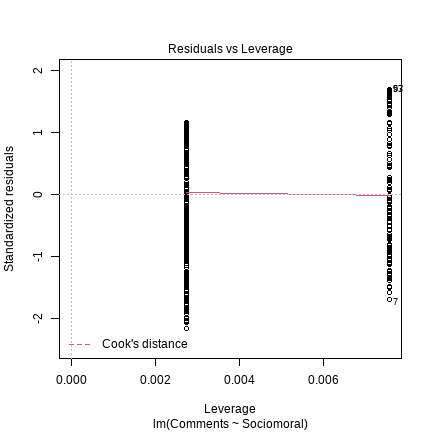

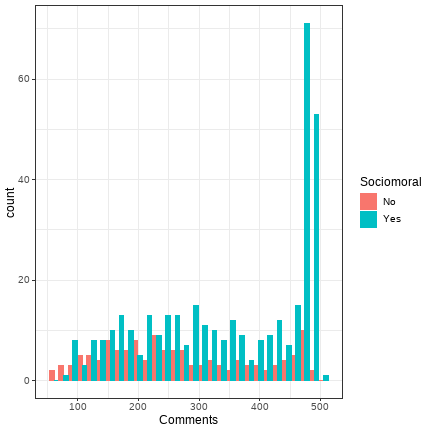

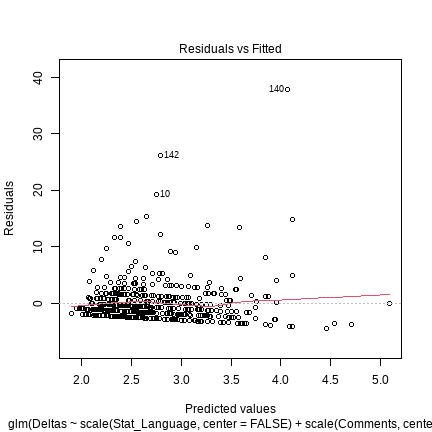

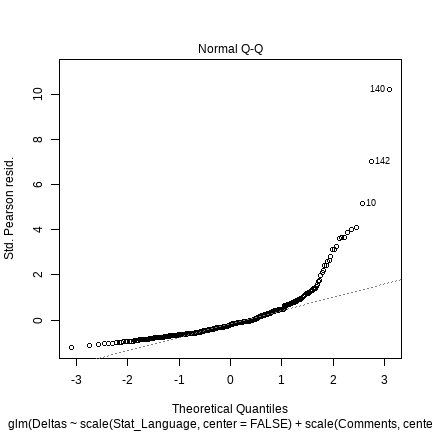

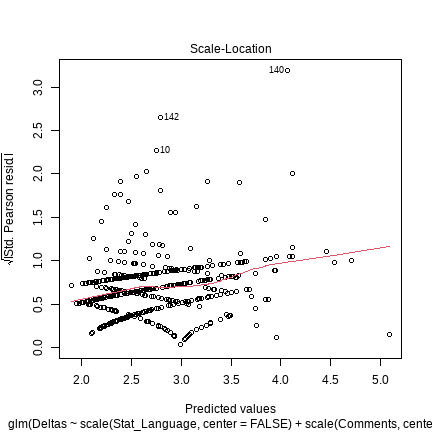

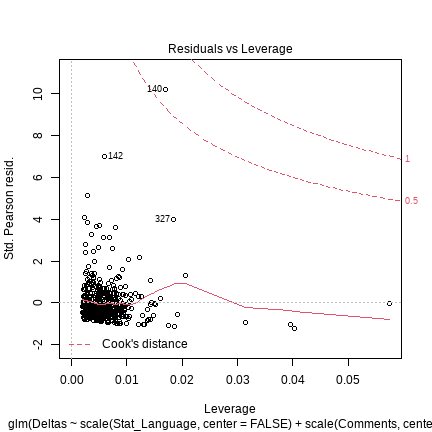

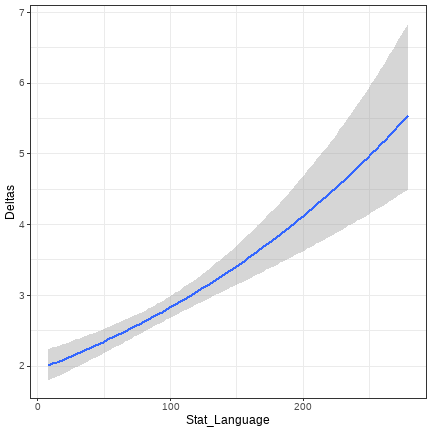

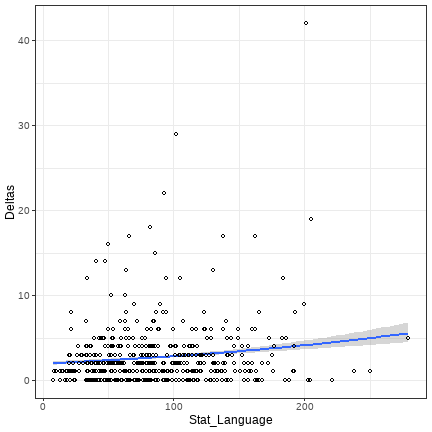

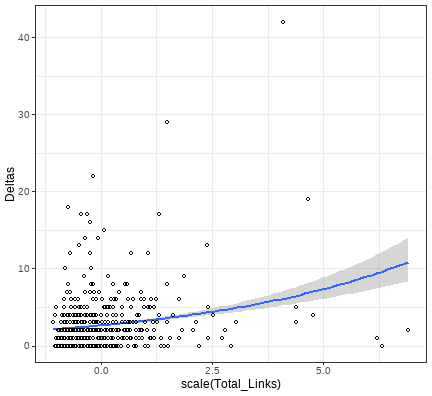

class: center, middle, inverse, title-slide # <b> Preregistration and Analytic Freedom </b> ## Data Analysis for Psychology in R 2<br><br> ### dapR2 Team ### Department of Psychology<br>The University of Edinburgh ### AY 2020-2021 --- # Learning Objectives - What is preregistration and why would we preregister studies. - Limitations to preregistration and the relationship between preregistration and exploratory analyses - Concrete example of the benefits and difficulties of preregistration --- # The Issue: Standard Scientific Practice Involves Analytic Freedom - Stopping criterion: How many participants should I collect? Is 20 enough? 100? -- - Exclusion criteria: Are there participants whom I should exclude? How would I figure that out? Is there a "bad" participant -- - Analysis choices: What covariates should I include in my statistical model? How should I treat my dependent variable -- should I transform it in some way? How would that impact the conclusions I draw -- - Family of comparisons: Do I have a key dependent variable or many I'm interested in? What are the implications of looking at one vs. many? --- # Nosek et al. 2018 "A vast number of choices in analyzing data could be made. If those choices are made during analysis, observing the data may make some paths more likely and others less likely. By the end, it may be impossible to estimate the paths that could have been selected had the data looked different" --- # Proposed Solution: Preregistrations - Conduct exploratory or background research -- - Form a prediction having explored some initial data / read the literature specifying the analysis (es) you aim to run completely. - For example, DV ~ IV1 + IV2 vs. DV ~ IV1*IV2 -- - Test (either based on fit or test statistic) your model on this new data after having registered it -- - Warning: This is more challenging than you might think for all but the simplest designs! And the less you know going into the study the harder these registrations will be. --- # Several ways to register your predictions - https://aspredicted.org/ - https://osf.io/ --- # What are included in registrations - There are several templates for different branches of research, but the area you work in will likely affect what makes the most sense to use - For social psychology, one template from vant Veer, A.E., and Giner-Sorolla, R. (2016) -- - This template asks for information like the following: - Describe the hypotheses in terms of directional relationships between manipulated/measured variables -- - For interactions, describe the shape these interactions are likely to take -- - If you are manipulating a variable, make predictions for successful manipulation check variables or explain why no manipulation check is included -- - Describe the analyses which will test the main predictions and for each one include the relevant variables of interest, how they are calculated, the statistical technique / model and the rationale for including or omitting covariates --- # Other templates More templates: https://osf.io/zab38/wiki/home/ - OSF Preregistration page: https://osf.io/k5wns/ - OSF How-To and Resources: https://www.cos.io/initiatives/prereg?ga=2.85949657.1114272946.1607948696-474133586.1547657474 --- # Exploratory Analyses - Exploration is good - Do not let concerns about preregistration interfere with asking questions about your data you didn't think of when you first registered your analyses! - In fact, exploration is very useful for discovering patterns in data that were not predicted and motivating future confirmatory research -- - But, preregistrations make it clear (mostly to your future self but also to others) what analyses you considered primary when you began the project, and what was comparatively secondary. --- # Many Challenges - Writing analysis plans is difficult and takes quite a lot of time - Foreseeing contingencies -- - The documents one creates can be pretty long -- - Upside: Once you get the data, the analysis is pretty fast. You've already thought a lot about the analyses you will run and even written the R script --- # Many Challenges - With very new projects, it can be difficult to know exactly what to preregister - Initial preregistrations can be quite general and thus might not be completely convincing to the skeptical reader. But that doesn't mean they aren't worth doing. -- - Subsequent preregistrations of direct replications or follow-up experiments will often be more specific and constrained -- - Deviations and amendments are just fine, but the point is to be transparent about those deviations --- # What preregistration does not fix - Deliberate dishonesty - Preregistering predictions after you've already looked at the data - Ignoring the preregistration (e.g., dropping a dependent variable that was a central part of your analytic plan) - Results that don't generalize because of biased sampling, poorly designed experiments, or all the other stuff that can go wrong in science --- # Working through an example from an actual preregistration I wrote but haven't look at in three years - First, what is change my view: https://www.reddit.com/r/changemyview/comments/mas8o3/cmv_once_fully_vaccinated_for_covid19_you_can/ - https://osf.io/jdxa8/ --- # Read data into R The file path used here will depend on where your file is saved, but something like the following will work: ```r library(tidyverse) Preregistration_Dataset_Example <- read_csv("preregistration_reddit.csv") ``` ``` ## ## -- Column specification -------------------------------------------------------- ## cols( ## Title = col_character(), ## Text = col_character(), ## Comments = col_double(), ## Dacs = col_double(), ## Deltas = col_double(), ## Comments_with_Links = col_double(), ## Total_Links = col_double(), ## Link = col_character(), ## Dac_with_links = col_double(), ## Stat_Language = col_double(), ## Dacs_with_stats = col_double(), ## Sociomoral = col_character() ## ) ``` ```r head(Preregistration_Dataset_Example) ``` ``` ## # A tibble: 6 x 12 ## Title Text Comments Dacs Deltas Comments_with_L~ Total_Links Link ## <chr> <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <chr> ## 1 "CMV:Str~ "Suprem~ 403 1 2 15 15 "[['ref~ ## 2 "CMV: Ne~ "Here a~ 263 0 0 14 14 "[['fac~ ## 3 "CMV: Th~ "The fi~ 145 1 2 6 7 "[['exp~ ## 4 "CMV: Ou~ "To me ~ 458 4 5 36 51 "[['fin~ ## 5 "CMV: FI~ "ME NO ~ 127 0 0 6 6 "[['^^^~ ## 6 "CMV: Ov~ "So thi~ 479 5 6 22 28 "[['htt~ ## # ... with 4 more variables: Dac_with_links <dbl>, Stat_Language <dbl>, ## # Dacs_with_stats <dbl>, Sociomoral <chr> ``` --- ```r library(summarytools) ``` ``` ## Registered S3 method overwritten by 'pryr': ## method from ## print.bytes Rcpp ``` ``` ## For best results, restart R session and update pander using devtools:: or remotes::install_github('rapporter/pander') ``` ``` ## ## Attaching package: 'summarytools' ``` ``` ## The following object is masked from 'package:tibble': ## ## view ``` ```r view(dfSummary(Preregistration_Dataset_Example)) ``` ``` ## Switching method to 'browser' ``` ``` ## Output file written: C:\Users\zachs\AppData\Local\Temp\Rtmp6fsBJo\file6190f8d74a5.html ``` --- We can see looking at some of these variables, Comments, Dacs, Deltas are all distributed non-normally - In our preregistration, we anticipated that this count variable would not be normally distributed and we would likely need to fit either a poisson model or a negative binomial model ```r library(MASS) ``` ``` ## ## Attaching package: 'MASS' ``` ``` ## The following object is masked from 'package:dplyr': ## ## select ``` --- - The most basic thing we expected was for non-social moral posts to have more deltas than social moral posts. These categorizations were prespecified by handcoding the dataset. - So we need to fit a model to test this because notice that in our preregistration, it was quite open what model we would fit to exam this question. Because this is a count-based variable, we will begin with a poisson distribution. We need to fit a reduced model first because we expect the number of deltas in a thread to always be conditional on the number of comments in the thread. - Note this assumption was never preregistered and so a skeptical reviewer might not completely believe including the predictor was necessary/well thought through. --- ```r summary(preregistered.model.1.reduced <- glm(Deltas ~ scale(Comments, center=FALSE), data = Preregistration_Dataset_Example), family = "poisson") ``` ``` ## ## Call: ## glm(formula = Deltas ~ scale(Comments, center = FALSE), data = Preregistration_Dataset_Example) ## ## Deviance Residuals: ## Min 1Q Median 3Q Max ## -3.102 -2.081 -0.950 0.903 38.926 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 1.9800 0.4524 4.377 1.47e-05 *** ## scale(Comments, center = FALSE) 0.8079 0.4529 1.784 0.075 . ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## (Dispersion parameter for gaussian family taken to be 14.17598) ## ## Null deviance: 7076.4 on 497 degrees of freedom ## Residual deviance: 7031.3 on 496 degrees of freedom ## AIC: 2737.7 ## ## Number of Fisher Scoring iterations: 2 ``` --- ```r summary(preregistered.model.1 <- glm(Deltas ~ Sociomoral + scale(Comments, center=FALSE), data = Preregistration_Dataset_Example), family = "poisson") ``` ``` ## ## Call: ## glm(formula = Deltas ~ Sociomoral + scale(Comments, center = FALSE), ## data = Preregistration_Dataset_Example) ## ## Deviance Residuals: ## Min 1Q Median 3Q Max ## -3.333 -2.060 -0.985 0.922 38.962 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 2.1052 0.4847 4.344 1.7e-05 *** ## SociomoralYes -0.2873 0.3975 -0.723 0.4702 ## scale(Comments, center = FALSE) 0.9006 0.4709 1.913 0.0564 . ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## (Dispersion parameter for gaussian family taken to be 14.18965) ## ## Null deviance: 7076.4 on 497 degrees of freedom ## Residual deviance: 7023.9 on 495 degrees of freedom ## AIC: 2739.2 ## ## Number of Fisher Scoring iterations: 2 ``` --- The initial model we've run provides some evidence against the prediction. Adding the Sociomoral predictor didn't improve model fit as indicated by a similar AIC. About the same number of deltas in a thread for sociomoral vs non sociomoral posts. --- We controlled for comments because it might be that sociomoral posts are simply discussed more. To check that, let's predict comments on sociomoral variable. What distribution we fit is quite tricky here. Let's begin with just a gaussian ```r summary(exploratory.model.comments <- lm(Comments ~ Sociomoral, data = Preregistration_Dataset_Example)) ``` ``` ## ## Call: ## lm(formula = Comments ~ Sociomoral, data = Preregistration_Dataset_Example) ## ## Residuals: ## Min 1Q Median 3Q Max ## -274.044 -104.448 3.956 126.956 214.417 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 268.58 11.08 24.244 < 2e-16 *** ## SociomoralYes 81.46 12.92 6.304 6.42e-10 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 127.3 on 496 degrees of freedom ## Multiple R-squared: 0.07417, Adjusted R-squared: 0.07231 ## F-statistic: 39.74 on 1 and 496 DF, p-value: 6.423e-10 ``` --- Are we violating any of the assumptions of this model? It does look like people comment a lot more about sociomoral issues, which makes sense but would be hard to predict because it wasn't exactly our main interest in this paper. Let's plot to see how a gaussian distribution would fit ```r plot(exploratory.model.comments) ``` <!-- --><!-- --><!-- --><!-- --> --- Our qqplot shows some pretty big deviations and indeed when we plot the distribution, it does not look normal (though distribution of the residuals is of primary interest). ```r ggplot(Preregistration_Dataset_Example)+ geom_histogram(aes(x = Comments, colour = Sociomoral, fill = Sociomoral), position = "dodge")+ theme_bw(12) ``` ``` ## `stat_bin()` using `bins = 30`. Pick better value with `binwidth`. ``` <!-- --> --- - We can also see here that a statistical model isn't really necessary to understand that there are vast differences in the number of comments on sociomoral vs non-sociomoral posts. - However, our standard modeling tools might not be up to the task with this dataset, and it isn't some a preregistration could be easy to identify in advance. However, by preregistering what we knew or predicted, it makes it very clear how much we are learning from the data as we model it. --- There were several other things of interest in this study we subsequently explored. For example, the extent to which controlling for comments, statistical language predicted Delta awarding ```r summary(exploratory.model.2.reduced <- glm(Deltas ~ scale(Comments, center=FALSE), data = Preregistration_Dataset_Example), family = "poisson") ``` ``` ## ## Call: ## glm(formula = Deltas ~ scale(Comments, center = FALSE), data = Preregistration_Dataset_Example) ## ## Deviance Residuals: ## Min 1Q Median 3Q Max ## -3.102 -2.081 -0.950 0.903 38.926 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 1.9800 0.4524 4.377 1.47e-05 *** ## scale(Comments, center = FALSE) 0.8079 0.4529 1.784 0.075 . ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## (Dispersion parameter for gaussian family taken to be 14.17598) ## ## Null deviance: 7076.4 on 497 degrees of freedom ## Residual deviance: 7031.3 on 496 degrees of freedom ## AIC: 2737.7 ## ## Number of Fisher Scoring iterations: 2 ``` --- ```r summary(exploratory.model.2 <- glm(Deltas ~ scale(Stat_Language, center=FALSE) + scale(Comments, center=FALSE), data = Preregistration_Dataset_Example), family = "poisson") ``` ``` ## ## Call: ## glm(formula = Deltas ~ scale(Stat_Language, center = FALSE) + ## scale(Comments, center = FALSE), data = Preregistration_Dataset_Example) ## ## Deviance Residuals: ## Min 1Q Median 3Q Max ## -4.462 -2.111 -0.761 0.841 37.933 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 1.9093 0.4513 4.231 2.78e-05 *** ## scale(Stat_Language, center = FALSE) 1.2517 0.5259 2.380 0.0177 * ## scale(Comments, center = FALSE) -0.3121 0.6516 -0.479 0.6321 ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## (Dispersion parameter for gaussian family taken to be 14.04388) ## ## Null deviance: 7076.4 on 497 degrees of freedom ## Residual deviance: 6951.7 on 495 degrees of freedom ## AIC: 2734.1 ## ## Number of Fisher Scoring iterations: 2 ``` --- It appears to, and we had some a priori reason to think this, but we didn't write this model down in advance so we need to be on guard about reading too much into it. And model fit is only slightly improved adding this predictor. We should also plot this relationship. ```r plot(exploratory.model.2) ``` <!-- --><!-- --><!-- --><!-- --> --- ```r ggplot(Preregistration_Dataset_Example)+ geom_smooth(aes(x = Stat_Language, y = Deltas), method = "glm", method.args = list(family = "poisson"))+ theme_bw(12) ``` ``` ## `geom_smooth()` using formula 'y ~ x' ``` <!-- --> --- ```r ggplot(Preregistration_Dataset_Example)+ geom_smooth(aes(x = Stat_Language, y = Deltas), method = "glm", method.args = list(family = "poisson"))+ geom_point(aes(x = Stat_Language, y = Deltas), shape = 1)+ theme_bw(12) ``` ``` ## `geom_smooth()` using formula 'y ~ x' ``` <!-- --> --- ```r df <- Preregistration_Dataset_Example%>% filter(Deltas < 30) summary(exploratory.model.2.1 <- glm(Deltas ~ scale(Stat_Language, center=FALSE) + scale(Comments, center=FALSE), data = df), family = "poisson") ``` ``` ## ## Call: ## glm(formula = Deltas ~ scale(Stat_Language, center = FALSE) + ## scale(Comments, center = FALSE), data = df) ## ## Deviance Residuals: ## Min 1Q Median 3Q Max ## -3.5480 -1.9965 -0.7302 0.7836 26.2499 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 2.08716 0.40167 5.196 2.98e-07 *** ## scale(Stat_Language, center = FALSE) 0.64729 0.46912 1.380 0.168 ## scale(Comments, center = FALSE) -0.01195 0.57965 -0.021 0.984 ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## (Dispersion parameter for gaussian family taken to be 11.10901) ## ## Null deviance: 5531.1 on 496 degrees of freedom ## Residual deviance: 5487.9 on 494 degrees of freedom ## AIC: 2612.1 ## ## Number of Fisher Scoring iterations: 2 ``` --- # We can ask other questions of our dataset while we're exploring. - Maybe that will help us run a subsequent study that we preregister --- For instance, we could ask is there converging evidence that other things that relate to evidence rather than emotional appeals predicts delta awarding. First fit the reduced model. --- ```r summary(exploratory.model.3.reduced <- glm(Deltas ~ scale(Comments, center=FALSE), data = Preregistration_Dataset_Example), family = "poisson") ``` ``` ## ## Call: ## glm(formula = Deltas ~ scale(Comments, center = FALSE), data = Preregistration_Dataset_Example) ## ## Deviance Residuals: ## Min 1Q Median 3Q Max ## -3.102 -2.081 -0.950 0.903 38.926 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 1.9800 0.4524 4.377 1.47e-05 *** ## scale(Comments, center = FALSE) 0.8079 0.4529 1.784 0.075 . ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## (Dispersion parameter for gaussian family taken to be 14.17598) ## ## Null deviance: 7076.4 on 497 degrees of freedom ## Residual deviance: 7031.3 on 496 degrees of freedom ## AIC: 2737.7 ## ## Number of Fisher Scoring iterations: 2 ``` --- ```r summary(exploratory.model.3 <- glm(Deltas ~ scale(Total_Links, center=FALSE)+ scale(Comments, center=FALSE), data = Preregistration_Dataset_Example), family = "poisson") ``` ``` ## ## Call: ## glm(formula = Deltas ~ scale(Total_Links, center = FALSE) + scale(Comments, ## center = FALSE), data = Preregistration_Dataset_Example) ## ## Deviance Residuals: ## Min 1Q Median 3Q Max ## -7.956 -2.090 -0.904 0.816 35.923 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 2.1131 0.4451 4.747 2.70e-06 *** ## scale(Total_Links, center = FALSE) 1.2490 0.2809 4.447 1.08e-05 *** ## scale(Comments, center = FALSE) -0.3227 0.5121 -0.630 0.529 ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## (Dispersion parameter for gaussian family taken to be 13.65892) ## ## Null deviance: 7076.4 on 497 degrees of freedom ## Residual deviance: 6761.2 on 495 degrees of freedom ## AIC: 2720.2 ## ## Number of Fisher Scoring iterations: 2 ``` --- Providing evidence in the form of links to citations seems to predict delta awarding ```r ggplot(Preregistration_Dataset_Example)+ geom_smooth(aes(x = scale(Total_Links), y = Deltas), method = "glm", method.args = list(family = "poisson"))+ geom_point(aes(x = scale(Total_Links), y = Deltas), shape = 1)+ theme_bw(12) ``` ``` ## `geom_smooth()` using formula 'y ~ x' ``` <!-- --> --- ```r summary(exploratory.model.3.1.reduced <- glm(Deltas ~ scale(Comments, center=FALSE), data = df), family = "poisson") ``` ``` ## ## Call: ## glm(formula = Deltas ~ scale(Comments, center = FALSE), data = df) ## ## Deviance Residuals: ## Min 1Q Median 3Q Max ## -2.9116 -1.8972 -0.8158 1.0868 26.1012 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 2.1258 0.4011 5.301 1.74e-07 *** ## scale(Comments, center = FALSE) 0.5655 0.4015 1.409 0.16 ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## (Dispersion parameter for gaussian family taken to be 11.1293) ## ## Null deviance: 5531.1 on 496 degrees of freedom ## Residual deviance: 5509.0 on 495 degrees of freedom ## AIC: 2612 ## ## Number of Fisher Scoring iterations: 2 ``` --- Some evidence even after removing this observation that included links improves model fit. ```r summary(exploratory.model.3.1 <- glm(Deltas ~ scale(Total_Links, center=FALSE)+ scale(Comments, center=FALSE), data = df), family = "poisson") ``` ``` ## ## Call: ## glm(formula = Deltas ~ scale(Total_Links, center = FALSE) + scale(Comments, ## center = FALSE), data = df) ## ## Deviance Residuals: ## Min 1Q Median 3Q Max ## -5.7555 -2.0854 -0.7856 0.7322 25.6483 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 2.1970 0.3990 5.507 5.89e-08 *** ## scale(Total_Links, center = FALSE) 0.7220 0.2531 2.853 0.00451 ** ## scale(Comments, center = FALSE) -0.0843 0.4591 -0.184 0.85438 ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## (Dispersion parameter for gaussian family taken to be 10.97106) ## ## Null deviance: 5531.1 on 496 degrees of freedom ## Residual deviance: 5419.7 on 494 degrees of freedom ## AIC: 2605.9 ## ## Number of Fisher Scoring iterations: 2 ``` --- # Just a few of the possible analyses - We were interested in several other things in this dataset. -- - How much comments with links, sociomoral, and so forth predicted attitude change. -- - We also had several dv's dacs (Delta awarded comments), comments, Total_Links -- - This is really just the beginning of the dataset because we could go back to reddit an extract a host of additional information from these same posts. - For instance, we could also look at upvotes; maybe good arguments are upvoted a lot but not particularly persuasive to people who disagree. --- # Where are we? - We began with a very open ended preregistration to investigate this Reddit dataset. We went through the registration, but on the analytic front there were MANY things left unspecified. This can happen when you first begin exploring a new questions, as indeed we were. - But by preregistering something it makes it clear how open ended these analyses were. This example shows that exploration was also necessary because it would be difficult to predict the shape of the distributions we were working with and so our preregistered analyses may have needed adjustment in any case. - Imagine we had preregistered a model as a linear regression but, upon seeing the distribution of the residuals, it was clear this model was not the most appropriate. In this case, we needed exploration to make the right modeling choices. --- # Summary - What covered what preregistration is, where to do it, and how to do it - Limitations to preregistration and the relationship between preregistration and exploratory analyses - Concrete example of the benefits and difficulties of preregistration