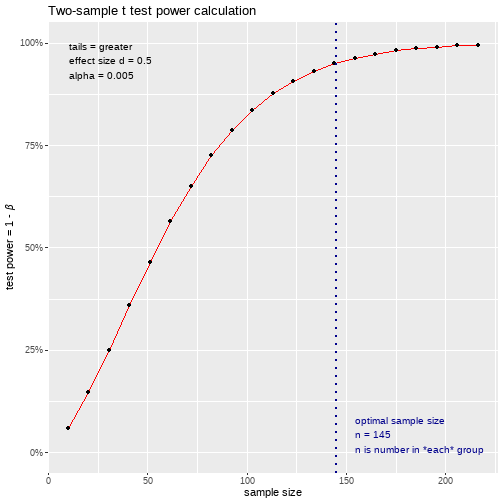

class: center, middle, inverse, title-slide

# <b> Introduction to Power Analysis </b>
## Data Analysis for Psychology in R 2<br><br>
### dapR2 Team
### Department of Psychology<br>The University of Edinburgh
### AY 2020-2021

---
# Week's Learning Objectives

- Recap the concept of statistical power

- Introduce power analysis for study planning

- Differentiate analytic from simulation-based power calculations

- Introduce R tools for both analytic and simulation-based power calculations

---
# What is power?

<img src="figs/ht-errors-table-2.png" width="80%" />

---
# What is power?

- Power depends on:
  - Sample size
  - Effect size (i.e. an alternative hypothesis)
  - Significance level ( `\(\alpha\)` )

---
# Power and sample size

- In planning, power analysis often boils down to understanding what sample size is big enough for a study.

- What is big enough?
  - It depends!
  - On the study design, the sample characteristics, the expected effect size, the desired level of precision, the costs and benefits of different results…

- Power analysis can help us to work out how much data we should collect for our studies.

---
# Why is power important?

- So you don't waste your time.

- So you don't waste other people's time.

- So you can answer the question(s) you're interested in.

---
# Analytic power analysis

- Analytic solutions essentially take three known numbers from among:
  - `\(\alpha\)`,
  - power,
  - n, and
  - effect size

- ...and solve for the fourth, unknown number.

- These four variables relate to each other in a way that means each one *“is a function of the other three”* (Cohen, 1992, p. 156).

---
# Analytic power analysis

- If you have an...
  - effect size of interest, a chosen alpha level and a planned sample size
  - you can calculate the statistical power of your planned study.

- If you have an...
  - effect size of interest, a chosen alpha level and a chosen statistical power level
  - you can calculate the required sample size of your planned study.
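---
# Analytic power analysis

- The two cases above can be sketched with base R's `power.t.test()` from the `stats` package, used here only because it needs no extra packages. The values `n = 20`, `delta = 0.5` and `power = 0.8` are assumptions chosen for illustration, and the object names are hypothetical.

```r
# Case 1: effect size, alpha and n per group are known, so solve for power.
# delta is the raw mean difference; with sd = 1 it equals Cohen's d.
solve_power <- power.t.test(n = 20, delta = 0.5, sd = 1, sig.level = 0.05,
                            type = "two.sample", alternative = "two.sided")

# Case 2: effect size, alpha and power are known, so solve for n per group.
solve_n <- power.t.test(power = 0.8, delta = 0.5, sd = 1, sig.level = 0.05,
                        type = "two.sample", alternative = "two.sided")

solve_power$power      # estimated power of the planned study
ceiling(solve_n$n)     # required n per group, rounded up to whole participants
```

- Leaving exactly one of `n`, `delta`, `sig.level` and `power` unspecified tells the function which quantity to solve for.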
---
# Power by simulation

- For complex designs, simulation can be used to estimate power.

- If enough simulations are conducted, simulation-based estimates of power will approximate estimates of power from analytical solutions.

- Why is it needed?
  - sometimes the analytic solutions are not accurate

---
# The power of what?

- People commonly talk about underpowered studies.
  - This is not strictly accurate.

- What we are conducting our power analysis on is a particular combination of design, statistical test, and effect size.
  - yes, these are elements of the study, but it is not *the* study itself.

- Read more [here](https://towardsdatascience.com/why-you-shouldnt-say-this-study-is-underpowered-627f002ddf35)

---
# Post-hoc power

- Often researchers are asked to calculate power **after they have collected data**.
  - This is referred to as post-hoc or observed power analysis.
  - It does not use an anticipated effect size, but the effect size you observed from your data.

- It is meaningless: observed power is a direct function of the p-value, so it adds no new information.

- Consider a definition of power (bold added):
  - "The power of a test to detect a correct alternative hypothesis is the **pre-study probability** that the test will reject the test hypothesis (e.g., the probability that P will not exceed a pre-specified cut-off such as 0.05)." (Greenland et al., 2016, p. 345)

- Read more [here](http://daniellakens.blogspot.com/2014/12/observed-power-and-what-to-do-if-your.html)

---
# Conventions

- Conventionally, alpha is fixed, most commonly at .05. A common conventional value for power is .8.

- However, both of these conventions are arbitrary cut-offs on continuous scales.

- Note that if these conventions are used together, we accept a Type II error rate ( `\(\beta\)` = 1 - .8 = .2) that is four times the Type I error rate ( `\(\alpha\)` = .05).

- It is important to justify your decisions, including the alpha and power levels you choose (see Lakens et al., 2017).

---
class: center, middle
# Time for a break

---
class: center, middle
# Welcome Back!

**Where we left off...**

---
# Analytic power in R

- Let's use an independent samples t-test.

- In our imaginary study, we will compare the high striker scores of two population-representative groups:
  - one that has not undergone any training and
  - one that has taken an intensive day-long high striker training course.

- We hypothesise that the training group will have higher scores.

---
# Analytic power in R

- To begin, let's work with typical sample and effect sizes.
  - The median total sample size across four APA journals in 2006 was 40 (Marszalek, Barber, Kohlhart, & Holmes, 2011, Table 1).
  - A Cohen's d effect size of 0.5 has been considered a medium effect size (Cohen, 1992, Table 1).

- We can plug these values in and work out what our statistical power would be with them if we use the conventional alpha level of .05.

- We will use the `pwr` package.

```r
library(pwr)
# a total sample of 40 means n = 20 in each group
pwr.t.test(n = 20, d = 0.5, sig.level = .05, type= "two.sample", alternative = "two.sided")
```

---
# Analytic power in R

```r
pwr.t.test(n = 20, d = 0.5, sig.level = .05, type= "two.sample", alternative = "two.sided")
```

```
## 
##      Two-sample t test power calculation 
## 
##               n = 20
##               d = 0.5
##       sig.level = 0.05
##           power = 0.337939
##     alternative = two.sided
## 
## NOTE: n is number in *each* group
```

- Our power is only .34!

- So if there is a true standardised effect of .5 in the population, studies with 20 participants per group should only expect to reject the null hypothesis about 34% of the time.

---
# Analytic power in R

- But this wasn't the appropriate power analysis.

- We have a directional hypothesis.
  - we think the training group will score *higher* than the non-training group.

```r
pwr.t.test(n = 20, d = 0.5, sig.level = .05, type= "two.sample",
           alternative = "greater")
```

```
## 
##      Two-sample t test power calculation 
## 
##               n = 20
##               d = 0.5
##       sig.level = 0.05
##           power = 0.4633743
##     alternative = greater
## 
## NOTE: n is number in *each* group
```

- Our power for a one-sided test is about .46.
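---
# Analytic power in R

- The two power values above can be reproduced by hand from the noncentral t distribution. This is a minimal sketch in base R, assuming equal group sizes and equal variances; the variable names are illustrative and not part of `pwr`.

```r
n     <- 20                      # participants per group
d     <- 0.5                     # Cohen's d under the alternative hypothesis
alpha <- 0.05
df    <- 2 * n - 2               # degrees of freedom for a two-sample t-test
ncp   <- d * sqrt(n / 2)         # noncentrality parameter

# One-sided ("greater"): probability that t exceeds the upper critical value
crit_one  <- qt(1 - alpha, df)
power_one <- pt(crit_one, df, ncp = ncp, lower.tail = FALSE)

# Two-sided: probability that t falls in either rejection region
crit_two  <- qt(1 - alpha / 2, df)
power_two <- pt(crit_two, df, ncp = ncp, lower.tail = FALSE) +
  pt(-crit_two, df, ncp = ncp)

round(c(two.sided = power_two, greater = power_one), 2)
```

- The one-sided test has more power because all of `\(\alpha\)` sits in the tail where the effect is expected, which lowers the critical value.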
---
# Analytic power in R

- Instead of computing the power given a sample size of 20 participants per group, let's set the level of power and solve for the sample size.

```r
pwr.t.test(power = 0.95, d = 0.5, sig.level = .05, type= "two.sample", alternative = "greater")
```

```
## 
##      Two-sample t test power calculation 
## 
##               n = 87.26261
##               d = 0.5
##       sig.level = 0.05
##           power = 0.95
##     alternative = greater
## 
## NOTE: n is number in *each* group
```

- We would need to collect 88 participants per group to have 95% power for an effect size of 0.5.

---
# Analytic power in R

- What if we wanted to change `\(\alpha\)` ?

- Some have argued for a change in `\(\alpha\)` norms to .005 (Benjamin et al., 2017).

- How many participants would we need for the same design if we used an alpha of .005?

```r
pwr.t.test(power = 0.95, d = 0.5,
           sig.level = .005, type= "two.sample", alternative = "greater")
```

```
## 
##      Two-sample t test power calculation 
## 
##               n = 144.1827
##               d = 0.5
##       sig.level = 0.005
##           power = 0.95
##     alternative = greater
## 
## NOTE: n is number in *each* group
```

- We would need 145 participants per group if our alpha was to be .005.

---
# Analytic power in R

.pull-left[
- We can plot our power at different levels of N.

```r
res <- pwr.t.test(power = 0.95, d = 0.5, sig.level = .005, type= "two.sample", alternative = "greater")
plot(res)
```
]

.pull-right[
<!-- -->
]

---
class: center, middle
# Time for a break

---
class: center, middle
# Welcome Back!

**Where we left off...**

---
# Power by sim

- We are primarily showing you how to use the `pwr` functions for common tests.
  - These are analytic calculations for power.
  - They are going to cover a lot of situations you will encounter in dissertations.

- But sometimes we need to estimate power a different way.

- Here we will briefly flag two packages that can be used for power by simulation.

---
# Power by sim in R (`paramtest`)

- To use `paramtest` we define a function that...
  - simulates data that is consistent with our model
  - runs the associated statistical test on each of these data sets.

- We then use the `paramtest` functions to run the simulation.

---
# Power by sim in R (`paramtest`)

```r
t_func <- function(simNum, N, d) {      # simNum is the iteration index that run_test() passes in
  x1 <- rnorm(N, 0, 1)                  # N is the number of participants in each group
  x2 <- rnorm(N, d, 1)                  # d is the expected effect size
  t <- t.test(x1, x2, var.equal=TRUE)   # runs a two-sided t-test on the simulated dataset
  stat <- t$statistic                   # extracts the t-value from the t-test
  p <- t$p.value                        # extracts the p-value from the t-test
  return(c(t = stat, p = p, sig = (p < .05)))
}
```

---
# Power by sim in R (`paramtest`)

```r
library(paramtest)
power_ttest <- run_test(
  t_func, n.iter = 1000, output = 'data.frame', N = 20, d = 0.5)
head(results(power_ttest))
```

```
## Running 1,000 tests...
```

```
##   iter        t.t          p sig
## 1    1 -1.2440002 0.22111872   0
## 2    2 -0.1268177 0.89975335   0
## 3    3 -0.6888123 0.49512577   0
## 4    4 -2.1953907 0.03431417   1
## 5    5 -1.4636570 0.15151133   0
## 6    6 -0.0323007 0.97440132   0
```

- We now have the t-values and p-values from our simulation, along with a binary indicator of whether a significant p-value was produced.

---
# Power by sim in R (`paramtest`)

```r
table(power_ttest$results$sig)/1000
```

```
## 
##     0     1 
## 0.647 0.353
```

- The proportion of significant results is our simulation-based estimate of power.

- Here, even with only 1000 iterations, the power estimate from simulation (.35) is similar to the one obtained analytically for the two-sided test (.34).

---
# Power by sim in R (`WebPower`)

- I want to do no more than tell you `WebPower` exists.
  - See [here](https://webpower.psychstat.org/wiki/)

- There is an R package that does all of the simple analytic tests
  - It also does more complex simulation methods

- And there is an online (non-R) variant for complex models.
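---
# Power by sim in R (base R)

- The same simulate-then-test logic can be sketched without `paramtest`, using `replicate()`. The helper name `sim_p_value`, the seed and the number of simulations are illustrative assumptions, not part of the original materials.

```r
set.seed(2021)                       # arbitrary seed, only for reproducibility

sim_p_value <- function(N, d) {      # hypothetical helper: one simulated study
  x1 <- rnorm(N, 0, 1)               # group without training
  x2 <- rnorm(N, d, 1)               # training group, shifted by d
  t.test(x1, x2, var.equal = TRUE)$p.value
}

n_sims   <- 2000
p_values <- replicate(n_sims, sim_p_value(N = 20, d = 0.5))

power_hat <- mean(p_values < .05)                        # proportion of significant results
mc_se     <- sqrt(power_hat * (1 - power_hat) / n_sims)  # Monte Carlo standard error

c(power = power_hat, se = mc_se)
```

- The Monte Carlo standard error indicates how much the estimate would vary across reruns; running more simulations shrinks it.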
---
# Summary of today

- Power analysis is an important step in study design
  - But post-hoc power is meaningless

- For simple models, we can make use of the `pwr` package functions (e.g. `pwr.t.test`) for analytic solutions
  - To solve for sample size we need to know `\(\alpha\)` , power, and effect size

- For complex models, we can estimate power by simulation.
  - To do so we need ....

- Both can be done in R.