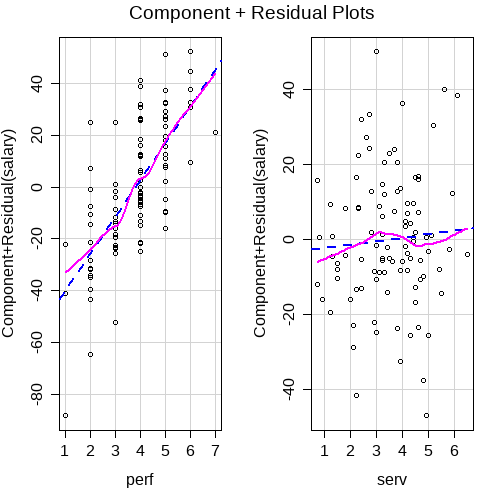

class: center, middle, inverse, title-slide # <b>Week 9: Further assumptions </b> ## Data Analysis for Psychology in R 2<br><br> ### TOM BOOTH & ALEX DOUMAS ### Department of Psychology<br>The University of Edinburgh ### AY 2020-2021 --- # Week's Learning Objectives 1. Specify the assumptions underlying a LM with multiple predictors 2. Assess if a fitted model satisfies the assumptions 3. Test and assess the effect of influential cases on LM coefficients and overall model evaluations --- # Topics for today + Recapping assumptions for LM + Additional steps in the presence of multiple predictors --- # Recap Assumptions + **Linearity**: The relationship between `\(y\)` and `\(x\)` is linear. + Assuming a linear relation when the true relation is non-linear can result in under-estimating that relation + **Normally distributed errors**: The errors ( `\(\epsilon_i\)` ) are normally distributed around each predicted value. + **Homoscedasticity**: The equal variances assumption is constant across values of the predictors `\(x_1\)`, ... `\(x_k\)`, and across values of the fitted values `\(\hat{y}\)` + **Independence of errors**: The errors are not correlated with one another ??? The assumptions do not change when we have multiple predictors, but we do need some additional tools to investigate them --- # Some data for today .pull-left[ + Let's look again at our data predicting salary from years or service and performance ratings (no interaction). `$$y_i = \beta_0 + \beta_1 x_{1} + \beta_2 x_{2} + \epsilon_i$$` + `\(y\)` = Salary (unit = thousands of pounds ). + `\(x_1\)` = Years of service. + `\(x_2\)` = Average performance ratings. ] .pull-right[ <table class="table" style="width: auto !important; margin-left: auto; margin-right: auto;"> <thead> <tr> <th style="text-align:left;"> id </th> <th style="text-align:right;"> salary </th> <th style="text-align:right;"> serv </th> <th style="text-align:right;"> perf </th> </tr> </thead> <tbody> <tr> <td style="text-align:left;"> ID101 </td> <td style="text-align:right;"> 80.18 </td> <td style="text-align:right;"> 2.2 </td> <td style="text-align:right;"> 3 </td> </tr> <tr> <td style="text-align:left;"> ID102 </td> <td style="text-align:right;"> 123.98 </td> <td style="text-align:right;"> 4.5 </td> <td style="text-align:right;"> 5 </td> </tr> <tr> <td style="text-align:left;"> ID103 </td> <td style="text-align:right;"> 80.55 </td> <td style="text-align:right;"> 2.4 </td> <td style="text-align:right;"> 3 </td> </tr> <tr> <td style="text-align:left;"> ID104 </td> <td style="text-align:right;"> 84.35 </td> <td style="text-align:right;"> 4.6 </td> <td style="text-align:right;"> 4 </td> </tr> <tr> <td style="text-align:left;"> ID105 </td> <td style="text-align:right;"> 83.76 </td> <td style="text-align:right;"> 4.8 </td> <td style="text-align:right;"> 3 </td> </tr> <tr> <td style="text-align:left;"> ID106 </td> <td style="text-align:right;"> 117.61 </td> <td style="text-align:right;"> 4.4 </td> <td style="text-align:right;"> 4 </td> </tr> <tr> <td style="text-align:left;"> ID107 </td> <td style="text-align:right;"> 96.38 </td> <td style="text-align:right;"> 4.3 </td> <td style="text-align:right;"> 5 </td> </tr> <tr> <td style="text-align:left;"> ID108 </td> <td style="text-align:right;"> 96.49 </td> <td style="text-align:right;"> 5.0 </td> <td style="text-align:right;"> 5 </td> </tr> <tr> <td style="text-align:left;"> ID109 </td> <td style="text-align:right;"> 88.23 </td> <td style="text-align:right;"> 2.4 </td> <td style="text-align:right;"> 3 </td> </tr> <tr> <td style="text-align:left;"> ID110 </td> <td style="text-align:right;"> 143.69 </td> <td style="text-align:right;"> 4.6 </td> <td style="text-align:right;"> 6 </td> </tr> </tbody> </table> ] --- # Non-linearity + With multiple predictors, we need to know whether the relations are linear between each predictor and outcome, controlling for the other predictors + This can be done using **component-residual plots** + Also known as partial-residual plots + Component-residual plots have the `\(x\)` values on the X-axis and partial residuals on the Y-axis + *Partial residuals* for each X variable are: `$$\epsilon_i + B_jX_{ij}$$` + Where : + `\(\epsilon_i\)` is the residual from the linear model including all the predictors + `\(B_jX_{ij}\)` is the partial (linear) relation between `\(x_j\)` and `\(y\)` --- # `crPlots()` + Component-residual plots can be obtained using the `crPlots()` function from `car` package ```r m1 <- lm(salary ~ perf + serv, data = salary2) crPlots(m1) ``` + The plots for continuous predictors show a linear (dashed) and loess (solid) line + The loess line should follow the linear line closely, with deviations suggesting non-linearity --- # `crPlots()` <!-- --> ??? + Here the relations look pretty good. + Deviations of the line are minor --- # Multi-collinearity + Multi-collinearity refers to the correlation between predictors + We saw this in the formula for the standard error of model slopes for an lm with multiple predictors. + When there are large correlations between predictors, the standard errors are increased + Therefore, we don't want our predictors to be too correlated --- # Variance Inflation Factor + The **Variance Inflation Factor** or VIF quantifies the extent to which standard errors are increased by predictor inter-correlations + It can be obtained in R using the `vif()` function: ```r vif(m1) ``` ``` ## perf serv ## 1.001337 1.001337 ``` + The function gives a VIF value for each predictor + Ideally, we want values to be close to 1 + VIFs> 10 indicate a problem --- # What to do about multi-collinearity + In practice, multi-collinearity is not often a major problem + When issues arise, consider: + Combining highly correlated predictors into a single composite + E.g. create a sum or average of the two predictors + Dropping an IV that is obviously statistically and conceptually redundant with another from the model --- # Summary of today + Looked at two additional considerations when checking model assumptions for models with multiple predictors. + Firstly, an alternative way to look at linearity + Second, assessing the magnitude of correlations between predictors. --- # Next tasks + This week: + Complete your lab + Come to office hours + Weekly quiz - content from week 8 + Open Monday 09:00 + Closes Sunday 17:00